
STAT 497

LECTURE NOTES 8

ESTIMATION


• After specifying the order of a stationary ARMA process, we need to estimate the parameters.

• We will assume (for now) that:

1. The model order (p and q) is known, and

2. The data has zero mean.

• If (2) is not a reasonable assumption, we can subtract the sample mean $\bar{Y}$ and fit a zero-mean ARMA model

$$\phi(B)X_t = \theta(B)a_t, \qquad \text{where } X_t = Y_t - \bar{Y}.$$

Then use $X_t + \bar{Y}$ as the model for $Y_t$.


– Method of Moments Estimation (MME)

– Ordinary Least Squares (OLS) Estimation

– Maximum Likelihood Estimation (MLE)

– Least Squares Estimation

   • Conditional

   • Unconditional


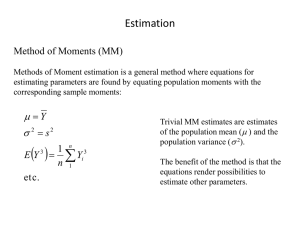

THE METHOD OF MOMENTS ESTIMATION

• It is also known as Yule-Walker estimation. It is an easy but not efficient estimation method, and it works well only for AR models when n is large.

• BASIC IDEA: Equate sample moment(s) to population moment(s), and solve these equation(s) to obtain the estimator(s) of the unknown parameter(s).

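$$E(Y_t) = \mu \;\Rightarrow\; \hat{\mu} = \frac{1}{n}\sum_{t=1}^{n} Y_t = \bar{Y}$$

OR

$$E(Y_tY_{t+k}) = \gamma_k \;\Rightarrow\; \hat{\gamma}_k = \frac{1}{n}\sum_{t=1}^{n-k} Y_tY_{t+k}, \qquad \rho_k \;\Rightarrow\; \hat{\rho}_k = \frac{\hat{\gamma}_k}{\hat{\gamma}_0}$$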
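The sample moments above can be computed directly. Below is a minimal NumPy sketch. The function names `sample_acvf` and `sample_acf` are illustrative and not taken from any library. The sketch subtracts $\bar{Y}$ first and uses the divisor n at every lag, matching $\hat{\gamma}_k$ above.

```python
import numpy as np

def sample_acvf(y, max_lag):
    """gamma_hat_k = (1/n) * sum_{t=1}^{n-k} (Y_t - Ybar)(Y_{t+k} - Ybar), k = 0..max_lag."""
    y = np.asarray(y, dtype=float)
    n = y.size
    x = y - y.mean()  # work with the mean-corrected series
    return np.array([x[: n - k] @ x[k:] / n for k in range(max_lag + 1)])

def sample_acf(y, max_lag):
    """rho_hat_k = gamma_hat_k / gamma_hat_0."""
    g = sample_acvf(y, max_lag)
    return g / g[0]

y = [1.0, 3.0, 2.0, 5.0, 4.0]
print(sample_acvf(y, 2))  # gamma_hat_0, gamma_hat_1, gamma_hat_2
print(sample_acf(y, 2))   # rho_hat_0 = 1, rho_hat_1, rho_hat_2
```

Using the divisor n rather than n - k keeps the matrix $\hat{\Gamma}_p = [\hat{\gamma}_{|i-j|}]$ nonnegative definite, which the Yule-Walker estimators below rely on.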


• Let $\Gamma_n$ be the variance/covariance matrix of $\mathbf{X} = (X_1,\dots,X_n)'$ with the given parameter values.

• Yule-Walker for AR(p): regress $X_t$ onto $X_{t-1},\dots,X_{t-p}$.

• Durbin-Levinson algorithm with $\gamma$ replaced by $\hat{\gamma}$.

• Yule-Walker for ARMA(p,q): method of moments. Not efficient.
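The Durbin-Levinson recursion with $\gamma$ replaced by $\hat{\gamma}$ can be sketched in a few lines of NumPy. The sketch assumes the autocovariances $\hat{\gamma}_0,\dots,\hat{\gamma}_p$ have already been computed, and `durbin_levinson` is an illustrative name. It returns three things:
- the AR(p) coefficients;
- the partial autocorrelations $\hat{\phi}_{11},\dots,\hat{\phi}_{pp}$;
- the final one-step prediction error variance $v_p$, which equals $\hat{\gamma}_0 - \hat{\boldsymbol{\phi}}'\hat{\boldsymbol{\gamma}}_p$.

```python
import numpy as np

def durbin_levinson(gamma, p):
    """Solve the order-1..p Yule-Walker systems recursively.

    gamma : autocovariances gamma_0, ..., gamma_p (sample values in practice).
    Returns (phi, pacf, v): phi = (phi_p1, ..., phi_pp), pacf = (phi_11, ..., phi_pp),
    and v = v_p, the one-step prediction error variance.
    """
    gamma = np.asarray(gamma, dtype=float)
    phi = np.zeros(0)   # coefficients of the order-(k-1) fit
    v = gamma[0]        # v_0 = gamma_0
    pacf = []
    for k in range(1, p + 1):
        # phi_kk = (gamma_k - sum_{j=1}^{k-1} phi_{k-1,j} gamma_{k-j}) / v_{k-1}
        phi_kk = (gamma[k] - phi @ gamma[k - 1:0:-1]) / v
        # phi_kj = phi_{k-1,j} - phi_kk * phi_{k-1,k-j}, then append phi_kk
        phi = np.append(phi - phi_kk * phi[::-1], phi_kk)
        v *= 1.0 - phi_kk ** 2   # v_k = v_{k-1} (1 - phi_kk^2)
        pacf.append(phi_kk)
    return phi, np.array(pacf), v

phi, pacf, v = durbin_levinson([2.0, 1.0, 0.2], p=2)
print(phi, pacf, v)
```

The recursion avoids inverting $\hat{\Gamma}_p$ directly. As a by-product it yields the sample PACF at every lag up to p.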


THE YULE-WALKER ESTIMATION

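• For a stationary (causal) AR(p), multiply both sides of $X_t = \sum_{j=1}^{p}\phi_jX_{t-j} + a_t$ by $X_{t-k}$ and take expectations:

$$E(X_{t-k}X_t) = \sum_{j=1}^{p}\phi_j\,E(X_{t-k}X_{t-j}) + E(X_{t-k}a_t), \qquad k = 0,1,\dots,p$$

$$\Rightarrow\quad \gamma_0 = \boldsymbol{\phi}'\boldsymbol{\gamma}_p + \sigma_a^2 \quad\text{and}\quad \boldsymbol{\Gamma}_p\boldsymbol{\phi} = \boldsymbol{\gamma}_p$$

where $\boldsymbol{\gamma}_p = (\gamma_1,\gamma_2,\dots,\gamma_p)'$, $\boldsymbol{\phi} = (\phi_1,\dots,\phi_p)'$ and $\boldsymbol{\Gamma}_p = [\gamma_{i-j}]_{i,j=1}^{p}$.

To calculate the values $E(X_{t-k}a_t)$, we have used the RSF of the process, $X_t = \psi(B)a_t$. Since $\psi_0 = 1$, $E(X_ta_t) = \sigma_a^2$ and $E(X_{t-k}a_t) = 0$ for $k \ge 1$.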


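• To find the Yule-Walker estimators, we replace $\gamma_k$ by $\hat{\gamma}_k$ (or $\rho_k$ by $\hat{\rho}_k$):

$$\hat{\boldsymbol{\Gamma}}_p\hat{\boldsymbol{\phi}} = \hat{\boldsymbol{\gamma}}_p$$

Thus, solving the Yule-Walker equations gives $\hat{\boldsymbol{\phi}} = \hat{\boldsymbol{\Gamma}}_p^{-1}\hat{\boldsymbol{\gamma}}_p$ and

$$\hat{\sigma}_a^2 = \hat{\gamma}_0 - \hat{\boldsymbol{\phi}}'\hat{\boldsymbol{\gamma}}_p.$$

• These are the same as the forecasting (prediction) equations for the best linear predictor of $X_t$ from $X_{t-1},\dots,X_{t-p}$.

• We can use the Durbin-Levinson algorithm to solve them.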
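For small p, the Yule-Walker system can also be solved directly as a linear system. Below is a minimal NumPy sketch. The name `yule_walker` is illustrative, and the AR(2) parameters in the example (0.5, -0.3, $\sigma_a^2 = 1$) are arbitrary values chosen only for a simulation.

```python
import numpy as np

def yule_walker(y, p):
    """Yule-Walker (moment) estimates for an AR(p) fitted to Y_t - Ybar.

    Solves Gamma_hat_p phi_hat = gamma_hat_p and returns (phi_hat, sigma2_hat) with
    sigma2_hat = gamma_hat_0 - phi_hat' gamma_hat_p.
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    x = y - y.mean()
    g = np.array([x[: n - k] @ x[k:] / n for k in range(p + 1)])  # gamma_hat_0..gamma_hat_p
    idx = np.abs(np.subtract.outer(np.arange(p), np.arange(p)))
    Gamma = g[idx]                        # Toeplitz matrix [gamma_hat_{|i-j|}]
    phi = np.linalg.solve(Gamma, g[1:])   # Gamma_hat_p phi_hat = gamma_hat_p
    sigma2 = g[0] - phi @ g[1:]
    return phi, sigma2

# Simulated AR(2) with phi = (0.5, -0.3), sigma_a^2 = 1 (illustrative values)
rng = np.random.default_rng(42)
n = 2000
a = rng.standard_normal(n + 100)
y = np.zeros(n + 100)
for t in range(2, n + 100):
    y[t] = 0.5 * y[t - 1] - 0.3 * y[t - 2] + a[t]
phi_hat, sigma2_hat = yule_walker(y[100:], p=2)   # drop a burn-in period
print(phi_hat, sigma2_hat)
```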


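• If $\hat{\gamma}_0 > 0$, then $\hat{\boldsymbol{\Gamma}}_m$ is nonsingular.

• If $\{X_t\}$ is an AR(p) process,

$$\hat{\boldsymbol{\phi}} \overset{\text{asymp.}}{\sim} N\!\left(\boldsymbol{\phi},\ \frac{\sigma_a^2}{n}\boldsymbol{\Gamma}_p^{-1}\right), \qquad \hat{\sigma}_a^2 \xrightarrow{\;p\;} \sigma_a^2,$$

$$\hat{\phi}_{kk} \overset{\text{asymp.}}{\sim} N\!\left(0,\ \frac{1}{n}\right) \quad \text{for } k > p.$$

Hence, we can use the sample PACF to test for AR order, and we can calculate approximate confidence intervals for the parameters.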
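The PACF result above can be checked on simulated data. This sketch computes $\hat{\phi}_{kk}$ as the last coefficient of the order-k Yule-Walker fit, which is the definition of the sample PACF. It then flags lags outside the approximate 95% limits $\pm 1.96/\sqrt{n}$. The name `sample_pacf` and the AR(1) parameter 0.7 are illustrative assumptions. Because the limits apply lag by lag, roughly 1 in 20 lags beyond p can fall outside them by chance.

```python
import numpy as np

def sample_pacf(y, max_lag):
    """phi_hat_kk for k = 1..max_lag: last coefficient of the order-k Yule-Walker fit."""
    y = np.asarray(y, dtype=float)
    n = y.size
    x = y - y.mean()
    g = np.array([x[: n - k] @ x[k:] / n for k in range(max_lag + 1)])
    out = []
    for k in range(1, max_lag + 1):
        idx = np.abs(np.subtract.outer(np.arange(k), np.arange(k)))
        out.append(np.linalg.solve(g[idx], g[1 : k + 1])[-1])
    return np.array(out)

# Simulated AR(1) with phi = 0.7 (illustrative); for k > 1, phi_hat_kk is approx. N(0, 1/n)
rng = np.random.default_rng(7)
n = 2000
a = rng.standard_normal(n + 100)
y = np.zeros(n + 100)
for t in range(1, n + 100):
    y[t] = 0.7 * y[t - 1] + a[t]
y = y[100:]
pacf = sample_pacf(y, 10)
bound = 1.96 / np.sqrt(n)   # approximate 95% limits +/- z_{0.025} / sqrt(n)
print(np.round(pacf, 3))
print("lags outside the limits:", [k + 1 for k in np.flatnonzero(np.abs(pacf) > bound)])
```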


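• If $X_t$ is an AR(p) process and n is large,

$$\sqrt{n}\,\left(\hat{\boldsymbol{\phi}} - \boldsymbol{\phi}\right) \overset{\text{approx.}}{\sim} N\!\left(\mathbf{0},\ \hat{\sigma}_a^2\hat{\boldsymbol{\Gamma}}_p^{-1}\right)$$

• A $100(1-\alpha)\%$ approximate confidence interval for $\phi_j$ is

$$\hat{\phi}_j \pm z_{\alpha/2}\,\frac{\hat{\sigma}_a}{\sqrt{n}}\left[\left(\hat{\boldsymbol{\Gamma}}_p^{-1}\right)_{jj}\right]^{1/2}$$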
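The interval formula translates directly into code. Below is a minimal sketch using NumPy and the standard-library `statistics.NormalDist` for $z_{\alpha/2}$. The name `yw_confint` is illustrative. The five-point series only shows the mechanics, since the approximation itself requires large n.

```python
import numpy as np
from statistics import NormalDist

def yw_confint(y, p, alpha=0.05):
    """phi_hat_j -/+ z_{alpha/2} * sigma_hat / sqrt(n) * sqrt([Gamma_hat_p^{-1}]_jj)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    x = y - y.mean()
    g = np.array([x[: n - k] @ x[k:] / n for k in range(p + 1)])
    Gamma = g[np.abs(np.subtract.outer(np.arange(p), np.arange(p)))]
    phi = np.linalg.solve(Gamma, g[1:])        # Yule-Walker estimates
    sigma2 = g[0] - phi @ g[1:]                # sigma_hat_a^2
    z = NormalDist().inv_cdf(1 - alpha / 2)    # z_{alpha/2}
    se = np.sqrt(sigma2 / n * np.diag(np.linalg.inv(Gamma)))
    return phi - z * se, phi + z * se

lower, upper = yw_confint([1.0, 3.0, 2.0, 5.0, 4.0], p=2)
print(lower, upper)
```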


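• AR(1): $Y_t = \phi Y_{t-1} + a_t$. Find the MME of $\phi$.

It is known that $\rho_1 = \phi$, so we set $\rho_1 = \hat{\rho}_1$, where

$$\hat{\rho}_1 = \frac{\sum_{t=2}^{n}(Y_t-\bar{Y})(Y_{t-1}-\bar{Y})}{\sum_{t=1}^{n}(Y_t-\bar{Y})^2}.$$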


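• So, the MME of $\phi$ is

$$\tilde{\phi} = \hat{\rho}_1 = \frac{\sum_{t=2}^{n}(Y_t-\bar{Y})(Y_{t-1}-\bar{Y})}{\sum_{t=1}^{n}(Y_t-\bar{Y})^2}.$$

• Also, $\sigma_a^2$ is unknown, and for an AR(1)

$$\gamma_0 = \frac{\sigma_a^2}{1-\phi^2}.$$

• Therefore, using the variance of the process, we can obtain the MME of $\sigma_a^2$.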


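$$\gamma_0 = \hat{\gamma}_0 \;\Rightarrow\; \frac{\sigma_a^2}{1-\phi^2} = \frac{1}{n}\sum_{t=1}^{n}(Y_t-\bar{Y})^2$$

$$\Rightarrow\; \tilde{\sigma}_a^2 = \left(1-\tilde{\phi}^2\right)\frac{1}{n}\sum_{t=1}^{n}(Y_t-\bar{Y})^2 = \left(1-\hat{\rho}_1^2\right)\frac{1}{n}\sum_{t=1}^{n}(Y_t-\bar{Y})^2$$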
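The two AR(1) moment estimators derived above take only a few lines. Below is a minimal NumPy sketch; `ar1_mme` is an illustrative name. It follows the summation limits above, with the numerator running over t = 2, ..., n and the denominator over t = 1, ..., n.

```python
import numpy as np

def ar1_mme(y):
    """AR(1) moment estimates: phi_tilde = rho_hat_1, sigma2_tilde = (1 - phi_tilde^2) * gamma_hat_0."""
    y = np.asarray(y, dtype=float)
    x = y - y.mean()
    gamma0 = x @ x / x.size               # (1/n) sum_{t=1}^n (Y_t - Ybar)^2
    rho1 = (x[1:] @ x[:-1]) / (x @ x)     # sum_{t=2}^n (...)(...) / sum_{t=1}^n (...)^2
    phi = rho1
    sigma2 = (1.0 - phi**2) * gamma0
    return phi, sigma2

print(ar1_mme([1.0, 2.0, 3.0, 4.0, 5.0]))
```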


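• AR(2): $Y_t = \phi_1Y_{t-1} + \phi_2Y_{t-2} + a_t$. Find the MME of all unknown parameters.

• Using the Yule-Walker equations,

$$\rho_1 = \phi_1 + \phi_2\rho_1 \;\Rightarrow\; \rho_1 = \frac{\phi_1}{1-\phi_2}$$

$$\rho_2 = \phi_1\rho_1 + \phi_2 \;\Rightarrow\; \rho_2 = \frac{\phi_1^2}{1-\phi_2} + \phi_2$$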


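• So, equate the population autocorrelations to the sample autocorrelations and solve for $\phi_1$ and $\phi_2$:

$$\rho_1 = \frac{\phi_1}{1-\phi_2} = \hat{\rho}_1, \qquad \rho_2 = \frac{\phi_1^2}{1-\phi_2} + \phi_2 = \hat{\rho}_2$$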


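$$\tilde{\phi}_2 = \frac{\hat{\rho}_2 - \hat{\rho}_1^2}{1-\hat{\rho}_1^2} \quad\text{and}\quad \tilde{\phi}_1 = \frac{\hat{\rho}_1\left(1-\hat{\rho}_2\right)}{1-\hat{\rho}_1^2}.$$

To obtain the MME of $\sigma_a^2$, use the process variance formula $\gamma_0 = \sigma_a^2/(1-\phi_1\rho_1-\phi_2\rho_2)$ with $\gamma_0$ replaced by $\hat{\gamma}_0$:

$$\tilde{\sigma}_a^2 = \hat{\gamma}_0\left(1-\tilde{\phi}_1\hat{\rho}_1-\tilde{\phi}_2\hat{\rho}_2\right)$$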
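The closed-form AR(2) moment estimators above can be coded directly. Below is a minimal NumPy sketch; `ar2_mme` is an illustrative name. The accompanying check confirms that the closed form agrees with solving the 2x2 Yule-Walker system $\begin{pmatrix}1&\hat\rho_1\\ \hat\rho_1&1\end{pmatrix}\boldsymbol{\phi} = (\hat\rho_1,\hat\rho_2)'$.

```python
import numpy as np

def ar2_mme(y):
    """Closed-form AR(2) moment estimates from rho_hat_1 and rho_hat_2."""
    y = np.asarray(y, dtype=float)
    n = y.size
    x = y - y.mean()
    g0, g1, g2 = (x[: n - k] @ x[k:] / n for k in range(3))
    r1, r2 = g1 / g0, g2 / g0
    phi2 = (r2 - r1**2) / (1.0 - r1**2)
    phi1 = r1 * (1.0 - r2) / (1.0 - r1**2)
    sigma2 = g0 * (1.0 - phi1 * r1 - phi2 * r2)   # process variance formula with gamma_0 = gamma_hat_0
    return phi1, phi2, sigma2

phi1, phi2, sigma2 = ar2_mme([1.0, 2.0, 3.0, 4.0, 5.0])
print(phi1, phi2, sigma2)
```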


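• AR(1)

$$\gamma_0 = \frac{\sigma_a^2}{1-\phi_1^2}, \qquad \hat{\phi}_1 = \hat{\rho}_1, \qquad \hat{\sigma}_a^2 = \hat{\gamma}_0\left(1-\hat{\phi}_1\hat{\rho}_1\right)$$

• AR(2)

$$\begin{pmatrix}\hat{\phi}_1\\ \hat{\phi}_2\end{pmatrix} = \begin{pmatrix}\hat{\gamma}_0 & \hat{\gamma}_1\\ \hat{\gamma}_1 & \hat{\gamma}_0\end{pmatrix}^{-1}\begin{pmatrix}\hat{\gamma}_1\\ \hat{\gamma}_2\end{pmatrix}$$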


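• MA(1): $Y_t = a_t - \theta a_{t-1}$

• Again using the autocorrelation of the series at lag 1,

$$\rho_1 = \frac{-\theta}{1+\theta^2} = \hat{\rho}_1 \;\Rightarrow\; \hat{\rho}_1\theta^2 + \theta + \hat{\rho}_1 = 0$$

$$\tilde{\theta}_{1,2} = \frac{-1 \pm \sqrt{1-4\hat{\rho}_1^2}}{2\hat{\rho}_1}$$

Choose the root that satisfies the invertibility condition $|\tilde{\theta}| < 1$.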


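• For real roots,

$$1-4\hat{\rho}_1^2 \ge 0 \;\Leftrightarrow\; \hat{\rho}_1^2 \le 0.25 \;\Leftrightarrow\; -0.5 \le \hat{\rho}_1 \le 0.5.$$

If $|\hat{\rho}_1| = 0.5$, there is a unique real root ($\tilde{\theta} = \mp 1$), but it is non-invertible.

If $|\hat{\rho}_1| > 0.5$, no real roots exist and the MME fails.

If $0 < |\hat{\rho}_1| < 0.5$, there are two distinct real roots whose product is 1, so exactly one of them is invertible. (If $\hat{\rho}_1 = 0$, the equation reduces to $\tilde{\theta} = 0$.)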
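The case analysis above can be written as a small function. The sketch assumes the sign convention $Y_t = a_t - \theta a_{t-1}$ used in these notes, and `ma1_mme` is an illustrative name. It returns `None` when no real root exists. It keeps the root of smaller modulus, which is the invertible one because the two roots multiply to 1.

```python
import math

def ma1_mme(r1):
    """MME of theta in Y_t = a_t - theta a_{t-1}, given r1 = rho_hat_1.

    Solves r1*theta^2 + theta + r1 = 0. Returns None if |r1| > 0.5 (no real root).
    For |r1| = 0.5 the single root is -1 or +1, i.e. not invertible.
    """
    if r1 == 0.0:
        return 0.0                    # the equation reduces to theta = 0
    disc = 1.0 - 4.0 * r1 * r1
    if disc < 0.0:
        return None                   # MME fails
    roots = [(-1.0 + s * math.sqrt(disc)) / (2.0 * r1) for s in (1.0, -1.0)]
    return min(roots, key=abs)        # roots multiply to 1: keep the one with |theta| <= 1

for r in (-0.4, 0.3, 0.5, 0.6):
    print(r, ma1_mme(r))
```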


• This example shows that the MMEs for MA and ARMA models are complicated.

• More generally, regardless of AR, MA or ARMA models, the MMEs are sensitive to rounding errors. They are usually used to provide initial estimates needed for a more efficient nonlinear estimation method.

• The moment estimators are not recommended for final estimation results and should not be used if the process is close to being nonstationary or noninvertible.


THE MAXIMUM LIKELIHOOD ESTIMATION

• Assume that $a_t \overset{\text{i.i.d.}}{\sim} N(0,\sigma_a^2)$.

• By this assumption we can use the joint pdf

$$f(a_1,\dots,a_n) = f(a_1)\cdots f(a_n)$$

instead of $f(y_1,\dots,y_n)$, which cannot be written as a product of marginal pdfs because of the dependency between time series observations.


MLE METHOD

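• For the general stationary ARMA(p,q) model

$$\dot{Y}_t = \phi_1\dot{Y}_{t-1} + \cdots + \phi_p\dot{Y}_{t-p} + a_t - \theta_1a_{t-1} - \cdots - \theta_qa_{t-q}$$

or

$$a_t = \dot{Y}_t - \phi_1\dot{Y}_{t-1} - \cdots - \phi_p\dot{Y}_{t-p} + \theta_1a_{t-1} + \cdots + \theta_qa_{t-q}$$

where $\dot{Y}_t = Y_t - \mu$.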


MLE

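• The joint pdf of $(a_1,a_2,\dots,a_n)$ is given by

$$f(a_1,\dots,a_n \mid \boldsymbol{\phi},\mu,\boldsymbol{\theta},\sigma_a^2) = \left(2\pi\sigma_a^2\right)^{-n/2}\exp\left(-\frac{1}{2\sigma_a^2}\sum_{t=1}^{n}a_t^2\right)$$

where $\boldsymbol{\phi} = (\phi_1,\dots,\phi_p)'$ and $\boldsymbol{\theta} = (\theta_1,\dots,\theta_q)'$.

• Let $\mathbf{Y} = (Y_1,\dots,Y_n)'$ and assume that the initial conditions $\mathbf{Y}_* = (Y_{1-p},\dots,Y_0)'$ and $\mathbf{a}_* = (a_{1-q},\dots,a_0)'$ are known.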


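• The conditional log-likelihood function is given by

$$\ln L_*(\boldsymbol{\phi},\mu,\boldsymbol{\theta},\sigma_a^2) = -\frac{n}{2}\ln\left(2\pi\sigma_a^2\right) - \frac{S_*(\boldsymbol{\phi},\mu,\boldsymbol{\theta})}{2\sigma_a^2}$$

where

$$S_*(\boldsymbol{\phi},\mu,\boldsymbol{\theta}) = \sum_{t=1}^{n}a_t^2(\boldsymbol{\phi},\mu,\boldsymbol{\theta} \mid \mathbf{Y},\mathbf{Y}_*,\mathbf{a}_*)$$

is the conditional sum of squares.

Initial conditions: $\mathbf{Y}_* = \bar{Y}$ and $\mathbf{a}_* = E(a_t) = 0$.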


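• Then, we can find the estimators of $\boldsymbol{\phi} = (\phi_1,\dots,\phi_p)'$, $\boldsymbol{\theta} = (\theta_1,\dots,\theta_q)'$ and $\mu$ such that the conditional likelihood function is maximized. Usually, numerical nonlinear optimization techniques are required. After obtaining all the estimators,

$$\hat{\sigma}_a^2 = \frac{S_*(\hat{\boldsymbol{\phi}},\hat{\mu},\hat{\boldsymbol{\theta}})}{\text{d.f.}}$$

where $\text{d.f.} = (\text{number of terms used in } S_*) - (\text{number of parameters}) = (n-p) - (p+q+1) = n - (2p+q+1)$.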
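The conditional sum of squares is computed by running the recursion for $a_t$ forward in time. The sketch below makes two assumptions. First, it conditions on the first p observations and sets the unobserved $a_t$ to $E(a_t) = 0$, so $S_*$ has the n - p terms counted in the d.f. above. This is one common variant; the slide's alternative sets the pre-sample $Y$ values to $\bar{Y}$. Second, μ is passed in (for example $\hat{\mu} = \bar{Y}$). The names `conditional_ss` and `sigma2_css` are illustrative.

```python
import numpy as np

def conditional_ss(y, phi, theta, mu):
    """S_*(phi, mu, theta) for Ydot_t = sum_i phi_i Ydot_{t-i} + a_t - sum_j theta_j a_{t-j}.

    Conditions on the first p observations and sets unobserved a_t to E(a_t) = 0,
    so the sum has n - p terms (t = p+1, ..., n).
    """
    yd = np.asarray(y, dtype=float) - mu
    p, q, n = len(phi), len(theta), yd.size
    a = np.zeros(n)
    for t in range(p, n):
        ar = sum(phi[i] * yd[t - 1 - i] for i in range(p))
        ma = sum(theta[j] * a[t - 1 - j] for j in range(q) if t - 1 - j >= 0)
        a[t] = yd[t] - ar + ma   # a_t = Ydot_t - sum phi_i Ydot_{t-i} + sum theta_j a_{t-j}
    return float(a[p:] @ a[p:])

def sigma2_css(s_star, n, p, q):
    """sigma_hat_a^2 = S_* / d.f., with d.f. = (n - p) - (p + q + 1)."""
    return s_star / (n - (2 * p + q + 1))

y = [1.0, 2.0, 3.0]
print(conditional_ss(y, phi=[0.5], theta=[], mu=0.0))   # a_2 = 1.5, a_3 = 2.0
print(conditional_ss(y, phi=[], theta=[0.5], mu=0.0))   # a_1 = 1, a_2 = 2.5, a_3 = 4.25
```

In a full fit, $S_*$ would be minimized over $(\boldsymbol{\phi},\mu,\boldsymbol{\theta})$ with a numerical optimizer. `sigma2_css` is then applied at the minimizer.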


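• AR(1): $Y_t = \phi Y_{t-1} + a_t$, where $a_t \overset{\text{i.i.d.}}{\sim} N(0,\sigma_a^2)$.

$$f(a_1,\dots,a_n) = \left(2\pi\sigma_a^2\right)^{-n/2}\exp\left(-\frac{1}{2\sigma_a^2}\sum_{t=1}^{n}a_t^2\right)$$

$$\begin{aligned}
Y_1 &= \phi Y_0 + a_1 && \text{(let's take } Y_0 = 0\text{)}\\
Y_2 &= \phi Y_1 + a_2 && \Rightarrow\; a_2 = Y_2 - \phi Y_1\\
Y_3 &= \phi Y_2 + a_3 && \Rightarrow\; a_3 = Y_3 - \phi Y_2\\
&\;\;\vdots\\
Y_n &= \phi Y_{n-1} + a_n && \Rightarrow\; a_n = Y_n - \phi Y_{n-1}
\end{aligned}$$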


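The Jacobian will be

$$J = \begin{vmatrix}
\dfrac{\partial a_2}{\partial Y_2} & \cdots & \dfrac{\partial a_2}{\partial Y_n}\\
\vdots & \ddots & \vdots\\
\dfrac{\partial a_n}{\partial Y_2} & \cdots & \dfrac{\partial a_n}{\partial Y_n}
\end{vmatrix}
= \begin{vmatrix}
1 & 0 & \cdots & 0\\
-\phi & 1 & \cdots & 0\\
\vdots & \ddots & \ddots & \vdots\\
0 & \cdots & -\phi & 1
\end{vmatrix} = 1$$

$$\Rightarrow\; f(Y_2,\dots,Y_n \mid Y_1) = f(a_2,\dots,a_n)\,|J| = f(a_2,\dots,a_n)$$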


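• Then, the likelihood function can be written as

$$\begin{aligned}
L(\phi,\sigma_a^2) &= f(Y_1,\dots,Y_n) = f(Y_1)\,f(Y_2,\dots,Y_n \mid Y_1) = f(Y_1)\,f(a_2,\dots,a_n)\\
&= \frac{1}{(2\pi\gamma_0)^{1/2}}\,e^{-\frac{(Y_1-0)^2}{2\gamma_0}}\cdot\frac{1}{\left(2\pi\sigma_a^2\right)^{(n-1)/2}}\,e^{-\frac{1}{2\sigma_a^2}\sum_{t=2}^{n}(Y_t-\phi Y_{t-1})^2},
\end{aligned}$$

where $Y_1 \sim N\!\left(0,\ \gamma_0 = \dfrac{\sigma_a^2}{1-\phi^2}\right)$.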


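• Hence,

$$L(\phi,\sigma_a^2) = \frac{\left(1-\phi^2\right)^{1/2}}{\left(2\pi\sigma_a^2\right)^{n/2}}\exp\left\{-\frac{1}{2\sigma_a^2}\left[\sum_{t=2}^{n}(Y_t-\phi Y_{t-1})^2 + \left(1-\phi^2\right)Y_1^2\right]\right\}$$

• The log-likelihood function:

$$\ln L(\phi,\sigma_a^2) = -\frac{n}{2}\ln(2\pi) - \frac{n}{2}\ln\sigma_a^2 + \frac{1}{2}\ln\left(1-\phi^2\right) - \frac{1}{2\sigma_a^2}\underbrace{\Big[\underbrace{\sum_{t=2}^{n}(Y_t-\phi Y_{t-1})^2}_{S_*(\phi)} + \left(1-\phi^2\right)Y_1^2\Big]}_{S(\phi)}$$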


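• Here, $S_*(\phi)$ is the conditional sum of squares and $S(\phi)$ is the unconditional sum of squares.

• To find the value of $\phi$ where the likelihood function is maximized, solve

$$\frac{\partial \ln L(\phi,\sigma_a^2)}{\partial\phi} = 0 \;\Rightarrow\; \hat{\phi}.$$

• Then,

$$\hat{\sigma}_a^2 = \frac{S(\hat{\phi})}{n}.$$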
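Substituting $\hat{\sigma}_a^2(\phi) = S(\phi)/n$ into $\ln L$ leaves a function of φ alone, the profile log-likelihood. That function can be maximized numerically over the stationary region $|\phi| < 1$. The sketch below uses a plain grid search only for transparency; in practice a numerical optimizer would be used. The names `ar1_exact_loglik` and `ar1_exact_mle`, the grid size, and the simulated parameter $\phi = 0.5$ are illustrative assumptions. The first observation is drawn from its stationary distribution $N(0,\gamma_0)$, matching the exact likelihood derived above.

```python
import numpy as np

def ar1_exact_loglik(y, phi, sigma2):
    """ln L(phi, sigma_a^2) for a zero-mean Gaussian AR(1), |phi| < 1."""
    y = np.asarray(y, dtype=float)
    n = y.size
    r = y[1:] - phi * y[:-1]
    S = r @ r + (1.0 - phi**2) * y[0] ** 2    # unconditional sum of squares S(phi)
    return (-0.5 * n * np.log(2 * np.pi) - 0.5 * n * np.log(sigma2)
            + 0.5 * np.log(1.0 - phi**2) - S / (2.0 * sigma2))

def ar1_exact_mle(y, grid_size=4001):
    """Maximise the profile log-likelihood: for fixed phi, sigma2_hat(phi) = S(phi) / n."""
    y = np.asarray(y, dtype=float)
    n = y.size
    best_phi, best_ll = None, -np.inf
    for phi in np.linspace(-0.9999, 0.9999, grid_size):
        r = y[1:] - phi * y[:-1]
        s2 = (r @ r + (1.0 - phi**2) * y[0] ** 2) / n
        ll = ar1_exact_loglik(y, phi, s2)
        if ll > best_ll:
            best_phi, best_ll = phi, ll
    r = y[1:] - best_phi * y[:-1]
    return best_phi, (r @ r + (1.0 - best_phi**2) * y[0] ** 2) / n

rng = np.random.default_rng(3)
phi_true, n = 0.5, 500
y = np.empty(n)
y[0] = rng.normal(scale=np.sqrt(1.0 / (1.0 - phi_true**2)))  # Y_1 ~ N(0, gamma_0), sigma_a^2 = 1
for t in range(1, n):
    y[t] = phi_true * y[t - 1] + rng.normal()
phi_hat, s2_hat = ar1_exact_mle(y)
print(phi_hat, s2_hat)
```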


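• If we neglect $\ln(1-\phi^2)$, then MLE = unconditional LSE:

$$\max_{\phi} L(\phi,\sigma_a^2) \;\equiv\; \min_{\phi} S(\phi).$$

• If we neglect both $\ln(1-\phi^2)$ and $(1-\phi^2)Y_1^2$, then MLE = conditional LSE:

$$\max_{\phi} L(\phi,\sigma_a^2) \;\equiv\; \min_{\phi} S_*(\phi).$$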


• MLEs are asymptotically unbiased, efficient, consistent and sufficient for large sample sizes, but the joint pdf can be hard to deal with.


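CONDITIONAL LEAST SQUARES ESTIMATION

• AR(1): $Y_t = \phi Y_{t-1} + a_t$, where $a_t \overset{\text{i.i.d.}}{\sim} N(0,\sigma_a^2)$.

$$a_t = Y_t - \phi Y_{t-1}$$

$$SSE = \sum_{t=2}^{n}a_t^2 = \sum_{t=2}^{n}(Y_t-\phi Y_{t-1})^2 = S_*(\phi) \quad\text{for observed } Y_1,\dots,Y_n.$$

$$\frac{dS_*(\phi)}{d\phi} = -2\sum_{t=2}^{n}Y_{t-1}\left(Y_t-\phi Y_{t-1}\right) = 0 \;\Rightarrow\; \hat{\phi} = \frac{\sum_{t=2}^{n}Y_tY_{t-1}}{\sum_{t=2}^{n}Y_{t-1}^2}$$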
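The conditional LSE for AR(1) has the closed form above. Below is a minimal NumPy sketch; `cls_ar1` is an illustrative name. The `demean=True` option subtracts $\bar{Y}$ first, which gives the mean-corrected version of the same estimator. The least squares estimate is not constrained to $|\phi| < 1$, as the tiny example shows.

```python
import numpy as np

def cls_ar1(y, demean=False):
    """Conditional LSE: phi_hat = sum_{t=2}^n Y_t Y_{t-1} / sum_{t=2}^n Y_{t-1}^2."""
    y = np.asarray(y, dtype=float)
    if demean:
        y = y - y.mean()   # mean-corrected version: Y_t - Ybar in place of Y_t
    return float(y[1:] @ y[:-1] / (y[:-1] @ y[:-1]))

print(cls_ar1([1.0, 2.0, 3.0]))               # (2*1 + 3*2) / (1 + 4)
print(cls_ar1([1.0, 2.0, 3.0], demean=True))
```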


CONDITIONAL LSE

• If the process mean is different from zero,

$$\hat{\phi} = \frac{\sum_{t=2}^{n}(Y_t-\bar{Y})(Y_{t-1}-\bar{Y})}{\sum_{t=2}^{n}(Y_{t-1}-\bar{Y})^2} \approx \text{MME of } \phi$$


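• MA(1)

$Y_t = a_t - \theta a_{t-1}$, $-1 < \theta < 1$, $a_t \overset{\text{i.i.d.}}{\sim} N(0,\sigma_a^2)$ (normal white noise)

IF: $a_t = Y_t + \theta Y_{t-1} + \theta^2Y_{t-2} + \cdots$, an AR($\infty$) form

– Non-linear in terms of the parameters

– LS problem

– $S_*(\theta)$ cannot be minimized analytically

– Numerical nonlinear optimization methods such as Newton-Raphson or Gauss-Newton are needed

*There are similar problems in the ARMA case.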
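Here is a minimal sketch of the Gauss-Newton approach for the MA(1) conditional sum of squares. It assumes $a_0 = 0$ and the sign convention $Y_t = a_t - \theta a_{t-1}$, so $a_t = Y_t + \theta a_{t-1}$ and $da_t/d\theta = a_{t-1} + \theta\,da_{t-1}/d\theta$. The Gauss-Newton step is $-\sum a_t\,(da_t/d\theta) \big/ \sum (da_t/d\theta)^2$. Step halving, an added safeguard, keeps $|\theta| < 1$ and $S_*$ non-increasing. The function names, the starting value 0 and the simulated $\theta = 0.6$ are illustrative assumptions.

```python
import numpy as np

def ma1_residuals(y, theta):
    """a_t = Y_t + theta * a_{t-1} (a_0 = 0) and the derivatives d a_t / d theta."""
    a = np.zeros(len(y))
    da = np.zeros(len(y))
    a_prev = da_prev = 0.0
    for t, yt in enumerate(y):
        a[t] = yt + theta * a_prev
        da[t] = a_prev + theta * da_prev   # product rule on theta * a_{t-1}
        a_prev, da_prev = a[t], da[t]
    return a, da

def css_ma1(y, theta):
    a, _ = ma1_residuals(y, theta)
    return float(a @ a)                    # S_*(theta)

def fit_ma1_css(y, theta0=0.0, tol=1e-8, max_iter=100):
    """Minimise S_*(theta) by Gauss-Newton with step halving, keeping |theta| < 1."""
    y = np.asarray(y, dtype=float)
    theta, s = theta0, css_ma1(y, theta0)
    for _ in range(max_iter):
        a, da = ma1_residuals(y, theta)
        step = -(a @ da) / (da @ da)       # Gauss-Newton step for sum of a_t^2
        while abs(theta + step) >= 1.0 or css_ma1(y, theta + step) > s:
            step /= 2.0
            if abs(step) < tol:
                return theta
        theta, s = theta + step, css_ma1(y, theta + step)
        if abs(step) < tol:
            break
    return theta

rng = np.random.default_rng(11)
e = rng.standard_normal(2001)
y = e[1:] - 0.6 * e[:-1]                   # simulated MA(1) with theta = 0.6 (illustrative)
theta_hat = fit_ma1_css(y)
print(theta_hat, css_ma1(y, theta_hat))
```

A moment estimate of θ, as discussed earlier, is a natural alternative starting value for `theta0`.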


UNCONDITIONAL LSE

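$$\min_{\phi} S(\phi) \text{ w.r.t. } \phi, \quad\text{where}\quad S(\phi) = \sum_{t=2}^{n}(Y_t-\phi Y_{t-1})^2 + \left(1-\phi^2\right)Y_1^2$$

$$\frac{dS(\phi)}{d\phi} = 0 \;\Rightarrow\; \hat{\phi}$$

• For AR(1), the coefficient of $\phi^2$ in $S(\phi)$ is $\sum_{t=2}^{n}Y_{t-1}^2 - Y_1^2 = \sum_{t=2}^{n-1}Y_t^2$, so $S(\phi)$ is quadratic in $\phi$ and this equation gives $\hat{\phi} = \sum_{t=2}^{n}Y_tY_{t-1} \big/ \sum_{t=2}^{n-1}Y_t^2$. For MA and ARMA models, and for the exact likelihood with the $\ln(1-\phi^2)$ term, the equations are nonlinear in the parameters.

• In those cases we need nonlinear optimization techniques.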
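For AR(1), expanding $S(\phi) = \sum_{t=2}^{n}(Y_t-\phi Y_{t-1})^2 + (1-\phi^2)Y_1^2$ shows that the coefficient of $\phi^2$ is $\sum_{t=2}^{n-1}Y_t^2$. So $S(\phi)$ is quadratic and $dS/d\phi = 0$ can be solved directly. The minimal NumPy sketch below does two things:
- it computes that closed-form root;
- it confirms the same minimizer with a brute-force grid search.

The function names and the five data points are illustrative only. Genuinely nonlinear cases, such as MA terms or the exact likelihood with $\ln(1-\phi^2)$, still need numerical optimization.

```python
import numpy as np

def uncond_ss_ar1(y, phi):
    """S(phi) = sum_{t=2}^n (Y_t - phi Y_{t-1})^2 + (1 - phi^2) Y_1^2 for a zero-mean AR(1)."""
    y = np.asarray(y, dtype=float)
    r = y[1:] - phi * y[:-1]
    return float(r @ r + (1.0 - phi**2) * y[0] ** 2)

def uncond_lse_ar1(y):
    """Root of dS/dphi = 0: sum_{t=2}^n Y_t Y_{t-1} / sum_{t=2}^{n-1} Y_t^2."""
    y = np.asarray(y, dtype=float)
    return float(y[1:] @ y[:-1] / (y[1:-1] @ y[1:-1]))

y = [1.0, -1.0, 2.0, 0.0, 1.0]
grid = np.linspace(-0.999, 0.999, 19981)             # step 0.0001 over the stationary region
phi_grid = grid[np.argmin([uncond_ss_ar1(y, g) for g in grid])]
print(uncond_lse_ar1(y), phi_grid)
```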


BACKCASTING METHOD

• Obtain the backward form of ARMA(p,q):

$$\left(1-\phi_1F-\cdots-\phi_pF^p\right)\dot{Y}_t = \left(1-\theta_1F-\cdots-\theta_qF^q\right)a_t$$

where $F^jY_t = Y_{t+j}$.

• Instead of forecasting, backcast the past values of $Y_t$ and $a_t$, $t \le 0$. Obtain the unconditional log-likelihood function, then obtain the estimators.


EXAMPLE

• If there are only 2 observations in a time series (not realistic):

$$Y_1 = a_1, \qquad Y_2 = a_2 - \theta a_1,$$

where $a_1, a_2 \overset{\text{i.i.d.}}{\sim} N(0,\sigma_a^2)$. Find the MLE of $\theta$ and $\sigma_a^2$.


EXAMPLE

• US Quarterly Beer Production from 1975 to 1997

> par(mfrow=c(1,3))

> plot(beer)

> acf(as.vector(beer),lag.max=36)

> pacf(as.vector(beer),lag.max=36)


EXAMPLE (contd.)

> library(uroot)

Warning message: package 'uroot' was built under R version 2.13.0

> HEGY.test(wts =beer, itsd = c(1, 1, c(1:3)), regvar = 0,selectlags = list(mode = "bic", Pmax = 12))

Null hypothesis: Unit root.

Alternative hypothesis: Stationarity.

---HEGY statistics:

         Stat.  p-value
tpi_1   -3.339    0.085
tpi_2   -5.944    0.010
Fpi_3:4 13.238    0.010

> CH.test(beer)

---- Canova & Hansen test ----

Null hypothesis: Stationarity.

Alternative hypothesis: Unit root.

L-statistic: 0.817

Critical values:

 0.10   0.05  0.025   0.01
0.846   1.01   1.16   1.35


> plot(diff(beer),ylab='First Difference of Beer Production',xlab='Time')

> acf(as.vector(diff(beer)),lag.max=36)

> pacf(as.vector(diff(beer)),lag.max=36)


> HEGY.test(wts =diff(beer), itsd = c(1, 1, c(1:3)), regvar = 0,selectlags = list(mode = "bic", Pmax = 12))

---- HEGY test ----

Null hypothesis: Unit root.

Alternative hypothesis: Stationarity.

---HEGY statistics:

         Stat.  p-value
tpi_1   -6.067     0.01
tpi_2   -1.503     0.10
Fpi_3:4  9.091     0.01
Fpi_2:4  7.136       NA
Fpi_1:4 26.145       NA


> fit1=arima(beer,order=c(3,1,0),seasonal=list(order=c(2,0,0), period=4))

> fit1

Call:

arima(x = beer, order = c(3, 1, 0), seasonal = list(order = c(2, 0, 0), period = 4))

Coefficients:

          ar1      ar2      ar3    sar1    sar2
      -0.7380  -0.6939  -0.2299  0.2903  0.6694
s.e.   0.1056   0.1206   0.1206  0.0882  0.0841

sigma^2 estimated as 1.79: log likelihood = -161.55, aic = 335.1

> fit2=arima(beer,order=c(3,1,0),seasonal=list(order=c(3,0,0), period=4))

> fit2

Call:

arima(x = beer, order = c(3, 1, 0), seasonal = list(order = c(3, 0, 0), period = 4))

Coefficients:

          ar1      ar2      ar3    sar1    sar2    sar3
      -0.8161  -0.8035  -0.3529  0.0444  0.5798  0.3387
s.e.   0.1065   0.1188   0.1219  0.1205  0.0872  0.1210

sigma^2 estimated as 1.646: log likelihood = -158.01, aic = 330.01
