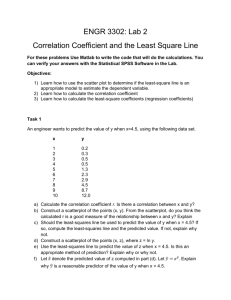

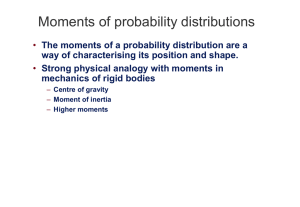

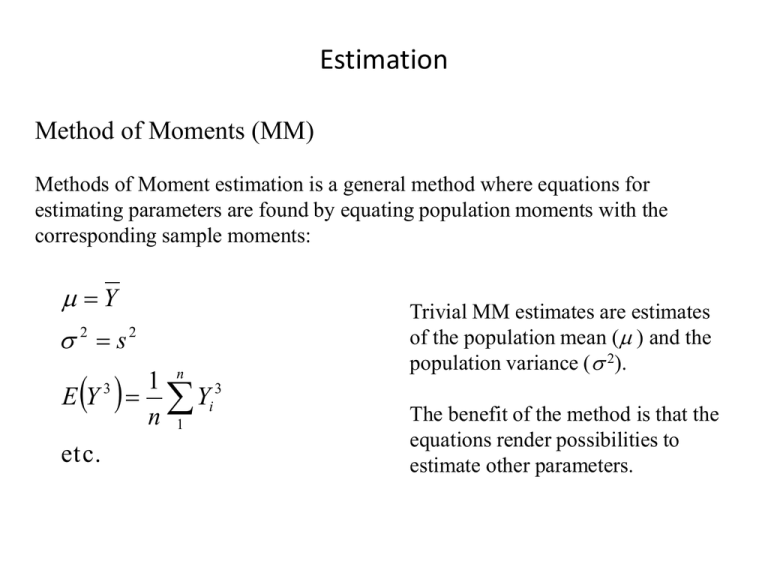

Estimation

Method of Moments (MM)

Method-of-moments estimation is a general method in which the equations for estimating parameters are found by equating population moments with the corresponding sample moments:

$$\mu = \bar{Y}, \qquad \sigma^2 = s^2, \qquad E(Y^3) = \frac{1}{n}\sum_{i=1}^{n} Y_i^3, \quad \text{etc.}$$

The trivial MM estimates are those of the population mean ($\mu$) and the population variance ($\sigma^2$). The benefit of the method is that the same kind of equations can be used to estimate other parameters.

Mixed moments

Moments can be raw (e.g. the mean) or central (e.g. the variance). There are also mixed moments such as the covariance and the correlation (which are also central).

MM estimation of the parameters in ARMA models is made by equating the autocorrelation function with the sample autocorrelation function for a sufficient number of lags.

• For AR models: replace $\rho_k$ by $r_k$ in the Yule-Walker equations.
• For MA models: use the known relationships between $\rho_k$ and the parameters $\theta_1, \dots, \theta_q$ and replace $\rho_k$ by $r_k$ in these. This quickly leads to complicated equations with no unique solution.
• Mixed ARMA: as complicated as the MA case.

Example of formulas

AR(1):

$$\rho_k = \phi^k \;\Rightarrow\; \hat\phi = r_1$$

AR(2):

$$\rho_k = \phi_1 \rho_{k-1} + \phi_2 \rho_{k-2}$$

Set $r_k = \hat\phi_1 r_{k-1} + \hat\phi_2 r_{k-2}$ for $k = 1, 2$, which gives

$$\hat\phi_1 = \frac{r_1 (1 - r_2)}{1 - r_1^2} \quad \text{and} \quad \hat\phi_2 = \frac{r_2 - r_1^2}{1 - r_1^2}$$

MA(1), with the model written as $Y_t = e_t - \theta e_{t-1}$:

$$\gamma_0 = \sigma_e^2 (1 + \theta^2); \qquad \gamma_1 = -\theta \sigma_e^2 \;\Rightarrow\; \rho_1 = -\frac{\theta}{1 + \theta^2}$$

Set $r_1 = -\dfrac{\hat\theta}{1 + \hat\theta^2}$, i.e. $\hat\theta^2 + \dfrac{1}{r_1}\hat\theta + 1 = 0$, with solutions

$$\hat\theta = -\frac{1}{2 r_1} \pm \sqrt{\frac{1}{4 r_1^2} - 1}$$

Real-valued roots require $|r_1| < 0.5$ (necessary!).
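The AR(1), AR(2) and MA(1) formulas above are simple enough to check numerically. The following is a minimal Python sketch, not part of the original slides: the function names are hypothetical, and the MA(1) sign convention $Y_t = e_t - \theta e_{t-1}$ is assumed. Because the two roots of the MA(1) quadratic multiply to 1, the sketch returns the root with absolute value below 1, which is the invertible one.

```python
import math

def sample_acf(y, k):
    """Sample autocorrelation r_k of the series y at lag k."""
    n = len(y)
    ybar = sum(y) / n
    denom = sum((v - ybar) ** 2 for v in y)
    num = sum((y[t] - ybar) * (y[t - k] - ybar) for t in range(k, n))
    return num / denom

def mm_ar1(r1):
    """MM estimate for AR(1): phi_hat = r1."""
    return r1

def mm_ar2(r1, r2):
    """MM (Yule-Walker) estimates for AR(2) from r1 and r2."""
    phi1 = r1 * (1 - r2) / (1 - r1 ** 2)
    phi2 = (r2 - r1 ** 2) / (1 - r1 ** 2)
    return phi1, phi2

def mm_ma1(r1):
    """Invertible root of theta^2 + theta / r1 + 1 = 0.

    Returns None when |r1| >= 0.5, where no invertible real root exists.
    """
    if r1 == 0:
        return 0.0  # rho_1 = 0 corresponds to theta = 0
    if abs(r1) >= 0.5:
        return None
    disc = math.sqrt(1 / (4 * r1 ** 2) - 1)
    roots = (-1 / (2 * r1) + disc, -1 / (2 * r1) - disc)
    # The roots are reciprocals of each other; keep the one inside (-1, 1).
    return min(roots, key=abs)
```

Given a series `y`, the estimates would be obtained as, for example, `mm_ar2(sample_acf(y, 1), sample_acf(y, 2))`.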
$$\hat\theta = -\frac{1}{2 r_1} \pm \sqrt{\frac{1}{4 r_1^2} - 1}$$

Only one of these two solutions gives an invertible MA process.

The parameter $\sigma_e^2$: set $\gamma_0 = s^2$, which gives (all estimates on the right-hand side being MM estimates)

$$\hat\sigma_e^{2\,(MM)} = \left(1 - \hat\phi_1 r_1 - \dots - \hat\phi_p r_p\right) s^2 \quad \text{for AR}(p)$$

$$\hat\sigma_e^{2\,(MM)} = \frac{s^2}{1 + \hat\theta_1^2 + \dots + \hat\theta_q^2} \quad \text{for MA}(q)$$

$$\hat\sigma_e^{2\,(MM)} = \frac{1 - \hat\phi^2}{1 - 2\hat\phi\hat\theta + \hat\theta^2}\, s^2 \quad \text{for ARMA}(1,1)$$

Example

Simulated from the model $Y_t = 1.3 + 0.2\, Y_{t-1} + e_t$; $\sigma_e^2 = 4$.

    > ar(yar1,method="yw")

    Call:
    ar(x = yar1, method = "yw")

    Coefficients:
         1
    0.2439

    Order selected 1  sigma^2 estimated as  4.185

Here "yw" stands for Yule-Walker, which leads to the MM estimates.

Least-squares estimation

Ordinary least-squares

Find the parameter values $p_1, \dots, p_m$ that minimise the square sum

$$S(p_1, \dots, p_m) = \sum_{i=1}^{n} \bigl(Y_i - E(Y_i \mid \mathbf{X}, p_1, \dots, p_m)\bigr)^2$$

where $\mathbf{X}$ stands for an array of auxiliary variables that are used as predictors for $Y$.

Autoregressive models

$$Y_t = \phi_1 Y_{t-1} + \dots + \phi_p Y_{t-p} + e_t$$

The counterpart of $S(p_1, \dots, p_m)$ is

$$S_c(\phi_1, \dots, \phi_p, \mu) = \sum_{t=p+1}^{n} \bigl[(Y_t - \mu) - \phi_1 (Y_{t-1} - \mu) - \dots - \phi_p (Y_{t-p} - \mu)\bigr]^2$$

Here, we take into account the possibility of a mean different from zero.

Now, the estimation can be made in two steps:

1) Estimate $\mu$ by $\bar{Y}$.
2) Find the values of $\phi_1, \dots, \phi_p$ that minimise

$$S_c(\phi_1, \dots, \phi_p, \bar{Y}) = \sum_{t=p+1}^{n} \bigl[(Y_t - \bar{Y}) - \phi_1 (Y_{t-1} - \bar{Y}) - \dots - \phi_p (Y_{t-p} - \bar{Y})\bigr]^2$$

The estimation of the slope parameters thus becomes conditional on the estimation of the mean. The square sum $S_c$ is therefore referred to as the conditional sum-of-squares function. The resulting estimates become very close to the MM estimates for moderately long series.

Moving average models

More tricky, since each observed value is assumed to depend on unobservable white-noise terms (and a mean):

$$Y_t = \mu + e_t - \theta_1 e_{t-1} - \dots - \theta_q e_{t-q}$$

As for the AR case, first estimate the mean and then estimate the slope parameters conditionally on the estimated mean.
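Returning briefly to the autoregressive case: for $p = 1$ the two-step conditional least-squares procedure has a closed-form solution, which the following minimal Python sketch illustrates. It is not from the original slides; the function names are hypothetical, the simulated model reuses the values from the example ($Y_t = 1.3 + 0.2\,Y_{t-1} + e_t$, $\sigma_e = 2$), and the seed and burn-in length are arbitrary assumptions.

```python
import random

def simulate_ar1(n, const, phi, sigma, seed=1, burn_in=100):
    """Simulate Y_t = const + phi * Y_{t-1} + e_t with N(0, sigma^2) noise."""
    rng = random.Random(seed)
    y_prev = const / (1 - phi)  # start at the process mean
    out = []
    for t in range(n + burn_in):
        y_prev = const + phi * y_prev + rng.gauss(0.0, sigma)
        if t >= burn_in:  # discard the burn-in values
            out.append(y_prev)
    return out

def css_ar1(y):
    """Two-step conditional least squares for AR(1).

    Step 1: estimate the mean by the sample mean.
    Step 2: minimise S_c(phi, ybar); for p = 1 this is a simple
            regression through the origin on the mean-corrected series.
    Returns (ybar, phi_hat, minimised S_c).
    """
    n = len(y)
    ybar = sum(y) / n
    d = [v - ybar for v in y]
    num = sum(d[t] * d[t - 1] for t in range(1, n))
    den = sum(d[t - 1] ** 2 for t in range(1, n))
    phi_hat = num / den
    s_c = sum((d[t] - phi_hat * d[t - 1]) ** 2 for t in range(1, n))
    return ybar, phi_hat, s_c

y = simulate_ar1(500, 1.3, 0.2, 2.0)
ybar, phi_hat, s_c = css_ar1(y)
# s_c / (n - 1) is one rough estimate of the noise variance
# (the divisor is a choice made for this sketch).
print(ybar, phi_hat, s_c / (len(y) - 1))
```

For $p > 1$ the same minimisation is an ordinary linear regression of the mean-corrected series on its own lags.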
Substitute $Y_t' = Y_t - \bar{Y}$ for $Y_t$ in the model. For an invertible MA process we may write

$$Y_t' = \pi_1(\theta_1, \dots, \theta_q)\, Y_{t-1}' + \pi_2(\theta_1, \dots, \theta_q)\, Y_{t-2}' + \dots + e_t$$

where the $\pi_i$'s are known functions of $\theta_1, \dots, \theta_q$.

The square sum to be minimised is then generally

$$S_c(\theta_1, \dots, \theta_q) = \sum e_t^2 = \sum \bigl[Y_t' - \pi_1(\theta_1, \dots, \theta_q)\, Y_{t-1}' - \pi_2(\theta_1, \dots, \theta_q)\, Y_{t-2}' - \dots\bigr]^2$$

Problems:

• The representation is infinite, but we only have a finite number of observed values.
• $S_c$ is a nonlinear function of the parameters $\theta_1, \dots, \theta_q$, so a numerical solution is needed.

Compute $e_t$ recursively using the observed values $Y_1, \dots, Y_n$ and setting $e_0 = e_{-1} = \dots = e_{-q} = 0$:

$$e_t = Y_t' + \theta_1 e_{t-1} + \dots + \theta_q e_{t-q}$$

for a given set of values $\theta_1, \dots, \theta_q$. Numerical algorithms are then used to find the set that minimises $\sum_{t=1}^{n} e_t^2$.

Mixed autoregressive and moving average models

Least-squares estimation is applied analogously to pure MA models. The $e_t$ values are calculated recursively, setting $e_p = e_{p-1} = \dots = e_{p+1-q} = 0$.

Least squares generally works well for long series. For moderately long series, initialising with $e$-values set to zero may have too much influence on the estimates.

Maximum-likelihood estimation (MLE)

For a set of observations $y_1, \dots, y_n$ the likelihood function (of the parameters) is a function proportional to the joint density (or probability mass) function of the corresponding random variables $Y_1, \dots, Y_n$ evaluated at those observations:

$$L(p_1, \dots, p_m) = f_{Y_1, \dots, Y_n}(y_1, \dots, y_n \mid p_1, \dots, p_m)$$

For a time series, such a function is not the product of the marginal densities/probability mass functions.

We must assume a probability distribution for the random variables. For time series it is common to assume that the white noise is normally distributed.
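Under a normality assumption for the white noise, minimising the conditional sum of squares is the same as maximising the Gaussian likelihood conditional on the zero start-up values, so the MA recursion from the least-squares section can be illustrated at this point. The following is a minimal Python sketch for MA(1) only, not from the original slides: the function names are hypothetical, the sign convention $Y_t = \mu + e_t - \theta e_{t-1}$ is assumed, and a plain grid search over the invertible region stands in for a proper numerical optimiser.

```python
def css_ma1(y, theta):
    """Conditional sum of squares for MA(1) at a given theta.

    Uses the recursion e_t = (Y_t - Ybar) + theta * e_{t-1} with e_0 = 0.
    """
    ybar = sum(y) / len(y)
    e_prev = 0.0  # start-up value e_0 = 0
    total = 0.0
    for v in y:
        e = (v - ybar) + theta * e_prev
        total += e * e
        e_prev = e
    return total

def fit_ma1_css(y, steps=199):
    """Grid search for the theta in [-0.99, 0.99] that minimises css_ma1."""
    grid = [-0.99 + 1.98 * i / (steps - 1) for i in range(steps)]
    return min(grid, key=lambda th: css_ma1(y, th))
```

With the default `steps=199` the grid spacing is 0.01, which limits the precision of the estimate; a real application would refine the minimum with a numerical optimiser.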
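$$e_t \sim N(0, \sigma_e^2) \;\Rightarrow\; (e_1, \dots, e_n)^{\top} \sim \mathrm{MVN}(\mathbf{0}, \sigma_e^2 \mathbf{I}_n)$$

with known joint density function

$$f_{\mathbf{e}}(e_1, \dots, e_n) = (2\pi\sigma_e^2)^{-n/2} \exp\left(-\frac{1}{2\sigma_e^2} \sum_{t=1}^{n} e_t^2\right)$$

For the AR(1) case we can use that the model defines a linear transformation to form $Y_2, \dots, Y_n$ from $Y_1, \dots, Y_{n-1}$ and $e_2, \dots, e_n$:

$$\begin{aligned} Y_2 - \mu &= \phi (Y_1 - \mu) + e_2 \\ Y_3 - \mu &= \phi (Y_2 - \mu) + e_3 \\ &\;\;\vdots \\ Y_n - \mu &= \phi (Y_{n-1} - \mu) + e_n \end{aligned}$$

This transformation has Jacobian = 1, which simplifies the derivation of the joint density for $Y_2, \dots, Y_n$ given $Y_1$ to

$$f_{Y_2, \dots, Y_n \mid Y_1}(y_2, \dots, y_n \mid y_1) = (2\pi\sigma_e^2)^{-(n-1)/2} \exp\left(-\frac{1}{2\sigma_e^2} \sum_{t=2}^{n} \bigl[(y_t - \mu) - \phi (y_{t-1} - \mu)\bigr]^2\right)$$

Now $Y_1$ should be normally distributed with mean $\mu$ and variance $\sigma_e^2 / (1 - \phi^2)$ according to the derived properties and the assumption of normally distributed $e$. Hence the likelihood function becomes

$$\begin{aligned} L(\phi, \mu, \sigma_e^2) &= f_{Y_2, \dots, Y_n \mid Y_1}(y_2, \dots, y_n \mid y_1) \cdot f_{Y_1}(y_1) \\ &= (2\pi\sigma_e^2)^{-(n-1)/2} \exp\left(-\frac{1}{2\sigma_e^2} \sum_{t=2}^{n} \bigl[(y_t - \mu) - \phi (y_{t-1} - \mu)\bigr]^2\right) \cdot \left(\frac{2\pi\sigma_e^2}{1 - \phi^2}\right)^{-1/2} \exp\left(-\frac{(1 - \phi^2)(y_1 - \mu)^2}{2\sigma_e^2}\right) \\ &= (2\pi\sigma_e^2)^{-n/2} (1 - \phi^2)^{1/2} \exp\left(-\frac{1}{2\sigma_e^2} S(\phi, \mu)\right) \end{aligned}$$

where

$$S(\phi, \mu) = \sum_{t=2}^{n} \bigl[(Y_t - \mu) - \phi (Y_{t-1} - \mu)\bigr]^2 + (1 - \phi^2)(Y_1 - \mu)^2$$

and the MLEs of the parameters $\phi$, $\mu$ and $\sigma_e^2$ are found as the values that maximise $L$.

Compromise between MLE and conditional least squares: unconditional least-squares estimates of $\phi$ and $\mu$ are found by minimising

$$S(\phi, \mu) = \sum_{t=2}^{n} \bigl[(Y_t - \mu) - \phi (Y_{t-1} - \mu)\bigr]^2 + (1 - \phi^2)(Y_1 - \mu)^2$$

The likelihood function can be set up for any ARMA model; however, it is more involved for models more complex than AR(1). The estimation needs (with a few exceptions) to be carried out numerically.

Properties of the estimates

Maximum-likelihood estimators have a well-established asymptotic theory: $\hat\theta_{MLE}$ is asymptotically unbiased and

$$\hat\theta_{MLE} \sim N(\theta, I_\theta^{-1})$$

where $I_\theta$ is the Fisher information,

$$I_\theta = E\left[\left(\frac{\partial \log L}{\partial \theta}\right)^2\right]$$

(equivalently $-E\left[\partial^2 \log L / \partial \theta^2\right]$ under the usual regularity conditions).

Hence, by deriving large-sample expressions for the variances of the point estimates, these can be used to make inference about the parameters (tests and confidence intervals). See the textbook.

Model diagnostics

Upon estimation of a model, its residuals should be checked as usual.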
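To have residuals to check, a model must first be fitted. As one concrete starting point, the following minimal Python sketch fits an AR(1) by minimising the unconditional sum of squares $S(\phi, \mu)$ and then forms the one-step residuals. It is not from the original slides: the function names are hypothetical, a plain grid search over $\phi$ stands in for a proper optimiser, and for each $\phi$ the minimising $\mu$ is obtained by setting $\partial S / \partial \mu = 0$ (a derivation made for this sketch).

```python
import math

def uls_sum(y, phi, mu):
    """Unconditional sum of squares S(phi, mu) for AR(1)."""
    s = (1 - phi ** 2) * (y[0] - mu) ** 2
    for t in range(1, len(y)):
        s += ((y[t] - mu) - phi * (y[t - 1] - mu)) ** 2
    return s

def profile_mu(y, phi):
    """Value of mu that minimises S(phi, mu) for a fixed phi in (-1, 1)."""
    n = len(y)
    a = sum(y[t] - phi * y[t - 1] for t in range(1, n))
    return (a + (1 + phi) * y[0]) / ((n - 1) * (1 - phi) + (1 + phi))

def fit_ar1_uls(y, steps=199):
    """Grid search over phi in [-0.99, 0.99]; returns (phi, mu, S)."""
    best = None
    for i in range(steps):
        phi = -0.99 + 1.98 * i / (steps - 1)
        mu = profile_mu(y, phi)
        s = uls_sum(y, phi, mu)
        if best is None or s < best[2]:
            best = (phi, mu, s)
    return best

def log_lik_ar1(y, phi, mu, sigma2):
    """Gaussian AR(1) log-likelihood, i.e. log of L(phi, mu, sigma_e^2)."""
    n = len(y)
    return (-0.5 * n * math.log(2 * math.pi * sigma2)
            + 0.5 * math.log(1 - phi ** 2)
            - uls_sum(y, phi, mu) / (2 * sigma2))

def residuals_ar1(y, phi, mu):
    """One-step residuals Y_t - Yhat_t for t = 2, ..., n."""
    return [(y[t] - mu) - phi * (y[t - 1] - mu) for t in range(1, len(y))]
```

The residuals returned by `residuals_ar1` are what the plots and tests in this section would be applied to.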
Residuals $\hat{e}_t = Y_t - \hat{Y}_t$ should be plotted in order to check for

• constant variance (plot them against predicted values)
• normality (Q-Q plots)
• substantial residual autocorrelation (SAC and SPAC plots)

Ljung-Box test statistic

Let $\hat{r}_k$ = SAC at lag $k$ for the obtained residuals $\{\hat{e}_t\}$ and define

$$Q_{*,K} = n(n + 2) \sum_{j=1}^{K} \frac{\hat{r}_j^2}{n - j}$$

If the correct ARMA($p$, $q$) model is estimated, then $Q_{*,K}$ approximately follows a chi-square distribution with $K - p - q$ degrees of freedom. Hence, excessive values of this statistic indicate that the model has been erroneously specified.

The value of $K$ should be chosen large enough to cover the set of autocorrelations that can be expected to be unusually high if the model is wrong.
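The statistic above is straightforward to compute from a list of residuals. The following is a minimal Python sketch, not from the original slides; the function names are hypothetical, and the residuals are mean-corrected before the autocorrelations are computed, which is an assumption of this sketch.

```python
def ljung_box(resid, K):
    """Ljung-Box statistic Q = n (n + 2) * sum_{j=1}^{K} r_j^2 / (n - j)."""
    n = len(resid)
    rbar = sum(resid) / n
    dev = [e - rbar for e in resid]
    c0 = sum(d * d for d in dev)
    q = 0.0
    for j in range(1, K + 1):
        # Sample autocorrelation of the residuals at lag j
        r_j = sum(dev[t] * dev[t - j] for t in range(j, n)) / c0
        q += r_j * r_j / (n - j)
    return n * (n + 2) * q

def ljung_box_df(K, p, q):
    """Degrees of freedom of the reference chi-square distribution."""
    return K - p - q
```

The value returned by `ljung_box` would then be compared with an upper quantile of the chi-square distribution with `ljung_box_df(K, p, q)` degrees of freedom, taken from a table or a statistics library.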