
Sufficient Statistics

Dayu

11.11

Some Abbreviations

• i.i.d.: independent, identically distributed

Content

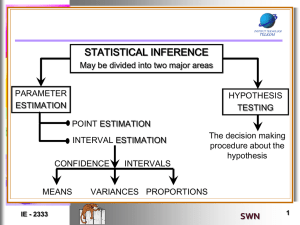

• Estimator, bias, mean squared error (MSE) and minimum-variance unbiased estimator (MVUE)

When is the MVUE unique?

• Lehmann–Scheffé Theorem

– Unbiased

– Complete

– Sufficient

• the Neyman-Fisher factorization criterion

How to construct the MVUE?

• Rao-Blackwell theorem

Estimator

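• The probability mass function (or density) of X is partially unknown, i.e. it has the form f(x;θ), where θ is a parameter varying in the parameter space Θ.

• An estimator is a statistic t(X), computed from the data alone, that is used to estimate θ or a function g(θ).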

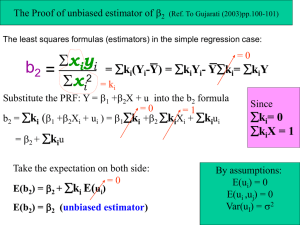

Unbiased

• An estimator ĝ = t(X) is said to be unbiased for a function g(θ) if it equals g(θ) in expectation, i.e. E_θ[t(X)] = g(θ) for every θ ∈ Θ.

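• E.g. the sample mean x̄ = (x1 + … + xn)/n, used to estimate the population mean μ = E(xi), is unbiased:

$$E(\bar{x}) = E\Big(\frac{1}{n}\sum_{i=1}^{n} x_i\Big) = \frac{1}{n}\,E\Big(\sum_{i=1}^{n} x_i\Big) = \frac{1}{n}\sum_{i=1}^{n} E(x_i) = \frac{1}{n}\, n\mu = \mu$$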
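The derivation can be checked numerically. The sketch below is illustrative only and rests on assumptions of our own: normal data with μ = 5, σ = 2, sample size n = 8, and a fixed NumPy seed. Any distribution with a finite mean would behave the same way. It averages the sample mean over many simulated samples, and that average should settle near μ.

```python
import numpy as np

def mean_of_sample_means(mu=5.0, sigma=2.0, n=8, reps=100_000, seed=0):
    """Average of the sample mean over many simulated samples.

    Normal data and the default parameter values are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    samples = rng.normal(mu, sigma, size=(reps, n))  # each row is one i.i.d. sample
    xbar = samples.mean(axis=1)                      # one sample mean per row
    return xbar.mean()                               # Monte Carlo estimate of E(x̄)

if __name__ == "__main__":
    print(mean_of_sample_means())  # should be close to mu = 5.0
```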

Mean Squared Error (MSE)

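• The MSE of an estimator T of an unobservable parameter θ is

$$\mathrm{MSE}(T) = E\big[(T-\theta)^2\big]$$

• Since E(Y²) = Var(Y) + [E(Y)]², taking Y = T − θ (and noting Var(T − θ) = Var(T), because θ is a constant) gives

$$\mathrm{MSE}(T) = \mathrm{Var}(T) + [\mathrm{bias}(T)]^2, \qquad \mathrm{bias}(T) = E(T-\theta) = E(T) - \theta$$

• For an unbiased estimator, MSE(T) = Var(T), since bias(T) = 0.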

Examples

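Two estimators for σ²:

• $\hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})^2$: results from MLE; biased, but smaller variance

• $S^2 = \frac{1}{n-1}\sum_{i=1}^{n}(x_i-\bar{x})^2$: unbiased, but bigger variance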
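A Monte Carlo sketch can make this trade-off concrete. The setup is our own assumption: normal data with σ² = 4, n = 10, and a fixed seed. For each estimator the code estimates bias, variance and MSE, so MSE = Var + bias² can be checked directly. In NumPy, `ddof=0` divides by n (the MLE) and `ddof=1` divides by n − 1 (S²).

```python
import numpy as np

def compare_variance_estimators(sigma2=4.0, n=10, reps=200_000, seed=0):
    """Monte Carlo bias, variance and MSE of the two sigma^2 estimators.

    Normal data and the default parameter values are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    # Each row is one i.i.d. sample of size n from N(0, sigma2)
    x = rng.normal(loc=0.0, scale=np.sqrt(sigma2), size=(reps, n))
    estimates = {
        "mle": x.var(axis=1, ddof=0),       # divides by n: biased
        "unbiased": x.var(axis=1, ddof=1),  # divides by n - 1: unbiased
    }
    results = {}
    for name, t in estimates.items():
        bias = t.mean() - sigma2            # estimate of E(T) - sigma^2
        var = t.var()                       # spread of the estimator across samples
        mse = np.mean((t - sigma2) ** 2)    # estimate of E[(T - sigma^2)^2]
        results[name] = {"bias": bias, "var": var, "mse": mse}
    return results

if __name__ == "__main__":
    for name, r in compare_variance_estimators().items():
        print(f"{name:9s} bias={r['bias']:+.3f} var={r['var']:.3f} mse={r['mse']:.3f}")
```

For normal data, the theoretical MSEs are (2n − 1)σ⁴/n² for the MLE and 2σ⁴/(n − 1) for S². With these settings the simulated values should therefore land near 3.04 and 3.56. In this case the biased estimator also has the smaller MSE.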

Minimum-Variance Unbiased Estimator (MVUE)

• For an unbiased estimator MSE = Var, so an unbiased estimator of minimum MSE also has minimum variance.

• The MVUE is an unbiased estimator whose variance is no larger than that of any other unbiased estimator, for every value of the parameter.

• Two theorems

– Lehmann–Scheffé theorem: can show that the MVUE is unique.

– Rao–Blackwell theorem: constructing an MVUE.

Lehmann–Scheffé Theorem

• Any unbiased estimator that is a function of a complete sufficient statistic is the unique best unbiased estimator of its expectation.

• The Lehmann–Scheffé theorem also states that if a complete sufficient statistic T exists, then the UMVU (uniformly minimum-variance unbiased) estimator of g(θ), if it exists, must be a function of T.

Completeness

• Suppose a random variable X has a probability distribution belonging to a known family of probability distributions, parameterized by θ.

• A function g(X) is an unbiased estimator of zero if the expectation E_θ[g(X)] remains zero regardless of the value of θ (by the definition of unbiasedness).

• Then X is a complete statistic precisely if it admits no such unbiased estimator of zero except 0 itself (up to a set of measure zero). That is, E_θ[g(X)] = 0 for all θ implies g(X) = 0 almost surely.

Example of Completeness

• Suppose X1, X2 are i.i.d. random variables, normally distributed with expectation θ and variance 1.

• Not complete: X1 − X2 is an unbiased estimator of zero, since E(X1 − X2) = θ − θ = 0 for every θ, yet X1 − X2 is not identically zero. Therefore the pair (X1, X2) is not a complete statistic.

• Complete: on the other hand, the sum X1 + X2 can be shown to be a complete statistic. That means there is no non-zero function g such that E(g(X1 + X2)) remains zero regardless of the value of θ.

Detailed Explanations

• X1 + X2 ~ N(2θ, 2)
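One standard way to fill in the details uses the Laplace transform. This is a sketch that assumes g is integrable against the normal density for every θ. Write T = X1 + X2, so T ~ N(2θ, 2) has density (1/√(4π)) e^{−(t−2θ)²/4}. Expanding (t − 2θ)²/4 = t²/4 − θt + θ² gives

$$E_\theta[g(T)] = \frac{1}{\sqrt{4\pi}} \int_{-\infty}^{\infty} g(t)\, e^{-(t-2\theta)^2/4}\, dt = \frac{e^{-\theta^2}}{\sqrt{4\pi}} \int_{-\infty}^{\infty} g(t)\, e^{-t^2/4}\, e^{\theta t}\, dt$$

Suppose this is zero for every θ ∈ ℝ. Then the integral is zero for every θ, which says the two-sided Laplace transform of g(t)e^{−t²/4} vanishes on the whole real line. By uniqueness of the Laplace transform, g(t)e^{−t²/4} = 0 for almost every t. Hence g = 0 almost everywhere, and T is complete.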

Sufficiency

• Consider an i.i.d. sample X1, X2, …, Xn.

• Two people, A and B:

– A observes the entire sample X1, X2, …, Xn

– B observes only one number T = T(X1, X2, …, Xn)

• Intuitively, who has more information?

• Under what condition will B have as much information about θ as A has?

Sufficiency

• Definition:

– A statistic T(X) is sufficient for θ precisely if the conditional probability distribution of the data X given the statistic T(X) does not depend on θ.

• How to find one? The Neyman–Fisher factorization criterion: if the probability density function of X is f(x;θ), then T is sufficient for θ if and only if functions g and h can be found such that

$$f(x;\theta) = h(x)\, g\big(T(x), \theta\big)$$

• h(x): a function that does not depend on θ

• g(T(x), θ): a function that depends on the data only through T(x)

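• E.g. T = x1 + x2 + … + xn is a sufficient statistic for p in the Bernoulli distribution B(p):

$$f(x;p) = \prod_{i=1}^{n} p^{x_i}(1-p)^{1-x_i} = \underbrace{p^{T}(1-p)^{n-T}}_{g(T(x),\,p)} \cdot \underbrace{1}_{h(x)}$$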
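The definition of sufficiency can also be checked directly for this Bernoulli example. The sketch below enumerates every outcome of n draws and computes P(X = x | T = t) exactly with rational arithmetic. It confirms that the result is 1/C(n, t) whatever the value of p. The function name and the chosen values of n and p are our own, for illustration.

```python
from fractions import Fraction
from itertools import product
from math import comb

def conditional_given_T(n, p):
    """Exact P(X = x | T = t) for n i.i.d. Bernoulli(p) draws, with T = sum(x)."""
    joint = {}
    for x in product((0, 1), repeat=n):
        t = sum(x)
        joint[x] = p**t * (1 - p) ** (n - t)   # f(x; p) = p^T (1 - p)^(n - T)
    # P(T = t): sum the joint pmf over all outcomes with the same total
    p_t = {}
    for x, prob in joint.items():
        p_t[sum(x)] = p_t.get(sum(x), 0) + prob
    return {x: prob / p_t[sum(x)] for x, prob in joint.items()}

if __name__ == "__main__":
    n = 4
    for p in (Fraction(1, 5), Fraction(2, 3)):
        cond = conditional_given_T(n, p)
        # Same conditional pmf, 1 / C(n, t), for every p: T is sufficient
        assert all(v == Fraction(1, comb(n, sum(x))) for x, v in cond.items())
    print("conditional distribution of X given T does not depend on p")
```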

Example 2

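Test T = x1 + x2 + … + xn for the Poisson distribution Π(λ):

$$f(x;\lambda) = \prod_{i=1}^{n} \frac{e^{-\lambda}\lambda^{x_i}}{x_i!} = \underbrace{e^{-n\lambda}\lambda^{T}}_{g(T(x),\,\lambda)} \cdot \underbrace{\frac{1}{\prod_{i=1}^{n} x_i!}}_{h(x):\ \text{independent of } \lambda}$$

Hence, T = x1 + x2 + … + xn is sufficient!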

Notes on Sufficient Statistics

• Note that the sufficient statistic is not unique. If T(x) is sufficient, so is any one-to-one function of it, e.g. T(x)/n, or log(T(x)) when T(x) > 0.

Rao-Blackwell theorem

• Named after

– C. R. Rao (1920–2023), a famous Indian statistician and professor emeritus at Penn State University

– David Blackwell (1919–2010), Professor Emeritus of Statistics at UC Berkeley

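• Describes a technique that can transform an absurdly crude estimator into an estimator that is no worse by the mean-squared-error criterion, or any of a variety of similar criteria. When the sufficient statistic is also complete, the result is optimal among unbiased estimators (Lehmann–Scheffé).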

Rao-Blackwell theorem

• Definition: a Rao–Blackwell estimator δ1(X) of an unobservable quantity θ is the conditional expected value E(δ(X) | T(X)) of some estimator δ(X) given a sufficient statistic T(X).

– δ(X): the "original estimator"

– δ1(X): the "improved estimator"

• The mean squared error of the Rao–Blackwell estimator does not exceed that of the original estimator.
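The reason the MSE cannot go up can be sketched with the standard argument, assuming δ(X) has finite variance. Sufficiency guarantees that δ1 = E(δ | T) does not depend on θ, so it is a genuine estimator. The tower property gives E(δ1) = E(δ), so both estimators have the same bias. The law of total variance then gives

$$\mathrm{Var}(\delta) = E\big[\mathrm{Var}(\delta \mid T)\big] + \mathrm{Var}\big(E(\delta \mid T)\big) = E\big[\mathrm{Var}(\delta \mid T)\big] + \mathrm{Var}(\delta_1) \ \ge\ \mathrm{Var}(\delta_1)$$

Combining this with MSE = Var + bias²:

$$\mathrm{MSE}(\delta_1) = \mathrm{Var}(\delta_1) + [\mathrm{bias}(\delta)]^2 \ \le\ \mathrm{Var}(\delta) + [\mathrm{bias}(\delta)]^2 = \mathrm{MSE}(\delta)$$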

Conditional Expectation

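$$B = \{x \in \mathcal{X} \mid f(x) = b\}$$

$$E\big(f(x) \mid B\big) = \sum_{x \in B} P(x \mid x \in B)\, f(x)$$

$$P(x \mid x \in B) = \begin{cases} \dfrac{P(x)}{P(x \in B)}, & x \in B \\[4pt] 0, & x \notin B \end{cases}$$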
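On a finite sample space, the conditional expectation formula can be evaluated by direct enumeration. In the sketch below, the function name and the dice example are our own illustrations. The event B is passed as a predicate, so any event with positive probability can be used. The demo conditions on the value of a statistic, the sum of two fair dice, which is the kind of conditioning the Rao–Blackwell construction relies on.

```python
from fractions import Fraction
from itertools import product

def conditional_expectation(pmf, f, in_B):
    """E(f(x) | B) = sum over x in B of P(x | x in B) f(x), on a finite space.

    pmf  : dict mapping each outcome x to P(x)
    f    : function of an outcome
    in_B : predicate that is True when x belongs to the event B
    """
    p_B = sum(p for x, p in pmf.items() if in_B(x))   # P(x in B)
    if p_B == 0:
        raise ValueError("conditioning event B has probability zero")
    # P(x | x in B) = P(x) / P(x in B) inside B; outcomes outside B contribute 0
    return sum(p / p_B * f(x) for x, p in pmf.items() if in_B(x))

if __name__ == "__main__":
    # Two fair dice: expected value of the first die given that the total is 8
    dice = {x: Fraction(1, 36) for x in product(range(1, 7), repeat=2)}
    print(conditional_expectation(dice, lambda x: x[0], lambda x: sum(x) == 8))
```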

Example I

• Phone calls arrive at a switchboard according to a Poisson process at an average rate of λ per minute.

• λ is not observable.

• Observed: the numbers of phone calls X1, …, Xn that arrived during n successive one-minute periods.

• It is desired to estimate the probability e^(−λ) that the next one-minute period passes with no phone calls.

Original estimator: δ(X) = 1 if X1 = 0, and δ(X) = 0 otherwise

t = x1 + x2 + … + xn is sufficient
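Conditioning the crude estimator on t gives the improved estimator δ1 = P(X1 = 0 | T = t) = (1 − 1/n)^t, because given T = t, X1 is Binomial(t, 1/n). The sketch below compares the two estimators by simulation. The values λ = 2 and n = 10, the replication count and the seed are arbitrary assumptions chosen for illustration.

```python
import numpy as np

def compare_estimators(lam=2.0, n=10, reps=100_000, seed=1):
    """Monte Carlo mean and MSE of the crude and Rao-Blackwellized estimators of exp(-lam)."""
    rng = np.random.default_rng(seed)
    x = rng.poisson(lam, size=(reps, n))     # each row: counts in n one-minute periods
    target = np.exp(-lam)                    # quantity being estimated
    crude = (x[:, 0] == 0).astype(float)     # delta(X) = 1 if X1 == 0 else 0
    t = x.sum(axis=1)                        # sufficient statistic T
    improved = (1 - 1 / n) ** t              # delta_1 = E(delta | T = t)

    def mse(est):
        return np.mean((est - target) ** 2)

    return {
        "crude_mse": mse(crude),
        "improved_mse": mse(improved),
        "crude_mean": crude.mean(),
        "improved_mean": improved.mean(),
    }

if __name__ == "__main__":
    print(compare_estimators())
```

Both estimators are unbiased, since E[(1 − 1/n)^T] = e^(−λ) for T ~ Poisson(nλ). Their variances, however, differ sharply. For λ = 2 and n = 10 the theoretical MSEs are e^(−λ)(1 − e^(−λ)) ≈ 0.117 for the crude estimator and e^(−2λ + λ/n) − e^(−2λ) ≈ 0.004 for the improved one.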

Example II

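• To estimate λ for X1, …, Xn ~ P(λ), i.i.d.

• Original estimator: X1

We know t = X1 + … + Xn is sufficient.

• Improved estimator by the R-B theorem: E[X1 | X1 + … + Xn = t], which is not easy to compute directly. However, writing T = X1 + … + Xn,

$$\sum_{i=1}^{n} E(X_i \mid T = t) = E\Big(\sum_{i=1}^{n} X_i \,\Big|\, T = t\Big) = E(T \mid T = t) = t$$

• Since X1, …, Xn are i.i.d., by symmetry every term in the sum is equal, so each one is t/n.

In fact, the improved estimator is the sample mean x̄ = t/n.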
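The symmetry argument can be cross-checked by computing E[X1 | T = t] straight from the Poisson pmfs. The computation uses the facts that X1 ~ Poisson(λ), X2 + … + Xn ~ Poisson((n − 1)λ) and T ~ Poisson(nλ). The helper names and test values below are our own. The result equals t/n for every λ tried, as sufficiency predicts.

```python
from math import exp, factorial

def poisson_pmf(k, mu):
    """P(K = k) for K ~ Poisson(mu)."""
    return exp(-mu) * mu**k / factorial(k)

def cond_mean_x1(t, n, lam):
    """E[X1 | X1 + ... + Xn = t] for i.i.d. Poisson(lam), computed from the pmfs."""
    p_t = poisson_pmf(t, n * lam)                       # P(T = t), T ~ Poisson(n lam)
    # P(X1 = k, T = t) = P(X1 = k) * P(X2 + ... + Xn = t - k)
    total = sum(k * poisson_pmf(k, lam) * poisson_pmf(t - k, (n - 1) * lam)
                for k in range(t + 1))
    return total / p_t

if __name__ == "__main__":
    for lam in (0.5, 3.0):
        print(lam, cond_mean_x1(t=7, n=4, lam=lam))   # both close to 7 / 4
```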

Thank you!