estimator - Indico


Probability and Statistics

Basic concepts II

(from a physicist's point of view)

Benoit CLEMENT – Université J. Fourier / LPSC

bclement@lpsc.in2p3.fr

Statistics

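[Diagram: PHYSICS fixes the parameters θ of the POPULATION, described by an observable x with pdf f(x;θ). An EXPERIMENT draws a finite-size SAMPLE {xi} from the population, and INFERENCE goes back from the sample to the parameters.]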

Parametric estimation :

give one value to each parameter

Interval estimation :

derive an interval that probably contains the true value

Non-parametric estimation :

estimate the full pdf of the population

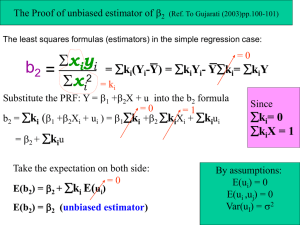

Parametric estimation

From a finite sample {xi} estimating a parameter θ

Statistic = a function S = f({xi})

Any statistic can be considered as an estimator of θ

To be a good estimator it needs to satisfy :

• Consistency : the estimator converges to the true value in the limit of an infinite sample

• Bias : difference between the mean value of the estimator and the true value

• Efficiency : speed of convergence

• Robustness : sensitivity to statistical fluctuations

A good estimator should at least be consistent and asymptotically unbiased

Efficiency, unbiasedness and robustness often conflict with each other : different choices for different applications

Bias and consistency

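As the sample is a set of realizations of random variables (or one vector variable), so is the estimator :

$\hat\theta$ is a realization of $\hat\Theta$

it has a mean, a variance, … and a probability density function

Bias : mean value of the estimator minus the true value $\theta_0$

$$b(\hat\theta) = E[\hat\Theta - \theta_0] = \mu_{\hat\Theta} - \theta_0$$

unbiased estimator : $b(\hat\theta) = 0$

asymptotically unbiased : $b(\hat\theta) \xrightarrow[n\to\infty]{} 0$

Consistency : formally

$$P(|\hat\theta - \theta_0| > \varepsilon) \xrightarrow[n\to\infty]{} 0, \quad \forall \varepsilon > 0$$

in practice, if asymptotically unbiased : $\sigma_{\hat\Theta} \xrightarrow[n\to\infty]{} 0$

[Figure: estimator pdfs for a biased, an asymptotically unbiased and an unbiased estimator]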

Empirical estimator

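Sample mean is a good estimator of the population mean

weak law of large numbers : convergent, unbiased

$$\hat\mu = \frac{1}{n}\sum_i x_i, \qquad \mu_{\hat\mu} = E[\hat\mu] = \mu, \qquad \sigma^2_{\hat\mu} = E[(\hat\mu - \mu)^2] = \frac{\sigma^2}{n}$$

Sample variance as an estimator of the population variance :

$$\hat s^2 = \frac{1}{n}\sum_i (x_i - \hat\mu)^2 = \frac{1}{n}\sum_i (x_i - \mu)^2 - (\hat\mu - \mu)^2$$

$$E[\hat s^2] = \sigma^2 - \sigma^2_{\hat\mu} = \sigma^2 - \frac{\sigma^2}{n} = \frac{n-1}{n}\,\sigma^2$$

biased, asymptotically unbiased

unbiased variance estimator :

$$\hat\sigma^2 = \frac{1}{n-1}\sum_i (x_i - \hat\mu)^2$$

variance of the estimator (convergence), with $\gamma_2$ the excess kurtosis of the population :

$$\sigma^2_{\hat\sigma^2} = \frac{\sigma^4}{n-1}\left(\frac{n-1}{n}\,\gamma_2 + 2\right) \approx \frac{2\sigma^4}{n}$$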

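Errors on these estimators

Uncertainty = estimator standard deviation

Use an estimator of the standard deviation : $\hat\sigma = \sqrt{\hat\sigma^2}$ (!!! biased)

Mean :

$$\hat\mu = \frac{1}{n}\sum_i x_i, \qquad \sigma^2_{\hat\mu} = \frac{\sigma^2}{n} \;\Rightarrow\; \Delta\hat\mu = \sqrt{\frac{\hat\sigma^2}{n}}$$

Variance :

$$\hat\sigma^2 = \frac{1}{n-1}\sum_i (x_i - \hat\mu)^2, \qquad \sigma^2_{\hat\sigma^2} \approx \frac{2\sigma^4}{n} \;\Rightarrow\; \Delta\hat\sigma^2 = \sqrt{\frac{2}{n}}\;\hat\sigma^2$$

Central-Limit theorem -> empirical estimators of mean and variance are normally distributed, for large enough samples

$\hat\mu \pm \Delta\hat\mu$ ; $\hat\sigma^2 \pm \Delta\hat\sigma^2$ define 68% confidence intervals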
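As a minimal sketch of the empirical estimators and their uncertainties, the snippet below computes the sample mean, the unbiased variance (division by n-1) and the errors sqrt(σ̂²/n) and sqrt(2/n)·σ̂². The function name and the data values are hypothetical, chosen only for illustration; the error on the variance assumes a large, roughly Gaussian sample.

```python
import math

def mean_and_variance(sample):
    """Return (mu_hat, d_mu, var_hat, d_var) for a list of numbers."""
    n = len(sample)
    mu_hat = sum(sample) / n
    # Unbiased variance estimator: divide by n - 1, not n.
    var_hat = sum((x - mu_hat) ** 2 for x in sample) / (n - 1)
    d_mu = math.sqrt(var_hat / n)           # error on the mean
    d_var = math.sqrt(2.0 / n) * var_hat    # error on the variance (large-n, Gaussian-like sample)
    return mu_hat, d_mu, var_hat, d_var

# Hypothetical measurements, for illustration only.
data = [9.8, 10.1, 10.4, 9.7, 10.0, 10.3, 9.9, 10.2]
mu_hat, d_mu, var_hat, d_var = mean_and_variance(data)
print(f"mean     = {mu_hat:.3f} +/- {d_mu:.3f}")
print(f"variance = {var_hat:.4f} +/- {d_var:.4f}")
```

With only 8 values the quoted intervals are indicative at best, since the normal approximation relies on a large enough sample.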

Likelihood function

Generic function k(x,θ)

x : random variable(s)

θ : parameter(s)

fix θ = θ0 (true value) : Probability density function

f(x;θ) = k(x,θ0)

∫ f(x;θ) dx = 1

for Bayesian f(x|θ) = f(x;θ)

fix x = u (one realization of the random variable) : Likelihood function

L(θ) = k(u,θ)

∫ L(θ) dθ = ???

for Bayesian f(θ|x) = L(θ)/∫ L(θ)dθ

For a sample : n independent realizations of the same variable X

$$L(\theta) = \prod_i k(x_i, \theta) = \prod_i f(x_i; \theta)$$

Estimator variance

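Start from the generic k function and differentiate twice, with respect to θ, the pdf normalization condition : $1 = \int k(x,\theta)\,dx$

$$0 = \int \frac{\partial k}{\partial\theta}\,dx = \int k\,\frac{\partial \ln k}{\partial\theta}\,dx = E\left[\frac{\partial \ln k}{\partial\theta}\right] \;\Rightarrow\; (b+\theta)\,E\left[\frac{\partial \ln k}{\partial\theta}\right] = 0$$

$$0 = \int \frac{\partial^2 k}{\partial\theta^2}\,dx = \int k\,\frac{\partial^2 \ln k}{\partial\theta^2}\,dx + \int k\left(\frac{\partial \ln k}{\partial\theta}\right)^2 dx = E\left[\frac{\partial^2 \ln k}{\partial\theta^2}\right] + E\left[\left(\frac{\partial \ln k}{\partial\theta}\right)^2\right]$$

Now differentiating the estimator bias : $\theta + b = \int \hat\theta(x)\,k(x,\theta)\,dx$

$$1 + \frac{\partial b}{\partial\theta} = \int \hat\theta\,\frac{\partial k}{\partial\theta}\,dx = \int \hat\theta\,k\,\frac{\partial \ln k}{\partial\theta}\,dx = \int (\hat\theta - b - \theta)\,k\,\frac{\partial \ln k}{\partial\theta}\,dx$$

Finally, using the Cauchy-Schwarz inequality

$$\left(1 + \frac{\partial b}{\partial\theta}\right)^2 \le \int (\hat\theta - b - \theta)^2\,k\,dx \int \left(\frac{\partial \ln k}{\partial\theta}\right)^2 k\,dx = \sigma^2_{\hat\Theta}\;E\left[\left(\frac{\partial \ln k}{\partial\theta}\right)^2\right]$$

Cramer-Rao bound :

$$\sigma^2_{\hat\Theta} \ge \frac{\left(1 + \frac{\partial b}{\partial\theta}\right)^2}{E\left[\left(\frac{\partial \ln k}{\partial\theta}\right)^2\right]}$$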

Efficiency

For any unbiased estimator of θ, the variance cannot be smaller than :

$$\sigma^2_{\hat\Theta} \ge \frac{1}{E\left[\left(\frac{\partial \ln L}{\partial\theta}\right)^2\right]} = \frac{-1}{E\left[\frac{\partial^2 \ln L}{\partial\theta^2}\right]}$$

The efficiency of a convergent estimator is given by its variance.

An efficient estimator reaches the Cramer-Rao bound (at least asymptotically) : Minimal variance estimator (MVE)

MVE will often be biased, asymptotically unbiased

Maximum likelihood

For a sample of measurements, {xi}

The analytical form of the density is known

It depends on several unknown parameters θ

e.g. event counting : the counts follow a Poisson distribution, with a parameter that depends on the physics : λi(θ)

$$L(\theta) = \prod_i \frac{e^{-\lambda_i(\theta)}\,\lambda_i(\theta)^{x_i}}{x_i!}$$

An estimator of the parameters θ is given by the values that maximize the probability of observing the observed result.

Maximum of the likelihood function

$$\left.\frac{\partial L}{\partial\theta}\right|_{\theta=\hat\theta} = 0$$

rem : system of equations for several parameters

rem : often minimize -lnL : simplify expressions
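The recipe "minimize -lnL" can be sketched for the simplest counting case, where all counts share one Poisson mean λ (so θ = λ). The golden-section minimizer, the search range and the counts below are assumptions made for this illustration, not part of the lecture; for this model the maximum is known analytically to be the sample mean, which gives a cross-check.

```python
import math

def neg_log_likelihood(lam, counts):
    """-ln L for independent Poisson counts sharing one mean lam."""
    return sum(lam - x * math.log(lam) + math.lgamma(x + 1) for x in counts)

def minimize_1d(func, lo, hi, tol=1e-8):
    """Golden-section search for the minimum of a unimodal function on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if func(c) < func(d):
            b = d   # minimum lies in [a, d]
        else:
            a = c   # minimum lies in [c, b]
    return 0.5 * (a + b)

counts = [3, 7, 4, 6, 5, 2, 8, 5]   # hypothetical observed counts
lam_hat = minimize_1d(lambda lam: neg_log_likelihood(lam, counts), 0.1, 20.0)
print(f"lambda_hat = {lam_hat:.4f}, sample mean = {sum(counts) / len(counts):.4f}")
```

For several parameters the same idea applies with a multidimensional minimizer in place of the one-dimensional search.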

Properties of MLE

Mostly asymptotic properties : valid for large sample, often

assumed in any case for lack of better information

Asymptotically unbiased

Asymptotically efficient (reaches the CR bound)

Asymptotically normally distributed

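Multinormal law, with covariance given by generalization of CR Bound :

$$f(\hat\theta; \theta, \Sigma) = \frac{1}{\sqrt{|2\pi\Sigma|}}\;e^{-\frac{1}{2}(\hat\theta - \theta)^T \Sigma^{-1} (\hat\theta - \theta)}, \qquad \Sigma^{-1}_{ij} = -E\left[\frac{\partial^2 \ln L}{\partial\theta_i\,\partial\theta_j}\right]$$

Goodness of fit = The value of $-2\ln L(\hat\theta)$ is chi-square distributed, with ndf = sample size - number of parameters

$$\text{p-value} = \int_{-2\ln L(\hat\theta)}^{+\infty} f_{\chi^2}(x; \mathrm{ndf})\,dx$$

Probability of getting a worse agreement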

Least squares

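Set of measurements (xi, yi) with uncertainties Δyi on yi

Theoretical law : y = f(x,θ)

Naïve approach : use regression

$$w(\theta) = \sum_i \left(y_i - f(x_i,\theta)\right)^2, \qquad \frac{\partial w}{\partial\theta_i} = 0$$

Reweight each term by the error

$$K^2(\theta) = \sum_i \left(\frac{y_i - f(x_i,\theta)}{\Delta y_i}\right)^2, \qquad \frac{\partial K^2}{\partial\theta_i} = 0$$

Maximum likelihood : assume each yi is normally distributed with a mean equal to f(xi,θ) and a standard deviation equal to Δyi

Then the likelihood is :

$$L(\theta) = \prod_i \frac{1}{\sqrt{2\pi}\,\Delta y_i}\;e^{-\frac{1}{2}\left(\frac{y_i - f(x_i,\theta)}{\Delta y_i}\right)^2}$$

$$\frac{\partial L}{\partial\theta} = 0 \;\Leftrightarrow\; -2\,\frac{\partial \ln L}{\partial\theta} = \frac{\partial K^2}{\partial\theta} = 0$$

Least squares or chi-square fit is the MLE, for Gaussian errors

Generic case with correlations :

$$K^2(\theta) = (y - f(x,\theta))^T\,\Sigma^{-1}\,(y - f(x,\theta))$$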

Errors on MLE

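$$f(\hat\theta; \theta, \Sigma) = \frac{1}{\sqrt{|2\pi\Sigma|}}\;e^{-\frac{1}{2}(\hat\theta - \theta)^T \Sigma^{-1} (\hat\theta - \theta)}, \qquad \Sigma^{-1}_{ij} = -E\left[\frac{\partial^2 \ln L}{\partial\theta_i\,\partial\theta_j}\right]$$

Errors on parameters -> from the covariance matrix

For one parameter, 68% interval :

$$\Delta\hat\theta = \hat\sigma_{\hat\theta} = \sqrt{\frac{-1}{\left.\frac{\partial^2 \ln L}{\partial\theta^2}\right|_{\theta=\hat\theta}}}$$

(only one realization of the estimator -> empirical mean of 1 value…)

More generally :

$$\Delta\ln L = \ln L(\hat\theta) - \ln L(\theta) = \frac{1}{2}\sum_{i,j}\Sigma^{-1}_{ij}\,(\theta_i - \hat\theta_i)(\theta_j - \hat\theta_j) + O(\theta^3)$$

Confidence contours are defined by the equation :

$$\Delta\ln L = \beta(n_\theta, \alpha) \quad\text{with}\quad \alpha = \int_0^{2\beta} f_{\chi^2}(x; n_\theta)\,dx$$

Values of β for different numbers of parameters nθ and confidence levels α :

| α (%) | nθ = 1 (β = 0.5·nσ²) | nθ = 2 | nθ = 3 |
|---|---|---|---|
| 68.3 | 0.5 | 1.15 | 1.76 |
| 95.4 | 2 | 3.09 | 4.01 |
| 99.7 | 4.5 | 5.92 | 7.08 |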
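To make the two error definitions concrete, here is a small sketch for a single parameter: the common mean λ of a set of Poisson counts. It compares the parabolic error from the curvature of lnL with the interval where ΔlnL = 0.5. The counts, the bisection helper and its search brackets are hypothetical choices for this illustration.

```python
import math

counts = [3, 7, 4, 6, 5, 2, 8, 5]   # hypothetical observed counts
n, total = len(counts), sum(counts)
lam_hat = total / n                 # analytic MLE of a common Poisson mean

def log_likelihood(lam):
    # Terms independent of lam (the ln x_i! sum) are dropped; they cancel in Delta lnL.
    return total * math.log(lam) - n * lam

def crossing(lo, hi, beta=0.5):
    """Bisection for lnL(lam_hat) - lnL(lam) = beta between lo and hi."""
    def g(lam):
        return log_likelihood(lam_hat) - log_likelihood(lam) - beta
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if (g(lo) > 0) == (g(mid) > 0):
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Parabolic error from the curvature: -d2lnL/dlam2 = total / lam^2 at lam_hat.
sigma_curv = math.sqrt(lam_hat ** 2 / total)
lower = crossing(1e-3, lam_hat)
upper = crossing(lam_hat, 10.0 * lam_hat)
print(f"lam_hat = {lam_hat:.3f} +/- {sigma_curv:.3f} (curvature)")
print(f"Delta lnL = 0.5 interval: [{lower:.3f}, {upper:.3f}]")
```

The ΔlnL interval is slightly asymmetric around the estimate, which the parabolic approximation cannot reproduce; the two agree as the sample grows.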

Example : fitting a line

For f(x)=ax

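$$K^2(a) = A a^2 - 2 B a + C = -2\ln L$$

$$A = \sum_i \frac{x_i^2}{\Delta y_i^2}, \qquad B = \sum_i \frac{x_i y_i}{\Delta y_i^2}, \qquad C = \sum_i \frac{y_i^2}{\Delta y_i^2}$$

$$\frac{\partial K^2}{\partial a} = 2Aa - 2B = 0 \;\Rightarrow\; \hat a = \frac{B}{A}$$

$$\frac{\partial^2 K^2}{\partial a^2} = 2A = \frac{2}{\sigma_a^2} \;\Rightarrow\; \Delta\hat a = \sigma_a = \frac{1}{\sqrt{A}}$$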
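The one-parameter fit f(x) = ax can be sketched directly from the sums A, B and C, with â = B/A and σa = 1/√A. The function name and the data points are hypothetical, chosen only to illustrate the formulas.

```python
import math

def fit_through_origin(x, y, dy):
    """Weighted least-squares fit of y = a*x; returns (a_hat, sigma_a, K2_min)."""
    A = sum(xi ** 2 / si ** 2 for xi, si in zip(x, dy))
    B = sum(xi * yi / si ** 2 for xi, yi, si in zip(x, y, dy))
    C = sum(yi ** 2 / si ** 2 for yi, si in zip(y, dy))
    a_hat = B / A
    sigma_a = 1.0 / math.sqrt(A)
    k2_min = A * a_hat ** 2 - 2.0 * B * a_hat + C   # value of K^2 at the minimum
    return a_hat, sigma_a, k2_min

# Hypothetical measurements with equal uncertainties.
x = [1.0, 2.0, 3.0, 4.0]
y = [2.1, 3.9, 6.2, 7.8]
dy = [0.2, 0.2, 0.2, 0.2]
a_hat, sigma_a, k2_min = fit_through_origin(x, y, dy)
print(f"a = {a_hat:.3f} +/- {sigma_a:.3f}, K2_min = {k2_min:.3f} for {len(x) - 1} dof")
```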

Example : fitting a line

For f(x)=ax+b

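$$K^2(a,b) = A a^2 + B b^2 + 2Cab - 2Da - 2Eb + F = -2\ln L$$

$$A = \sum_i \frac{x_i^2}{\Delta y_i^2}, \quad B = \sum_i \frac{1}{\Delta y_i^2}, \quad C = \sum_i \frac{x_i}{\Delta y_i^2}, \quad D = \sum_i \frac{x_i y_i}{\Delta y_i^2}, \quad E = \sum_i \frac{y_i}{\Delta y_i^2}, \quad F = \sum_i \frac{y_i^2}{\Delta y_i^2}$$

$$\frac{\partial K^2}{\partial a} = 2Aa + 2Cb - 2D = 0, \qquad \frac{\partial K^2}{\partial b} = 2Ca + 2Bb - 2E = 0$$

$$\hat a = \frac{BD - EC}{AB - C^2}, \qquad \hat b = \frac{AE - DC}{AB - C^2}$$

$$\frac{\partial^2 K^2}{\partial a^2} = 2A = 2\Sigma^{-1}_{11}, \qquad \frac{\partial^2 K^2}{\partial b^2} = 2B = 2\Sigma^{-1}_{22}, \qquad \frac{\partial^2 K^2}{\partial a\,\partial b} = 2C = 2\Sigma^{-1}_{12}$$

$$\Sigma^{-1} = \begin{pmatrix} A & C \\ C & B \end{pmatrix}, \qquad \Sigma = \frac{1}{AB - C^2}\begin{pmatrix} B & -C \\ -C & A \end{pmatrix}$$

$$\Delta\hat a = \sigma_a = \sqrt{\frac{B}{AB - C^2}}, \qquad \Delta\hat b = \sigma_b = \sqrt{\frac{A}{AB - C^2}}$$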
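A sketch of the two-parameter fit f(x) = ax + b follows, assuming the sums are defined so that K² = Aa² + Bb² + 2Cab - 2Da - 2Eb + F (A from xi², B from 1, C from xi, D from xi·yi, E from yi, each divided by Δyi²). The function name and data are hypothetical.

```python
import math

def fit_line(x, y, dy):
    """Weighted least-squares fit of y = a*x + b.

    Returns (a_hat, b_hat, sigma_a, sigma_b, cov_ab).
    """
    w = [1.0 / s ** 2 for s in dy]
    A = sum(wi * xi ** 2 for wi, xi in zip(w, x))
    B = sum(w)
    C = sum(wi * xi for wi, xi in zip(w, x))
    D = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
    E = sum(wi * yi for wi, yi in zip(w, y))
    det = A * B - C ** 2
    a_hat = (B * D - E * C) / det
    b_hat = (A * E - C * D) / det
    # Covariance matrix = inverse of [[A, C], [C, B]].
    sigma_a = math.sqrt(B / det)
    sigma_b = math.sqrt(A / det)
    cov_ab = -C / det
    return a_hat, b_hat, sigma_a, sigma_b, cov_ab

# Hypothetical measurements with equal uncertainties.
x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.1, 4.9, 7.0]
dy = [0.1, 0.1, 0.1, 0.1]
a_hat, b_hat, sigma_a, sigma_b, cov_ab = fit_line(x, y, dy)
rho_ab = cov_ab / (sigma_a * sigma_b)
print(f"a = {a_hat:.3f} +/- {sigma_a:.3f}, b = {b_hat:.3f} +/- {sigma_b:.3f}, rho = {rho_ab:.3f}")
```

The negative correlation between slope and intercept is typical when all xi lie on the same side of the origin; it is what tilts the error ellipse.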

Example : fitting a line

2 dimensional error contours on a and b

68.3% : ΔK² = 2.3 ; 95.4% : ΔK² = 6.2 ; 99.7% : ΔK² = 11.8

Example : fitting a line

1 dimensional error from contours on a and b

1d errors (68.3%) are given by the edges of the ΔK² = 1 ellipse : depends on covariance

$$\tan 2\varphi = \frac{2\,\rho_{ab}\,\sigma_a\,\sigma_b}{\sigma_b^2 - \sigma_a^2}$$

Frequentist vs Bayes

Frequentist

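Estimator of the parameters θ maximises the probability to observe the data.

Maximum of the likelihood function

$$\left.\frac{\partial L}{\partial\theta}\right|_{\theta=\hat\theta} = 0, \qquad \Sigma^{-1}_{ij} = -E\left[\frac{\partial^2 \ln L}{\partial\theta_i\,\partial\theta_j}\right], \qquad \Delta\ln L = \beta(n_\theta, \alpha) \;\text{ for }\; \alpha = \int_0^{2\beta} f_{\chi^2}(x; n_\theta)\,dx$$

Bayesian

Bayes theorem links :

The probability of θ knowing the data : a posteriori

to the probability of the data knowing θ : likelihood

$$f(\theta|m) = \frac{L(\theta)\,\pi(\theta)}{\int L(\theta)\,\pi(\theta)\,d\theta} = \frac{L(\theta)}{\int L(\theta)\,d\theta} \;\text{ (flat prior)}, \qquad \int_a^b f(\theta|m)\,d\theta = \alpha$$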

Confidence interval

For a random variable, a confidence interval with confidence level α is any interval [a,b] such that :

$$P(X \in [a,b]) = \int_a^b f_X(x)\,dx = \alpha$$

Probability of finding a realization inside the interval

Generalization of the concept of uncertainty : interval that contains the true value with a given probability -> slightly different concepts

For Bayesians : the posterior density is the probability density of the true value. It can be used to derive an interval :

$$P(\theta \in [a,b]) = \alpha$$

No such thing for a Frequentist : the interval itself becomes the random variable, [a,b] is a realization of [A,B]

$$P(A < \theta \text{ and } B > \theta) = \alpha$$

independently of θ

Confidence interval

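Mean centered, symmetric interval [μ-a, μ+a] :

$$\int_{\mu-a}^{\mu+a} f(x)\,dx = \alpha$$

Mean centered, probability symmetric interval [a, b] :

$$\int_a^{\mu} f(x)\,dx = \int_{\mu}^{b} f(x)\,dx = \frac{\alpha}{2}$$

Highest Probability Density (HPD) interval [a, b] :

$$\int_a^b f(x)\,dx = \alpha, \qquad f(x) > f(y) \;\text{ for } x \in [a,b] \text{ and } y \notin [a,b]$$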

Confidence Belt

To build a frequentist interval for an estimator $\hat\theta$ of θ :

1. Make pseudo-experiments for several values of θ and compute the estimator $\hat\theta$ for each (MC sampling of the estimator pdf)

2. For each θ, determine Ξ(θ) and Ω(θ) such that :

$\hat\theta < \Xi(\theta)$ for a fraction (1-α)/2 of the pseudo-experiments

$\hat\theta > \Omega(\theta)$ for a fraction (1-α)/2 of the pseudo-experiments

These 2 curves are the confidence belt, for a CL α.

3. Invert these functions. The interval $[\Omega^{-1}(\hat\theta), \Xi^{-1}(\hat\theta)]$ satisfies :

$$P\left(\Omega^{-1}(\hat\theta) < \theta < \Xi^{-1}(\hat\theta)\right) = 1 - P\left(\Xi^{-1}(\hat\theta) < \theta\right) - P\left(\Omega^{-1}(\hat\theta) > \theta\right) = 1 - P\left(\hat\theta < \Xi(\theta)\right) - P\left(\hat\theta > \Omega(\theta)\right) = \alpha$$

[Figure: Confidence belt for a Poisson parameter λ estimated with the empirical mean of 3 realizations (68% CL)]
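The three steps of the belt construction can be sketched with a small Monte Carlo, here for a Poisson parameter λ estimated by the mean of 3 realizations at 68% CL. The λ grid, the number of pseudo-experiments, the seed and the observed value are arbitrary choices for this illustration, and the inversion is done on the grid rather than by interpolating the two curves.

```python
import math
import random

def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for small lam."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

def belt(lam_values, n_obs=3, n_pseudo=2000, cl=0.68, seed=1):
    """For each true lam, return (lam, Xi, Omega): lower and upper quantiles of the estimator."""
    rng = random.Random(seed)
    tail = (1.0 - cl) / 2.0
    curves = []
    for lam in lam_values:
        est = sorted(
            sum(poisson_draw(lam, rng) for _ in range(n_obs)) / n_obs
            for _ in range(n_pseudo)
        )
        xi = est[int(tail * n_pseudo)]
        omega = est[int((1.0 - tail) * n_pseudo) - 1]
        curves.append((lam, xi, omega))
    return curves

def interval(curves, theta_hat):
    """Invert the belt: keep the true values whose band [Xi, Omega] contains theta_hat."""
    accepted = [lam for lam, xi, omega in curves if xi <= theta_hat <= omega]
    return (min(accepted), max(accepted)) if accepted else None

lam_grid = [0.5 * i for i in range(1, 25)]   # scan lam from 0.5 to 12
curves = belt(lam_grid)
result = interval(curves, theta_hat=4.0)     # hypothetical observed mean of 3 counts
print(result)
```

Because the estimator is discrete, the band usually covers a bit more than 68% for each λ, so the resulting interval is conservative.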

Dealing with systematics

The variance of the estimator only measures the statistical uncertainty.

Often, we will have to deal with some parameters whose values are known with limited precision : systematic uncertainties

The likelihood function becomes :

$$L(\theta, \nu), \qquad \nu = \nu_0 \pm \Delta\nu \quad\text{or}\quad \nu = \nu_0\,^{+\Delta\nu}_{-\Delta\nu}$$

The known parameters ν are nuisance parameters

Bayesian inference

In Bayesian statistics, nuisance parameters are dealt with by assigning them a prior π(ν).

Usually a multinormal law is used with mean ν0 and covariance matrix estimated from Δν (+ correlations, if needed)

$$f(\theta, \nu | x) = \frac{f(x|\theta,\nu)\,\pi(\theta)\,\pi(\nu)}{\iint f(x|\theta,\nu)\,\pi(\theta)\,\pi(\nu)\,d\theta\,d\nu}$$

The final posterior is obtained by marginalization over the nuisance parameters

$$f(\theta | x) = \int f(\theta, \nu | x)\,d\nu = \frac{\int f(x|\theta,\nu)\,\pi(\theta)\,\pi(\nu)\,d\nu}{\iint f(x|\theta,\nu)\,\pi(\theta)\,\pi(\nu)\,d\theta\,d\nu}$$

Profile Likelihood

No true frequentist way to add systematic effects. Popular method of the day : profiling

Deal with nuisance parameters as realizations of random variables : extend the likelihood : $L(\theta, \nu) \to L'(\theta, \nu)\,G(\nu)$

G(ν) is the likelihood of the new parameters (identical to the prior)

For each value of θ, maximize the likelihood with respect to the nuisance parameters : profile likelihood PL(θ).

PL(θ) has the same asymptotic statistical properties as the regular likelihood

Non parametric estimation

Directly estimating the probability density function

• Likelihood ratio discriminant

• Separating power of variables

• Data/MC agreement

• …

Frequency Table : For a sample {xi} , i=1..n

1. Define successive intervals (bins) $C_k = [a_k, a_{k+1}[$

2. Count the number of events $n_k$ in $C_k$

Histogram : Graphical representation of the frequency table

$$h(x) = n_k \;\text{ if }\; x \in C_k$$

Histogram

N/Z for stable heavy nuclei

1.321, 1.464, 1.448, 1.433, 1.403, 1.375, 1.500, 1.454, 1.441, 1.428,
1.464, 1.486, 1.472, 1.447, 1.480, 1.512, 1.512, 1.512, 1.586, 1.357,
1.421, 1.389, 1.466, 1.419, 1.406, 1.446, 1.469, 1.455, 1.442, 1.478,
1.500, 1.486, 1.460, 1.506, 1.538, 1.525, 1.524, 1.586, 1.392, 1.438,
1.366, 1.500, 1.451, 1.421, 1.363, 1.484, 1.470, 1.457, 1.416, 1.465,
1.513, 1.473, 1.435, 1.493, 1.550, 1.536, 1.410, 1.344, 1.383, 1.322,
1.483, 1.437, 1.393, 1.462, 1.500, 1.471, 1.444, 1.479, 1.466, 1.486,
1.461, 1.450, 1.506, 1.518, 1.428, 1.379, 1.400, 1.370, 1.396, 1.453,
1.424, 1.382, 1.449, 1.485, 1.458, 1.432, 1.493, 1.500, 1.487, 1.475,
1.530, 1.577, 1.446, 1.413, 1.416, 1.387, 1.428, 1.468, 1.439, 1.411,
1.400, 1.514, 1.472, 1.459, 1.421, 1.526, 1.500, 1.500, 1.487, 1.554

Histogram

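Statistical description : the nk are multinomial random variables, with parameters :

$$n = \sum_k n_k, \qquad p_k = P(x \in C_k) = \int_{C_k} f_X(x)\,dx$$

$$\mu_{n_k} = n p_k, \qquad \sigma^2_{n_k} = n p_k (1 - p_k) \underset{p_k \ll 1}{\approx} \mu_{n_k}, \qquad \mathrm{Cov}(n_k, n_r) = -n p_k p_r \underset{p_k \ll 1}{\approx} 0$$

For a large sample :

$$\lim_{n\to\infty} \frac{n_k}{n} = \frac{\mu_{n_k}}{n} = p_k$$

For small classes (width δ) :

$$p_k = \int_{C_k} f_X(x)\,dx \approx \delta f(x_c) \;\Rightarrow\; f(x) = \lim_{\delta\to 0} \frac{p_k}{\delta}$$

So finally :

$$f(x) = \lim_{n\to\infty,\;\delta\to 0} \frac{1}{n\delta}\,h(x)$$

The histogram is an estimator of the probability density

Each bin can be described by a Poisson density.

The 1σ error on nk is then :

$$\Delta n_k = \sqrt{\hat\sigma^2_{n_k}} = \sqrt{\hat\mu_{n_k}} = \sqrt{n_k}$$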
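As a sketch of the histogram used as a density estimator, the snippet below fills equal-width bins and converts the counts nk into f ≈ nk/(nδ) with a Poisson error √nk/(nδ). The function names, the binning and the short sample are hypothetical choices for illustration.

```python
import math

def histogram(sample, lo, hi, n_bins):
    """Count entries in n_bins equal-width bins [a_k, a_{k+1}) spanning [lo, hi)."""
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for x in sample:
        if lo <= x < hi:
            # min() guards against rounding pushing a value just below hi past the last bin.
            counts[min(int((x - lo) / width), n_bins - 1)] += 1
    return counts, width

def density_estimate(counts, width, n_total):
    """Return [(f_k, df_k)] with f_k = n_k/(n*delta) and Poisson error sqrt(n_k)/(n*delta)."""
    norm = n_total * width
    return [(nk / norm, math.sqrt(nk) / norm) for nk in counts]

# Short hypothetical sample, for illustration only.
sample = [1.32, 1.46, 1.45, 1.43, 1.41, 1.38, 1.52, 1.45, 1.44, 1.43]
counts, width = histogram(sample, 1.3, 1.6, 3)
density = density_estimate(counts, width, len(sample))
for k, (f, df) in enumerate(density):
    print(f"bin {k}: n_k = {counts[k]}, f = {f:.2f} +/- {df:.2f}")
```

Values lying exactly on a bin edge can land on either side because of floating-point rounding, so edges are best chosen away from the recorded precision of the data.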

Kernel density estimators

Histogram is a step function -> sometimes a smoother estimator is needed

One possible solution : Kernel Density Estimator

Attribute to each point of the sample a “kernel” function k(u)

$$u = \frac{x - x_i}{w}, \qquad k(u) = k(-u), \qquad \int k(u)\,du = 1$$

Triangle kernel : $k(u) = 1 - |u|$, for $-1 < u < 1$

Parabolic kernel : $k(u) = \frac{3}{4}(1 - u^2)$, for $-1 < u < 1$

Gaussian kernel : $k(u) = \frac{1}{\sqrt{2\pi}}\,e^{-\frac{u^2}{2}}$

…

w = kernel width, similar to bin width of the histogram

The pdf estimator is :

$$K(x) = \frac{1}{nw}\sum_i k(u_i) = \frac{1}{nw}\sum_i k\left(\frac{x - x_i}{w}\right)$$

Rem : for multidimensional pdf :

$$u_i^2 = \sum_k \left(\frac{x^{(k)} - x_i^{(k)}}{w^{(k)}}\right)^2$$

Kernel density estimators

If the estimated density is normal, the optimal width is :

$$w = \sigma\left(\frac{4}{(d+2)\,n}\right)^{\frac{1}{d+4}}$$

with n the sample size and d the dimension

As for the histogram binning, no generic result : try and see
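A one-dimensional Gaussian kernel estimator can be sketched as below. Two things are assumptions of this sketch rather than statements from the slides: the width follows the normal-reference rule w = σ(4/((d+2)n))^(1/(d+4)), and the estimator carries a 1/(n·w) factor so that it integrates to 1 over x. The sample is hypothetical.

```python
import math

def optimal_width(sample, d=1):
    """Normal-reference kernel width (assumed rule of thumb) for a d-dimensional sample."""
    n = len(sample)
    mean = sum(sample) / n
    sigma = math.sqrt(sum((x - mean) ** 2 for x in sample) / (n - 1))
    return sigma * (4.0 / ((d + 2) * n)) ** (1.0 / (d + 4))

def gaussian_kernel(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)

def kde(x, sample, w):
    """K(x) = 1/(n w) * sum_i k((x - x_i)/w); the 1/w keeps the integral over x equal to 1."""
    return sum(gaussian_kernel((x - xi) / w) for xi in sample) / (len(sample) * w)

# Short hypothetical sample, for illustration only.
sample = [1.32, 1.46, 1.45, 1.43, 1.41, 1.38, 1.52, 1.45, 1.44, 1.43]
w = optimal_width(sample)
for x in (1.35, 1.45, 1.55):
    print(f"K({x}) = {kde(x, sample, w):.3f}")
```

Trying a few widths around the rule-of-thumb value and looking at the result is in the spirit of "try and see".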

Statistical Tests

Statistical tests aim at:

• Checking the compatibility of a dataset {xi} with

a given distribution

• Checking the compatibility of two datasets {xi}, {yi} : do they come from the same distribution ?

• Comparing different hypotheses : background vs signal+background

In every case :

• build a statistic that quantifies the agreement with the hypothesis

• convert it into a probability of compatibility/incompatibility : p-value

Pearson test

Test for binned data : use the Poisson limit of the histogram

• Sort the sample into k bins Ci, with ni entries each

• Compute the probability of this class : $p_i = \int_{C_i} f(x)\,dx$

• The test statistic compares, for each bin, the deviation of the observation from the expected mean to the theoretical standard deviation.

$$\chi^2 = \sum_{\text{bins } i} \frac{(n_i - n p_i)^2}{n p_i}$$

($n_i$ : data ; $n p_i$ in the numerator : Poisson mean ; $n p_i$ in the denominator : Poisson variance)

Then χ² follows (asymptotically) a chi-square law with k-1 degrees of freedom (1 constraint ∑ni = n)

p-value : probability of doing worse,

$$\text{p-value} = \int_{\chi^2}^{+\infty} f_{\chi^2}(x; k-1)\,dx$$

For a “good” agreement χ²/(k-1) ~ 1

More precisely $\chi^2 \in (k-1) \pm \sqrt{2(k-1)}$ (1σ interval ~ 68% CL)
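The Pearson statistic and its p-value can be sketched with the standard library alone. The chi-square tail probability below uses the closed forms for integer degrees of freedom (an exponential series for even ndf, erfc plus a finite sum for odd ndf); the function names and the bin contents are hypothetical.

```python
import math

def chi2_sf(x, ndf):
    """Upper tail probability P(chi2 > x) for an integer number of degrees of freedom."""
    if x <= 0.0:
        return 1.0
    half = 0.5 * x
    if ndf % 2 == 0:
        term = math.exp(-half)
        total = term
        for j in range(1, ndf // 2):
            term *= half / j
            total += term
        return total
    total = math.erfc(math.sqrt(half))
    term = math.sqrt(half) * math.exp(-half) / math.gamma(1.5)
    for nu in range(1, ndf, 2):          # nu = 1, 3, ..., ndf - 2
        total += term
        term *= half / (nu / 2.0 + 1.0)
    return total

def pearson_test(counts, probs):
    """Return (chi2, ndf, p_value) for observed bin counts and expected bin probabilities."""
    n = sum(counts)
    chi2 = sum((ni - n * pi) ** 2 / (n * pi) for ni, pi in zip(counts, probs))
    ndf = len(counts) - 1                # one constraint: sum of n_i = n
    return chi2, ndf, chi2_sf(chi2, ndf)

# Hypothetical histogram tested against a uniform distribution over 4 bins.
counts = [18, 22, 25, 35]
probs = [0.25, 0.25, 0.25, 0.25]
chi2, ndf, p_value = pearson_test(counts, probs)
print(f"chi2 = {chi2:.2f} for {ndf} dof, p-value = {p_value:.3f}")
```

The chi-square law is only asymptotic, so bins with very few expected entries are usually merged before applying the test.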

Kolmogorov-Smirnov test

Test for unbinned data : compare the sample cumulative distribution function to the tested one

Sample pdf (ordered sample) :

$$f_S(x) = \frac{1}{n}\sum_i \delta(x - x_i) \;\Rightarrow\; F_S(x) = \begin{cases} 0 & x < x_0 \\ \frac{k}{n} & x_k \le x < x_{k+1} \\ 1 & x > x_n \end{cases}$$

The Kolmogorov statistic is the largest deviation :

$$D_n = \sup_x \left|F_S(x) - F(x)\right|$$

The test distribution has been computed by Kolmogorov :

$$P(D_n > \beta) = 2\sum_{r \ge 1} (-1)^{r-1}\,e^{-2 r^2 z^2}, \qquad z = \beta\sqrt{n}$$

[0;β] defines a confidence interval for Dn

β = 0.9584/√n for 68.3% CL

β = 1.3754/√n for 95.4% CL

Example

Test compatibility with an exponential law : $f(x) = \lambda e^{-\lambda x}$, $\lambda = 0.4$

0.008, 0.036, 0.112, 0.115, 0.133, 0.178, 0.189, 0.238, 0.274, 0.323, 0.364, 0.386, 0.406, 0.409, 0.418, 0.421,

0.423, 0.455, 0.459, 0.496, 0.519, 0.522, 0.534, 0.582, 0.606, 0.624, 0.649, 0.687, 0.689, 0.764, 0.768, 0.774,

0.825, 0.843, 0.921, 0.987, 0.992, 1.003, 1.004, 1.015, 1.034, 1.064, 1.112, 1.159, 1.163, 1.208, 1.253, 1.287,

1.317, 1.320, 1.333, 1.412, 1.421, 1.438, 1.574, 1.719, 1.769, 1.830, 1.853, 1.930, 2.041, 2.053, 2.119, 2.146,

2.167, 2.237, 2.243, 2.249, 2.318, 2.325, 2.349, 2.372, 2.465, 2.497, 2.553, 2.562, 2.616, 2.739, 2.851, 3.029,

3.327, 3.335, 3.390, 3.447, 3.473, 3.568, 3.627, 3.718, 3.720, 3.814, 3.854, 3.929, 4.038, 4.065, 4.089, 4.177,

4.357, 4.403, 4.514, 4.771, 4.809, 4.827, 5.086, 5.191, 5.928, 5.952, 5.968, 6.222, 6.556, 6.670, 7.673, 8.071,

8.165, 8.181, 8.383, 8.557, 8.606, 9.032, 10.482, 14.174

Dn = 0.069

p-value = 0.617

1σ : [0, 0.0875]
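The Kolmogorov-Smirnov test can be sketched in a few lines: the statistic is evaluated just below and at each ordered sample point, and the p-value uses Kolmogorov's asymptotic series with z = Dn·√n. The function names, the number of series terms and the short sample are hypothetical; the exponential law with λ = 0.4 is the one tested in the example.

```python
import math

def ks_statistic(sample, cdf):
    """D_n = sup_x |F_S(x) - F(x)|, checked just below and at each sample point."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for k, x in enumerate(xs):
        f = cdf(x)
        d = max(d, abs(f - k / n), abs((k + 1) / n - f))
    return d

def kolmogorov_pvalue(d, n, terms=100):
    """Asymptotic P(D_n > d) = 2 sum_{r>=1} (-1)^(r-1) exp(-2 r^2 z^2), with z = d sqrt(n)."""
    z = d * math.sqrt(n)
    s = sum((-1) ** (r - 1) * math.exp(-2.0 * r * r * z * z) for r in range(1, terms + 1))
    return min(1.0, max(0.0, 2.0 * s))

lam = 0.4

def exp_cdf(x):
    return 1.0 - math.exp(-lam * x)

# Short hypothetical sample, for illustration only.
sample = [0.3, 1.1, 2.0, 2.6, 4.9, 7.5]
d_n = ks_statistic(sample, exp_cdf)
print(f"D_n = {d_n:.3f}, p-value = {kolmogorov_pvalue(d_n, len(sample)):.3f}")
```

The series is an asymptotic result, so for a sample as small as this one the p-value is only indicative.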

Hypothesis testing

Two exclusive hypotheses H0 and H1

- which one is the most compatible with the data ?

- how incompatible is the other one ?

P(data|H0) vs P(data|H1)

Build a statistic, define an interval w

- if the observation falls into w : accept H1

- else accept H0

Size of the test : how often you wrongly accept H1 when H0 is true

$$\alpha = \int_w L(x|H_0)\,dx$$

Power of the test : how often you rightly accept H1 when H1 is true

$$1 - \beta = \int_w L(x|H_1)\,dx$$

Neyman-Pearson lemma : the optimal statistic for testing hypotheses is the likelihood ratio

$$\lambda = \frac{L(x|H_0)}{L(x|H_1)} < k_\alpha$$

CLb and CLs

Two hypotheses, for a counting experiment

- background only : expect 10 events

- signal + background : expect 15 events

You observe 16 events

CLb = confidence in the background hypothesis (power of the test)

CLb = 1 - 0.049

Discovery : 1 - CLb < 5.7×10⁻⁷

CLs+b = confidence in the signal+background hypothesis (size of the test)

CLs+b = 0.663

Rejection : CLs+b < 5×10⁻²

Test for signal (non standard)

CLs = CLs+b/CLb
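The counting example can be reproduced approximately with Poisson tail sums. The conventions below are assumptions of this sketch: 1 - CLb is taken as P(N ≥ n_obs | b) and CLs+b as P(N ≤ n_obs | s+b), which gives values close to the ones quoted above; other conventions for the boundary term shift the numbers slightly.

```python
import math

def poisson_cdf(n, mu):
    """P(N <= n) for a Poisson variable of mean mu."""
    term = math.exp(-mu)
    total = term
    for k in range(1, n + 1):
        term *= mu / k
        total += term
    return total

b, s, n_obs = 10.0, 5.0, 16   # expected background, expected signal, observed count

one_minus_clb = 1.0 - poisson_cdf(n_obs - 1, b)   # P(N >= n_obs | background only)
cl_b = 1.0 - one_minus_clb
cl_sb = poisson_cdf(n_obs, s + b)                 # P(N <= n_obs | signal + background)
cl_s = cl_sb / cl_b

print(f"1 - CLb = {one_minus_clb:.3f}")
print(f"CLs+b   = {cl_sb:.3f}")
print(f"CLs     = {cl_s:.3f}")
```

With these numbers neither the discovery threshold (1 - CLb < 5.7×10⁻⁷) nor the rejection threshold (CLs+b < 5×10⁻²) is reached.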