Now What

• Last class introduced statistical concepts.

• How do we actually implement these ideas and get some results?

• Goal: introduce you to what’s out there and to the things you need to be conscious of

• Familiarity with terms (understand papers)

Experiment: IV= smartness drug; DV= IQ

Experimental group 1 scores: mean = 115

Experimental group 2 scores: mean = 102

Control group scores: mean = 100

It looks different, but how different IS different?

T tests

Inferential test to decide if there is a real (significant) difference between the means of 2 data sets.

In other words, do our 2 groups of people (experimental and control) represent 2 different POPULATIONS of people, or not?

Hypothesis Testing (review)

• The steps of hypothesis testing are:

1. Restate the question as a research hypothesis and a null hypothesis about the populations.

ex) Does drug A make people super smart?

Null hypothesis (assumed to be true): Drug A has no effect on people’s intelligence.

H0: µ1 = µ2

• 2. Figure out what the comparison distribution looks like (your control group, usually)

3. Decide on the Type I error rate (alpha level)

• Determine the cutoff sample score on the comparison distribution at which the null should be rejected

• Typically 5% or 1%

• 4. Determine where your sample falls on the comparison distribution

• 5. Reject or retain the null hypothesis
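The five steps can be sketched in Python for the simplest case, a one-tailed z test of a sample against a known population. This is a minimal illustration, not the SPSS procedure: it assumes the population SD is known (an SD of 15 for IQ is an assumption here), and the sample mean of 106 with n = 25 is hypothetical.

```python
from statistics import NormalDist


def z_test_upper(sample_mean, pop_mean, pop_sd, n, alpha=0.05):
    """One-tailed (upper) z test following the five steps of hypothesis testing.

    Assumes the population SD is known, so the comparison distribution
    (the distribution of sample means under the null) is normal.
    """
    # Step 1 (done by the caller): H0 says the sample comes from a
    # population whose mean is pop_mean.
    # Step 2: comparison distribution = distribution of means under H0.
    standard_error = pop_sd / n ** 0.5
    # Step 3: cutoff z score for the chosen alpha level (upper tail).
    cutoff_z = NormalDist().inv_cdf(1 - alpha)
    # Step 4: where does our sample fall on the comparison distribution?
    z = (sample_mean - pop_mean) / standard_error
    p_value = 1 - NormalDist().cdf(z)
    # Step 5: reject H0 if the sample lies beyond the cutoff.
    reject = z > cutoff_z
    return z, cutoff_z, p_value, reject


# Hypothetical numbers: IQ population mean 100, assumed SD 15,
# a sample of 25 people with mean 106.
z, cutoff_z, p_value, reject = z_test_upper(106, 100, 15, 25)
print(f"z = {z:.2f}, cutoff = {cutoff_z:.3f}, p = {p_value:.4f}, reject H0: {reject}")
```

The t tests later in the lecture follow the same five steps; the difference is that they estimate the SD from the sample instead of assuming it is known.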

Backbone of inferential stats

• Idea of assuming the null and rejecting it if your results would happen less than 5% of the time if the null were true

• Underlies any “p” value or significance test you’ll ever see

Stats packages

• MATLAB, Stata, SAS, R, JMP, SPSS

• Different ones are more popular in different fields

• I will reference SPSS (popular in psychology)

Descriptive statistics

• Start off getting familiar with your data

• Analyze descriptive statistics

• Means, quartiles, outliers, plots, frequencies

T tests: NOT interchangeable

• 1-sample t test

Use this if you know the population mean (or you have hypothesized a population mean) and you want to know if your sample belongs to this population.

Ex: the IQ population mean is 100; is my sample different?

Or, I have a theory that everyone is 6 feet tall. I can take a sample of people and see if this is true.

1 sample t test

• Analyze → Compare Means → One-Sample T Test

Test Variable = the variable you want to compare against the hypothesized population mean

Test Value = the hypothesized population mean (default is 0)

Reading output

• Is my variable ‘caldif’ significantly different from the null population mean of zero?

One-Sample Statistics
          N    Mean     Std. Deviation   Std. Error Mean
caldif    67   -.1090   1.80174          .22012

One-Sample Test (Test Value = 0)
          t       df   Sig. (2-tailed)   Mean Difference   95% CI of the Difference (Lower, Upper)
caldif    -.495   66   .622              -.10896           -.5484, .3305

Sig. (2-tailed) = .622 is well above .05, and the 95% CI includes 0, so no: we retain the null.
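The numbers in this output hang together in a simple way: SE = SD/√N, t = (mean − test value)/SE with N − 1 df, and the CI is the mean difference ± a critical t times SE. The sketch below recomputes them from the summary statistics. The critical value 1.997 for 66 df is an assumption read from a t table, so the CI matches SPSS only to within that rounding.

```python
import math

# Summary numbers taken from the One-Sample Statistics table for caldif.
n = 67
sample_mean = -0.10896   # the unrounded mean difference from the output
sample_sd = 1.80174
test_value = 0.0          # hypothesized population mean

# Standard error of the mean: SD / sqrt(N)
std_error = sample_sd / math.sqrt(n)
# t = (sample mean - hypothesized mean) / standard error, with N - 1 df
t_stat = (sample_mean - test_value) / std_error
df = n - 1

# Assumption: two-tailed .05 critical t for 66 df, read from a t table.
T_CRIT_66 = 1.997
ci_lower = (sample_mean - test_value) - T_CRIT_66 * std_error
ci_upper = (sample_mean - test_value) + T_CRIT_66 * std_error

print(f"SE = {std_error:.5f}, t({df}) = {t_stat:.3f}, 95% CI = [{ci_lower:.4f}, {ci_upper:.4f}]")
```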

Independent Samples T Test

Compares the mean scores of two groups on a given variable

Ex: is the IQ score different for my control and experimental groups?

Is the mean height different for men and women?

Independent Samples T Test

• Analyze → Compare Means → Independent-Samples T Test

Test Variable = the dependent variable (IQ, height)

Grouping Variable = the variable that differentiates the 2 groups you’re comparing. For example, you have a variable called sex whose values can be 1 or 2, corresponding to male and female.
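Under the “equal variances assumed” version of the test, the two sample variances are pooled and t is the difference in means divided by the standard error of that difference, with n1 + n2 − 2 df. A minimal sketch in plain Python (not SPSS’s implementation); for illustration it borrows two of the online-environment groups from the ANOVA example and treats them as if they came from a two-group study.

```python
import math
from statistics import mean, variance


def independent_t(group_a, group_b):
    """Student's independent-samples t test (equal variances assumed).

    Returns (t, df). Uses the pooled variance of the two groups.
    """
    n_a, n_b = len(group_a), len(group_b)
    # Pooled variance: each sample variance weighted by its df.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    # Standard error of the difference between the two means.
    se_diff = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / se_diff
    return t, n_a + n_b - 2


# Illustration only: two groups treated as a two-group study.
env1 = [9, 7, 8, 9]
env3 = [8, 7, 9, 7]
t, df = independent_t(env1, env3)
print(f"t({df}) = {t:.3f}")
```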

Paired samples t test

• Compares the means of 2 variables; tests whether the average difference is significantly different from zero

• Use when the scores are not independent of each other (e.g., scores from the same subjects before and after some intervention)

• Ask yourself: are all the data points randomly selected, or is the second sample paired to the first?

Ex: before using your device, the subjects’ mean happiness score was 100; afterwards it’s 102. Is this average difference of 2 significantly different from no difference at all?
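A paired test is just a one-sample t test on the difference scores, tested against 0. The sketch below assumes hypothetical before/after scores for 5 subjects, chosen so the means are 100 and 102 as in the example.

```python
import math
from statistics import mean, stdev


def paired_t(before, after):
    """Paired-samples t test: a one-sample t test on the differences vs. 0."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / se, n - 1


# Hypothetical happiness scores for 5 subjects (means 100 before, 102 after).
before = [98, 101, 100, 99, 102]
after = [100, 104, 101, 101, 104]
t, df = paired_t(before, after)
print(f"mean difference = {mean(after) - mean(before)}, t({df}) = {t:.2f}")
```

Because each subject is compared with themselves, between-subject variability drops out of the standard error, which is why repeated-measures designs tend to have more power.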

Paired (dependent) samples T test

• Analyze → Compare Means → Paired-Samples T Test

Select the pair of variables, e.g. HappinessScoreBefore and HappinessScoreAfter

Which T test?

• Is there a change in children’s understanding of algebra before and after using a learning program?

• The average IQ in America is 100. Are MIT students different from this?

• Which deodorant is better? Each subject gets each brand, one on each arm.

• Which shoes lead to faster running? One sibling gets type A, the other type B.

• Which remote control do people learn to use faster? We randomly select subjects from the population.

• You will have more power with a repeated measures design, but sometimes (often) there are reasons you can’t design your study that way:

- order effects (learning, long-lasting intervention)

- ‘demand’ effects

Important assumptions for inferential statistics

• 1. Homogeneity of variance

check and correct if necessary (e.g., Levene test; Welch procedure)

• 2. Normal distribution

check and correct if necessary (e.g., log or square transformation of the data)

• 3. Random sample of the population

vital! Or else be clear about what population you’re really learning about

• 4. Independence of samples

vital! Knowing the score for one subject gives you no specific hints about how another will score
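The Welch procedure mentioned under assumption 1 is the usual fix when variances differ: t uses each group’s own variance, and the df are adjusted with the Welch-Satterthwaite approximation. A minimal sketch with hypothetical data; Levene’s test itself is not shown.

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t test: does not assume equal variances.

    Returns (t, df) where df is the Welch-Satterthwaite approximation.
    """
    # Squared standard error of each group's mean.
    se2_a = variance(group_a) / len(group_a)
    se2_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(group_a) - 1) + se2_b ** 2 / (len(group_b) - 1)
    )
    return t, df


# Hypothetical data: group b is far more variable than group a.
a = [10, 12, 11, 13, 9, 11]
b = [5, 15, 2, 20, 8, 12]
t, df = welch_t(a, b)
print(f"Welch t = {t:.3f}, df = {df:.2f}")  # df falls below the pooled 10
```

When the two groups have equal sizes and equal variances, the Welch df equal the pooled n1 + n2 − 2; the more unequal the variances, the further the df drop, making the test more conservative.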

Anova

• T tests are for when you have only 1 or 2 groups. For more, use the ANOVA model.

• Basic method: compares the variance between groups to the variance within groups

• Is this ratio (the ‘F ratio’) significantly > 1?

1 way anova

• Compare means from multiple groups

What is the effect of three different online learning environments on students’ ‘interest’ scores?

Three different groups (N = 12)

                   OnlineEnvironment1   OnlineEnvironment2   OnlineEnvironment3
                   9                    2                    8
                   7                    3                    7
                   8                    1                    9
                   9                    1                    7
Treatment means    8.25                 1.75                 7.75

Overall mean (‘grand mean’) = 5.92

1 way anova

• The basic model is that

An individual score = overall mean + effect of treatment (group mean - overall mean) + error

Total variation (SS total) = variation between groups (SS between) + variation within groups (SS within, the error term)

‘sum of squares’


SS total = (9-5.92)^2 + (7-5.92)^2 + (8-5.92)^2 … + (7-5.92)^2 = 112.92

SS between = 4 × [(8.25-5.92)^2 + (1.75-5.92)^2 + (7.75-5.92)^2] = 104.67 (each group mean’s squared deviation counts once for each of the 4 people in that group)

SS within = (9-8.25)^2 + (7-8.25)^2 … + (7-7.75)^2 = 8.25

Check: SS between + SS within = 104.67 + 8.25 = 112.92 = SS total

Mean squares

You get the average sum of squares, or mean square, by dividing a sum of squares by its degrees of freedom (a measure of the number of independent pieces of information)

• Df between = J-1 (groups - 1) = 3 - 1 = 2

• Df within = N-J (total people - groups) = 12 - 3 = 9

• So, MS between = 104.67/2 = 52.33

• MS within = 8.25/9 = .917

F ratio

• MS between/MS within

• Signal/noise ratio

• 52.33/.917 ≈ 57.1

• If there is no effect, you’d expect a ratio of about 1

• A ratio of 57 seems strong. Check it with an F table for 2 and 9 degrees of freedom (same principle as with the t test earlier!)
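The sums of squares, mean squares and F ratio for the three online-environment groups can be recomputed directly. This is a sketch in plain Python (not SPSS); the group labels match the slide. SS between weights each group’s squared deviation by its group size, and SS between + SS within must equal SS total, which is a handy check on hand calculations.

```python
from statistics import mean

# Interest scores for the three online environments (from the slide).
groups = {
    "OnlineEnvironment1": [9, 7, 8, 9],
    "OnlineEnvironment2": [2, 3, 1, 1],
    "OnlineEnvironment3": [8, 7, 9, 7],
}

scores = [x for g in groups.values() for x in g]
grand_mean = mean(scores)
J, N = len(groups), len(scores)

# Total: every score vs. the grand mean.
ss_total = sum((x - grand_mean) ** 2 for x in scores)
# Between: each group mean vs. the grand mean, once per person in the group.
ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())
# Within: every score vs. its own group mean (the error term).
ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)

ms_between = ss_between / (J - 1)
ms_within = ss_within / (N - J)
f_ratio = ms_between / ms_within

print(f"SS total={ss_total:.2f} between={ss_between:.2f} within={ss_within:.2f}")
print(f"MS between={ms_between:.2f} within={ms_within:.3f} F({J - 1}, {N - J})={f_ratio:.2f}")
```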

Spss 1 way anova

• Analyze → General Linear Model → Univariate

Fixed vs random factors

• Fixed factor: the levels under study are the only levels of interest; you can’t generalize to other levels

• Random factor: the levels were drawn randomly from a population of possible levels, so you can generalize to that population

• Ex: do people from different countries like my new phone differently? Give the phone to people from Japan, India, and America.

2+ way anova

• Main effects

• Interaction effects

Testing gender and age (undergrad vs. senior citizen); DV = engagedness with a robot

You get 3 overall effects:

- effect of gender on engagedness

- effect of age on engagedness

- interaction of gender and age on engagedness (does the effect of gender depend on age? / does the effect of age depend on gender?)

Reading the output

Tests of Between-Subjects Effects
Dependent Variable: ANXIETY

Source            Type III Sum of Squares   df   Mean Square   F         Sig.   Partial Eta Squared
Corrected Model   944.708a                  5    188.942       22.787    .000   .864
Intercept         3927.042                  1    3927.042      473.613   .000   .963
CHLDREAR          826.583                   2    413.292       49.844    .000   .847
SEX               100.042                   1    100.042       12.065    .003   .401
CHLDREAR * SEX    18.083                    2    9.042         1.090     .357   .108
Error             149.250                   18   8.292
Total             5021.000                  24
Corrected Total   1093.958                  23

a. R Squared = .864 (Adjusted R Squared = .826)
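Each F in this table is that row’s mean square divided by the Error mean square, on the row’s df and the error df (18). The sketch below re-derives the F values from the SS and df columns; it is only an arithmetic check, using numbers copied from the output.

```python
# Values copied from the Tests of Between-Subjects Effects table.
SS_ERROR, DF_ERROR = 149.250, 18
ms_error = SS_ERROR / DF_ERROR

effects = {
    "CHLDREAR": (826.583, 2),
    "SEX": (100.042, 1),
    "CHLDREAR * SEX": (18.083, 2),
}

f_ratios = {}
for name, (ss, df) in effects.items():
    mean_square = ss / df                    # MS = SS / df
    f_ratios[name] = mean_square / ms_error  # F = MS effect / MS error
    print(f"{name:15s} MS = {mean_square:8.3f}  F = {f_ratios[name]:.3f}")
```

Reading the Sig. column the same way: CHLDREAR and SEX are significant main effects, while the interaction (Sig. = .357) is not.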

contrasts


The main-effect (‘omnibus’) test tells you that something is going on here, that there is some difference somewhere, but doesn’t tell you what.

Is group 1 different from group 3?

Are groups 1 and 3 together different from group 2?

Spss contrasts

Contrast coefficients (must add to zero): 1, 0, -1 (group 1 vs. group 3)

.5, -1, .5 (groups 1 and 3 together vs. group 2)

“Name brand” contrasts: polynomial, etc.
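A contrast is a weighted sum of the group means. Its sum of squares is the squared contrast value divided by Σc²/n, and its F (1 numerator df) uses MS within as the error term. This sketch assumes equal group sizes, as in the online-environment example, and uses plain Python rather than SPSS’s contrast options.

```python
from statistics import mean

groups = [[9, 7, 8, 9], [2, 3, 1, 1], [8, 7, 9, 7]]  # environments 1, 2, 3
n = len(groups[0])                                     # all groups have the same size
group_means = [mean(g) for g in groups]
df_within = sum(len(g) for g in groups) - len(groups)  # N - J
ms_within = sum((x - mean(g)) ** 2 for g in groups for x in g) / df_within


def contrast(coefficients):
    """Return (contrast value, SS contrast, F with 1 and N - J df)."""
    if abs(sum(coefficients)) > 1e-12:
        raise ValueError("contrast coefficients must sum to zero")
    value = sum(c * m for c, m in zip(coefficients, group_means))
    ss = value ** 2 / sum(c ** 2 / n for c in coefficients)
    return value, ss, ss / ms_within


for coeffs in ([1, 0, -1], [0.5, -1, 0.5]):
    value, ss, f = contrast(coeffs)
    print(f"{coeffs}: value = {value:.2f}, SS = {ss:.2f}, F(1, {df_within}) = {f:.2f}")
```

These two contrasts are orthogonal (the products of their coefficients sum to zero), so their sums of squares add up to SS between: group 2 differs sharply from groups 1 and 3, while groups 1 and 3 barely differ.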

Omnibus v contrasts

• A significant omnibus test means there will be at least 1 significant contrast, but

• A nonsignificant omnibus test DOESN’T necessarily mean there are no significant contrasts

A Priori vs Post-hoc

• A priori (planned) = theory driven; you planned to test this before you saw your data

• Post hoc = exploratory, data driven

• When doing post-hoc contrasts you must be especially careful about Type I error.

Planned v post-hoc

• Familywise error: take this SERIOUSLY

• With an alpha (Type I error rate) of .05, if the null is true for every test and the tests are independent, you can expect:

1 test: 5% chance you’re wrong

5 tests: about a 23% chance at least one of them is wrong

20 tests: about a 64% chance at least one is wrong (on average, 1 of them will be)

• To keep overall error at 5%, if you are doing multiple contrasts you can do a “Bonferroni” correction, which just means you divide .05 by the number of contrasts

• Ex: 10 contrasts, and I want overall error to be 5%. So each contrast must meet a stricter cutoff: .05/10 = .005 (0.5%)
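The familywise numbers come from 1 − (1 − α)^k, the chance of at least one false positive across k independent tests when every null is true. A short sketch of that formula and of the Bonferroni cutoff:

```python
def familywise_error(alpha, k):
    """Chance of at least one Type I error across k independent tests
    when the null is true for all of them."""
    return 1 - (1 - alpha) ** k


def bonferroni_alpha(alpha, k):
    """Per-test cutoff that keeps the familywise error at or below alpha."""
    return alpha / k


for k in (1, 5, 20):
    print(f"{k:2d} tests: familywise error = {familywise_error(0.05, k):.1%}")
print(f"Bonferroni cutoff for 10 contrasts: {bonferroni_alpha(0.05, 10):.3f}")
```

Plugging the Bonferroni cutoff back in shows why it works: 10 tests at .005 give a familywise error just under .05. The correction is conservative, since it guards against the worst case.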

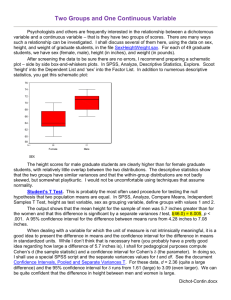

Correlation and Regression

• Correlation: linear relationship between X and Y (no assumptions about IV/DV)

• Regression: what is the best guess about Y given a certain value of X (X is the IV)

• Similar to the ANOVA model
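Both ideas can be computed from the same sums of cross-products. In this sketch the x and y scores are hypothetical; Pearson r is symmetric in X and Y, while the least-squares line treats X as the IV and gives the best guess of Y for each X.

```python
import math
from statistics import mean


def pearson_r(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def least_squares_line(x, y):
    """Best-fit line y = intercept + slope * x (ordinary least squares)."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


x = [1, 2, 3, 4, 5]  # hypothetical predictor (IV) scores
y = [2, 4, 5, 4, 5]  # hypothetical outcome (DV) scores
intercept, slope = least_squares_line(x, y)
print(f"r = {pearson_r(x, y):.3f}; best guess: y = {intercept:.2f} + {slope:.2f} * x")
```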

[Figure: best fit line to the data; racism as a function of political conservatism (x-axis: political conservatism)]

spss

• Analyze → Correlate → Bivariate

Correlations
                                 date    meandif
date      Pearson Correlation    1       .013
          Sig. (2-tailed)                .908
          N                      82      82
meandif   Pearson Correlation    .013    1
          Sig. (2-tailed)        .908
          N                      82      82

The 2 variables, date and meandif, have a Pearson correlation of .013, and the significance is .908 (i.e., not significant).
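The Sig. value for a correlation comes from converting r to a t statistic with n − 2 df. This sketch does that conversion and then, as a labeled approximation, uses the normal distribution instead of the t distribution for the p value; with 80 df the two are close, but an exact p needs a stats package.

```python
import math
from statistics import NormalDist


def r_to_t(r, n):
    """t statistic for testing whether a Pearson r differs from 0 (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


t = r_to_t(0.013, 82)
# Normal approximation to the two-tailed p value; with 80 df the t distribution
# is close to normal, but an exact p needs the t distribution.
p_approx = 2 * (1 - NormalDist().cdf(abs(t)))
print(f"t(80) = {t:.3f}, approximate two-tailed p = {p_approx:.3f}")
```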

• Analyze → Regression → Linear

ANOVA(b)
Model 1      Sum of Squares   df   Mean Square   F        Sig.
Regression   61.393           1    61.393        19.741   .000(a)
Residual     202.148          65   3.110
Total        263.542          66

a. Predictors: (Constant), maxvoltage
b. Dependent Variable: secondcal
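The regression ANOVA table has the same structure as the ANOVA model: F is MS regression / MS residual, and SS regression / SS total gives R², the proportion of variance in the DV explained by the predictor. R² is not printed in this output; the sketch derives it from the table values.

```python
# Values copied from the ANOVA table for the regression of secondcal on maxvoltage.
ss_regression, df_regression = 61.393, 1
ss_residual, df_residual = 202.148, 65
ss_total = 263.542

# Mean squares and F, exactly as in the one-way ANOVA
ms_regression = ss_regression / df_regression
ms_residual = ss_residual / df_residual
f_ratio = ms_regression / ms_residual
# R squared: share of total variance in secondcal explained by maxvoltage
r_squared = ss_regression / ss_total

print(f"MS residual = {ms_residual:.3f}, F(1, 65) = {f_ratio:.3f}, R^2 = {r_squared:.3f}")
```

So maxvoltage is a significant predictor, yet it explains only about a quarter of the variance in secondcal: significance and effect size are different questions.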

Effect size

• Review: a result can be significant even though the effect is tiny

• Knowing significance doesn’t tell you the strength of the effect

• Effect size = the amount by which the 2 populations don’t overlap

• Or the amount of total variance explained by your variable (sometimes)

d = (mean1 - mean2)/standard deviation
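d expresses a mean difference in standard-deviation units. The slide does not say which SD to use; this sketch assumes the common choice of the pooled SD of the two groups, and reuses the online-environment scores for illustration.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """d = (mean1 - mean2) / pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


env1, env2, env3 = [9, 7, 8, 9], [2, 3, 1, 1], [8, 7, 9, 7]
print(f"env1 vs env3: d = {cohens_d(env1, env3):.2f}")
print(f"env1 vs env2: d = {cohens_d(env1, env2):.2f}")
```

Unlike t, d does not grow with sample size, which is why it answers “how big?” rather than “is it real?”.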

Effect size

• There are different measures of effect size

• SPSS gives you ‘partial eta squared’:

variance associated with your variable / (variance associated with your variable + error variance)

Check box: tick the effect size option in SPSS to get it in the output
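In terms of sums of squares, partial eta squared is SS effect / (SS effect + SS error). Plugging in SS values copied from the two-way ANXIETY output reproduces its Partial Eta Squared column; this is an arithmetic sketch only.

```python
def partial_eta_squared(ss_effect, ss_error):
    """SS effect / (SS effect + SS error)."""
    return ss_effect / (ss_effect + ss_error)


SS_ERROR = 149.250  # Error row of the Tests of Between-Subjects Effects table
rows = {"CHLDREAR": 826.583, "SEX": 100.042, "CHLDREAR * SEX": 18.083}
for name, ss in rows.items():
    print(f"{name:15s} partial eta squared = {partial_eta_squared(ss, SS_ERROR):.3f}")
```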

Confidence intervals

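- If you repeated the experiment 100 times and computed a 95% confidence interval each time, about 95 of those intervals would contain the true mean

- In other words, the procedure captures the true mean 95% of the time; any single interval either contains it or doesn’t

- If a 95% CI for a difference includes 0, the difference is not significant at the .05 level (2-tailed); e.g., in the meandif output the CI runs from -2.64 to 11.81 and Sig. = .210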
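A quick simulation makes the “repeat the experiment” reading concrete: draw many samples from a population with a known true mean, build a 95% interval from each, and count how often the intervals capture it. The population values (mean 100, SD 15, n = 25) are hypothetical, and the SD is treated as known so each interval is a simple z interval.

```python
import math
import random


def ci_coverage(true_mean=100.0, sd=15.0, n=25, reps=2000, seed=1):
    """Fraction of simulated 95% CIs that contain the true mean.

    For simplicity the population SD is treated as known, so each interval
    is sample mean +/- 1.96 * sd / sqrt(n) (a z interval, not a t interval).
    """
    rng = random.Random(seed)
    half_width = 1.96 * sd / math.sqrt(n)
    hits = 0
    for _ in range(reps):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        m = sum(sample) / n
        if m - half_width <= true_mean <= m + half_width:
            hits += 1
    return hits / reps


print(f"coverage = {ci_coverage():.3f}")  # close to 0.95
```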

Independent Samples Test (variable: meandif)

Levene's Test for Equality of Variances: F = .002, Sig. = .964

t-test for Equality of Means
                              t       df       Sig. (2-tailed)   Mean Difference   Std. Error Difference   95% CI Lower   95% CI Upper
Equal variances assumed       1.262   80       .210              4.58285           3.63036                 -2.64180       11.80750
Equal variances not assumed   1.266   79.027   .209              4.58285           3.62026                 -2.62306       11.78876

Power calculation

• Tough to actually calculate unless you have an idea of the effect size (which you either assume or estimate from past research)

• Power is a matter of effect size and N

Ex: what is the effect of a new keyboard on typing speed?

Mean typing speed with old keyboard = 40

Standard deviation of population using old keyboard = 10

The researcher plans to do a study with 25 people and predicts that with the new keyboard, people’s typing speed will be 49.

This will be tested at the 1% significance level (1-tailed).

Z scores

• A standardized way to see how many standard deviations a point is from the mean: z = (score - mean)/standard deviation

Power calculation cont

Comparison distribution: mean = 40; sd = 10

Predicted (experimental) distribution: mean = 49; sd = 10

N = 25

1. SD of the distribution of means = sqrt(10^2/25) = 2

2. Figure the cutoff on the comparison distribution (use a z table)

3. Figure the z score for this point on the experimental distribution

4. Figure the probability, on the experimental distribution, of getting a score more extreme than the cutoff. This is your power.
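The four steps can be followed in code for the keyboard example (comparison mean 40, predicted mean 49, SD 10, N = 25, 1% one-tailed). As on the slides, the sketch assumes the population SD is known so z scores apply, and it uses Python’s statistics.NormalDist in place of a z table.

```python
from statistics import NormalDist


def power_one_tailed(null_mean, predicted_mean, pop_sd, n, alpha):
    """Power of a one-tailed (upper) z test, following the four steps."""
    z = NormalDist()
    # 1. SD of the distribution of means
    sd_means = (pop_sd ** 2 / n) ** 0.5
    # 2. Cutoff on the comparison distribution, as a raw score
    cutoff = null_mean + z.inv_cdf(1 - alpha) * sd_means
    # 3. z score of that cutoff on the experimental distribution
    z_exp = (cutoff - predicted_mean) / sd_means
    # 4. Probability of a score beyond the cutoff on the experimental distribution
    return 1 - z.cdf(z_exp), cutoff, z_exp


power, cutoff, z_exp = power_one_tailed(40, 49, 10, 25, alpha=0.01)
print(f"cutoff = {cutoff:.2f}, z = {z_exp:.2f}, power = {power:.3f}")
```

With these numbers the cutoff is about 44.65, which sits about 2.17 SDs below the predicted mean of 49, so power is roughly 98.5%. Shrinking N or the predicted effect moves the experimental distribution closer to the cutoff and lowers power.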