SPSS Workshop - FHSS Research Support Center

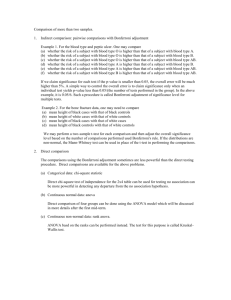

SPSS Workshop
Research Support Center
Chongming Yang

Causal Inference
• If A, then B, under condition C
• If A, then B with 95% probability, under condition C

Student's t Test ("Student" was the pen name of William S. Gosset)
• Assumptions
  – Small sample
  – Normally distributed
• t distributions: $t = (\bar{x} - \mu) / (s / \sqrt{n})$
• df = degrees of freedom = number of independent observations

Types of t Tests
• One sample – test against a specific (population) mean
• Two independent samples – compare means of two independent samples that represent two populations
• Paired – compare means of repeated samples

One Sample t Test
• Conceptually, convert the sample mean to a t score and examine whether t falls within the acceptable region of the distribution

$$ t = \frac{\bar{x} - \mu}{s / \sqrt{n}} $$

Two Independent Samples

$$ t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}} $$

Paired Observation Samples
• d = difference value between first and second observations

$$ t = \frac{\bar{d}}{s_d / \sqrt{n}} $$

Multiple Group Issues
• Groups A, B, C require three pairwise comparisons
  – AB, AC, BC
  – .95, .95, .95 (confidence of each comparison)
• Joint probability that all three conclusions hold, treating the comparisons as independent
  – .95 × .95 × .95 ≈ .86, so the chance of at least one false difference rises to about .14

Analysis of Variance (ANOVA)
• Completely randomized groups
• Compare group variances to infer group mean difference
• Sources of total variance
  – Within groups
  – Between groups
• F distribution (Fisher-Snedecor distribution)

$$ F = \frac{SSB / df_1}{SSW / df_2} $$

  – SSB = between-groups sum of squares
  – SSW = within-groups sum of squares

F Test
• Null hypothesis: all group means are equal, $\mu_1 = \mu_2 = \mu_3 = \dots = \mu_n$
• Given df1, df2, and the F value, determine whether the corresponding probability is within the acceptable region of the distribution

Issues of ANOVA
• Indicates some group difference
• Does not reveal which two groups differ
• Needs other tests to identify specific group differences
  – Hypothesized comparisons: contrasts
  – No hypothesized comparisons: post hoc tests
• ANOVA has largely been replaced by multiple regression, which in turn can be replaced by General Linear Modeling (GLM)

Multiple Linear Regression
• Causes $x$ can be continuous or categorical
• Effect $y$ is a continuous measure

$$ y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \dots + \beta_k x_k + \varepsilon $$

• Milder term for the causes: predictors
• Objective: identify important $x$

Assumptions of Linear Regression
• Y and X have linear relations
• Y is continuous or interval & unbounded
• $\varepsilon$ has an expected value (mean) of 0
• $\varepsilon$ is normally distributed
• $\varepsilon$ is not correlated with the predictors
• Predictors should not be highly correlated
• No measurement error in any variable

Least Squares Solution
• Choose $\beta_0, \beta_1, \beta_2, \beta_3, \dots, \beta_k$ to minimize the sum of squared differences between observed $y_i$ and model-estimated/predicted $\hat{y}_i$

$$ \sum_i (y_i - \hat{y}_i)^2 $$

• Through solving many equations

Explained Variance in $y$

$$ R^2 = \frac{\left[\sum y_i^2 - \dfrac{(\sum y_i)^2}{n}\right] - \sum (y_i - \hat{y}_i)^2}{\sum y_i^2 - \dfrac{(\sum y_i)^2}{n}} $$

Standard Error of $\beta$

$$ SE_{\beta_j} = \sqrt{\frac{\sum (y_i - \hat{y}_i)^2}{n - k - 1} \cdot \frac{1}{\sum (x_{ij} - \bar{x}_j)^2 \, (1 - R_j^2)}} $$

• Here $R_j^2$ is the $R^2$ from regressing predictor $x_j$ on the other predictors

t Test of the Significance of $\beta$
• $t = \beta / SE_\beta$
• If |t| > a critical value & p < .05
• Then $\beta$ is significantly different from zero

Confidence Intervals of $\beta$
• $\beta \pm t_{critical} \times SE_\beta$

Standardized Coefficient (Beta)
• Makes the $\beta$s comparable among variables by putting them on the same scale (standardized scores)

$$ \text{Beta} = \beta \, \frac{std_x}{std_y} $$

Interpretation of $\beta$
• If x increases by one unit, y increases by $\beta$ units, holding the other X values constant

Model Comparisons
• Complete model: $y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \dots + \beta_k x_k$
• Reduced model: $y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_g x_g$
• Test F = MSdrop / MSE
  – MS = mean square
  – MSE = mean square error

Variable Selection
• Select significant predictors from a pool of predictors
• Stepwise is undesirable, see http://en.wikipedia.org/wiki/Stepwise_regression
• Forward
• Backward (preferable)

Dummy-coding of Nominal $x$
• R = Race (1 = White, 2 = Black, 3 = Hispanic, 4 = Others)

| R | d1 | d2 | d3 |
|---|----|----|----|
| 1 | 1  | 0  | 0  |
| 1 | 1  | 0  | 0  |
| 2 | 0  | 1  | 0  |
| 2 | 0  | 1  | 0  |
| 3 | 0  | 0  | 1  |
| 3 | 0  | 0  | 1  |
| 4 | 0  | 0  | 0  |
| 4 | 0  | 0  | 0  |

• Include all dummy variables in the model, even if not every one is significant.

Interaction

$$ y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_2 x_3 + \dots + \beta_k x_k $$

• Create a product term X2X3
• Include X2 and X3 even if their effects are not significant
• Interpret the interaction effect: the X2 effect depends on the level of X3.

Plotting Interaction
• Write out the model with main and interaction effects
• Use standardized coefficients
• Plug in some plausible values of the interacting variables and calculate y
• Use one X for the X dimension and the calculated Y value for the Y dimension
• See examples at http://frank.itlab.us/datamodel/node104.html

Diagnostics
• Linear relation of predicted and observed values (plotting)
• Collinearity
• Outliers
• Normality of residuals (save residuals as a new variable)

Repeated Measures (MANOVA, GLM)
• Measure(s) repeated over time
• Change in individual cases (within)?
• Group differences (between, categorical x)?
• Covariate effects (continuous x)?
• Interaction between within and between variables?
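
Looking back at the dummy-coding and plotting-interaction slides, the steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`dummy_code`, `predict`, `simple_slope`) and the coefficient values are hypothetical, not estimates from any workshop data, and category 4 (Others) is taken as the reference group as in the coding table.

```python
# Hypothetical standardized coefficients, chosen only for illustration.
B0, B2, B3, B23 = 0.0, 0.4, 0.3, 0.2


def dummy_code(race):
    """Return (d1, d2, d3) for race coded 1..4; code 4 is the reference group."""
    if race not in (1, 2, 3, 4):
        raise ValueError("race must be 1, 2, 3, or 4")
    return tuple(1 if race == level else 0 for level in (1, 2, 3))


def predict(x2, x3):
    """Predicted y from the main effects of x2 and x3 plus their product term."""
    return B0 + B2 * x2 + B3 * x3 + B23 * x2 * x3


def simple_slope(x3):
    """Effect of x2 on y at a given level of x3 (the slope of one plotted line)."""
    return B2 + B23 * x3


if __name__ == "__main__":
    for race in (1, 2, 3, 4):
        print("race", race, "->", dummy_code(race))

    # Plug in plausible standardized values (-1, 0, +1) for both variables.
    levels = (-1.0, 0.0, 1.0)
    for x3 in levels:
        points = [(x2, round(predict(x2, x3), 3)) for x2 in levels]
        print("x3 =", x3, "slope of x2 =", round(simple_slope(x3), 3), points)
```

Each printed row is one line of the interaction plot: x2 goes on the X dimension, the calculated y on the Y dimension, and a separate line is drawn for each level of x3. Lines with different slopes indicate that the x2 effect depends on x3.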
Assumptions
• Normality
• Sphericity: variances of the differences between all pairs of repeated measures are equal, so that
• Total sum of squares can be partitioned more precisely into
  – Within subjects
  – Between subjects
  – Error

Model

$$ y_{ij} = \mu + \pi_i + \tau_j + (\pi\tau)_{ij} + \varepsilon_{ij} $$

• $\mu$ = grand mean
• $\pi_i$ = constant of individual i
• $\tau_j$ = constant of jth treatment
• $\varepsilon_{ij}$ = error of individual i under treatment j
• $\pi\tau$ = interaction

F Test of Effects
• F = MSbetween / MSwithin (simple repeated)
• F = MStreatment / MSerror (with treatment)
• F = MSwithin / MSinteraction (with interaction)

Four Types of Sums of Squares
• Type I – balanced design
• Type II – adjusting for other effects
• Type III – no empty cells, unbalanced design
• Type IV – empty cells

Exercise
• http://www.ats.ucla.edu/stat/spss/seminars/Repeated_Measures/default.htm
• Copy the data to the SPSS syntax window, select, and run
• Run Repeated Measures GLM
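
To make the sum-of-squares partition and the F = MStreatment / MSerror ratio concrete, here is a minimal Python sketch for a single-factor repeated measures layout. The scores and the function name `repeated_measures_f` are made up for illustration. With one observation per subject per treatment, the subject-by-treatment interaction cannot be separated from error, so the residual serves as the error term. This is a hand calculation under those assumptions, not a substitute for the SPSS GLM output.

```python
def repeated_measures_f(scores):
    """Partition sums of squares for rows = subjects, columns = treatments."""
    n = len(scores)          # number of subjects
    k = len(scores[0])       # number of repeated measures (treatments)
    grand = sum(sum(row) for row in scores) / (n * k)

    subject_means = [sum(row) / k for row in scores]
    treatment_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_total = sum((y - grand) ** 2 for row in scores for y in row)
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    ss_treatment = n * sum((m - grand) ** 2 for m in treatment_means)
    ss_error = ss_total - ss_subjects - ss_treatment  # residual

    df_treatment = k - 1
    df_error = (n - 1) * (k - 1)
    ms_treatment = ss_treatment / df_treatment
    ms_error = ss_error / df_error

    return {
        "ss_total": ss_total,
        "ss_subjects": ss_subjects,
        "ss_treatment": ss_treatment,
        "ss_error": ss_error,
        "df_treatment": df_treatment,
        "df_error": df_error,
        "F": ms_treatment / ms_error,
    }


# Hypothetical data: 4 subjects, each measured at 3 time points.
SCORES = [
    [3, 5, 7],
    [2, 4, 6],
    [4, 5, 9],
    [3, 6, 6],
]

if __name__ == "__main__":
    for name, value in repeated_measures_f(SCORES).items():
        print(name, "=", round(value, 3))
```

The resulting F is then compared against the F distribution with `df_treatment` and `df_error` degrees of freedom, as described for the F test earlier.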