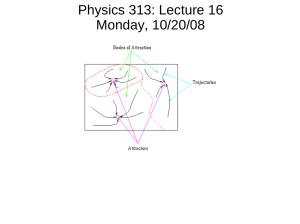

December 7, 2010, Neural Networks Lecture 21: Hopfield Network Convergence

The Hopfield Network

The nodes of a Hopfield network can be updated synchronously or asynchronously. Synchronous updating means that at time step t+1 every neuron is updated based on the network state at time step t. In asynchronous updating, a random node k1 is picked and updated, then a random node k2 is picked and updated (already using the new value of k1), and so on. The synchronous mode can be problematic because it may never lead to a stable network state.

Asynchronous Hopfield Network

Current network state O, attractors (stored patterns) X and Y:

[Figure: current state O and the two attractors X and Y]

After the first update, this could happen:

[Figure: O after one asynchronous update]

… or this:

[Figure: O after a different asynchronous update]

Synchronous Hopfield Network

What happens for synchronous updating?

[Figure: current state O and the two attractors X and Y]

Something like the change shown below. And then?

[Figure: O after one synchronous update]

The network may oscillate between these two states forever.

[Figure: O returned to its initial position]

The Hopfield Network

The previous illustration shows that the synchronous updating rule may never lead to a stable network state. However, is the asynchronous updating rule guaranteed to reach such a state within a finite number of iterations? To find out, we have to characterize the effect of the network dynamics more precisely, and for that we need to introduce an energy function.
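The contrast between the two updating modes can be reproduced with a very small network. The sketch below is a minimal Python illustration, assuming ±1 node states, no external input, and the convention that a node keeps its current state when its net input is exactly 0. The two-node weight matrix and the helper names (`sgn`, `synchronous_step`, `asynchronous_sweep`) are hypothetical choices for this example, not taken from the lecture. With two mutually excitatory nodes, the attractors are (1, 1) and (-1, -1); starting from (1, -1), synchronous updating flips both nodes at once, so the state never settles.

```python
import random


def sgn(net, current):
    """Sign function; keeps the current state when the net input is exactly 0 (assumed convention)."""
    if net > 0:
        return 1
    if net < 0:
        return -1
    return current


def net_input(w, x, k, inputs):
    """Net input to node k: sum_j w[k][j] * x[j] + I_k (w has a zero diagonal)."""
    return sum(w[k][j] * x[j] for j in range(len(x))) + inputs[k]


def synchronous_step(w, x, inputs):
    """Every node is updated from the network state at time t."""
    return [sgn(net_input(w, x, k, inputs), x[k]) for k in range(len(x))]


def asynchronous_sweep(w, x, inputs, rng):
    """Nodes are updated one at a time in random order, each using the newest values."""
    x = list(x)
    order = list(range(len(x)))
    rng.shuffle(order)
    for k in order:
        x[k] = sgn(net_input(w, x, k, inputs), x[k])
    return x


# Hypothetical toy network: two mutually excitatory nodes, no external input.
W = [[0, 1],
     [1, 0]]
I = [0, 0]
start = [1, -1]

sync_states = [start]
for _ in range(4):
    sync_states.append(synchronous_step(W, sync_states[-1], I))
print("synchronous:", sync_states)      # alternates between [1, -1] and [-1, 1]

rng = random.Random(0)
async_state = start
for _ in range(3):
    async_state = asynchronous_sweep(W, async_state, I, rng)
print("asynchronous:", async_state)
```

Asynchronous updating ends in (1, 1) or (-1, -1), depending on which node happens to be picked first, which mirrors the two possible outcomes in the asynchronous illustration.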
The Energy Function

Updating rule (as used in the textbook):

$$x_{p,k}(t+1) = \operatorname{sgn}\Big(\sum_{j=1}^{n} w_{k,j}\, x_{p,j}(t) + I_{p,k}(t)\Big)$$

Often,

$$I_{p,k}(t) = \begin{cases} \text{initial input}, & \text{if } t = 0 \\ 0, & \text{otherwise} \end{cases}$$

Given the way we determine the weight matrix W (but also for iterative learning methods), we expect the weight from node j to node l to be proportional to

$$w_{l,j} \propto \sum_{p=1}^{P} i_{p,l}\, i_{p,j}$$

for P stored input patterns. In other words, if two units are often active (+1) or inactive (-1) together in the given input patterns, we expect them to be connected by large, positive weights. If one of them is active whenever the other one is not, we expect large, negative weights between them.

Since the above formula applies to all weights in the network, we expect the following expression to be large and positive for each stored (attractor) pattern:

$$\sum_{l} \sum_{j \neq l} w_{l,j}\, i_{p,l}\, i_{p,j}$$

We would still expect a large, positive value for input patterns that are very similar to any of the attractor patterns. The lower the similarity, the lower the value of this expression we expect to find.

This motivates the following approach to an energy function, which we want to decrease as the network's current activation pattern becomes more similar to any of the attractor patterns (similar to the error function in the BPN):

$$-\sum_{l} \sum_{j \neq l} w_{l,j}\, x_l\, x_j$$

If the value of this expression is minimized (possibly by some form of gradient descent along activation patterns), the resulting activation pattern will be close to one of the attractors.
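The weight rule and the similarity expression can be checked numerically. The following sketch assumes weights exactly equal to (rather than merely proportional to) the sum over stored patterns, with a zero diagonal; the two 6-node patterns and the function names `hebbian_weights` and `agreement` are illustrative assumptions.

```python
def hebbian_weights(patterns):
    """Weights w[l][j] = sum_p i_{p,l} * i_{p,j} for l != j, and w[l][l] = 0."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for l in range(n):
            for j in range(n):
                if j != l:
                    w[l][j] += p[l] * p[j]
    return w


def agreement(w, x):
    """Compute sum_l sum_{j != l} w[l][j] * x[l] * x[j] for a +/-1 pattern x."""
    n = len(x)
    return sum(w[l][j] * x[l] * x[j] for l in range(n) for j in range(n) if j != l)


# Two hypothetical stored patterns over 6 nodes (+1 = active, -1 = inactive).
patterns = [
    [1, 1, 1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1],
]
W = hebbian_weights(patterns)

print(agreement(W, patterns[0]))                # 28  (stored pattern)
print(agreement(W, patterns[1]))                # 28  (stored pattern)
print(agreement(W, [-1, 1, 1, -1, -1, -1]))     # 4   (first pattern, node 0 flipped)
print(agreement(W, [1, -1, -1, 1, 1, -1]))      # -4  (dissimilar pattern)
```

The score falls as similarity to the attractors falls. Note that a pattern and its complement (all signs flipped) always receive the same score, since every product x_l x_j is unchanged by flipping all signs.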
However, we do not want the activation pattern to settle on an arbitrary attractor. Instead, we would like the final activation pattern to be the attractor that is most similar to the initial input to the network. We can achieve this by adding a term that penalizes deviation of the current activation pattern from the initial input. The resulting energy function has the following form:

$$E = -a \sum_{l} \sum_{j \neq l} w_{l,j}\, x_l\, x_j \;-\; b \sum_{l} I_l\, x_l$$

How does this network energy change with every application of the asynchronous updating rule?

$$\Delta E(t) = E(t+1) - E(t) = -a \sum_{l} \sum_{j \neq l} w_{l,j}\,\big[x_l(t+1)\,x_j(t+1) - x_l(t)\,x_j(t)\big] \;-\; b \sum_{l} I_l\,\big[x_l(t+1) - x_l(t)\big]$$

When updating node k, $x_j(t+1) = x_j(t)$ for every node $j \neq k$, so only the terms containing $x_k$ remain:

$$\Delta E(t) = -a \sum_{j \neq k} (w_{k,j} + w_{j,k})\,\big(x_k(t+1) - x_k(t)\big)\,x_j(t) \;-\; b\, I_k\,\big(x_k(t+1) - x_k(t)\big)$$

$$\Delta E(t) = -\Big[a \sum_{j \neq k} (w_{k,j} + w_{j,k})\,x_j(t) + b\, I_k\Big]\big(x_k(t+1) - x_k(t)\big)$$

Since $w_{k,j} = w_{j,k}$, if we set $a = 0.5$ and $b = 1$ we get:

$$\Delta E(t) = -\Big[\sum_{j \neq k} w_{j,k}\,x_j(t) + I_k\Big]\big(x_k(t+1) - x_k(t)\big) = -\,\mathrm{net}_k(t)\,\Delta x_k(t)$$

This means that in order to reduce energy, the k-th node should change its state if and only if

$$\mathrm{net}_k(t)\, x_k(t) < 0$$

(If node k flips, $\Delta x_k(t) = -2\,x_k(t)$, so $\Delta E(t) = 2\,\mathrm{net}_k(t)\,x_k(t)$, which is negative exactly when this condition holds.) In other words, the state of a node should change whenever it differs from the sign of the net input.

And this is exactly what our asynchronous updating rule does! Consequently, every state change produced by a node update reduces the network's energy. By definition, every possible network state (activation pattern) is associated with a specific energy.
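The result ΔE(t) = -net_k(t) Δx_k(t) can be verified by brute force: compute E before and after flipping a single node and compare with the formula. This is a minimal sketch assuming a = 0.5, b = 1 and a constant input vector; the 3-node weights, inputs, and state are hypothetical toy values, and the names `energy` and `net_input` are illustrative.

```python
def energy(w, x, inputs, a=0.5, b=1.0):
    """E = -a * sum_l sum_{j != l} w[l][j] x_l x_j  -  b * sum_l I_l x_l."""
    n = len(x)
    pair_term = sum(w[l][j] * x[l] * x[j] for l in range(n) for j in range(n) if j != l)
    input_term = sum(i_l * x_l for i_l, x_l in zip(inputs, x))
    return -a * pair_term - b * input_term


def net_input(w, x, k, inputs):
    """net_k = sum_{j != k} w[j][k] * x_j + I_k."""
    return sum(w[j][k] * x[j] for j in range(len(x)) if j != k) + inputs[k]


# Hypothetical toy network: symmetric weights, zero diagonal, fixed input vector.
W = [[0, 2, -1],
     [2, 0, 3],
     [-1, 3, 0]]
I = [1, -1, 0]
x = [1, -1, 1]

results = []
for k in range(len(x)):
    flipped = list(x)
    flipped[k] = -x[k]                          # only node k changes
    delta_x = flipped[k] - x[k]                 # equals -2 * x[k]
    delta_e = energy(W, flipped, I) - energy(W, x, I)
    predicted = -net_input(W, x, k, I) * delta_x
    results.append((k, delta_e, predicted))
    print(f"node {k}: measured dE = {delta_e}, -net_k * dx_k = {predicted}")
```

In this particular state every node disagrees with the sign of its net input (net_k x_k < 0 for k = 0, 1, 2), so each single flip lowers the energy, and the measured and predicted changes agree.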
Since the network can assume only a finite number of states ($2^n$ for an n-node network), and every state change leads to a state of lower energy, no state can be visited twice, so the network can undergo only a finite number of state changes.

Therefore, we have shown that the network reaches a stable state after a finite number of iterations. This state is likely to be one of the network's stored patterns. It is possible, however, that we get stuck in a local energy minimum and never reach the global minimum (just like in BPNs). In that case, the final pattern will usually be very similar to one of the attractors, but not identical.
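To see the convergence argument at work, the sketch below runs asynchronous updating on Hebbian weights until a full sweep changes no node, recording the energy (a = 0.5, b = 1) after every state change. It assumes a constant input vector (all zeros here, so recall is driven only by the initial state), nodes visited in a random permutation per sweep (a common variant of picking nodes at random), a node that keeps its state when its net input is 0, and a hypothetical `max_sweeps` safety limit. Patterns and function names are illustrative.

```python
import random


def hebbian_weights(patterns):
    """w[l][j] = sum_p i_{p,l} * i_{p,j} for l != j; zero diagonal (hence symmetric)."""
    n = len(patterns[0])
    return [[0 if l == j else sum(p[l] * p[j] for p in patterns) for j in range(n)]
            for l in range(n)]


def energy(w, x, inputs):
    """Energy with a = 0.5 and b = 1."""
    n = len(x)
    pair_term = sum(w[l][j] * x[l] * x[j] for l in range(n) for j in range(n) if j != l)
    return -0.5 * pair_term - sum(i_l * x_l for i_l, x_l in zip(inputs, x))


def recall(w, probe, inputs, rng, max_sweeps=100):
    """Asynchronous updating until one full sweep changes no node.

    Returns the final state, the energy after every state change, and the
    number of state changes.
    """
    x = list(probe)
    energies = [energy(w, x, inputs)]
    changes = 0
    for _ in range(max_sweeps):
        order = list(range(len(x)))
        rng.shuffle(order)                      # random node order within each sweep
        changed = False
        for k in order:
            net = sum(w[j][k] * x[j] for j in range(len(x)) if j != k) + inputs[k]
            if net * x[k] < 0:                  # state disagrees with sign of net input
                x[k] = -x[k]
                changes += 1
                changed = True
                energies.append(energy(w, x, inputs))
        if not changed:
            return x, energies, changes
    raise RuntimeError("no stable state within max_sweeps")


patterns = [[1, 1, 1, -1, -1, -1], [1, -1, 1, -1, 1, -1]]
W = hebbian_weights(patterns)
noisy = [-1, 1, 1, -1, -1, -1]                  # first pattern with node 0 flipped
state, energies, changes = recall(W, noisy, [0] * 6, random.Random(0))
print(state, energies, changes)                 # [1, 1, 1, -1, -1, -1] [-2.0, -14.0] 1
```

Here a single flip lowers the energy from -2 to -14 and recovers the stored pattern. Random probes may instead stop in a spurious stable state (for example the complement of a stored pattern, which has the same energy when the input is zero), which corresponds to the local-minimum case described above.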