PPT - Bo Yuan


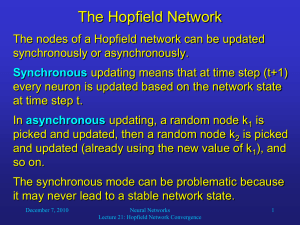

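Classification III

Lecturer: Dr. Bo Yuan
E-mail: yuanb@sz.tsinghua.edu.cn

Overview

• Artificial Neural Networks

Biological Motivation

• $10^{11}$: the number of neurons in the human brain
• $10^{4}$: the average number of connections of each neuron
• $10^{-3}$ s: the fastest switching times of neurons
• $10^{-10}$ s: the switching speeds of computers
• $10^{-1}$ s: the time required to visually recognize your mother

Biological Motivation

The power of parallelism
• The information processing abilities of biological neural systems follow from highly parallel processes operating on representations that are distributed over many neurons.
• The motivation of ANN is to capture this kind of highly parallel computation based on distributed representations.
• Sequential machines vs. parallel machines

Group A: using ANN to study and model biological learning processes.
Group B: obtaining highly effective machine learning algorithms, regardless of how closely these algorithms mimic biological processes.

Neural Network Representations

Robot vs. Human

Perceptrons

A perceptron takes inputs $x_1, \dots, x_n$ with weights $w_1, \dots, w_n$, plus a constant input $x_0 = 1$ with weight $w_0$, and thresholds the weighted sum:

$$o = \begin{cases} 1, & \text{if } \sum_{i=0}^{n} w_i x_i > 0 \\ 0, & \text{otherwise} \end{cases}$$

$$o(x_1, \dots, x_n) = \begin{cases} 1, & \text{if } w_0 + w_1 x_1 + \dots + w_n x_n > 0 \\ 0, & \text{otherwise} \end{cases}$$

Power of Perceptrons

AND: $w_0 = -0.8$, $w_1 = w_2 = 0.5$. OR: $w_0 = -0.3$, $w_1 = w_2 = 0.5$.

| Input | AND: ∑ | AND: Output | OR: ∑ | OR: Output |
|---|---|---|---|---|
| 0 0 | -0.8 | 0 | -0.3 | 0 |
| 0 1 | -0.3 | 0 | 0.2 | 1 |
| 1 0 | -0.3 | 0 | 0.2 | 1 |
| 1 1 | 0.2 | 1 | 0.7 | 1 |

Error Surface

[Figure: error surface, with Error plotted against the weights $w_1$ and $w_2$]

Gradient Descent

Batch Learning

$$E(\vec{w}) = \frac{1}{2} \sum_{d \in D} (t_d - o_d)^2$$

$$\nabla E(\vec{w}) = \left[ \frac{\partial E}{\partial w_0}, \frac{\partial E}{\partial w_1}, \dots, \frac{\partial E}{\partial w_n} \right]$$

$$w_i \leftarrow w_i + \Delta w_i \quad \text{where} \quad \Delta w_i = -\eta \frac{\partial E}{\partial w_i}$$

Here $\eta$ is the learning rate.

Delta Rule

For a linear unit, $o(\vec{x}) = \vec{w} \cdot \vec{x}$, so:

$$\begin{aligned}
\frac{\partial E}{\partial w_i} &= \frac{\partial}{\partial w_i} \frac{1}{2} \sum_{d \in D} (t_d - o_d)^2 \\
&= \frac{1}{2} \sum_{d \in D} \frac{\partial}{\partial w_i} (t_d - o_d)^2 \\
&= \frac{1}{2} \sum_{d \in D} 2 (t_d - o_d) \frac{\partial}{\partial w_i} (t_d - o_d) \\
&= \sum_{d \in D} (t_d - o_d) \frac{\partial}{\partial w_i} (t_d - \vec{w} \cdot \vec{x}_d) \\
&= \sum_{d \in D} (t_d - o_d)(-x_{id})
\end{aligned}$$

$$\Delta w_i = \eta \sum_{d \in D} (t_d - o_d)\, x_{id}$$

Batch Learning

GRADIENT_DESCENT(training_examples, η)
Initialize each w_i to some small random value.
Until the termination condition is met, Do
    Initialize each Δw_i to zero.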
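    For each <x, t> in training_examples, Do
        • Input the instance x to the unit and compute the output o
        • For each linear unit weight w_i, Do
            – Δw_i ← Δw_i + η(t − o)x_i
    For each linear unit weight w_i, Do
        • w_i ← w_i + Δw_i

Stochastic Learning

$$w_i \leftarrow w_i + \Delta w_i \quad \text{where} \quad \Delta w_i = \eta (t - o)\, x_i$$

For example, if $x_i = 0.8$, $\eta = 0.1$, $t = 1$ and $o = 0$:

$$\Delta w_i = \eta (t - o)\, x_i = 0.1 \times (1 - 0) \times 0.8 = 0.08$$

Stochastic Learning: NAND

threshold = 0.5, learning rate = 0.1

Columns: Input ($x_0, x_1, x_2$), Target ($t$), Initial Weights ($w_0, w_1, w_2$), Individual Output ($C_i = x_i \cdot w_i$), Sum ($S = C_0 + C_1 + C_2$), Final Output ($o$), Error ($E = t - o$), Correction ($R = LR \times E$), Final Weights ($w_0, w_1, w_2$).

| x0 | x1 | x2 | t | w0 | w1 | w2 | C0 | C1 | C2 | S | o | E | R | w0 | w1 | w2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | +0.1 | 0.1 | 0 | 0 |
| 1 | 0 | 1 | 1 | 0.1 | 0 | 0 | 0.1 | 0 | 0 | 0.1 | 0 | 1 | +0.1 | 0.2 | 0 | 0.1 |
| 1 | 1 | 0 | 1 | 0.2 | 0 | 0.1 | 0.2 | 0 | 0 | 0.2 | 0 | 1 | +0.1 | 0.3 | 0.1 | 0.1 |
| 1 | 1 | 1 | 0 | 0.3 | 0.1 | 0.1 | 0.3 | 0.1 | 0.1 | 0.5 | 0 | 0 | 0 | 0.3 | 0.1 | 0.1 |
| 1 | 0 | 0 | 1 | 0.3 | 0.1 | 0.1 | 0.3 | 0 | 0 | 0.3 | 0 | 1 | +0.1 | 0.4 | 0.1 | 0.1 |
| 1 | 0 | 1 | 1 | 0.4 | 0.1 | 0.1 | 0.4 | 0 | 0.1 | 0.5 | 0 | 1 | +0.1 | 0.5 | 0.1 | 0.2 |
| 1 | 1 | 0 | 1 | 0.5 | 0.1 | 0.2 | 0.5 | 0.1 | 0 | 0.6 | 1 | 0 | 0 | 0.5 | 0.1 | 0.2 |
| 1 | 1 | 1 | 0 | 0.5 | 0.1 | 0.2 | 0.5 | 0.1 | 0.2 | 0.8 | 1 | -1 | -0.1 | 0.4 | 0 | 0.1 |
| 1 | 0 | 0 | 1 | 0.4 | 0 | 0.1 | 0.4 | 0 | 0 | 0.4 | 0 | 1 | +0.1 | 0.5 | 0 | 0.1 |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |
| 1 | 1 | 0 | 1 | 0.8 | -0.2 | -0.1 | 0.8 | -0.2 | 0 | 0.6 | 1 | 0 | 0 | 0.8 | -0.2 | -0.1 |

Multilayer Perceptron

XOR

$$p \oplus q = p\bar{q} + \bar{p}q = (p + q)(\overline{pq})$$

| Input | Output |
|---|---|
| 0 0 | 0 |
| 0 1 | 1 |
| 1 0 | 1 |
| 1 1 | 0 |

[Figure: the four XOR points in the $p$-$q$ plane, labelled + and -]

The two classes cannot be separated by a single line.

XOR

$$p \oplus q = (p \lor q) \land \lnot(p \land q)$$

That is, XOR is the AND of an OR unit and a NAND unit.

[Figure: the OR and NAND decision lines in the $p$-$q$ plane]

Hidden Layer Representations

| Input: p | Input: q | Hidden: OR | Hidden: NAND | Output: AND |
|---|---|---|---|---|
| 0 | 0 | 0 | 1 | 0 |
| 0 | 1 | 1 | 1 | 1 |
| 1 | 0 | 1 | 1 | 1 |
| 1 | 1 | 1 | 0 | 0 |

The Sigmoid Threshold Unit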
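The unit takes inputs $x_1, \dots, x_n$ with weights $w_1, \dots, w_n$, plus a constant input $x_0 = 1$ with weight $w_0$:

$$net = \sum_{i=0}^{n} w_i x_i, \qquad o = \sigma(net) = \frac{1}{1 + e^{-net}}$$

Sigmoid function:

$$\sigma(y) = \frac{1}{1 + e^{-y}}, \qquad \frac{d\sigma(y)}{dy} = \sigma(y)\,(1 - \sigma(y))$$

Backpropagation Rule

$$E_d(\vec{w}) = \frac{1}{2} \sum_{k \in outputs} (t_k - o_k)^2$$

$$\Delta w_{ji} = -\eta \frac{\partial E_d}{\partial w_{ji}}$$

$$\frac{\partial E_d}{\partial w_{ji}} = \frac{\partial E_d}{\partial net_j} \frac{\partial net_j}{\partial w_{ji}} = \frac{\partial E_d}{\partial net_j}\, x_{ji}$$

• $x_{ji}$ = the $i$-th input to unit $j$
• $w_{ji}$ = the weight associated with the $i$-th input to unit $j$
• $net_j = \sum_i w_{ji} x_{ji}$ (the weighted sum of inputs for unit $j$)
• $o_j$ = the output of unit $j$
• $t_j$ = the target output of unit $j$
• $\sigma$ = the sigmoid function
• $outputs$ = the set of units in the final layer
• $Downstream(j)$ = the set of units directly taking the output of unit $j$ as inputs

Training Rule for Output Units

$$\frac{\partial E_d}{\partial net_j} = \frac{\partial E_d}{\partial o_j} \frac{\partial o_j}{\partial net_j}$$

$$\begin{aligned}
\frac{\partial E_d}{\partial o_j} &= \frac{\partial}{\partial o_j} \frac{1}{2} \sum_{k \in outputs} (t_k - o_k)^2 \\
&= \frac{\partial}{\partial o_j} \frac{1}{2} (t_j - o_j)^2 \\
&= \frac{1}{2} \cdot 2 (t_j - o_j) \frac{\partial (t_j - o_j)}{\partial o_j} \\
&= -(t_j - o_j)
\end{aligned}$$

$$\frac{\partial o_j}{\partial net_j} = \frac{\partial \sigma(net_j)}{\partial net_j} = o_j (1 - o_j)$$

$$\frac{\partial E_d}{\partial net_j} = -(t_j - o_j)\, o_j (1 - o_j)$$

$$\Delta w_{ji} = -\eta \frac{\partial E_d}{\partial w_{ji}} = \eta\, (t_j - o_j)\, o_j (1 - o_j)\, x_{ji}$$

Training Rule for Hidden Units

With $\delta_k = -\dfrac{\partial E_d}{\partial net_k}$:

$$\begin{aligned}
\frac{\partial E_d}{\partial net_j} &= \sum_{k \in Downstream(j)} \frac{\partial E_d}{\partial net_k} \frac{\partial net_k}{\partial net_j} \\
&= \sum_{k \in Downstream(j)} -\delta_k \frac{\partial net_k}{\partial net_j} \\
&= \sum_{k \in Downstream(j)} -\delta_k \frac{\partial net_k}{\partial o_j} \frac{\partial o_j}{\partial net_j} \\
&= \sum_{k \in Downstream(j)} -\delta_k\, w_{kj} \frac{\partial o_j}{\partial net_j} \\
&= \sum_{k \in Downstream(j)} -\delta_k\, w_{kj}\, o_j (1 - o_j)
\end{aligned}$$

$$\delta_j = o_j (1 - o_j) \sum_{k \in Downstream(j)} \delta_k\, w_{kj}$$

$$\Delta w_{ji} = \eta\, \delta_j\, x_{ji}$$

BP Framework

BACKPROPAGATION(training_examples, η, n_in, n_out, n_hidden)
Create a network with n_in inputs, n_hidden hidden units and n_out output units.
Initialize all network weights to small random numbers.
Until the termination condition is met, Do
    For each <x, t> in training_examples, Do
        • Input the instance x to the network and compute the output o of every unit.
        • For each output unit k, calculate its error term δ_k: δ_k ← o_k(1 − o_k)(t_k − o_k)
        • For each hidden unit h, calculate its error term δ_h: δ_h ← o_h(1 − o_h) ∑_{k ∈ outputs} w_kh δ_k
        • Update each network weight w_ji: w_ji ← w_ji + Δw_ji, where Δw_ji = η δ_j x_ji

More about BP Networks …

Convergence and Local Minima
• The search space is likely to be highly multimodal.
• Training may easily get stuck at a local solution.
• Multiple trials with different initial weights are needed.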
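The BACKPROPAGATION procedure and the advice to run multiple trials can be sketched in plain Python as follows. This is a minimal illustration, not the lecturer's implementation: the function names (`forward`, `error_terms`, `backprop_step`, `train`, `best_of_trials`), the initial weight range of ±0.05, the fixed epoch count used as the termination condition, and the use of seeds to vary the initial weights are all assumptions made for the example. It assumes one hidden layer of sigmoid units, with column 0 of every weight row holding the weight for the constant input $x_0 = 1$.

```python
import math
import random


def sigmoid(y):
    return 1.0 / (1.0 + math.exp(-y))


def init_weights(n_units, n_inputs, rng):
    """One row per unit; column 0 is the weight for the constant input x0 = 1."""
    return [[rng.uniform(-0.05, 0.05) for _ in range(n_inputs + 1)]
            for _ in range(n_units)]


def forward(w_hidden, w_out, x):
    """Return (hidden outputs, network outputs) for the input vector x."""
    xb = [1.0] + list(x)
    hidden = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_hidden]
    hb = [1.0] + hidden
    out = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in w_out]
    return hidden, out


def error_terms(w_out, hidden, out, t):
    """delta_k = o_k(1-o_k)(t_k-o_k); delta_h = o_h(1-o_h) * sum_k w_kh delta_k."""
    delta_out = [o * (1 - o) * (tk - o) for o, tk in zip(out, t)]
    delta_hidden = [
        oh * (1 - oh) * sum(w_out[k][h + 1] * delta_out[k]
                            for k in range(len(w_out)))
        for h, oh in enumerate(hidden)
    ]
    return delta_hidden, delta_out


def backprop_step(w_hidden, w_out, x, t, eta):
    """Update all weights in place for one example: w_ji += eta * delta_j * x_ji."""
    hidden, out = forward(w_hidden, w_out, x)
    delta_hidden, delta_out = error_terms(w_out, hidden, out, t)
    xb, hb = [1.0] + list(x), [1.0] + hidden
    for k, row in enumerate(w_out):
        for i in range(len(row)):
            row[i] += eta * delta_out[k] * hb[i]
    for h, row in enumerate(w_hidden):
        for i in range(len(row)):
            row[i] += eta * delta_hidden[h] * xb[i]


def total_error(w_hidden, w_out, examples):
    """Sum over examples of E_d = 1/2 * sum_k (t_k - o_k)^2."""
    total = 0.0
    for x, t in examples:
        _, out = forward(w_hidden, w_out, x)
        total += 0.5 * sum((tk - ok) ** 2 for tk, ok in zip(t, out))
    return total


def train(examples, n_in, n_hidden, n_out, eta, epochs, seed):
    """Assumed termination condition: a fixed number of passes over the data."""
    rng = random.Random(seed)
    w_hidden = init_weights(n_hidden, n_in, rng)
    w_out = init_weights(n_out, n_hidden, rng)
    for _ in range(epochs):
        for x, t in examples:
            backprop_step(w_hidden, w_out, x, t, eta)
    return w_hidden, w_out


def best_of_trials(examples, n_in, n_hidden, n_out, eta, epochs, seeds):
    """Run one trial per seed (different initial weights); keep the lowest error."""
    trials = [train(examples, n_in, n_hidden, n_out, eta, epochs, s) for s in seeds]
    return min(trials, key=lambda ws: total_error(ws[0], ws[1], examples))
```

For instance, with `xor = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [0])]`, a call such as `best_of_trials(xor, 2, 2, 1, 0.5, 5000, range(5))` trains five networks from different starting points and returns the weights of the one with the lowest training error. Whether any single trial reaches a good solution depends on its initial weights, which is the point of running several.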
Evolving Neural Networks
• Black-box optimization techniques (e.g., Genetic Algorithms)
• Usually better accuracy
• Can do some advanced training (e.g., structure + parameter).
• Xin Yao (1999), “Evolving Artificial Neural Networks”, Proceedings of the IEEE, pp. 1423-1447.

Representational Power

Deep Learning

More about BP Networks …

Overfitting
• Tends to occur during later iterations.
• Use a validation dataset to terminate the training when necessary.

Practical Considerations
• Momentum

$$\Delta w_{ji}(n) = \eta\, \delta_j\, x_{ji} + \alpha\, \Delta w_{ji}(n - 1)$$

• Adaptive learning rate
    – Small: slow convergence, easy to get stuck
    – Large: fast convergence, unstable

[Figures: validation error against training time; error against weight]

Beyond BP Networks

XOR
In: 0 1 1 0 0 0 1 1 0 1 0 1 …
Out: ? 1 ? ? 0 ? ? 0 ? ? 1 ? …

Elman Network

Beyond BP Networks

• Hopfield Network
• Energy Landscape of Hopfield Network

Beyond BP Networks

When does ANN work?

• Instances are represented by attribute-value pairs. Input values can be any real values.
• The target output may be discrete-valued, real-valued, or a vector of several real- or discrete-valued attributes.
• The training samples may contain errors.
• Long training times are acceptable. They can range from a few seconds to several hours.
• Fast evaluation of the learned target function may be required.
• The ability to understand the learned function is not important. Weights are difficult for humans to interpret.

Reading Materials

Text Book
• Richard O. Duda et al., Pattern Classification, Chapter 6, John Wiley & Sons Inc.
• Tom Mitchell, Machine Learning, Chapter 4, McGraw-Hill.
• http://page.mi.fu-berlin.de/rojas/neural/index.html.html

Online Demo
• http://neuron.eng.wayne.edu/software.html
• http://www.cbu.edu/~pong/ai/hopfield/hopfield.html

Online Tutorial
• http://www.autonlab.org/tutorials/neural13.pdf
• http://www.cs.cmu.edu/afs/cs.cmu.edu/user/mitchell/ftp/faces.html

Wikipedia & Google

Review

• What is the biological motivation of ANN?
• When does ANN work?
• What is a perceptron?
• How to train a perceptron?
• What is the limitation of perceptrons?
• How does ANN solve non-linearly separable problems?
• What is the key idea of the Backpropagation algorithm?
• What are the main issues of BP networks?
• What are the examples of other types of ANN?

Next Week’s Class Talk

Volunteers are required for next week’s class talk.
• Topic 1: Applications of ANN
• Topic 2: Recurrent Neural Networks

Hints:
• Robot Driving
• Character Recognition
• Face Recognition
• Hopfield Network

Length: 20 minutes plus question time

Assignment

Topic: Training Feedforward Neural Networks
Technique: BP Algorithm

Task 1: XOR Problem
• 4 input samples
    – 0 0 → 0
    – 1 0 → 1

Task 2: Identity Function
• 8 input samples
    – 10000000 → 10000000
    – 00010000 → 00010000
    – …
• Use 3 hidden units

Deliverables:
• Report
• Code (any programming language, with detailed comments)

Due: Sunday, 14 December
Credit: 15%
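As a companion to the review question on how to train a perceptron, the stochastic training rule $w_i \leftarrow w_i + \eta (t - o) x_i$ can be sketched on the NAND example from the earlier slide. This is only an illustration: the names `predict` and `train_perceptron` are hypothetical, the stopping rule (a full pass with no errors) is an assumption, and `fractions.Fraction` is used instead of floats so that comparisons against the threshold of 0.5 are exact.

```python
from fractions import Fraction

# NAND training set from the slides: inputs (x0, x1, x2) with x0 = 1, and target t.
NAND_EXAMPLES = [
    ((1, 0, 0), 1),
    ((1, 0, 1), 1),
    ((1, 1, 0), 1),
    ((1, 1, 1), 0),
]


def predict(weights, x, threshold):
    """Threshold unit: output 1 when the weighted sum exceeds the threshold."""
    s = sum(w * xi for w, xi in zip(weights, x))
    return 1 if s > threshold else 0


def train_perceptron(examples, learning_rate, threshold, max_epochs=100):
    """Apply w_i <- w_i + eta * (t - o) * x_i after every single example."""
    weights = [Fraction(0)] * len(examples[0][0])
    for _ in range(max_epochs):
        mistakes = 0
        for x, t in examples:
            o = predict(weights, x, threshold)
            if o != t:
                mistakes += 1
            weights = [w + learning_rate * (t - o) * xi
                       for w, xi in zip(weights, x)]
        if mistakes == 0:  # assumed stopping rule: one full pass with no errors
            break
    return weights


final = train_perceptron(NAND_EXAMPLES, Fraction(1, 10), Fraction(1, 2))
print([float(w) for w in final])  # prints [0.8, -0.2, -0.1]
```

Starting from zero weights with threshold 0.5 and learning rate 0.1, the run ends at the same final weights as the last row of the NAND table (0.8, -0.2, -0.1).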