associative memory ENG - Weizmann Institute of Science

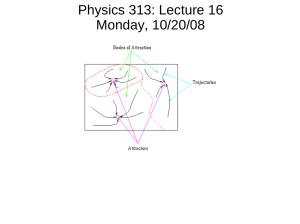

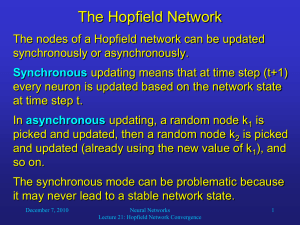

Basic Models in Neuroscience
Oren Shriki, 2010

Associative Memory

Associative Memory in Neural Networks
• Original work by John Hopfield (1982).
• The model is based on a recurrent network with stable attractors.

The Basic Idea
• Memory patterns are stored as stable attractors of a recurrent network.
• Each memory pattern has a basin of attraction in the phase space of the network.

Information Storage
• The information is stored in the pattern of synaptic interactions.

Energy Function
• In some models the dynamics are governed by an energy function.
• The dynamics lead to one of the local minima of the energy function, which are the stored memories.

Important properties of the model
• Content Addressable Memory (CAM): access to memory is based on the content and not on an address.
• Error correction: the network “corrects” the neurons which are inconsistent with the memory pattern.

The Mathematical Model

Binary Networks
• We will use binary neurons: (-1) means ‘inactive’ and (+1) means ‘active’.
• The dynamics are given by:
$$s_i(t+1) = \mathrm{sgn}\big(h_i(t)\big)$$
$$h_i(t) = \sum_{j=1}^{N} J_{ij}\, s_j(t) + h_i^0(t)$$
  The sum is the input from within the network and $h_i^0(t)$ is the external input.

Stability Condition for a Neuron
• The condition for a neuron to remain with the same activity is that its current activity and its current input have the same sign:
$$s_i(t)\, h_i(t) > 0$$

Energy Function
• If the external inputs are constant the network may reach a stable state, but this is not guaranteed (the attractors may be limit cycles and the network may even be chaotic).
• When the recurrent connections are symmetric and there is no self-coupling, we can write an energy function such that at each time step the energy decreases or does not change.
• Under these conditions, the attractors of the network are stable fixed points, which are the local minima of the energy function.
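The binary dynamics and the stability condition above can be illustrated with a minimal sketch. It makes several assumptions that are not in the slides: neurons are updated one at a time (asynchronously), sgn(0) is taken as +1, the couplings are random Gaussian values made symmetric with zero diagonal, and the external input is zero. The names `sgn`, `local_field` and `run_async` are hypothetical helpers chosen for this illustration.

```python
import random

def sgn(x):
    # Assumption: a zero input maps to +1 (the slides do not specify this case).
    return 1 if x >= 0 else -1

def local_field(J, s, h0, i):
    # h_i = sum_j J_ij * s_j + h_i^0 (input from the network plus external input)
    return sum(J[i][j] * s[j] for j in range(len(s))) + h0[i]

def run_async(J, s, h0, max_sweeps=1000):
    # Update neurons one at a time until a full sweep changes nothing.
    s = list(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            new_state = sgn(local_field(J, s, h0, i))
            if new_state != s[i]:
                s[i] = new_state
                changed = True
        if not changed:
            return s, True   # fixed point reached
    return s, False          # gave up before reaching a fixed point

rng = random.Random(0)
N = 20

# Symmetric couplings with no self-coupling: J_ij = J_ji, J_ii = 0.
J = [[0.0] * N for _ in range(N)]
for i in range(N):
    for j in range(i + 1, N):
        J[i][j] = J[j][i] = rng.gauss(0.0, 1.0)

h0 = [0.0] * N                                  # no external input
s0 = [rng.choice([-1, 1]) for _ in range(N)]    # random initial state

s_final, converged = run_async(J, s0, h0)

# Stability condition: every neuron agrees in sign with its input.
is_stable = all(s_final[i] * local_field(J, s_final, h0, i) >= 0 for i in range(N))
print(converged, is_stable)
```

With symmetric couplings and no self-coupling, each flip in this asynchronous scheme lowers the energy, so the loop is expected to end in a fixed point where the stability condition holds for every neuron. Dropping the symmetry (for example, drawing `J[i][j]` and `J[j][i]` independently) removes that guarantee.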
Energy Function
• Mathematically, the conditions are:
$$J_{ij} = J_{ji}, \qquad J_{ii} = 0$$
• The energy is given by:
$$E(\mathbf{s}) = -\frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N} J_{ij}\, s_i s_j - \sum_{i=1}^{N} h_i^0 s_i$$
• And one can prove that:
$$E(t+1) - E(t) \le 0$$

Setting the Connections
• Our goal is to embed in the network stable steady states which will form the memory patterns.
• To ensure the existence of such states, we will choose symmetric connections, which guarantee the existence of an energy function.

Setting the Connections
• We will denote the P memory patterns by:
$$\xi^{\mu}, \qquad \mu = 1, 2, \dots, P$$
• For instance, for a network with 4 neurons and 3 memory patterns, the patterns $\xi^1$, $\xi^2$, $\xi^3$ are each a column vector of four $\pm 1$ entries.

Setting the Connections
• Hopfield proposed the following rule:
$$J_{ij} = \frac{1}{N}\sum_{\mu=1}^{P} \xi_i^{\mu}\, \xi_j^{\mu} \quad (i \ne j), \qquad J_{ii} = 0$$
  Here $1/N$ is a normalization factor and the sum is the correlation among neurons across memory patterns.

Choosing the Patterns to Store
• To enhance the capacity of the network we will choose patterns that are not similar to one another.
• In the Hopfield model, (-1) and (+1) are chosen with equal probabilities. In addition, there are no correlations among the neurons within a pattern and there are no correlations among patterns.

Memory Capacity
• Storing more and more patterns adds more constraints to the pattern of connections.
• There is a limit on the number of stable patterns that can be stored.
• In practice, at some point a new pattern will not be stable even if we set the network to this pattern.

Memory Capacity
• If we demand that at every pattern all neurons will be stable, we obtain:
$$P_{\max} = \frac{N}{2 \ln N}$$

Memory Capacity
• What happens to the system if we store more patterns?
• Initially, the network will still function as associative memory, although the local minima will differ from the memory states by a few bits.
• At some point, the network will abruptly stop functioning as associative memory.

Adding “Temperature”
• It is also interesting to consider the case of stochastic dynamics.
We add noise to the neuronal dynamics in analogy with the temperature in physical systems.
• Physiologically, the noise can arise from random fluctuations in synaptic release, delays in nerve conduction, fluctuations in ionic channels and more.

Adding “Temperature”
[Figure: P(s = 1) as a function of the input h; vertical axis from 0 to 1, horizontal axis h from -5 to 5.]

Adding “Temperature”
[Figure: P(s = -1) as a function of the input h; vertical axis from 0 to 1, horizontal axis h from -5 to 5.]

Adding “Temperature”
• Adding temperature has computational advantages: it drives the system out of spurious local minima, such that only the deep valleys in the energy landscape affect the dynamics.
• One approach is to start the system at high temperature and then gradually cool it down and allow it to stabilize (simulated annealing).
• In general, increasing the temperature reduces the storage capacity but can prevent undesirable attractors.

Associative Memory - Summary
• The Hopfield model is an example of connecting dynamical concepts (attractors and basins of attraction) with functional concepts (associative memory).
• The work pointed out the relation between neural networks and statistical physics and attracted many physicists to the field.

Associative Memory - Summary
• Over the years, models that are based on the same principles but are more biologically plausible were developed.
• Attractor networks are still useful in modelling a wide variety of phenomena.

References
• Hopfield, 1982 – Hopfield, J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences of the USA, 79:2554-2558.
• Hopfield, 1984 – Hopfield, J. (1984). Neurons with graded response have collective computational properties like those of two-state neurons. Proceedings of the National Academy of Sciences of the USA, 81:3088-3092.

References
• Amit, 1989 – Amit, D. Modeling Brain Function.
Cambridge University Press, 1989.
• Hertz et al., 1991 – John Hertz, Anders Krogh, Richard G. Palmer. Introduction to the Theory of Neural Computation. Addison-Wesley, 1991.

Associative Memory of Sensory Objects – Theory and Experiments
Misha Tsodyks, Dept of Neurobiology, Weizmann Institute, Rehovot, Israel
Joint work with Son Preminger and Dov Sagi

Experiments - Terminology
[Figure: two sets of face images, labelled "Friends …" and "Non Friends …".]

Experiment – Terminology (cont.)
Basic Friend or Non-Friend task (FNF task)
– Images of faces are flashed for 200 ms; for each image the subject is asked whether the image is a friend image (learned in advance) or not.
– 50% of images are friends, 50% non-friends, in random order; each friend is shown the same number of times.
– No feedback is given.
[Figure: trial timeline with 200 ms image presentations, each followed by a friend/non-friend (F/NF) response.]

Experiment – Terminology (cont.)
Morph Sequence
[Figure: morph sequence running from image 1, the source (friend), through images 20, 40, 60 and 80, to image 100, the target (unfamiliar).]

Two Pairs: Source and Target
[Figure: Pair 1 and Pair 2.]

FNF-Grad on Pair 1
[Figure: number of ‘Friend’ responses versus bin number for subject HL, days 1-18 (blue-green spectrum).]