Associative memory

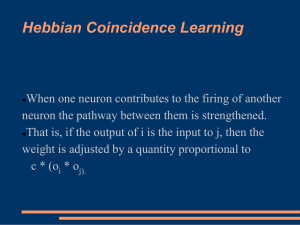

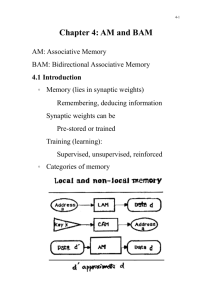

Pattern Association

Introduction

Pattern association involves associating a new pattern with a stored pattern. It is a “simplified” model of human memory.

Types of associative memory:

- Heteroassociative memory
- Autoassociative memory
- Hopfield net
- Bidirectional associative memory (BAM)

These are usually single-layer networks. The neural network is first trained to store a set of patterns in the form s : t, where s represents the input vector and t the corresponding output vector. The network's “memory” is then tested by using it to identify patterns containing incorrect or missing information.

Associative memory can be feedforward or recurrent.

Autoassociative memory cannot hold an infinite number of patterns. Factors that affect its capacity:

- Complexity of each pattern
- Similarity of input patterns

Heteroassociative Memory Architecture

[Figure: single-layer network in which every input unit $x_1, \dots, x_n$ is connected to every output unit $y_1, \dots, y_m$ through a weight $w_{ij}$.]

Heteroassociative Memory

The input and output vectors s and t are different. The Hebb rule is used as the learning algorithm; equivalently, the weight matrix can be calculated by summing the outer products of each input-output pair. The heteroassociative application algorithm is used to test the network.

The Hebb Algorithm

1. Initialize the weights to zero: $w_{ij} = 0$, where $i = 1, \dots, n$ and $j = 1, \dots, m$.
2. For each training case s : t, repeat:
   - Set $x_i = s_i$, where $i = 1, \dots, n$.
   - Set $y_j = t_j$, where $j = 1, \dots, m$.
   - Adjust the weights: $w_{ij}(\text{new}) = w_{ij}(\text{old}) + x_i y_j$, where $i = 1, \dots, n$ and $j = 1, \dots, m$.

Exercise

Train a heteroassociative neural network to store the following input and output vectors:

| Input vector s | Output vector t |
| --- | --- |
| 1 -1 -1 -1 | 1 -1 |
| 1 1 -1 -1 | 1 -1 |
| -1 -1 -1 1 | -1 1 |
| -1 -1 1 1 | -1 1 |

Test the neural network using all of the input data and the following input vector: 0 1 0 -1

Autoassociative Memory

The input and output vectors s and t are the same. The Hebb rule is used as the learning algorithm; equivalently, the weight matrix can be calculated by summing the outer products of each input-output pair.
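The outer-product form of the Hebb rule can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function names `hebb_weights`, `sign` and `recall` are hypothetical, and the recall step assumes a bipolar activation with threshold 0 (output 1 for positive net input, -1 for negative, 0 for zero), which the slides do not spell out. For an autoassociative network each pattern is simply paired with itself.

```python
def hebb_weights(pairs):
    """Hebb rule: sum the outer products of every training pair (s, t)."""
    n, m = len(pairs[0][0]), len(pairs[0][1])
    W = [[0] * m for _ in range(n)]          # weights start at zero
    for s, t in pairs:
        for i in range(n):
            for j in range(m):
                W[i][j] += s[i] * t[j]       # w_ij(new) = w_ij(old) + x_i * y_j
    return W


def sign(v):
    """Assumed activation: 1 if v > 0, -1 if v < 0, 0 if v == 0."""
    return (v > 0) - (v < 0)


def recall(W, x):
    """Compute each output unit's net input and apply the activation."""
    n, m = len(W), len(W[0])
    return [sign(sum(x[i] * W[i][j] for i in range(n))) for j in range(m)]


# Autoassociative case: the pattern is paired with itself.
pattern = [1, 1, 1, -1]
W = hebb_weights([(pattern, pattern)])
print(W)
print(recall(W, pattern))           # the stored pattern itself
print(recall(W, [-1, 1, 1, -1]))    # the pattern with one component flipped
```

For a heteroassociative network the same function is called with distinct input and output vectors in each pair.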
The autoassociative application algorithm is used to test the network.

Autoassociative Memory Architecture

[Figure: single-layer network in which every input unit $x_1, \dots, x_n$ is connected to every output unit $y_1, \dots, y_n$ through a weight $w_{ij}$.]

Exercise

Store the pattern [1 1 1 -1] in an autoassociative neural network. Test the neural network on the following inputs:

- [1 1 1 -1]
- [-1 1 1 -1]
- [1 -1 1 -1]
- [1 1 -1 -1]
- [1 1 1 1]
- [0 0 1 -1]
- [0 1 0 -1]
- [0 1 1 0]

The Hopfield Neural Network

The Hopfield network is a recurrent associative memory neural network.

Application algorithm

Exercise: Store the pattern [1 1 1 0] using a Hopfield neural network. Test the neural network to see whether it is able to correctly identify the input vector [0 1 1 0], which differs from the stored pattern in its first component. Note: $\theta_i = 0$ for $i = 1, \dots, 4$.

Bidirectional Associative Memory (BAM)

A BAM consists of two layers, x and y. Signals are sent back and forth between the two layers until an equilibrium is reached, that is, until the x and y vectors no longer change. Given an x vector, the BAM is able to produce the corresponding y vector, and vice versa.

Application algorithm

BAM Exercise

Store the vectors representing the following patterns using a BAM:

- [1 -1 1] with the output vector [1 -1]
- [-1 1 -1] with the output vector [-1 1]

Use $\theta_i = 0$ and $\theta_j = 0$ for $i = 1, \dots, 3$ and $j = 1, 2$.
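The back-and-forth recall described for the BAM can be sketched in plain Python as follows. This is a hedged illustration rather than the algorithm from the slides: the names `bam_weights`, `activate` and `bam_recall` are hypothetical, the weights are assumed to come from the Hebb rule (sum of outer products), and the activation is assumed to be bipolar with threshold θ, keeping a unit's previous value when its net input equals θ.

```python
def bam_weights(pairs):
    """Assumed Hebb-rule weights: sum of outer products of each (x, y) pair."""
    n, m = len(pairs[0][0]), len(pairs[0][1])
    W = [[0] * m for _ in range(n)]
    for x, y in pairs:
        for i in range(n):
            for j in range(m):
                W[i][j] += x[i] * y[j]
    return W


def activate(net, previous, theta=0):
    """1 above the threshold, -1 below it, unchanged when exactly equal."""
    return [1 if v > theta else -1 if v < theta else p
            for v, p in zip(net, previous)]


def bam_recall(W, x=None, y=None, max_iters=100):
    """Pass signals between the x and y layers until neither layer changes."""
    n, m = len(W), len(W[0])
    x = list(x) if x is not None else [0] * n
    y = list(y) if y is not None else [0] * m
    for _ in range(max_iters):
        # x layer -> y layer
        y_in = [sum(x[i] * W[i][j] for i in range(n)) for j in range(m)]
        new_y = activate(y_in, y)
        # y layer -> x layer
        x_in = [sum(W[i][j] * new_y[j] for j in range(m)) for i in range(n)]
        new_x = activate(x_in, x)
        if new_x == x and new_y == y:    # equilibrium reached
            break
        x, y = new_x, new_y
    return x, y


W = bam_weights([([1, -1, 1], [1, -1]), ([-1, 1, -1], [-1, 1])])
print(bam_recall(W, x=[1, -1, 1]))   # recall y from a stored x
print(bam_recall(W, y=[-1, 1]))      # recall x from a stored y
```

Starting from either layer, the loop stops as soon as one full pass leaves both vectors unchanged; `max_iters` is only a safety bound added for this sketch.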