Farber


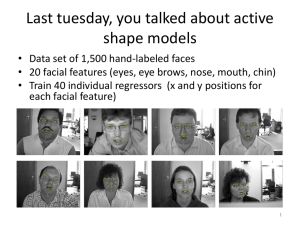

Massively Parallel Near-Linear Scalability Algorithms with Application to Unstructured Video Analysis
Robert Farber and Harold Trease, Pacific Northwest National Laboratory
Acknowledgments: Adam Wynne (PNNL), Lynn Trease (PNNL), Tim Carlson (PNNL), Ryan Mooney (now at google.com)

Image/Video Analysis and Applications
"Have we seen this person's face before?"
- Goals: image/video content analysis with 1 million frames per second of processing capability (~1 TByte/sec)
- Streaming, unstructured video data is high-volume, low-information-content data
- Huge volumes of archival data
- Requirement: scalable algorithms that transform unstructured data into large sparse graphs for analysis
- This talk focuses on the Principal Component Analysis (PCA) of video signatures; the framework is generally applicable to other problems!
- Video analysis has many applications: face recognition (and object recognition), social networks, and many others

First Task: Isolate the Faces
[Figure panels: 1. Original frame; 2. RGB-to-HSI conversion; 3. Sobel edge detection; 4. Only skin pixels. The bottom row contains frames of skin-pixel patches that identify the three faces in this frame.]

Workflow
- Archival data: YouTube (huge!); 10k cameras = ~300,000 fps = ~300 GB/sec
- Split into frames and calculate entropic measures, producing signatures
- Algorithms: PCA, NLPCA, MDS, clustering, others
- Results: 1. Frames/faces are separable; 2. Faces form trajectories; 3. Face DB; 4. Derive social networks

The first steps of the workflow are embarrassingly parallel:
1. Split the video into separate frames.
2. Calculate the signature of each frame and write it to a file.

Working with large data sets (Think BIG: 10^8 signatures and greater)
- Formulate PCA (NLPCA, MDS, and others) as an objective function
- Use your favorite solver (Conjugate Gradient)
- Map to massively parallel hardware (SIMD, MIMD, SPMD, etc.): Ranger, NVIDIA GPUs, others
Massive parallelism is needed to handle large data sets:
- 10,000 video cameras = ~300,000 fps = ~300 GB/sec
- Consider all of YouTube as a video archive
- Our Supercomputing 2005 data set = 2.2M frames
- The test YouTube data set consisted of over 22M frames

Formulate PCA as an objective function
$E = \mathrm{func}(p_1, p_2, \ldots, p_n)$
Calculate the PCA by passing information through a bottleneck layer in a linear feed-forward neural network:
- Erkki Oja, "Simplified neuron model as a principal component analyzer", Journal of Mathematical Biology 15(3): 267-273, November 1982.
- Terence D. Sanger, "Optimal unsupervised learning in a single-layer linear feedforward neural network", Neural Networks 2(6): 459-473, 1989.
Use your favorite solver (Conjugate Gradient …):
- William H. Press, Saul A. Teukolsky, William T. Vetterling, and Brian P. Flannery, "Numerical Recipes in C: The Art of Scientific Computing", Cambridge University Press, 1993.

Pass the information through a bottleneck
[Figure: linear feed-forward network with an input layer (I), a bottleneck layer (B), and an output layer (O). Each bottleneck unit is an offset plus a weighted sum of the nInput inputs:]
$B_1 = P_{\mathrm{offset}} + \sum_{j=1}^{n_{\mathrm{Input}}} P_{B,j}\, I_j$

Map to massively parallel hardware
- Large data sets require a parallel data load to deliver the necessary bandwidth
- Use Lustre because it scales: PNNL achieved 136 GB/s sustained read and 86 GB/s sustained write
- Data are partitioned equally across all processing cores: Core 1 holds examples 1 to N, Core 2 holds N+1 to 2N, Core 3 holds 2N+1 to 3N, and Core 4 holds 3N+1 to 4N
Loading steps:
1. Broadcast the filename plus the data size and file offset to each MPI client.
2. Each client opens the data file, seeks to its location, and reads the appropriate data.

Evaluate the objective function in a massively parallel manner
An optimization routine (Powell, conjugate gradient, or another method) repeatedly evaluates the objective function $\mathrm{Energy} = \mathrm{func}(P_1, P_2, \ldots, P_N)$, with each core holding its own block of examples (examples 1 to N on Core 1, N+1 to 2N on Core 2, and so on):
1. Broadcast the parameters P_1, P_2, ..., P_N to every core (cost scales with the number of parameters, P).
2. Each core calculates the partial energy over its own examples (cost scales with data/Nproc).
3. Sum the partial energies (a reduction costing O(log2(Nproc))).

Report effective rate
$$\mathrm{EffectiveRate} = \frac{\mathrm{TotalOpCount}}{T_{\mathrm{broadcastParam}} + T_{\mathrm{func}} + T_{\mathrm{reduce}}}$$
Every evaluation of the objective function requires:
- Broadcasting a new set of parameters
- Calculating the partial sum of the errors on each node
- Obtaining the global sum of the partial sums of the errors
T_reduce is highly network dependent: low bandwidth and/or high latency is bad!
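The "First Task: Isolate the Faces" slide names two standard image-processing stages, RGB-to-HSI conversion and Sobel edge detection. The Python sketch below shows textbook versions of both on plain lists of pixels. It is not the authors' implementation: the list-of-rows image layout and the zero-valued border are assumptions, and the skin-pixel thresholds behind panel 4 are not given in the slides, so they are left out.

```python
import math

def rgb_to_hsi(r, g, b):
    """Convert one RGB pixel (0-255 channels) to (hue in degrees, saturation, intensity).

    Uses the common geometric RGB-to-HSI definition. Hue is undefined for gray
    (and black) pixels; this sketch reports it as 0.
    """
    total = r + g + b
    if total == 0:
        return 0.0, 0.0, 0.0
    intensity = total / 3.0
    saturation = 1.0 - 3.0 * min(r, g, b) / total
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        return 0.0, saturation, intensity
    # Clamp before acos to guard against rounding slightly outside [-1, 1].
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    hue = 360.0 - theta if b > g else theta
    return hue, saturation, intensity

# Standard 3x3 Sobel kernels for the horizontal (x) and vertical (y) gradients.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))

def sobel_magnitude(img):
    """Gradient magnitude of a grayscale image given as a list of equal-length rows.

    Border pixels are left at 0.0 (an assumption; padding schemes vary).
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    p = img[y + dy][x + dx]
                    gx += SOBEL_X[dy + 1][dx + 1] * p
                    gy += SOBEL_Y[dy + 1][dx + 1] * p
            out[y][x] = math.hypot(gx, gy)
    return out

# A vertical step edge: dark left half, bright right half.
edges = sobel_magnitude([[0, 0, 10, 10]] * 4)
print(edges[1])  # → [0.0, 40.0, 40.0, 0.0]
```

Separating intensity from hue and saturation is what makes a color-based skin test largely independent of lighting level, which is presumably why the conversion precedes the skin-pixel step.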
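The workflow slides say each frame's signature is built from "entropic measures" but do not define them. As a minimal sketch under that gap, the hypothetical `frame_signature` below tiles a grayscale frame into a grid and records the Shannon entropy (in bits) of each tile's intensity values. The grid size and the use of raw intensities are assumptions. Because every frame is handled independently, this step is embarrassingly parallel, as the slides note.

```python
import math
from collections import Counter

def shannon_entropy(values):
    """Shannon entropy, in bits, of the empirical distribution of `values`."""
    counts = Counter(values)
    if len(counts) <= 1:
        return 0.0  # zero or one distinct value carries no information
    n = sum(counts.values())
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())

def frame_signature(frame, grid=2):
    """Hypothetical signature: per-tile entropies of a grid x grid tiling
    of a grayscale frame (list of equal-length rows), in row-major tile order."""
    h, w = len(frame), len(frame[0])
    signature = []
    for ty in range(grid):
        for tx in range(grid):
            rows = frame[ty * h // grid:(ty + 1) * h // grid]
            tile = [v for row in rows for v in row[tx * w // grid:(tx + 1) * w // grid]]
            signature.append(shannon_entropy(tile))
    return signature

flat = [[7] * 4] * 4               # constant frame: no information
stripes = [[0, 255, 0, 255]] * 4   # every tile holds two equally likely values
print(frame_signature(flat))       # → [0.0, 0.0, 0.0, 0.0]
print(frame_signature(stripes))    # → [1.0, 1.0, 1.0, 1.0]
```

A fixed-length vector like this is the kind of N-d feature vector that the later slides project into 3-D with PCA.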
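The slides formulate PCA as minimizing an energy $E = \mathrm{func}(p_1, \ldots, p_n)$ over the weights of a linear bottleneck network (citing Oja and Sanger), but they do not spell out the energy. The sketch below assumes the summed squared reconstruction error. Its bottleneck units follow the "offset plus weighted sum of inputs" form from the bottleneck slide. The flat parameter layout in `unpack`, and the names `energy` and `n_params`, are hypothetical.

```python
def unpack(p, n_in, n_b):
    """Split a flat parameter vector into (W, bw, V, bv).

    Hypothetical layout: W is n_b x n_in (input -> bottleneck), bw holds the
    n_b bottleneck offsets, V is n_in x n_b (bottleneck -> output), and bv
    holds the n_in output offsets.
    """
    k = 0
    W = [p[k + i * n_in:k + (i + 1) * n_in] for i in range(n_b)]
    k += n_b * n_in
    bw = p[k:k + n_b]
    k += n_b
    V = [p[k + j * n_b:k + (j + 1) * n_b] for j in range(n_in)]
    k += n_in * n_b
    bv = p[k:k + n_in]
    return W, bw, V, bv

def n_params(n_in, n_b):
    """Length of the flat parameter vector for the layout above."""
    return 2 * n_in * n_b + n_b + n_in

def energy(p, examples, n_in, n_b):
    """Sum of squared reconstruction errors of a linear bottleneck network."""
    W, bw, V, bv = unpack(p, n_in, n_b)
    total = 0.0
    for x in examples:
        # Bottleneck unit i: offset plus a weighted sum of the inputs.
        b = [bw[i] + sum(W[i][j] * x[j] for j in range(n_in)) for i in range(n_b)]
        # The linear output layer tries to reproduce the input.
        y = [bv[j] + sum(V[j][i] * b[i] for i in range(n_b)) for j in range(n_in)]
        total += sum((yj - xj) ** 2 for yj, xj in zip(y, x))
    return total

# 2-D points on the line x0 == x1 compress losslessly through one bottleneck unit.
data = [(1.0, 1.0), (2.0, 2.0), (-1.0, -1.0)]
p_good = [0.5, 0.5, 0.0, 1.0, 1.0, 0.0, 0.0]
print(energy(p_good, data, 2, 1))                  # → 0.0
print(energy([0.0] * n_params(2, 1), data, 2, 1))  # → 12.0
```

Any general-purpose minimizer, such as the conjugate gradient or Powell methods mentioned in the slides, can be pointed at `energy`. For linear networks of this kind, the optimal bottleneck spans the same subspace as the leading n_b principal components of the (centered) data. The individual weight vectors, however, are only determined up to an invertible linear transform.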
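The "Map to massively parallel hardware" slide gives each core a contiguous block of examples and has each MPI client seek to its own file offset. The sketch below computes that plan and performs the per-client seek and read against an in-memory stand-in for the shared file. It assumes fixed-size 80-byte examples (the size used in the data-size benchmark), an optional header, and remainders spread over the first ranks. It also computes the offsets locally from the rank rather than broadcasting them. The names `partition` and `read_block` are hypothetical.

```python
import io

def partition(n_examples, n_proc, example_bytes=80, header_bytes=0):
    """Return (first_example, count, byte_offset) for each rank.

    Contiguous blocks as on the slide; when n_examples is not divisible by
    n_proc, the first (n_examples % n_proc) ranks take one extra example.
    """
    base, extra = divmod(n_examples, n_proc)
    plan, start = [], 0
    for rank in range(n_proc):
        count = base + (1 if rank < extra else 0)
        plan.append((start, count, header_bytes + start * example_bytes))
        start += count
    return plan

def read_block(f, offset, count, example_bytes=80):
    """What each client does: seek to its offset and read its examples."""
    f.seek(offset)
    return f.read(count * example_bytes)

# Stand-in for the shared data file: 10 fixed-size 80-byte examples,
# where every byte of example i has the value i.
data_file = io.BytesIO(b"".join(bytes([i]) * 80 for i in range(10)))
for rank, (first, count, offset) in enumerate(partition(10, 4)):
    block = read_block(data_file, offset, count)
    print(rank, first, count, offset, block[0], len(block))
```

On a parallel file system such as Lustre, each rank would open the same file and issue its own read. This is what lets the aggregate load bandwidth grow with the number of clients.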
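The three-step evaluation (broadcast, partial energies, sum) and the effective-rate formula can be simulated in a single Python process. In the sketch below, the list of `shards` stands in for the cores and a list copy stands in for the parameter broadcast. A pairwise tree sum stands in for the reduction, whose depth grows as log2(Nproc). With MPI these steps would typically map to `MPI_Bcast`, local computation, and `MPI_Reduce` (or `MPI_Allreduce`). The function names and the toy least-squares energy are hypothetical.

```python
def tree_reduce(values):
    """Pairwise (tree) sum of per-core partial results.

    Each round halves the number of partial sums, so n values need
    ceil(log2(n)) rounds. Returns (total, rounds).
    """
    vals, rounds = list(values), 0
    while len(vals) > 1:
        vals = [vals[i] + (vals[i + 1] if i + 1 < len(vals) else 0.0)
                for i in range(0, len(vals), 2)]
        rounds += 1
    return vals[0], rounds

def evaluate(params, shards, partial_energy):
    """Simulated three-step evaluation of Energy = func(P_1, ..., P_N)."""
    # Step 1: broadcast; every core gets its own copy of the parameters.
    copies = [list(params) for _ in shards]
    # Step 2: each core computes the partial energy of its own examples.
    partials = [partial_energy(p, shard) for p, shard in zip(copies, shards)]
    # Step 3: sum the partial energies with a tree reduction.
    return tree_reduce(partials)

def effective_rate(total_op_count, t_broadcast_param, t_func, t_reduce):
    """EffectiveRate = TotalOpCount / (T_broadcastParam + T_func + T_reduce)."""
    return total_op_count / (t_broadcast_param + t_func + t_reduce)

def squared_error(p, shard):
    """Toy partial energy: fit y = a * x with a = p[0] over (x, y) pairs."""
    return sum((p[0] * x - y) ** 2 for x, y in shard)

shards = [[(1, 2)], [(2, 4)], [(3, 6)], [(4, 8)]]   # one example per core
print(evaluate([2.0], shards, squared_error))       # → (0.0, 2)
print(evaluate([1.0], shards, squared_error))       # → (30.0, 2)
print(effective_rate(8e9, 0.25, 0.5, 0.25))         # → 8000000000.0
```

Because T_reduce sits in the denominator of every evaluation, a slow or high-latency network lowers the effective rate even when T_func shrinks as cores are added. This matches the slide's warning.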
Very efficient and near-linear scaling on Ranger
[Figure: Ranger scaling as a function of number of cores; y-axis: effective rate (GF/s), x-axis: number of cores.]
Note: 32k- and 64k-core runs will occur when possible.

Reduce operation does affect scaling
[Figure: performance per core (GF/s) for various run sizes; y-axis: GF/s per core, x-axis: number of cores.]

Objective function performance scaling by data size on Ranger (synthetic benchmark with no communications)
[Figure, without prefetch: 16-way performance vs. data size; y-axis: GF/s, x-axis: number of 80-byte examples.] Achieved 8 GF/s per core using SSE; interesting performance segregation.
[Figure, with prefetch: 16-way performance vs. data size; y-axis: GF/s, x-axis: number of 80-byte examples.] Achieved nearly 8 GF/s per core; bizarre jump at 800k examples.

Most time (> 90%) is spent in the objective function when solving the PCA problem

| Number of cores | Calls to the objective function | Wall-clock time in optimization routine (%) | Wall-clock time in objective function (%) |
|---|---|---|---|
| 256 | 1,173,533 | 91.11 | 90.89 |
| 512 | 1,142,588 | 90.83 | 90.44 |
| 2048 | 1,141,565 | 93.79 | 92.55 |
| 4096 | 1,142,557 | 95.08 | 93.22 |

Note: data sizes were kept constant per node, which meant each trial trained on different data.

Mapping works with other problems (and architectures)
- The SIMD version has been used by Farber since the early 1980s on 64k-processor Connection Machines (and other SIMD, MIMD, SPMD, vector, and cluster architectures):
  - R. M. Farber, "Efficiently Modeling Neural Networks on Massively Parallel Computers", Los Alamos National Laboratory Technical Report LA-UR-92-3568.
  - Kurt Thearling, "Massively Parallel Architectures and Algorithms for Time Series Analysis", in 1993 Lectures in Complex Systems, edited by L. Nadel and D. Stein, Addison-Wesley, 1995.
  - Alexander Singer, "Implementations of Artificial Neural Networks on the Connection Machine", Technical Report RL90-2, Thinking Machines Corporation, 245 First Street, Cambridge, MA 02142, January 1990.
- Many different applications aside from PCA: Independent Component Analysis, K-means, Fourier approximation, Expectation Maximization, logistic regression, Gaussian Discriminative Analysis, locally weighted linear regression, Naïve Bayes, Support Vector Machines, and others
[Figure: performance of NVIDIA CUDA-enabled GPUs (courtesy of NVIDIA Corp.); y-axis: effective rate (GF/s), x-axis: number of GPUs.]

PCA components form trajectories in 3-space
1. Separable trajectories: we can build a face DB!
   - Different faces form separate tracks
   - The same faces are continuous across cameras
2. Multiple faces extracted from individual frames let us infer social networks!

Preliminary results using PCA
Public ground-truth data sets are scarce; this is work in progress. PCA was the first step (funding limited); NLPCA, MDS, and other methods promise to increase accuracy.

Using the Euclidean distance between points as a recognition metric gave 99.9% accuracy in one data set, with 2 false positives in a 2k database of known faces. Each face in the database was compared against the entire database as a self-consistency check.
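The recognition result above uses the Euclidean distance between PCA-space points and checks every face against the whole database. The sketch below assumes a leave-one-out reading of that check (an entry is never matched to itself) together with labeled identities and nearest-neighbor matching. The slide does not say how false positives were counted, so `mismatches` is only an illustrative stand-in, and `nearest` and `self_consistency` are hypothetical names.

```python
import math

def nearest(db, query, exclude=None):
    """Index of the database entry whose vector is closest to `query` (Euclidean)."""
    best, best_d = None, math.inf
    for i, (_, vec) in enumerate(db):
        if i == exclude:
            continue
        d = math.dist(vec, query)
        if d < best_d:
            best, best_d = i, d
    return best

def self_consistency(db):
    """Leave-one-out check: match each entry against all other entries.

    Returns (accuracy, mismatches), where a mismatch means the nearest other
    entry carries a different identity label.
    """
    hits = 0
    for i, (label, vec) in enumerate(db):
        j = nearest(db, vec, exclude=i)
        if j is not None and db[j][0] == label:
            hits += 1
    return hits / len(db), len(db) - hits

faces = [("alice", (0.0, 0.0)), ("alice", (0.1, 0.0)),
         ("bob", (5.0, 5.0)), ("bob", (5.1, 5.0)),
         ("carol", (0.3, 0.0))]
print(self_consistency(faces))  # → (0.8, 1)
```

This brute-force check costs O(n^2) distance evaluations. For a 2k-face database that is about four million distances, which is small. A spatial index would only matter for much larger databases.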
Social networks have been created and are being evaluated; again, ground-truth data is scarce.

Summary: High-Performance Video Analysis
[Figure panels: SC05 videos; streaming video; face database; building social network graphs from face data and the face DB; social network; partitioning face-based graphs to discover relationships.]

Two video examples (in conjunction with Blogosphere text analysis by Michelle Gregory and Andrew Cowell)
- 351 videos, ~3.6 million frames, ~4.4 TBytes
- 512 YouTube videos, ~22.6 million frames, ~5.2 TBytes
[Figure: each point is a video frame and each color is a different video; coordinates are the PCA projection of the N-d feature vector into 3-D.]

Connecting the points and forming the sparse graph connectivity for analysis
- Delaunay/Voronoi mesh: shows the mesh connections, where "points" are connected by "edges", which together form a graph
- Adjacency matrix: each row represents a frame; the columns represent the frames connected to it
- Clusters and the social network define how one frame (face) relates to another

Graph partitioning (using Voronoi/Delaunay mesh-connected graphs)
[Figure panels: point distribution; Delaunay/Voronoi mesh; adjacency before partitioning; adjacency after partitioning; partitioned mesh.]

Classification, Characterization and Clustering of High-Dimensional Data
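The "Connecting the points" slide turns the Delaunay mesh into a sparse adjacency matrix with one row per frame. The sketch below assumes the Delaunay simplices have already been computed (for example, `scipy.spatial.Delaunay` exposes them through its `simplices` attribute). It derives the edges and stores the adjacency in compressed sparse row (CSR) form. The function name and the CSR choice are illustrative, not the authors' data structure.

```python
from itertools import combinations

def simplices_to_csr(n_points, simplices):
    """Build a symmetric CSR adjacency structure from mesh simplices.

    Every pair of vertices that share a simplex (triangle in 2-D, tetrahedron
    in 3-D) becomes an undirected edge. Row i's neighbors are
    indices[indptr[i]:indptr[i + 1]].
    """
    neighbors = [set() for _ in range(n_points)]
    for simplex in simplices:
        for u, v in combinations(simplex, 2):
            neighbors[u].add(v)
            neighbors[v].add(u)
    indptr, indices = [0], []
    for nbrs in neighbors:
        indices.extend(sorted(nbrs))
        indptr.append(len(indices))
    return indptr, indices

# A unit square split into two triangles that share the diagonal 0-2.
indptr, indices = simplices_to_csr(4, [(0, 1, 2), (0, 2, 3)])
print(indptr)   # → [0, 3, 5, 8, 10]
print(indices)  # → [1, 2, 3, 0, 2, 0, 1, 3, 0, 2]
```

Because each point in a Delaunay mesh connects to only a handful of neighbors, the CSR arrays grow roughly linearly with the number of frames. A dense matrix would grow quadratically.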
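The graph-partitioning slide contrasts the adjacency matrix before and after partitioning, but it does not name the partitioner. The sketch below therefore takes partition labels as given (hypothetical labels, as might come from a tool such as METIS). It renumbers the vertices so each partition is contiguous, which makes the matrix nearly block-diagonal. It also reports the edge cut, the usual measure of partition quality. The function name and example graph are illustrative.

```python
def permute_by_partition(indptr, indices, part):
    """Renumber vertices of a CSR graph so each partition is contiguous.

    Returns (new_indptr, new_indices, order, edge_cut); order[k] is the old id
    of new vertex k, and edge_cut counts undirected edges crossing partitions.
    """
    n = len(part)
    order = sorted(range(n), key=lambda v: (part[v], v))
    pos = {old: new for new, old in enumerate(order)}
    new_indptr, new_indices = [0], []
    for old in order:
        row = indices[indptr[old]:indptr[old + 1]]
        new_indices.extend(sorted(pos[v] for v in row))
        new_indptr.append(len(new_indices))
    edge_cut = sum(1 for u in range(n)
                   for v in indices[indptr[u]:indptr[u + 1]]
                   if u < v and part[u] != part[v])
    return new_indptr, new_indices, order, edge_cut

# Two triangles {0, 2, 4} and {1, 3, 5} joined by the single edge 4-5,
# numbered so that the clusters interleave in the original ordering.
indptr = [0, 2, 4, 6, 8, 11, 14]
indices = [2, 4, 3, 5, 0, 4, 1, 5, 0, 2, 5, 1, 3, 4]
part = [0, 1, 0, 1, 0, 1]   # hypothetical labels from some partitioner
new_indptr, new_indices, order, cut = permute_by_partition(indptr, indices, part)
print(order, cut)   # → [0, 2, 4, 1, 3, 5] 1
```

After renumbering, rows 0 to 2 reference only columns 0 to 2 and rows 3 to 5 reference only columns 3 to 5. The single exception is the one cut edge, which is the "adjacency after partitioning" picture in miniature.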