Slides

advertisement

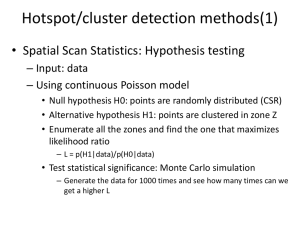

Presented by: GROUP 7 Gayathri Gandhamuneni & Yumeng Wang AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Validation Results Conclusions Future Work AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Validation Results Conclusions Future Work PROBLEM STATEMENT Input: Two different Clustering algorithms (DBScan, SatScan) Same Input Dataset Criteria of Comparison Output: Result of Comparison – Data / Graph Constraints: DBScan – No data about efficiency SatScan Software – 1 pre defined shape Objective: Usage Scenarios – Which algorithm can be used where? AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Validation Results Conclusions Future Work MOTIVATION/NOVELTY Different clustering algorithms Categorized into different types Comparisons Algorithms - Same category No Systematic way of comparison, Biased Comparisons No situation based comparison – Which to use where? No comparison betn. DBScan & SatScan AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Validation Results Conclusions Future Work RELATED WORK Comparison of Clustering Algorithms Same type of Algorithms Density Based – DBScan & OPTICS Density Based – DBScan & SNN Different type of Algorithms DBScan Vs K-Means Our Work – DBSCan Vs SatScan K-means (Centroid Based) Vs Hierarchical, Expectation Vs Maximization (Distance Based) AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Validation Results Conclusions Future Work PROPOSED APPROACH Our Approach: Two different types of Clustering algorithms DBScan SatScan Unbiased comparison Systematic – 3 factors & Same Input datasets Shape of the cluster Statistical Significance Scalability AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Challenges Validation Results Conclusions Future Work KEY CONCEPTS - 1 Clustering Task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar to each other than to those in other groups. Data Mining, Statistical Analysis & many more fields Real world Application: Earthquake studies: Clustering observed earthquake epicenters to identify dangerous zones Field Robotics: For robotic situational awareness to track objects and detect outliers in sensor data KEY CONCEPTS - 2 Types of Clustering Algorithms ……… Connectivity based / Hierarchical Core Idea Objects being more related to nearby objects (distance) than to objects farther away Centroid Based Core IdeaClusters are represented by a central vector, which may not necessarily be a member of the data set Ex: K - Means Distribution Based Core Idea Clusters can be defined as objects belonging most likely to the same distribution Density Based Core Idea Clusters are areas of higher density than the remainder of the data set KEY CONCEPTS - DBSCAN Density based Clustering Arguments Minimum number of Points – MinPts Radius - Eps Density = Number of Points within specified radius (Eps) Three types of Points Core Point – No. of points > MinPts within Eps Border point – No. of Points < MinPts within Eps but is in neighborhood of a core point Noise point - Neither a core point nor a border point EXAMPLE - DBSCAN Dataset 1 : DBSCAN RESULTS - 1 DB Scan o/p on dataset1: Min-Neighbors=3, Radius = 5 150 Number of Clusters = 36 100 50 0 -50 -100 -150 -150 -100 -50 0 50 100 150 DBSCAN RESULTS - 2 DB Scan o/p on dataset1: Min-Neighbors=7, Radius = 1 150 100 Number of clusters =0 50 0 -50 -100 -150 -150 -100 -50 0 50 100 150 DBSCAN RESULTS - 3 DB Scan o/p on dataset1: Min-Neighbors=20,Radius = 20 150 Number of clusters =4 100 50 0 -50 -100 -150 -150 -100 -50 0 50 100 150 KEY CONCEPTS - 3 SaTScan – Spatial Scan Statistics Input: Dataset null hypothesis model Procedure: Pre-defined shape scanning window Variating size of the window Calculate likelyhood ratio => Most Likely clusters Test statistical significance (Monte Carlo Sampling, 1000 runs) Significant/primary Output: Clusters with p-value Insignificant/secondary AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Challenges Validation Results Conclusions Future Work CHALLENGES Tuning parameters - DBScan Manual tuning to detect clusters Hard to set correct parameters Design of appropriate Datasets To demonstrate Criteria of Comparison AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Challenges Validation Results Conclusions Future Work VALIDATION Experiment Assumptions based on theory Designing datasets and running experiment Able to validate them with results AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Challenges Validation Results Conclusions Future Work CLUSTER SHAPE - DBSCAN Vs SatScan CLUSTER SHAPE - DBSCAN Vs SatScan STATISTICAL SIGNIFICANCE DBScan k = 5, eps = 5 SATScan 100 100 90 90 80 80 70 70 60 60 50 50 40 40 30 30 20 20 10 10 0 0 10 20 30 40 50 60 70 80 90 100 CSR Dataset -1000 points 0 0 10 20 30 40 50 60 70 80 90 100 STATISTICAL SIGNIFICANCE DBScan k = 2, eps = 10 SATScan 500 500 450 450 400 400 350 350 300 300 250 250 200 200 150 150 100 100 50 50 0 0 50 100 150 200 250 300 350 400 450 500 0 0 50 100 CSR Dataset - 2000 points 150 200 250 300 350 400 450 500 RUNTIME – Number of Points - DBScan DBScan 3.5 3.252 3 2.5 Runtime/s 2.194 2 1.5 1.31 1.011 1 0.611 0.5 0.066 0.069 0 500 1000 0.384 1500 2000 2500 3000 3500 Number of Data Points 4000 4500 5000 RUNTIME – Number of Points - SATScan SATScan 4500 4085 4000 3500 Runtime/s 3000 2566 2500 2000 1443 1500 965 1000 600 500 0 332 1 0 39 500 39 1000 1500 2000 2500 3000 Number of points 3500 4000 4500 5000 RUNTIME – Number of Clusters – DB Vs SAT 100 100 100 80 80 80 60 60 60 40 40 40 20 20 20 0 0 20 40 60 80 100 0 100 100 80 80 60 60 40 40 20 20 0 0 20 40 60 80 100 0 0 20 40 60 80 100 0 20 40 60 80 100 Datasets: 3000 points 0 0 20 40 60 80 100 RUNTIME – Number of Clusters – DBScan DBScan 0.7 0.65 0.641 0.6 Runtime/s 0.55 0.533 0.5 0.45 0.434 0.4 0.346 0.35 0.302 1 1.5 2 2.5 3 3.5 Number of Clusters 4 4.5 5 RUNTIME – Number of Clusters – SATScan SATScan 900 843 800 Runtime/s 700 686 600 549 500 432 400 325 300 1 1.5 2 2.5 3 3.5 Number of Clusters 4 4.5 5 AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Challenges Validation Results Conclusions Future Work CONCLUSIONS S.N o 1 Factor of Comparison Number of clusters not known beforehand DBSCAN SATSCAN Yes 2 Shape: Data has different shaped clusters 3 Runtime: How much time to form clusters? Less runtime 4 Scalability: How well it scales when data size is increased Still manageable runtime - Runtime α Size, Number of Curse of dimensionality clusters 5 Statistical Significance: How significant are the clusters detected? No significance factor 6 Noise: Is noise allowed or should all points be in Yes Yes No - Only 1 shape of clusters (Circle, ellipse, rectangle.. ) More runtime Iterative approach to detect clusters and Monte Carlo Sampling too Yes Significance is at the core Yes AGENDA Problem Statement Motivation / Novelty Related Work & Our Contributions Proposed Approach Key Concepts Challenges Validation Results Conclusions Future Work FUTURE WORK Same project – Real World Datasets Run more instances of the experiments Control over parameters Compare with other types of clustering algorithms QUESTIONS? BACKUP SLIDE - 1 DBSCAN requires two parameters: epsilon (eps) and minimum points (minPts). It starts with an arbitrary starting point that has not been visited. It then finds all the neighbor points within distance eps of the starting point. If the number of neighbors is greater than or equal to minPts, a cluster is formed. The starting point and its neighbors are added to this cluster and the starting point is marked as visited. The algorithm then repeats the evaluation process for all the neighbors recursively. If the number of neighbors is less than minPts, the point is marked as noise. If a cluster is fully expanded (all points within reach are visited) then the algorithm proceeds to iterate through the remaining unvisited points in the dataset. BACKUP SLIDE 2 -CONCLUSIONS DBScan Works Same density clusters Don’t know the number of clusters beforehand Different shaped clusters All points need not be in clusters – Noise concept is present DBScan doesn’t work Varying density clusters Quality of DBScan depends on – Epsilon – If Euclidean distance High dimension data – Curse of dimensionality TO DO CLUSTER SHAPE - DBSCAN Results 150 5 30 100 20 0 50 10 0 -5 0 -50 -10 -10 -100 -150 -150 -20 -100 -50 0 50 100 150 -15 -15 40 15 30 10 20 -10 -5 0 5 10 15 -30 -30 -20 -10 0 10 20 20 40 60 80 100 100 80 5 60 10 0 0 40 -5 -10 -30 -30 20 -10 -20 -20 -10 0 10 20 30 -15 -10 -5 0 5 10 0 0 SHAPE - SATSCAN RESULTS 150 5 30 100 20 0 50 10 0 -5 0 -50 -10 -10 -100 -150 -150 -20 -100 -50 0 50 100 150 -15 -15 40 15 30 10 20 -10 -5 0 5 10 15 -30 -30 -20 -10 0 10 20 20 40 60 80 100 100 80 5 60 10 0 0 40 -5 -10 -30 -30 20 -10 -20 -20 -10 0 10 20 30 -15 -10 -5 0 p-value: 0.001 5 10 0 0