+ A(2)

advertisement

并行算法概述

2

Content

• Parallel Computing Model

• Basic Techniques to Parallel

Algorithm

3

Von Neumann Model

M E M O RY

M AR

MDR

IN P U T

OUTPUT

Ke yb o ard

M ouse

S c an n e r

D is k

M o n ito r

P rin te r

LE D

D is k

P R O C E S S IN G U N IT

A LU

TE M P

C O N TR O L U N IT

PC

IR

4

Instruction Processing

Fetch instruction from memory

Decode instruction

Evaluate address

Fetch operands from memory

Execute operation

Store result

5

并行计算模型

Parallel Computing Model

• 计算模型

• 桥接软件和硬件

• 为算法设计提供抽象体系结构

• Ex) PRAM, BSP, LogP

6

并行程序设计模型

Parallel Programming Model

• 程序员使用什么来编码?

• 确定通信(communication)和同步

(synchronization)

• 暴露给程序员的通信原语(Communication

primitives)实现编程模型

• Ex) Uniprocessor, Multiprogramming, Data

parallel, message-passing, shared-addressspace

7

Aspects of Parallel Processing

4 Application developer

3 Algorithm developer

Parallel computing model

Parallel programming model

2 System programmer

Middleware

Interconnection Network

Memory

P

P

P

Memory

P

Multiprocessors

P

P

P

Memory

P

Multiprocessors

1 Architecture designer

P

P

P

Memory

P

P

P

P

P

Multiprocessors Multiprocessors

8

Parallel Computing Models –并行随机存

取机(Parallel Randon Access Machine)

特性:

• Processors Pi (i (0 i p-1 )

• 每一处理器配有局部内存

• 一全局共享内存

• 所有处理器都可以访问

9

Illustration of PRAM

CLK

Single program executed in MIMD mode

Each processor has P1

a unique index.

P2

P3

Pp

Shared Memory

P processors connected to a single shared memory

10

Parallel Randon Access Machine

操作类型:

• 同步

• 处理器执行时会加锁

F每一步,处理器或者工作或者待机

F适用于SIMD和MIMD体系结构

• 异步

• 处理器有局部时钟,用于同步处理器

F适用于MIMD architecture

11

Problems with PRAM

• 是对现实世界并行系统的一种简化描

述

• 未考虑多种开销

• 延迟,带宽,远程内存访问,内存访问冲突,

同步开销, etc

• 在PRAM上理论分析性能分析好的算法,

实际性能可能差

12

Parallel Randon Access Machine

Read / Write冲突

• EREW : Exclusive - Read, Exclusive -Write

• 对一变量吴并发操作 ( read or write)

• CREW : Concurrent – Read, Exclusive –

Write

• 允许并发读同一变量

• 互斥写

• ERCW : Exclusive Read – Concurrent Write

• CRCW : Concurrent – Read, Concurrent – Write

15

Parallel Randon Access Machine

基本Input/Output 操作

• 全局内存

• global read (X, x)

• global write (Y, y)

• 局部内存

• read (X, x)

• write (Y, y)

16

Example: Sum on the PRAM model

对有n = 2k个数的数组A求和

A PRAM machine with n processor

计算S = A(1) + A(2) + …. + A(n)

构建二叉树计算和

17

Example: Sum on the PRAM model

Level >1, Pi compute

B(i) = B(2i-1) + B(2i)

S=B(1)

P1

Level 1, Pi

B(i) = A(i)

B(1)

P1

B(1)

B(2)

P1

P2

B(1)

B(2)

B(3)

B(4)

P1

P2

P3

P4

B(1)

=A(1)

B(2)

=A(2)

B(1)

=A(1)

P1

P2

P3

B(2)

=A(2)

B(1)

=A(1)

P4

P5

B(2)

=A(2)

B(1)

=A(1)

P6

P7

B(2)

=A(2)

P8

18

Example: Sum on the PRAM model

Algorithm processor Pi ( i=0,1, …n-1)

Input

A : array of n = 2k elements in global memory

Output

S : S= A(1) + A(2) + …. . A(n)

Local variables Pi

n :

i : processor Pi identity

Begin

1. global read ( A(i), a)

2. global write (a, B(i))

3. for h = 1 to log n do

if ( i ≤ n / 2h ) then begin

global read (B(2i-1), x)

global read (b(2i), y)

z = x +y

global write (z,B(i))

end

4. if i = 1 then global write(z,S)

End

19

其它分布式模型

• Distributed Memory Model

• 无全局内存

• 每一处理器有局部内存

• Postal Model

• 当访问非局部内存时,处理器发送请求

• 处理器不会停止,它会继续工作直到数据到达

20

Network Models

• 关注通信网络拓扑的影响

• 早期并行计算关注点

• 分布式内存模型

• 远程内存访问的代价与拓扑和访问模式相关

• 提供有效的

• 数据映射

• 通信路由

21

LogP

• 受并行计算机设计的影响

• 分布式内存多处理器模型

• 处理器通信通过点对点的消息通信实现

• 目标是分析并行计算机的性能瓶颈

• 制定通信网络的性能特点

• 为数据放置提供帮助

• 显示了平衡通信的重要性

22

Model Parameters

• Latency (L)

• 从源到目的端发送消息的延迟

• Hop(跳) count and Hop delay

• Communication Overhead (o)

• 处理器在发送或接收一条消息时的时间开销

• Communication bandwidth (g)

• 消息之间的最小时间间隔

• Processor count (P)

• 处理器个数

23

LogP Model

g

sender

o

receiver

L

t

o

24

Bulk Synchronous Parallel

• Bulk Synchronous Parallel(BSP)

• P个配有局部内存的处理器

• 路由器

• 周期性全局同步

• 考虑因素

• 带宽限制

• 延迟

• 同步开销

• 未考虑因素

• 通信开销

• 处理器拓扑

25

BSP Computer

• 分布式内存体系结构

• 3 种部件

• 节点

• 处理器

• 局部内存

• 路由器 (Communication Network)

• 点对点(Point-to-point),消息传递( message

passing)或者共享变量(shared variable)

• 路障

• 全部或部分

26

Illustration of BSP

Node (w)

M

P

Node

P

M

Node

P

M

Barrier (l)

Communication Network (g)

w parameter

g parameter

每一超步(superstep)最大计算时间

计算最多消耗w个时钟周期.

当所有处理器都参与通信时,发送一消息单元所需要的时钟周期# ,即

网络带宽

h:每一超步最大接收和发送消息的数量

通信操作需要gh 时钟周期

l parameter

路障(Barrier)同步需要l 时钟周期

27

BSP Program

• 每一BSP计算由S个超步构成

• 一超步包括一系列步骤和一个路障

• Superstep

• 任何远程内存访问需要路障 – 松散同步

28

BSP Program

Superstep 1

P1

P2

Computation

Communication

Superstep 2

Barrier

P3

P4

Example: Pregel

Pregel

is a framework developed by

Google:

SIGMOD 2010

High scalability

Fault-tolerance

• 灵活实现图算法

30

Bulk Synchronous Parallel Model

Iterations

Data

Data

CPU 1

Data

CPU 1

Data

CPU 1

Data

Data

Data

Data

Data

Data

Data

Data

CPU 2

CPU 2

CPU 2

Data

Data

Data

Data

Data

Data

Data

Data

CPU 3

CPU 3

CPU 3

Data

Data

Data

Data

Data

Barrier

Data

Barrier

Data

Barrier

Data

31

图

Graph

32

Entities and Supersteps

计算由顶点、边和一系列迭代(即超步)构成

每一顶点赋有值。

每一边包含与源点、边值和目的顶点

每一超步:

用户定义的函数F 处理每一顶点V

F 在超步S – 1 读发送给V的消息,发送消息给

其它顶点。这些消息将在S + 1超步收到

F 更改顶点V 和出边的状态

F可以改变图的拓扑

33

Algorithm Termination

根据各顶点投票决定算法是否终止

superstep 0,每一顶点活跃

所有活跃顶点参与任意给定超步中的计算

当顶点投票终止时,顶点进入非活跃状态

如果顶点收到外部消息,顶点可以进入活跃状态

当所有节点都同时变为非活跃状态时,程序

终止

Vote to Halt

Active

Inactive

Message Received

Vertex State Machine

34

The Pregel API in C++

A Pregel program is written by subclassing the vertex class:

template <typename VertexValue,

typename EdgeValue,

typename MessageValue>

To define the types for vertices,

edges and messages

class Vertex {

public:

virtual void Compute(MessageIterator* msgs) = 0;

const string& vertex_id() const;

int64 superstep() const;

const VertexValue& GetValue();

VertexValue* MutableValue();

OutEdgeIterator GetOutEdgeIterator();

Override the

compute function to

define the

computation at

each superstep

To get the value of the

current vertex

To modify the value of

the vertex

void SendMessageTo(const string& dest_vertex,

const MessageValue& message);

void VoteToHalt();

To pass messages

to other vertices

35

Pregel Code for Finding the Max Value

Class MaxFindVertex

: public Vertex<double, void, double> {

public:

virtual void Compute(MessageIterator* msgs) {

int currMax = GetValue();

SendMessageToAllNeighbors(currMax);

for ( ; !msgs->Done(); msgs->Next()) {

if (msgs->Value() > currMax)

currMax = msgs->Value();

}

if (currMax > GetValue())

*MutableValue() = currMax;

else VoteToHalt();

}

};

36

Finding the Max Value in a Graph

3

6

2

1

节点内数值是节点值

蓝色箭头是消息

3

6

6

2

1

6

蓝色节点投票终

止

6

6

2

6

6

6

6

6

6

37

Model Survey Summary

• No single model is acceptable!

• Between models, subset of characteristics are

focused in majority of models

• Computational Parallelism

• Communication Latency

• Communication Overhead

• Communication Bandwidth

• Execution Synchronization

• Memory Hierarchy

• Network Topology

38

Computational Parallelism

• Number of physical processors

• Static versus dynamic parallelism

• Should number of processors be fixed?

• Fault-recovery networks allow for node failure

• Many parallel systems allow incremental

upgrades by increasing node count

39

Latency

• Fixed message length or variable

message length?

• Network topology?

• Communication Overhead?

• Contention based latency?

• Memory hierarchy?

40

Bandwidth

• Limited resource

• With low latency

• Tendency for bandwidth abuse by flooding

network

41

Synchronization

• Ability to solve a wide class of problems

require asynchronous parallelism

• Synchronization achieved via message

passing

• Synchronization as a communication cost

42

Unified Model?

• Difficult

• Parallel machines are complicated

• Still evolving

• Different users from diverse disciplines

• Requires a common set of characteristics

derived from needs of different users

• Again need for balance between

descriptivity and prescriptivity

43

Content

• Parallel Computing Model

• Basic Techniques of Parallel Algorithm

• Concepts

• Decomposition

• Task

• Mapping

• Algorithm Model

44

分解、任务及依赖图

• 设计并行算法的第一步是将问题分解成可并发执

行的任务

• 分解可用任务依赖图(task dependency graph)

表示。图中节点代表任务,边代表任务依赖

45

Example: Multiplying a Dense Matrix with

a Vector

计算输出向量y的每一元素可独立进行。因此,矩阵

与向量之积可分解为n个任务

Example: Database Query Processing

在如下数据库上执行查询:

MODEL = ``CIVIC'' AND YEAR = 2001 AND

(COLOR = ``GREEN'' OR COLOR = ``WHITE)

ID# Model

4523 Civic

3476 Corolla

7623 Camry

9834 Prius

6734 Civic

5342 Altima

3845 Maxima

8354 Accord

4395 Civic

7352 Civic

Year

2002

1999

2001

2001

2001

2001

2001

2000

2001

2002

Color

Blue

White

Green

Green

White

Green

Blue

Green

Red

Red

Dealer

MN

IL

NY

CA

OR

FL

NY

VT

CA

WA

Price

$18,000

$15,000

$21,000

$18,000

$17,000

$19,000

$22,000

$18,000

$17,000

$18,000

46

Example: Database Query Processing

执行查询可分成任务。每一任务可看作产生满足

某一条件的中间结果

边表示一个任务的输出是另一个任务的输入

47

Example: Database Query Processing

同一问题可采用其它方式分解。不同的分解可能存在重

大的性能差异

48

任务粒度

• 分解的任务数量越多,粒度越小。否则粒度越大

49

50

并行度Degree of Concurrency

• 能并行执行的任务数称为一分解的degree of

concurrency

• maximum degree of concurrency

• average degree of concurrency

• 当任务粒度小时,并行度大。

51

任务交互图Task Interaction Graphs

• 任务之间通常需要交换数据

• 表达任务之间交换关系的图称为task interaction

graph.

• task interaction graphs 表达数据依赖;task

dependency graphs表达control dependencies.

Task Interaction Graphs: An Example

稀疏矩阵A乘以向量 b.

计算结果向量的每一元素可视之为独立任务

由于内存优化,可以将b 根据任务划分,可以发现任务交

互图和矩阵A的图一样

52

53

进程和映射Processes and Mapping

• 任务的数量超过处理单元的数量,因此必须将任务

映射到进程

• 恰当的任务映射对并行算法的性能非常重要

• 映射由任务依赖图和任务交互图决定

• 任务依赖图确保任务在任何时间点均匀分布到所有

进程 (minimum idling and optimal load balance).

• 任务交互图用于确保进程与其它进程之间的交互最

少 (minimum communication).

•

Processes and Mapping: Example

将数据库查询任务映射到进程. 根据同一层没有依

赖关系,同一层任务可分配给不同进程

54

55

分解技术Decomposition Techniques

•递归分解(recursive decomposition)

•数据分解(data decomposition)

•探索分解(exploratory decomposition)

•猜测分解(speculative decomposition)

56

Recursive Decomposition

• 适合可用分治法解决的问题.

• 给定问题首先分解为一系列子问题

• 这些子问题进一步递归分解,直到所需要

的任务粒度

Recursive Decomposition: Example

经典的例子是快速排序

In this example, once the list has been partitioned around the pivot,

each sub-list can be processed concurrently (i.e., each sub-list

represents an independent subtask). This can be repeated recursively.

57

58

Recursive Decomposition: Example

We first start with a simple serial loop for computing the

minimum entry in a given list:

1. procedure SERIAL_MIN (A, n)

2. begin

3. min = A[0];

4. for i := 1 to n − 1 do

5.

if (A[i] < min) min := A[i];

6. endfor;

7. return min;

8. end SERIAL_MIN

59

Recursive Decomposition: Example

We can rewrite the loop as follows:

1. procedure RECURSIVE_MIN (A, n)

2. begin

3. if ( n = 1 ) then

4. min := A [0] ;

5. else

6. lmin := RECURSIVE_MIN ( A, n/2 );

7. rmin := RECURSIVE_MIN ( &(A[n/2]), n - n/2 );

8. if (lmin < rmin) then

9.

min := lmin;

10. else

11.

min := rmin;

12. endelse;

13. endelse;

14. return min;

15. end RECURSIVE_MIN

Recursive Decomposition: Example

以上代码可用如下求最小数例子说明.

求{4, 9, 1, 7, 8, 11, 2, 12}的最小数. 任务依赖图如下:

60

61

Data Decomposition

• 划分数据,将数据分配给不同任务

• 输入数据划分

• 中间数据划分

• 输出划分

• 输出数据的每一元素可以独立计算出

Output Data Decomposition: Example

n x n 矩阵A和B相乘得到矩阵C. 输出矩阵C的计算 可以分为

如下四个任务:

Task 1:

Task 2:

Task 3:

Task 4:

62

Output Data Decomposition: Example

以前面的矩阵相乘例子为例,还可以派生如下两

种划分:

Decomposition I

Decomposition II

Task 1: C1,1 = A1,1 B1,1

Task 1: C1,1 = A1,1 B1,1

Task 2: C1,1 = C1,1 + A1,2 B2,1

Task 2: C1,1 = C1,1 + A1,2 B2,1

Task 3: C1,2 = A1,1 B1,2

Task 3: C1,2 = A1,2 B2,2

Task 4: C1,2 = C1,2 + A1,2 B2,2

Task 4: C1,2 = C1,2 + A1,1 B1,2

Task 5: C2,1 = A2,1 B1,1

Task 5: C2,1 = A2,2 B2,1

Task 6: C2,1 = C2,1 + A2,2 B2,1

Task 6: C2,1 = C2,1 + A2,1 B1,1

Task 7: C2,2 = A2,1 B1,2

Task 7: C2,2 = A2,1 B1,2

Task 8: C2,2 = C2,2 + A2,2 B2,2

Task 8: C2,2 = C2,2 + A2,2 B2,2

63

64

Input Data Partitioning

• 如果输出事先未知,这时可以考虑输入划分

• 每一任务处理一部分输入数据,形成局部结果。

合并局部结果形成最终结果

Input Data Partitioning: Example

统计事务数量的例子可采用输入数据划分。

65

Partitioning Input and Output Data

也可以将输入划分和输出划分相结合以便得到更高的并行度.

对 于 统 计 事 务 的 例 子 , 事 务 集 (input) 和 事 务 统 计 数 量

(output) 可同时划分如下:

66

67

Intermediate Data Partitioning

• 计算通常可视为一系列从输入到输出的变换.

• 因此,可考虑将中间结果进行分解

Intermediate Data Partitioning: Example

Let us revisit the example of dense matrix multiplication.

68

Intermediate Data Partitioning: Example

A decomposition of intermediate data structure leads to the following

decomposition into 8 + 4 tasks:

Stage I

Stage II

Task 01: D1,1,1= A1,1 B1,1

Task 02: D2,1,1= A1,2 B2,1

Task 05: D1,2,1= A2,1 B1,1

Task 06: D2,2,1= A2,2 B2,1

Task 03: D1,1,2= A1,1 B1,2

Task 07: D1,2,2= A2,1 B1,2

Task 09: C1,1 = D1,1,1 + D2,1,1

Task 11: C2,1 = D1,2,1 + D2,2,1

Task 04: D2,1,2= A1,2 B2,2

Task 08: D2,2,2= A2,2 B2,2

Task 10: C1,2 = D1,1,2 + D2,1,2

Task 12: C2,,2 = D1,2,2 + D2,2,2

69

Intermediate Data Partitioning: Example

The task dependency graph for the decomposition

(shown in previous foil) into 12 tasks is as follows:

70

71

Exploratory Decomposition

• 在许多场合,随着执行的逐步推进而进行划分.

• 这些应用通常涉及搜索解答的状态空间

• 适合应用包括:组合优化,定理证明,游戏, etc.

Exploratory Decomposition: Example

15 puzzle (a tile puzzle).

72

Exploratory Decomposition: Example

产生当前状态的后继状态,将搜索每一状态视为一独

立任务

73

74

Speculative Decomposition

• 在某些应用,任务之间依赖事先未知

• 两种方法:

• 保守方法(conservative approaches):当确认没有依

赖时,可以识别独立任务,

• 乐观方法(optimistic approaches)即使可能是错误的,

仍然调度任务

• 保守方法可能产生较少的并发;乐观方法可能需要回滚

Speculative Decomposition: Example

模拟网络的例子(例如生产线和计算机网络).

任务是模拟不同输入和节点参数(如延迟)下

网络的行为

75

76

Hybrid Decompositions

在quicksort, 递归分解限制了并发。这时可用数据分

解和递归分解

离散事件模拟(discrete event simulation)可用数

据分解和猜测分解

对于找最小数,可用数据分解和递归分解

77

任务特性

• 任务特征影响并行算法的选择及其性能

• 任务生成

• 任务粒度

• 与任务相关的数据规模

78

Task Generation

• 静态任务生成

• 例如:矩阵运算,图算法,图像处理应用以及其它结构

化问题.

• 任务分解通常用数据分解和递归分解.

• 动态任务生成

• 一个例子是15谜 – 每一15谜棋局由前一棋局产生.

• 应用通常用探索和猜测法分解.

79

Task Sizes

• 任务粒度可以是统一,也可以是非一致

• 例如:组合优化问题里很难估计状态空间的大小

80

Size of Data Associated with Tasks

• The size of data associated with a task may be small or

large when viewed in the context of the size of the task.

• A small context of a task implies that an algorithm can

easily communicate this task to other processes

dynamically (e.g., the 15 puzzle).

• A large context ties the task to a process, or alternately,

an algorithm may attempt to reconstruct the context at

another processes as opposed to communicating the

context of the task.

81

Characteristics of Task Interactions

• Tasks may communicate with each other in various ways.

The associated dichotomy is:

• Static interactions:

• The tasks and their interactions are known a-priori. These are

relatively simpler to code into programs.

• Dynamic interactions:

• The timing or interacting tasks cannot be determined a-priori. These

interactions are harder to code, especially, as we shall see, using

message passing APIs.

82

Characteristics of Task Interactions

• Regular interactions:

• There is a definite pattern (in the graph sense) to the

interactions. These patterns can be exploited for

efficient implementation.

• Irregular interactions:

• Interactions lack well-defined topologies.

83

Characteristics of Task Interactions:

Example

A simple example of a regular static interaction pattern

is in image dithering. The underlying communication

pattern is a structured (2-D mesh) one as shown here:

84

Characteristics of Task Interactions:

Example

The multiplication of a sparse matrix with a vector is

a good example of a static irregular interaction

pattern. Here is an example of a sparse matrix and its

associated interaction pattern.

85

Characteristics of Task Interactions

• Interactions may be read-only or read-write.

• In read-only interactions, tasks just read data

items associated with other tasks.

• In read-write interactions tasks read, as well as

modify data items associated with other tasks.

86

Mapping

• Mapping Techniques for Load Balancing

• Static and Dynamic Mapping

• Methods for Minimizing Interaction Overheads

• Maximizing Data Locality

• Minimizing Contention and Hot-Spots

• Overlapping Communication and Computations

• Replication vs. Communication

• Group Communications vs. Point-to-Point Communication

• Parallel Algorithm Design Models

• Data-Parallel, Work-Pool, Task Graph, Master-Slave, Pipeline,

and Hybrid Models

87

Mapping Techniques

• Mappings must minimize overheads.

• Primary overheads are communication and idling.

• Minimizing these overheads often represents

contradicting objectives.

• Assigning all work to one processor trivially

minimizes communication at the expense of

significant idling.

88

Mapping Techniques for Minimum Idling

Mapping must simultaneously minimize idling and load

balance. Merely balancing load does not minimize

idling.

89

Mapping Techniques for Minimum Idling

Mapping techniques can be static or dynamic.

• Static Mapping

• Tasks are mapped to processes a-priori

• For this to work, we must have a good estimate of the

size of each task. Even in these cases, the problem

may be NP complete.

• Dynamic Mapping

• Tasks are mapped to processes at runtime

• This may be because the tasks are generated at

runtime, or that their sizes are not known.

90

Schemes for Static Mapping

• Mappings based on data partitioning

• Mappings based on task graph

partitioning

• Hybrid mappings

91

Mappings Based on Data Partitioning

The simplest data decomposition schemes for dense

matrices are 1-D block distribution schemes.

Block Array Distribution Schemes

Block distribution schemes can be generalized

to higher dimensions as well.

92

93

Block Array Distribution Schemes:

Examples

• For multiplying two dense matrices A and

B, we can partition the output matrix C

using a block decomposition.

• For load balance, we give each task the

same number of elements of C. (Note that

each element of C corresponds to a single

dot product.)

94

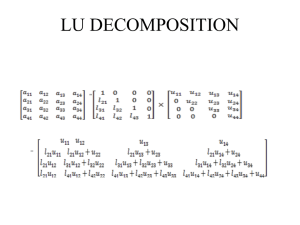

Cyclic and Block Cyclic Distributions

• If the amount of computation associated with

data items varies, a block decomposition may

lead to significant load imbalances.

• A simple example of this is in LU

decomposition (or Gaussian Elimination) of

dense matrices.

LU Factorization of a Dense Matrix

A decomposition of LU factorization into 14 tasks notice the significant load imbalance.

1:

6:

11:

2:

7:

12:

3:

8:

13:

4:

9:

14:

5:

10:

95

Block Cyclic Distributions

Variation of the block distribution scheme

that can be used to alleviate the loadimbalance and idling problems.

Partition an array into many more blocks than

the number of available processes.

Blocks are assigned to processes in a roundrobin manner so that each process gets

several non-adjacent blocks.

96

99

Mappings Based on Task Partitioning

• Partitioning a given task-dependency

graph across processes.

• Determining an optimal mapping for a

general task-dependency graph is an NPcomplete problem.

100

Task Partitioning: Mapping a Binary Tree

Dependency Graph

Example illustrates the dependency graph of one view

of quick-sort and how it can be assigned to processes

in a cube.

101

Hierarchical Mappings

• Sometimes a single mapping technique is

inadequate.

• For example, the task mapping of the binary tree

(quicksort) cannot use a large number of

processors.

• For this reason, task mapping can be used at the

top level and data partitioning within each level.

102

An example of task partitioning at top level with

data partitioning at the lower level.

103

Schemes for Dynamic Mapping

• Dynamic mapping is sometimes also referred to

as dynamic load balancing, since load balancing

is the primary motivation for dynamic mapping.

• Dynamic mapping schemes can be centralized

or distributed.

104

Centralized Dynamic Mapping

• Processes are designated as masters or slaves.

• When a process runs out of work, it requests the master

for more work.

• When the number of processes increases, the master

may become the bottleneck.

• To alleviate this, a process may pick up a number of

tasks (a chunk) at one time. This is called Chunk

scheduling.

• Selecting large chunk sizes may lead to significant load

imbalances as well.

• A number of schemes have been used to gradually decrease

chunk size as the computation progresses.

105

Distributed Dynamic Mapping

• Each process can send or receive work from other

processes.

• This alleviates the bottleneck in centralized schemes.

• There are four critical questions:

• how are sensing and receiving processes paired together

• who initiates work transfer

• how much work is transferred

• when is a transfer triggered?

• Answers to these questions are generally application

specific.

106

Minimizing Interaction Overheads

• Maximize data locality

• Where possible, reuse intermediate data. Restructure

computation so that data can be reused in smaller

time windows.

• Minimize volume of data exchange

• There is a cost associated with each word that is

communicated

• Minimize frequency of interactions

• There is a startup cost associated with each

interaction

• Minimize contention and hot-spots

• Use decentralized techniques, replicate data where

necessary.

107

Minimizing Interaction Overheads (cont.)

• Overlapping computations with interactions

• Use non-blocking communications, multithreading,

and prefetching to hide latencies.

• Replicating data or computations.

• Using group communications instead of point-

to-point primitives.

• Overlap interactions with other interactions.

108

Parallel Algorithm Models

算法模型主要涉及选择划分方法以及映射技术

以减少任务之间的交互.

• Data Parallel Model

• 任务静态映射到进程,每一任务在不同数据上执行相似

操作.

• Task Graph Model

• 根据任务依赖图,任务之间的交互用于增强局部性或减

少交互开销

109

Parallel Algorithm Models (cont.)

• Master-Slave Model

• 一个或多个进程产生任务,并静态或动态分配给工作

进程

• Pipeline / Producer-Consumer Model

• 数据流通过一系列进程,每一进程在数据上执行任务.

• Hybrid Models

• 由多种模型水平、垂直或顺序组合来解决应用问题