Collaborative Competitive Filtering: Learning


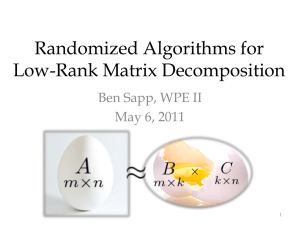

Intro to RecSys and CCF
Brian Ackerman

Roadmap
• Introduction to Recommender Systems & Collaborative Filtering
• Collaborative Competitive Filtering

Introduction to Recommender Systems & Collaborative Filtering

Motivation
• Netflix has over 20,000 movies, but you may only be interested in a small number of them
• Recommender systems can provide personalized suggestions from a large set of items such as movies
  – This can be done in a variety of ways; the most popular is collaborative filtering

Collaborative Filtering
• If two users rate a subset of items similarly, then they might rate other items similarly as well

| | User 1 | User 2 |
|---|---|---|
| Item A | ? | 1 |
| Item B | 3 | 3 |
| Item C | 4 | 4 |
| Item D | 5 | 5 |
| Item E | 3 | ? |

Roadmap (RS-CF)
• Motivation
• Problem
• Main CF Types
  – Memory-based
    – User-based
  – Model-based
    – Regularized SVD

Problem Setting
• Set of users, U
• Set of items, I
• Users can rate items, where r_ui is user u's rating on item i
• Ratings are often stored in a rating matrix
  – R, of size |U| × |I|

Sample Rating Matrix

| | Item A | Item B | Item C | Item D | Item E | Item F | Item G | Item H | Item I |
|---|---|---|---|---|---|---|---|---|---|
| User 1 | - | 5 | - | 3 | - | - | 2 | - | - |
| User 2 | 4 | - | 5 | - | - | 4 | - | 1 | - |
| User 3 | - | 4 | - | 3 | - | - | 2 | - | - |
| User 4 | 1 | 2 | - | - | - | 5 | - | 3 | - |
| User 5 | - | - | 3 | - | 4 | - | - | 2 | - |
| User 6 | - | 2 | - | - | 1 | - | - | 2 | - |
| User 7 | 4 | - | - | 5 | - | - | 4 | - | 1 |

A number is a user rating; - means a null entry (not rated)

Problem
• Input
  – Rating matrix R (|U| × |I|)
  – Active user, a (the user interacting with the system)
• Output
  – Predictions for all null entries of the active user

Main Types
• Memory-based
  – User-based* [Resnick et al. 1994]
  – Item-based [Sarwar et al. 2001]
  – Similarity Fusion (User/Item-based) [Wang et al. 2006]
• Model-based
  – SVD (Singular Value Decomposition) [Sarwar et al. 2000]
  – RSVD (Regularized SVD)* [Funk 2006]

User-based

| | Item A | Item B | Item C | Item D | Item E | Item F | Item G | Item H | Item I |
|---|---|---|---|---|---|---|---|---|---|
| Active | ? | 5 | ? | 3 | ? | ? | 2 | ? | ? |
| User 2 | 4 | - | 5 | - | - | 4 | - | 1 | - |
| User 3 | - | 4 | - | 3 | - | - | 2 | - | - |
| User 4 | 1 | 2 | - | - | - | 5 | - | 3 | - |
| User 5 | - | - | 3 | - | 4 | - | - | 2 | - |
| User 6 | - | 2 | - | - | 1 | - | - | 2 | - |
| User 7 | 4 | - | - | 5 | - | - | 4 | - | 1 |

• Find similar users
  – KNN or threshold
• Make prediction

User-based – Similar Users
• Consider each user (row) to be a vector
• Compare vectors to find the similarity between two users
  – Let a be the vector for the active user and u3 be the vector for user 3
  – Cosine similarity can be used to compare the vectors

User-based – Similar Users

| | Item A | Item B | Item C | Item D | Item E | Item F | Item G | Item H | Item I |
|---|---|---|---|---|---|---|---|---|---|
| User 1 | ? | 5 | - | 3 | - | - | 2 | - | - |
| User 2 | 4 | - | 5 | - | - | 4 | - | 1 | - |
| User 3 | - | 4 | - | 3 | - | - | 2 | - | - |
| User 4 | 1 | 2 | - | - | - | 5 | - | 3 | - |
| User 5 | - | - | 3 | - | 4 | - | - | 2 | - |
| User 6 | - | 2 | - | - | 1 | - | - | 2 | - |
| User 7 | 4 | - | - | 5 | - | - | 4 | - | 1 |

• KNN (k-nearest neighbors or top-k)
  – Only find the k most similar users
• Threshold
  – Find all users whose similarity is at least θ

User-based – Make Prediction
• Weighted by similarity
  – Weight each similar user's rating by that user's similarity to the active user
  – The prediction for the active user on item i is computed over the set of similar users

Regularized SVD
• The Netflix rating matrix has 8.5 billion entries (17 thousand movies × 0.5 million users)
• Only 100 million ratings are known
  – About 1.2% of all possible ratings
• Why do we need to operate on such a large matrix?

Regularized SVD – Setup
• Let each user and item be represented by a feature vector of length k
  – E.g. Item A may be the vector A_k = [a_1 a_2 a_3 … a_k]
• Imagine the features for items were fixed
  – E.g. items are movies and each feature is a genre such as comedy, drama, etc.
• The features of the user vector are how much the user likes each feature

Regularized SVD – Setup
• Consider the movie Die Hard
  – Its feature vector may be i = [1 0 0] if the features are action, comedy, and drama
• Maybe the user has the feature vector u = [3.87 2.64 1.32]
• We can try to predict a user's rating using the dot product of these two vectors
  – r'_ui = u ∙ i = [3.87 2.64 1.32] ∙ [1 0 0] = 3.87

Regularized SVD – Goal
• Try to find values for each item vector that work for all users
• Try to find values for each user vector that produce the actual rating when taking the dot product with the item vector
• Minimize the difference between the actual rating and the predicted rating (the dot product)

Regularized SVD – Setup
• In reality, we cannot choose k large enough to cover a fixed set of features
  – There are too many to consider (e.g. genre, actors, directors, etc.)
• Usually k is only 25 to 50, which reduces the total size of the matrices to roughly 25 million to 50 million entries (compared to 8.5 billion)
• Because k is so small, the values in the vectors are NOT directly tied to any feature

Regularized SVD – Goal
• Let u be a user, i be an item, and r_ui be the rating by user u on item i, where R is the set of all ratings and φ_u, φ_i are the feature vectors
• At first thought, the optimization goal seems simple: minimize the total prediction error over all ratings in R

Regularized SVD – Overfitting
• The problem is overfitting of the features
  – Solved by regularization

Regularized SVD – Regularization
• Introduce a new optimization goal that includes a regularization term
• The term penalizes the magnitude of the feature vectors
  – Controlled by fixed parameters λ_u and λ_i

Regularized SVD
• Many improvements to the regularized optimization goal have been proposed
  – RSVD2/NSVD1/NSVD2 [Paterek 2007]: added a term for user bias and a term for item bias, minimized the number of parameters
  – Integrated Neighborhood SVD++ [Koren 2008]: used a neighborhood-based approach to RSVD

Collaborative Competitive Filtering: Learning Recommender Using Context of User Choice
Georgia Tech and Yahoo! Labs
Best Student Paper at SIGIR'11

Motivation
• A user may be given 5 random movies and choose Die Hard
  – This tells us the user prefers action movies
• A user may be given 5 action movies and choose Die Hard over Rocky and Terminator
  – This tells us the user prefers Bruce Willis

Roadmap (CCF)
• Motivation
• Problem Setting & Input
• Techniques
• Extensions

Problem Setting
• Set of users, U
• Set of items, I
• Each user interaction has an offer set O and a decision set D
• Each user interaction is stored as a tuple (u, O, D), where D is a subset of O

CCF Input
[Table: rows are user sessions (U1-S1, U1-S2, U1-S3, U2-S1, U2-S2, U3-S1, U3-S2), columns are Item A through Item I; the cell values did not survive extraction]
1 means user interaction; - means the item was in the offer set

Local Optimality of User Choice
• Each item has a potential revenue to the user, r_ui
• Users also consider the opportunity cost (OC) when deciding potential revenue
  – OC is what the user gives up by making a given decision
• OC is c_ui = max{ r_ui' | i' ∈ O \ {i} }
• Profit is π_ui = r_ui – c_ui

Local Optimality of User Choice
• A user interaction is an opportunity give-and-take process
  – The user is given a set of opportunities
  – The user decides to select one of the many opportunities
  – Each opportunity comes with some revenue (utility or relevance)

Collaborative Competitive Filtering
• Local optimality constraint
  – Each item in the decision set has a higher revenue than those not in the decision set
  – The problem becomes intractable with only this constraint: there is no unique solution

CCF – Hinge Model
• Optimization goal
  – Minimize error (ξ, slack variable) and model complexity

CCF – Hinge Model
• Find the average potential utility
  – Average utility of the non-chosen items
• Constraints
  – Chosen items have a higher utility
  – e_ui is an error term

CCF – Hinge Model
• Optimization goal
  – Assume ξ is 0
  – The goal is expressed in terms of the average relevance of the non-chosen items

CCF – How to use results
• We can predict the relevance of all items based on the user and item vectors
  – A threshold can be set if more than one item can be chosen (e.g. θ > .9 implies action)

| Item | User Action | Predicted Relevance |
|---|---|---|
| A | 1 | .98 |
| B | - | .93 |
| C | - | .56 |
| D | - | .25 |
| E | - | .11 |

Extensions
• Sessions without a response
  – The user does not take any opportunity
• Adding content features
  – Fixed features for each item, rather than a limited number of parameters, to improve the accuracy of predictions for new items
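
The two user-based steps from the "User-based" slides (find similar users, then make a similarity-weighted prediction) can be sketched on the sample rating matrix as follows. This is only an illustration under stated assumptions: null entries are treated as 0 when computing cosine similarity, and the prediction is a plain similarity-weighted average, since the exact formula on the slide did not survive. The names `cosine`, `top_k_neighbors`, and `predict` are hypothetical.

```python
import math

# Sample rating matrix from the slides (Items A..I); None marks a null entry.
RATINGS = {
    "User 1": [None, 5, None, 3, None, None, 2, None, None],
    "User 2": [4, None, 5, None, None, 4, None, 1, None],
    "User 3": [None, 4, None, 3, None, None, 2, None, None],
    "User 4": [1, 2, None, None, None, 5, None, 3, None],
    "User 5": [None, None, 3, None, 4, None, None, 2, None],
    "User 6": [None, 2, None, None, 1, None, None, 2, None],
    "User 7": [4, None, None, 5, None, None, 4, None, 1],
}

def cosine(a, b):
    """Cosine similarity of two rating rows; nulls count as 0 (an assumption)."""
    dot = sum((x or 0) * (y or 0) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x or 0) ** 2 for x in a))
    norm_b = math.sqrt(sum((y or 0) ** 2 for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_k_neighbors(active, k):
    """KNN step: the k users most similar to the active user."""
    sims = [(cosine(RATINGS[active], row), user)
            for user, row in RATINGS.items() if user != active]
    sims.sort(reverse=True)
    return sims[:k]

def predict(active, item_index, k=3):
    """Similarity-weighted average of the neighbors' ratings on one item."""
    num = den = 0.0
    for sim, user in top_k_neighbors(active, k):
        rating = RATINGS[user][item_index]
        if rating is not None and sim > 0:
            num += sim * rating
            den += sim
    return num / den if den else None  # None: no neighbor rated the item

print(top_k_neighbors("User 1", 3))
print(predict("User 1", 0))  # prediction for User 1 on Item A
```

With User 1 as the active user, User 3 comes out as the nearest neighbor, which matches the intuition from the matrix: the two users rated Items B, D, and G almost identically.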
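
The formulas on the "Regularized SVD – Goal" and "Regularized SVD – Regularization" slides were not preserved. A commonly used form consistent with the slide text is written out below, using the slides' symbols (r_ui, φ_u, φ_i, R, λ_u, λ_i). The squared-error loss and the squared-norm penalty are assumptions here, not something the slides confirm.

```latex
% Naive goal: fit the known ratings with dot products of feature vectors.
\min_{\phi} \sum_{(u,i) \in R} \left( r_{ui} - \phi_u^{\top} \phi_i \right)^2

% Regularized goal: additionally penalize the magnitude of the feature vectors.
\min_{\phi} \sum_{(u,i) \in R}
  \left[ \left( r_{ui} - \phi_u^{\top} \phi_i \right)^2
       + \lambda_u \lVert \phi_u \rVert^2
       + \lambda_i \lVert \phi_i \rVert^2 \right]
```

The first expression can be driven to zero by memorizing the known ratings, which is the overfitting the slides mention; the λ terms trade some training accuracy for smaller feature vectors.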
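
One way to minimize a regularized goal of this kind is stochastic gradient descent over the known ratings. The sketch below is a minimal illustration, not the method from any cited paper: the squared-error loss, the learning rate `lr`, the initialization range, and the function names `train_rsvd` and `sse` are all assumptions. It uses the ratings of Users 1 to 3 from the sample rating matrix.

```python
import random

# (user index, item index, rating) triples for Users 1-3 of the sample matrix.
RATINGS = [
    (0, 1, 5), (0, 3, 3), (0, 6, 2),
    (1, 0, 4), (1, 2, 5), (1, 5, 4), (1, 7, 1),
    (2, 1, 4), (2, 3, 3), (2, 6, 2),
]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def sse(ratings, phi_u, phi_i):
    """Sum of squared errors between actual and predicted ratings."""
    return sum((r - dot(phi_u[u], phi_i[i])) ** 2 for u, i, r in ratings)

def train_rsvd(ratings, n_users, n_items, k=2, lr=0.01,
               lam_u=0.02, lam_i=0.02, epochs=200, seed=0):
    """Learn length-k user and item vectors by SGD on the regularized error."""
    rng = random.Random(seed)
    phi_u = [[rng.uniform(0.1, 0.5) for _ in range(k)] for _ in range(n_users)]
    phi_i = [[rng.uniform(0.1, 0.5) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - dot(phi_u[u], phi_i[i])
            for f in range(k):
                pu, qi = phi_u[u][f], phi_i[i][f]
                # Gradient step: reduce the error, shrink the vector (regularization).
                phi_u[u][f] += lr * (err * qi - lam_u * pu)
                phi_i[i][f] += lr * (err * pu - lam_i * qi)
    return phi_u, phi_i

phi_u, phi_i = train_rsvd(RATINGS, n_users=3, n_items=9)
print(sse(RATINGS, phi_u, phi_i))
print(dot(phi_u[0], phi_i[0]))  # predicted rating of User 1 on Item A
```

Only k × (|U| + |I|) values are stored and updated, and only the known ratings are ever visited, so the full |U| × |I| matrix is never materialized.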
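
The quantities from the "Local Optimality of User Choice" and "CCF – Hinge Model" slides can be checked by hand on the predicted relevance values from the "How to use results" table. The sketch below treats those relevance values as the revenue r_ui for a single user, with an offer set of Items A to E; the function names are hypothetical, and returning 0 as the opportunity cost of a single-item offer set is an assumption.

```python
# Predicted relevance from the "How to use results" table, used as revenue r_ui.
REVENUE = {"A": 0.98, "B": 0.93, "C": 0.56, "D": 0.25, "E": 0.11}
OFFER_SET = ["A", "B", "C", "D", "E"]

def opportunity_cost(revenue, offer_set, item):
    """c_ui: the best revenue among the other offered items."""
    others = [revenue[j] for j in offer_set if j != item]
    return max(others) if others else 0.0  # 0 for a lone item is an assumption

def profit(revenue, offer_set, item):
    """pi_ui = r_ui - c_ui."""
    return revenue[item] - opportunity_cost(revenue, offer_set, item)

def locally_optimal(revenue, offer_set, decision_set):
    """True if every chosen item has higher revenue than every non-chosen item."""
    rejected = [j for j in offer_set if j not in decision_set]
    return all(revenue[i] > revenue[j] for i in decision_set for j in rejected)

def margin_over_average(revenue, offer_set, item):
    """Chosen item's revenue minus the average revenue of the other offered items."""
    others = [revenue[j] for j in offer_set if j != item]
    return revenue[item] - sum(others) / len(others)

def above_threshold(revenue, theta):
    """Items whose predicted relevance exceeds the threshold theta."""
    return [item for item, r in revenue.items() if r > theta]

print(profit(REVENUE, OFFER_SET, "A"))               # positive only for the best item
print(locally_optimal(REVENUE, OFFER_SET, {"A"}))
print(margin_over_average(REVENUE, OFFER_SET, "A"))
print(above_threshold(REVENUE, 0.9))
```

Profit is positive only for the single best item in the offer set, which is why the same item can be a good choice in one offer set and a poor one in another.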