Class presentation


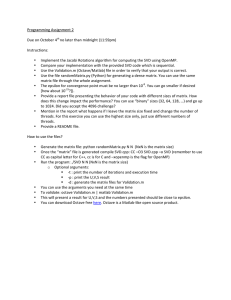

Netflix Prize Solution: A Matrix Factorization Approach
By Atul S. Kulkarni
kulka053@d.umn.edu
Graduate student, University of Minnesota Duluth

Agenda
• Problem Description
• Netflix Data
• Why is it a tough nut to crack?
• Overview of methods already applied to this problem
• Overview of the Paper
• Details of the method
• How does this method work for the Netflix problem?
• My implementation
• Results
• Q and A

Netflix Prize Problem
• Given a set of users with their previous ratings for a set of movies, can we predict the rating they will assign to a movie they have not previously rated?
• Defined at http://www.netflixprize.com//index
• Seeks to improve the prediction performance of Cinematch (Netflix’s existing movie recommender system) by 10%.
• How is the performance measured?
  – Root Mean Square Error (RMSE)
• Winner gets a prize of 1 Million USD.

Problem Description
• Recommender Systems
  – Use knowledge about the preferences of a group of users for certain items to help predict the interest level of other users from the same community. [1]
• Collaborative filtering
  – Widely used method for recommender systems
  – Tries to find traits of shared interest among users in a group to help predict the likes and dislikes of the other users within the group. [1]

Why is this problem interesting?
• Used by almost every recommender system today
  – Amazon
  – Yahoo
  – Google
  – Netflix
  – …

Netflix Data
• Netflix released data for this competition
• Contains nearly 100 Million ratings
• Number of users (anonymous) = 480,189
• Number of movies rated by them = 17,770
• Training data is provided per movie
• To verify the model developed without submitting the predictions to Netflix, “probe.txt” is provided
• To submit the predictions for the competition, “qualifying.txt” is used

Netflix Data in Pictures
• These pictures are taken as is from [5]
[Figure: Num. Users with Avg. Rating of (x-axis: average rating, 1 to 5 in steps of 0.2; y-axis: number of users, 0 to 45,000)]

Netflix Data in Pictures Contd.
[Figure: Number of movies per year (x-axis: year, 1909 to 2005; y-axis: number of movies, 0 to 1,600)]

Netflix Data in Pictures Contd.
[Figure: Distribution of ratings (chart over ratings 1 to 5; slice labels 4, 9, 26, 28, 33)]

Netflix Data
• Data in the training file is per movie
  – It looks like this:
      Movie#:
      Customer#,Rating,Date of Rating
      Customer#,Rating,Date of Rating
      Customer#,Rating,Date of Rating
  – Example:
      4:
      1065039,3,2005-09-06
      1544320,1,2004-06-28
      410199,5,2004-10-16

Netflix Data
• Data points in “probe.txt” look like this (have answers):
      Movie#:
      Customer#
      Customer#
  – Example:
      1:
      30878
      2647871
      1283744
• Data in “qualifying.txt” looks like this (no answers):
      Movie#:
      Customer#,DateofRating
      Customer#,DateofRating
  – Example:
      1:
      1046323,2005-12-19
      1080030,2005-12-23
      1830096,2005-03-14

Hard Nut to Crack?
• Why is this problem such a difficult one?
  – Total ratings possible = 480,189 (users) * 17,770 (movies) = 8,532,958,530 (8.5 Billion)
  – Total available = 100 Million
  – The User x Movies matrix has 8.4 Billion entries missing
  – Consider the problem as a Least Squares problem
  – We can consider this problem by representing it as a system of equations in a matrix

Technically tough as well
• Huge memory requirements
• High time requirements
• Because we are using only ~100 Million of the possible 8.5 Billion ratings, the predictors have some error in their weights (small training data)

Various Methods Employed for Netflix Prize Problem
• Nearest Neighbor methods
  – k-NN with variations
• Matrix factorization
  – Probabilistic Latent Semantic Analysis
  – Probabilistic Matrix Factorization
  – Expectation Maximization for Matrix Factorization
  – Singular Value Decomposition
  – Regularized Matrix Factorization
[2]

The Paper
• Title: “Improving regularized singular value decomposition for collaborative filtering”, Arkadiusz Paterek, Proceedings of KDD Cup and Workshop, 2007. [3]
• Uses the algorithm described by Simon Funk (Brandyn Webb) in [4].
• The algorithm revolves around the regularized Singular Value Decomposition (SVD) described in [4] and suggests adding biases to it to improve performance.
• It also proposes some methods for post processing of the features extracted from the SVD.
• It compares the various combinations of methods suggested in the paper on the Netflix Data.

Singular Value Decomposition
• Consider the given problem as a matrix A of Users x Movies, or Movies x Users
• Shown are the two examples (a dash marks a missing rating)
• What do we do with this representation?

Users x Movies:
      M1  M2  M3  M4  M5  M6
U1     2   4   5   5   -   1
U2     -   -   3   5   1   5
U3     2   -   4   5   -   5

Movies x Users:
      U1  U2  U3
M1     2   -   2
M2     4   -   -
M3     5   3   4
M4     5   5   5
M5     -   1   -
M6     1   5   5

Singular Value Decomposition
• Method of Matrix Factorization
• Applicable to rectangular and square matrices alike
• Decomposes the matrix into 3 component matrices whose product approximates the original matrix
• E.g.
• D
    $d
    [1] 13.218989 4.887761 1.538870
• U
    $u
               [,1]       [,2]       [,3]
    [1,] -0.5606779  0.8192382 -0.1203705
    [2,] -0.5529369 -0.4786352 -0.6820331
    [3,] -0.6163612 -0.3158436  0.7213472
• V
    $v
                [,1]        [,2]        [,3]
    [1,] -0.17808307  0.20598164  0.78106201
    [2,] -0.16965834  0.67044040 -0.31288023
    [3,] -0.52406769  0.28579770  0.15429276
    [4,] -0.65435261  0.02532797 -0.26336364
    [5,] -0.04182898 -0.09792523 -0.44320373
    [6,] -0.48469427 -0.64511243  0.04951659

Can we recover original Matrix?
• Yes. (Well almost!) Here is how.
• We multiply the 3 matrices: $U \cdot D \cdot V^T$
• We get $A^* \approx A$.
                  [,1]          [,2] [,3] [,4]          [,5] [,6]
    [1,]  2.000000e+00  4.000000e+00    5    5 -1.557185e-17    1
    [2,] -8.564655e-16 -1.221706e-15    3    5  1.000000e+00    5
    [3,]  2.000000e+00 -1.231356e-15    4    5  1.757492e-16    5
• We can see this is an approximation of the original matrix: the tiny values such as -8.564655e-16 are floating-point round-off in the positions that held 0 (the missing ratings).

How do we use SVD?
• We use the 2 matrices U and V to estimate the original matrix A.
• So what happened to the diagonal matrix D?
• We train our method on the given training set and learn by rolling the diagonal matrix into the two matrices.
• We compute $U \cdot V^T$ and obtain $A'$.
• Error: $e_{ij} = A_{ij} - A'_{ij}$ for all $i, j$ with a known rating (the same sign as "originalrating - prating" in the algorithm).

Algorithm variations covered in this paper
• Simple Predictors
• Regularized SVD
• Improved Regularized SVD (with Biases)
• Post processing SVD with KNN
• Post processing SVD with kernel ridge regression
• K-means
• Linear model for each item
• Decreasing the number of Parameters

The SVD Algorithm from paper [3,4,6]
• Initialize 2 arrays movieFeatures (U) and customerFeatures (V) to a very small value, 0.1
• For every feature# in features
    Repeat while fewer than the minimum number of iterations have been done or RMSE is still improving by more than the minimum improvement
        For every data point in training set   //data point has custID and movieID
            prating = customerFeatures[feature#][custID] * movieFeatures[feature#][movieID]   //Predict the rating
            error = originalrating - prating   //Find the error
            squareerrsum += error * error   //Sum the squared error for RMSE.
            cf = customerFeatures[feature#][custID]   //locally copy current feature value
            mf = movieFeatures[feature#][movieID]   //locally copy current feature value
            customerFeatures[feature#][custID] += learningrate * (error * mf - regularizationfactor * cf)   //Rolling the error into the features
            movieFeatures[feature#][movieID] += learningrate * (error * cf - regularizationfactor * mf)   //Rolling the error into the features
        RMSE = sqrt(squareerrsum / total number of data points)   //Calculate RMSE
• Now we do the testing
• For every test point with custID and movieID
    For every feature# in features
        predictedrating += customerFeatures[feature#][custID] * movieFeatures[feature#][movieID]
• Caveats
  – Clip the ratings to the range [1, 5]; the predicted rating might go out of bounds
• The “regularization factor” is introduced by Brandyn Webb in [4] to reduce overfitting

Variation: Improved Regularized SVD
• That was regularized SVD
• Improved Regularized SVD with Biases
  – Predict the rating with 2 added biases: Ci per customer and Dj per movie
      Rating = Ci + Dj + sum over feature# of customerFeatures[feature#][i] * movieFeatures[feature#][j]
  – During training update the biases as
      Ci += learningrate * (err - regularization * (Ci + Dj - global_mean))
      Dj += learningrate * (err - regularization * (Ci + Dj - global_mean))
  – learningrate = .001, regularization = 0.05, global_mean = 3.6033

Variation: KNN for Movies
• Post processing with KNN
  – On the Regularized SVD movieFeature matrix we compute the cosine similarity between 2 movie vectors:
      $$\mathrm{similarity} = \frac{\mathrm{movieFeature}[\mathrm{movieID1}]^{T} \, \mathrm{movieFeature}[\mathrm{movieID2}]}{\lVert \mathrm{movieFeature}[\mathrm{movieID1}] \rVert \; \lVert \mathrm{movieFeature}[\mathrm{movieID2}] \rVert}$$
  – Using this similarity measure we build a neighborhood of the 1 nearest movie and use the rating of that nearest movie as the predicted rating

Experimentation Strategy by author
• Select 1.5% - 15% of the probe.txt as hold-out set or test set.
• Train all models on the rest of the ratings
• All models predict the ratings
• Merge the results using linear regression on the test set
• Combining two methods for initial prediction and then performing linear regression

Results from the Paper [3]

Predictor          Test RMSE     Test RMSE with     Cumulative
                   with BASIC    BASIC and RSVD2    Test RMSE
BASIC              .9826         .9039              .9826
RSVD               .9024         .9018              .9094
RSVD2              .9039         .9039              .9018
KMEANS             .9410         .9029              .9010
SVD_KNN            .9525         .9013              .8988
SVD_KRR            .9006         .8959              .8933
LM                 .9506         .8995              .8902
NSVD1              .9312         .8986              .8887
NSVD2              .9590         .9032              .8879
SVD_KRR * NSVD1    -             -                  .8879
SVD_KRR * NSVD2    -             -                  .8877

Replicated from the paper as is

My Experiments
• I am trying out the regularized SVD method and the Improved Regularized SVD method with qualifying.txt and probe.txt
• Also, I am going to implement the first 3 steps of the author’s experimentation strategy (in my case I will predict with regularized SVD and Improved Regularized SVD)
• If time permits, I might try the SVD KNN method
• I am also varying some parameters like learning rate, number of features, etc. to see their effect on the results.
• I shall have all my results posted on the web site soon

Questions?

References
1. Herlocker, J., Konstan, J., Terveen, L., and Riedl, J. Evaluating Collaborative Filtering Recommender Systems. ACM Transactions on Information Systems 22 (2004), ACM Press, 5-53.
2. Takács, G., Pilászy, I., Németh, B., and Tikk, D. Scalable Collaborative Filtering Approaches for Large Recommender Systems. JMLR 10 (2009), 623-656.
3. Paterek, A. Improving regularized singular value decomposition for collaborative filtering. Proceedings of KDD Cup and Workshop, 2007.
4. http://sifter.org/~simon/journal/20061211.html
5. http://www.igvita.com/2006/10/29/dissecting-the-netflix-dataset/
6. Gorrell, G. and Webb, B. Generalized hebbian algorithm for incremental latent semantic analysis. Proceedings of Interspeech, 2006.

Thanks for your time!
Atul S. Kulkarni
kulka053@d.umn.edu
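
Supplementary sketch
• As a companion to the slides on the SVD algorithm and its variation with biases, here is a minimal Python sketch of feature-by-feature training on the 3 x 6 example matrix from the SVD slides. It is an illustration under stated assumptions, not the author's or the paper's implementation.
• Assumptions: all function and variable names are hypothetical; the learning rate (0.01) and the fixed epoch count are chosen for this toy data and are not taken from the slides; the biases start at Ci = global_mean and Dj = 0; the training prediction adds the features that are already trained to the current one.

```python
import math

# Toy data: (custID, movieID, rating) triples from the 3 x 6 example matrix.
RATINGS = [(0, 0, 2), (0, 1, 4), (0, 2, 5), (0, 3, 5), (0, 5, 1),
           (1, 2, 3), (1, 3, 5), (1, 4, 1), (1, 5, 5),
           (2, 0, 2), (2, 2, 4), (2, 3, 5), (2, 5, 5)]


def raw_predict(model, u, m, n_feat):
    """Ci + Dj plus the products of the first n_feat features (unclipped)."""
    cust_f, movie_f, c, d = model
    return c[u] + d[m] + sum(cust_f[f][u] * movie_f[f][m] for f in range(n_feat))


def predict(model, u, m):
    """Prediction over all features, clipped to the valid range [1, 5]."""
    return min(5.0, max(1.0, raw_predict(model, u, m, len(model[0]))))


def rmse(model, ratings):
    """Root mean square error of the clipped predictions."""
    se = sum((r - predict(model, u, m)) ** 2 for u, m, r in ratings)
    return math.sqrt(se / len(ratings))


def init_model(n_users, n_movies, n_features, global_mean):
    """Features start at 0.1, as on the slide."""
    cust_f = [[0.1] * n_users for _ in range(n_features)]
    movie_f = [[0.1] * n_movies for _ in range(n_features)]
    # Assumption: Ci = global_mean and Dj = 0, so Ci + Dj = global_mean at start.
    return cust_f, movie_f, [global_mean] * n_users, [0.0] * n_movies


def train(model, ratings, global_mean, epochs=300, lrate=0.01, reg=0.05):
    """Train one feature at a time with regularized gradient steps."""
    cust_f, movie_f, c, d = model
    for f in range(len(cust_f)):
        for _ in range(epochs):  # fixed epoch count instead of an RMSE-based stop
            for u, m, r in ratings:
                err = r - raw_predict(model, u, m, f + 1)
                cf, mf = cust_f[f][u], movie_f[f][m]  # copy before updating
                cust_f[f][u] += lrate * (err * mf - reg * cf)
                movie_f[f][m] += lrate * (err * cf - reg * mf)
                shrink = reg * (c[u] + d[m] - global_mean)
                c[u] += lrate * (err - shrink)
                d[m] += lrate * (err - shrink)
    return model


global_mean = sum(r for _, _, r in RATINGS) / len(RATINGS)
model = init_model(3, 6, 2, global_mean)
rmse_before = rmse(model, RATINGS)
train(model, RATINGS, global_mean)
rmse_after = rmse(model, RATINGS)
print(f"training RMSE before: {rmse_before:.4f}, after: {rmse_after:.4f}")
print("predicted rating for U2 on M1:", round(predict(model, 1, 0), 2))
```

• The sketch reports training RMSE only; on the real data the slides evaluate on a hold-out part of probe.txt, and a stopping rule based on RMSE improvement would replace the fixed epoch count.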