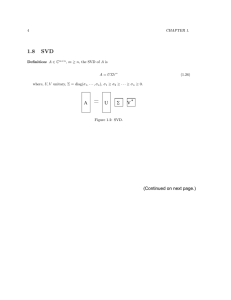

SVD: Singular Value Decomposition


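Motivation

Four ways to factor A and solve Ax = b (assume A has full rank):

- A: any matrix with a complete set of e-vectors. $A = S\Lambda S^{-1}$, $A^{-1} = S\Lambda^{-1}S^{-1}$, $x = S\Lambda^{-1}S^{-1}b$
- A: any matrix. $A = LU$; solve $LUx = b$ as $Ly = b$, then $Ux = y$
- A: symmetric matrix. $A = Q\Lambda Q^T$, $A^{-1} = Q\Lambda^{-1}Q^T$, $x = Q\Lambda^{-1}Q^Tb$
- A: any matrix. $A = U\Sigma V^T$, $A^{-1} = V\Sigma^{-1}U^T$, $x = V\Sigma^{-1}U^Tb$. Clearly the winner.

Ideas Behind SVD

- There are many choices of basis in $C(A^T)$ and $C(A)$, but we want the orthonormal ones
- Goal: for $A_{m\times n}$, find orthonormal bases for $C(A^T)$ and $C(A)$

[Figure: A maps an orthonormal basis of the row space $C(A^T)$ in $R^n$ (nullspace: $Ax = 0$) to an orthonormal basis of the column space $C(A)$ in $R^m$ (left nullspace: $A^Ty = 0$).]

SVD (2X2)

I haven't told you how to find the $v_i$'s yet (see the 2x2 full-rank example, p.9).

Assume $r = 2$. Take unit vectors in $C(A^T)$ with $v_1 \perp v_2$, AND we want their images in $C(A)$ to satisfy $Av_1 \perp Av_2$:

$$u_1 = \frac{Av_1}{\|Av_1\|} = \frac{Av_1}{\sigma_1},\qquad u_2 = \frac{Av_2}{\|Av_2\|} = \frac{Av_2}{\sigma_2}$$

$$A\begin{bmatrix}v_1 & v_2\end{bmatrix} = \begin{bmatrix}\sigma_1u_1 & \sigma_2u_2\end{bmatrix} = \begin{bmatrix}u_1 & u_2\end{bmatrix}\begin{bmatrix}\sigma_1 & 0\\ 0 & \sigma_2\end{bmatrix},\qquad AV = U\Sigma$$

The $\sigma_i$ represent the lengths of the images; hence they are non-negative.

SVD 2x2 (cont)

$$AV = U\Sigma \;\Rightarrow\; A = U\Sigma V^{-1} = U\Sigma V^T$$

Another diagonalization, using 2 sets of orthogonal bases.

Compare:
- When A has a complete set of e-vectors, we have $AS = S\Lambda$, $A = S\Lambda S^{-1}$, but S in general is not orthogonal
- When A is symmetric, we have $A = Q\Lambda Q^T$

Why are orthonormal bases good?

For an orthogonal matrix, $(\;)^{-1} = (\;)^T$.

Implication: matrix inversion

$$A = U\Sigma V^T \;\Rightarrow\; A^{-1} = \left(U\Sigma V^T\right)^{-1} = V\Sigma^{-1}U^T$$

Ax=b:

$$U\Sigma V^Tx = b \;\Rightarrow\; \Sigma V^Tx = U^Tb \quad\text{... a diagonal system}$$

More on U and V

V: eigenvectors of $A^TA$

$$A^TA = V\Sigma^TU^TU\Sigma V^T = V\Sigma^T\Sigma V^T = V\begin{bmatrix}\sigma_1^2 & 0\\ 0 & \sigma_2^2\end{bmatrix}V^T$$

U: eigenvectors of $AA^T$. Similarly,

$$AA^T = U\Sigma V^TV\Sigma^TU^T = U\Sigma\Sigma^TU^T = U\begin{bmatrix}\sigma_1^2 & 0\\ 0 & \sigma_2^2\end{bmatrix}U^T$$

Find $v_i$ first, then use $Av_i$ to find $u_i$. This is the key to solving for the SVD.

SVD: $A = U\Sigma V^T$

- The singular values are the diagonal entries of the $\Sigma$ matrix and are arranged in descending order
- The singular values are always real (nonnegative) numbers
- If A is a real matrix, U and V are also real

Example (2x2, full rank)

$$A = \begin{bmatrix}2 & 2\\ -1 & 1\end{bmatrix},\qquad A^TA = \begin{bmatrix}5 & 3\\ 3 & 5\end{bmatrix},\qquad v_1 = \begin{bmatrix}1/\sqrt2\\ 1/\sqrt2\end{bmatrix},\quad v_2 = \begin{bmatrix}-1/\sqrt2\\ 1/\sqrt2\end{bmatrix}$$

$$Av_1 = \begin{bmatrix}2\sqrt2\\ 0\end{bmatrix} = \sigma_1u_1,\quad \sigma_1 = 2\sqrt2,\quad u_1 = \begin{bmatrix}1\\ 0\end{bmatrix}$$

$$Av_2 = \begin{bmatrix}0\\ \sqrt2\end{bmatrix} = \sigma_2u_2,\quad \sigma_2 = \sqrt2,\quad u_2 = \begin{bmatrix}0\\ 1\end{bmatrix}$$

$$A = U\Sigma V^T = \begin{bmatrix}1 & 0\\ 0 & 1\end{bmatrix}\begin{bmatrix}2\sqrt2 & 0\\ 0 & \sqrt2\end{bmatrix}\begin{bmatrix}1/\sqrt2 & 1/\sqrt2\\ -1/\sqrt2 & 1/\sqrt2\end{bmatrix}$$

STEPS:
1. Find the e-vectors of $A^TA$; normalize the basis
2. Compute $Av_i$ to get $\sigma_i$. If $\sigma_i \neq 0$, get $u_i = Av_i/\sigma_i$; else find $u_i$ from $N(A^T)$

SVD Theory

$$AV = U\Sigma \;\Rightarrow\; Av_j = \sigma_ju_j,\quad j = 1, 2, \dots, r$$

- If $\sigma_j = 0$: $Av_j = 0$, so $v_j$ is in $N(A)$. The corresponding $u_j$ is in $N(A^T)$, since $u_j^TA = \sigma_jv_j^T = 0$
- Else: $v_j$ is in $C(A^T)$. The corresponding $u_j$ is in $C(A)$
- Number of nonzero $\sigma_j$ = rank

Example (2x2, rank deficient)

$$A = \begin{bmatrix}2 & 2\\ 1 & 1\end{bmatrix},\quad r = 1,\quad C(A^T)\ \text{basis}: \begin{bmatrix}1\\ 1\end{bmatrix};\quad v_1 = \frac{1}{\sqrt2}\begin{bmatrix}1\\ 1\end{bmatrix}$$

$$Av_1 = \frac{1}{\sqrt2}\begin{bmatrix}4\\ 2\end{bmatrix} = \sqrt{10}\cdot\frac{1}{\sqrt5}\begin{bmatrix}2\\ 1\end{bmatrix} = \sigma_1u_1$$

$$v_2 \perp v_1,\quad v_2 \in N(A),\quad Av_2 = 0 \;\Rightarrow\; v_2 = \frac{1}{\sqrt2}\begin{bmatrix}1\\ -1\end{bmatrix}$$

($v_2$ can also be obtained from the e-vectors of $A^TA$.)

$$u_2 \perp u_1,\quad u_2 \in N(A^T),\quad A^Tu_2 = 0 \;(u_2^TA = 0) \;\Rightarrow\; u_2 = \frac{1}{\sqrt5}\begin{bmatrix}1\\ -2\end{bmatrix}$$

$$A = \begin{bmatrix}2 & 2\\ 1 & 1\end{bmatrix} = U\Sigma V^T = \frac{1}{\sqrt5}\begin{bmatrix}2 & 1\\ 1 & -2\end{bmatrix}\begin{bmatrix}\sqrt{10} & 0\\ 0 & 0\end{bmatrix}\frac{1}{\sqrt2}\begin{bmatrix}1 & 1\\ 1 & -1\end{bmatrix}$$

Example (cont)

$$A = U\Sigma V^T = \begin{bmatrix}u_1 & u_2\end{bmatrix}\begin{bmatrix}\sigma_1 & 0\\ 0 & 0\end{bmatrix}\begin{bmatrix}v_1^T\\ v_2^T\end{bmatrix} = \begin{bmatrix}u_1 & u_2\end{bmatrix}\begin{bmatrix}\sigma_1v_1^T\\ 0\end{bmatrix} = \sigma_1u_1v_1^T$$

The bases of $N(A)$ and $N(A^T)$ ($v_2$ and $u_2$ here) do not contribute to the final result. They are computed to make U and V orthogonal.

Extend to $A_{m\times n}$

$$Av_i = \sigma_iu_i,\quad i = 1, \dots, r$$

$$A\begin{bmatrix}v_1 & \cdots & v_r & v_{r+1} & \cdots & v_n\end{bmatrix} = \begin{bmatrix}u_1 & \cdots & u_r & u_{r+1} & \cdots & u_m\end{bmatrix}\begin{bmatrix}\operatorname{diag}(\sigma_1, \dots, \sigma_r) & 0\\ 0 & 0\end{bmatrix}$$

- $v_{r+1}, \dots, v_n$: basis of $N(A)$
- $u_{r+1}, \dots, u_m$: basis of $N(A^T)$

Dimension check:

$$AV = U\Sigma,\qquad A_{m\times n} = U_{m\times m}\,\Sigma_{m\times n}\,V^T_{n\times n}$$

$$\left(A^TA\right)_{n\times n} = V\Sigma^TU^TU\Sigma V^T = V\left(\Sigma^T\Sigma\right)_{n\times n}V^T$$

$$\left(AA^T\right)_{m\times m} = U\Sigma V^TV\Sigma^TU^T = U\left(\Sigma\Sigma^T\right)_{m\times m}U^T$$

Extend to $A_{m\times n}$ (cont)

Summation of r rank-one matrices!

$$A = \begin{bmatrix}\sigma_1u_1 & \cdots & \sigma_ru_r & 0 & \cdots & 0\end{bmatrix}\begin{bmatrix}v_1^T\\ \vdots\\ v_r^T\\ v_{r+1}^T\\ \vdots\\ v_n^T\end{bmatrix} = \sigma_1u_1v_1^T + \cdots + \sigma_ru_rv_r^T$$

The bases of $N(A)$ and $N(A^T)$ do not contribute. They are useful only for nullspace solutions.

Example (2x3)

$$A = \begin{bmatrix}-1 & 1 & 0\\ 0 & -1 & 1\end{bmatrix},\quad r = 2,\quad A_{2\times3}V_{3\times3} = U_{2\times2}\Sigma_{2\times3}$$

$$V:\quad A^TA = \begin{bmatrix}1 & -1 & 0\\ -1 & 2 & -1\\ 0 & -1 & 1\end{bmatrix}$$

$$\lambda_1 = 3,\ v_1 = \frac{1}{\sqrt6}\begin{bmatrix}1\\ -2\\ 1\end{bmatrix};\qquad \lambda_2 = 1,\ v_2 = \frac{1}{\sqrt2}\begin{bmatrix}-1\\ 0\\ 1\end{bmatrix};\qquad \lambda_3 = 0,\ v_3 = \frac{1}{\sqrt3}\begin{bmatrix}1\\ 1\\ 1\end{bmatrix}\ \text{(nullspace)}$$

$$Av_1 = \frac{1}{\sqrt6}\begin{bmatrix}-3\\ 3\end{bmatrix} = \sqrt3\cdot\frac{1}{\sqrt2}\begin{bmatrix}-1\\ 1\end{bmatrix},\quad \sigma_1 = \sqrt3;\qquad Av_2 = \frac{1}{\sqrt2}\begin{bmatrix}1\\ 1\end{bmatrix},\quad \sigma_2 = 1$$

$$A = U\Sigma V^T = \frac{1}{\sqrt2}\begin{bmatrix}-1 & 1\\ 1 & 1\end{bmatrix}\begin{bmatrix}\sqrt3 & 0 & 0\\ 0 & 1 & 0\end{bmatrix}\begin{bmatrix}1/\sqrt6 & -2/\sqrt6 & 1/\sqrt6\\ -1/\sqrt2 & 0 & 1/\sqrt2\\ 1/\sqrt3 & 1/\sqrt3 & 1/\sqrt3\end{bmatrix}$$

The columns of U span $C(A)$; $v_1, v_2$ span $C(A^T)$; $v_3$ spans $N(A)$.

Example (3x3, symmetric)

$$A = \begin{bmatrix}1 & 2 & 1\\ 2 & 3 & 2\\ 1 & 2 & 1\end{bmatrix},\qquad AA^T = A^TA = \begin{bmatrix}6 & 10 & 6\\ 10 & 17 & 10\\ 6 & 10 & 6\end{bmatrix}$$

- Eigenvalues of $A^TA$, $AA^T$: 28.86, 0.14, 0
- Number of non-zero singular values = rank of A (here 2)
- Eigenvectors of $A^TA$, $AA^T$ (A is symmetric, so $u_i$ and $v_i$ agree up to sign):

$$u_1 = v_1 = \begin{bmatrix}0.454\\ 0.766\\ 0.454\end{bmatrix},\qquad u_2 = \pm v_2,\ v_2 = \begin{bmatrix}0.542\\ -0.643\\ 0.542\end{bmatrix},\qquad u_3 = \pm v_3,\ v_3 = \begin{bmatrix}-0.707\\ 0\\ 0.707\end{bmatrix}$$

Expansion of A:

$$A = \sum_{i=1}^{2}\sigma_iu_iv_i^T$$

Summary

- SVD chooses the right bases for the 4 subspaces: $AV = U\Sigma$
- $v_1 \dots v_r$: orthonormal basis in $R^n$ for $C(A^T)$
- $v_{r+1} \dots v_n$: $N(A)$
- $u_1 \dots u_r$: orthonormal basis in $R^m$ for $C(A)$
- $u_{r+1} \dots u_m$: $N(A^T)$
- These bases are not only orthogonal, but also satisfy $Av_i = \sigma_iu_i$
- High points of Linear Algebra: dimension, rank, orthogonality, basis, diagonalization, …

SVD Applications

- Using the SVD in computation, rather than A itself, has the advantage of being more robust to numerical error
- Many applications:
  - Inverse of matrix A
  - Condition of a matrix
  - Image compression
  - Solve Ax=b for all cases (unique, many, no solutions; least square solutions)
  - Rank determination, matrix approximation, …
- The SVD is usually found by iterative methods (see Numerical Recipes, Chap. 2)

Matrix Inverse

A is nonsingular iff $\sigma_i \neq 0$ for all i:

$$A = U\Sigma V^T \quad\text{or}\quad A^{-1} = V\Sigma^{-1}U^T,\quad\text{where}\ \Sigma^{-1} = \operatorname{diag}(1/\sigma_1, 1/\sigma_2, \dots, 1/\sigma_n)$$

A is singular or ill-conditioned:

$$A^{-1} = \left(U\Sigma V^T\right)^{-1} \approx V\Sigma_0^{-1}U^T,\quad\text{where}\ \left(\Sigma_0^{-1}\right)_{ii} = \begin{cases}1/\sigma_i & \text{if } \sigma_i > t\\ 0 & \text{otherwise}\end{cases}$$

Degree of singularity of a matrix

- System of linear equations Ax = b: ill-conditioned when small changes in b produce large changes in x
- Degree of singularity of A: $\sigma_1/\sigma_n$

SVD and Ax=b (m×n)

Check for existence of a solution:

$$U\Sigma V^Tx = b \;\Rightarrow\; \Sigma V^Tx = U^Tb \;\Rightarrow\; \Sigma z = d,\quad z = V^Tx,\ d = U^Tb$$

If $\sigma_i = 0$ but $d_i \neq 0$, a solution does not exist.

Ax=b (inconsistent)

$$A = \begin{bmatrix}2 & 2\\ 1 & 1\end{bmatrix},\ b = \begin{bmatrix}8\\ 3\end{bmatrix},\qquad A = U\Sigma V^T = \frac{1}{\sqrt5}\begin{bmatrix}2 & 1\\ 1 & -2\end{bmatrix}\begin{bmatrix}\sqrt{10} & 0\\ 0 & 0\end{bmatrix}\frac{1}{\sqrt2}\begin{bmatrix}1 & 1\\ 1 & -1\end{bmatrix}$$

$$\begin{bmatrix}\sqrt{10} & 0\\ 0 & 0\end{bmatrix}\begin{bmatrix}z_1\\ z_2\end{bmatrix} = U^Tb = \frac{1}{\sqrt5}\begin{bmatrix}2 & 1\\ 1 & -2\end{bmatrix}\begin{bmatrix}8\\ 3\end{bmatrix} = \begin{bmatrix}19/\sqrt5\\ 2/\sqrt5\end{bmatrix}$$

The second row reads $0\cdot z_2 = 2/\sqrt5$. No solution!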
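The existence check above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: it assumes the factors $U$ and $\sigma_i$ of $A = \begin{bmatrix}2 & 2\\ 1 & 1\end{bmatrix}$ from the rank-deficient example, and the helper names (`matvec`, `transpose`, `has_solution`) and the tolerance `tol` are hypothetical choices made for the sketch.

```python
import math

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def transpose(M):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

# SVD factors of A = [[2, 2], [1, 1]] (assumed from the rank-deficient example)
s5 = math.sqrt(5)
U = [[2 / s5, 1 / s5],
     [1 / s5, -2 / s5]]
sigma = [math.sqrt(10), 0.0]

def has_solution(b, tol=1e-12):
    """Ax = b is solvable iff d_i = 0 wherever sigma_i = 0, with d = U^T b."""
    d = matvec(transpose(U), b)
    return all(abs(s) > tol or abs(di) <= tol for s, di in zip(sigma, d))

print(has_solution([8, 3]))  # inconsistent right-hand side
print(has_solution([8, 4]))  # right-hand side in the column space
```

With $b = (8, 3)$ the second component of $d$ is $2/\sqrt5 \neq 0$ while $\sigma_2 = 0$, so the check fails; with $b = (8, 4)$ that component vanishes and the system is solvable.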
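Ax=b (underdetermined)

$$A = \begin{bmatrix}2 & 2\\ 1 & 1\end{bmatrix},\ b = \begin{bmatrix}8\\ 4\end{bmatrix},\qquad A = U\Sigma V^T = \frac{1}{\sqrt5}\begin{bmatrix}2 & 1\\ 1 & -2\end{bmatrix}\begin{bmatrix}\sqrt{10} & 0\\ 0 & 0\end{bmatrix}\frac{1}{\sqrt2}\begin{bmatrix}1 & 1\\ 1 & -1\end{bmatrix}$$

$$\begin{bmatrix}\sqrt{10} & 0\\ 0 & 0\end{bmatrix}\begin{bmatrix}z_1\\ z_2\end{bmatrix} = U^Tb = \frac{1}{\sqrt5}\begin{bmatrix}2 & 1\\ 1 & -2\end{bmatrix}\begin{bmatrix}8\\ 4\end{bmatrix} = \begin{bmatrix}20/\sqrt5\\ 0\end{bmatrix} \;\Rightarrow\; z_1 = 2\sqrt2$$

$$x_{particular} = Vz = \frac{1}{\sqrt2}\begin{bmatrix}1 & 1\\ 1 & -1\end{bmatrix}\begin{bmatrix}2\sqrt2\\ 0\end{bmatrix} = \begin{bmatrix}2\\ 2\end{bmatrix}$$

$$x_{complete} = x_{particular} + x_{null} = \begin{bmatrix}2\\ 2\end{bmatrix} + c\begin{bmatrix}1/\sqrt2\\ -1/\sqrt2\end{bmatrix}$$

Pseudo Inverse (Sec7.4, p.395)

- The role of A: takes a vector $v_i$ from the row space to $\sigma_iu_i$ in the column space
- The role of $A^{-1}$ (if it exists): does the opposite, taking a vector $u_i$ from the column space back to the row space

$$Av_i = \sigma_iu_i \;\Rightarrow\; v_i = \sigma_iA^{-1}u_i \;\Rightarrow\; A^{-1}u_i = \frac{1}{\sigma_i}v_i$$

Pseudo Inverse (cont)

- While $A^{-1}$ may not exist, a matrix that takes $u_i$ back to $v_i/\sigma_i$ does exist. It is denoted $A^+$, the pseudo inverse
- $A^+$ has dimension n by m

$$A^+u_i = \frac{1}{\sigma_i}v_i\ \text{for}\ i \le r \quad\text{and}\quad A^+u_i = 0\ \text{for}\ i > r$$

$$A^+ = V_{n\times n}\,\Sigma^+_{n\times m}\,U^T_{m\times m} = \begin{bmatrix}v_1 & \cdots & v_r & \cdots & v_n\end{bmatrix}\begin{bmatrix}\operatorname{diag}(\sigma_1^{-1}, \dots, \sigma_r^{-1}) & 0\\ 0 & 0\end{bmatrix}\begin{bmatrix}u_1 & \cdots & u_r & \cdots & u_m\end{bmatrix}^T$$

Pseudo Inverse and Ax=b

A panacea for Ax=b:

$$Ax = b \;\Rightarrow\; x = A^+b = V\Sigma^+U^Tb$$

Overdetermined case: find the solution that minimizes the error $r = |Ax - b|$, the least square solution. Compare with $A^TA\hat{x} = A^Tb$.

Ex: full rank

$$A = \begin{bmatrix}2 & 2\\ -1 & 1\end{bmatrix},\ b = \begin{bmatrix}0\\ 2\end{bmatrix},\qquad A = U\Sigma V^T = \begin{bmatrix}1 & 0\\ 0 & 1\end{bmatrix}\begin{bmatrix}2\sqrt2 & 0\\ 0 & \sqrt2\end{bmatrix}\frac{1}{\sqrt2}\begin{bmatrix}1 & 1\\ -1 & 1\end{bmatrix}$$

$$Ax = b \;\Rightarrow\; U\Sigma V^Tx = b \;\Rightarrow\; x = V\operatorname{diag}(1/\sigma_i)\,U^Tb$$

$$x = \frac{1}{\sqrt2}\begin{bmatrix}1 & -1\\ 1 & 1\end{bmatrix}\begin{bmatrix}\frac{1}{2\sqrt2} & 0\\ 0 & \frac{1}{\sqrt2}\end{bmatrix}\begin{bmatrix}1 & 0\\ 0 & 1\end{bmatrix}\begin{bmatrix}0\\ 2\end{bmatrix} = \begin{bmatrix}-1\\ 1\end{bmatrix}$$

Ex: over-determined

$$A = \begin{bmatrix}-1 & 0\\ 1 & -1\\ 0 & 1\end{bmatrix},\ b = \begin{bmatrix}-1\\ 2\\ 1\end{bmatrix},\quad r = 2,\quad A_{3\times2}V_{2\times2} = U_{3\times3}\Sigma_{3\times2}$$

$$A = U\Sigma V^T = \begin{bmatrix}1/\sqrt6 & -1/\sqrt2 & 1/\sqrt3\\ -2/\sqrt6 & 0 & 1/\sqrt3\\ 1/\sqrt6 & 1/\sqrt2 & 1/\sqrt3\end{bmatrix}\begin{bmatrix}\sqrt3 & 0\\ 0 & 1\\ 0 & 0\end{bmatrix}\frac{1}{\sqrt2}\begin{bmatrix}-1 & 1\\ 1 & 1\end{bmatrix},\qquad A^+ = V\Sigma^+U^T$$

The third column of U multiplies a zero row of $\Sigma$. Will show this need not be computed…

Over-determined (cont)

$$x = V\Sigma^+U^Tb = \frac{1}{\sqrt2}\begin{bmatrix}-1 & 1\\ 1 & 1\end{bmatrix}\begin{bmatrix}\frac{1}{\sqrt3} & 0 & 0\\ 0 & 1 & 0\end{bmatrix}\begin{bmatrix}-4/\sqrt6\\ 2/\sqrt2\\ 2/\sqrt3\end{bmatrix} = \begin{bmatrix}5/3\\ 1/3\end{bmatrix}$$

Compare with $A^TA\hat{x} = A^Tb$:

$$\begin{bmatrix}2 & -1\\ -1 & 2\end{bmatrix}\begin{bmatrix}x_1\\ x_2\end{bmatrix} = \begin{bmatrix}3\\ -1\end{bmatrix} \;\Rightarrow\; \begin{bmatrix}x_1\\ x_2\end{bmatrix} = \frac{1}{3}\begin{bmatrix}2 & 1\\ 1 & 2\end{bmatrix}\begin{bmatrix}3\\ -1\end{bmatrix} = \begin{bmatrix}5/3\\ 1/3\end{bmatrix}$$

Same result!!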
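The comparison above can be reproduced with a short sketch. It assumes the over-determined example is $A = \begin{bmatrix}-1 & 0\\ 1 & -1\\ 0 & 1\end{bmatrix}$, $b = (-1, 2, 1)$ with the singular triples $(\sigma_i, u_i, v_i)$ listed in the code; the function names `pinv_solve` and `normal_equations_2col` are hypothetical. Only the first $r$ triples are used, which is why the nullspace column of U never needs to be computed.

```python
import math

def dot(p, q):
    """Inner product of two vectors."""
    return sum(pi * qi for pi, qi in zip(p, q))

# Assumed over-determined example and its first r = 2 singular triples
A = [[-1, 0], [1, -1], [0, 1]]
b = [-1, 2, 1]
s2, s3, s6 = math.sqrt(2), math.sqrt(3), math.sqrt(6)
triples = [
    (s3, [1 / s6, -2 / s6, 1 / s6], [-1 / s2, 1 / s2]),   # (sigma_1, u_1, v_1)
    (1.0, [-1 / s2, 0.0, 1 / s2], [1 / s2, 1 / s2]),      # (sigma_2, u_2, v_2)
]

def pinv_solve(triples, b):
    """x = A^+ b = sum over i <= r of (u_i . b / sigma_i) v_i."""
    x = [0.0] * len(triples[0][2])
    for sigma, u, v in triples:
        coeff = dot(u, b) / sigma
        x = [xi + coeff * vi for xi, vi in zip(x, v)]
    return x

def normal_equations_2col(A, b):
    """Solve (A^T A) x = A^T b for a two-column A using Cramer's rule."""
    c0 = [row[0] for row in A]
    c1 = [row[1] for row in A]
    g00, g01, g11 = dot(c0, c0), dot(c0, c1), dot(c1, c1)
    r0, r1 = dot(c0, b), dot(c1, b)
    det = g00 * g11 - g01 * g01
    return [(r0 * g11 - g01 * r1) / det, (g00 * r1 - g01 * r0) / det]

x_svd = pinv_solve(triples, b)
x_ne = normal_equations_2col(A, b)
print(x_svd, x_ne)  # both should be close to (5/3, 1/3)
```

Cramer's rule is used here only to keep the sketch dependency-free; for larger or ill-conditioned systems the SVD route is the more robust of the two.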
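Ex: general case, no solution

$$A = \begin{bmatrix}2 & 2\\ 1 & 1\end{bmatrix},\ b = \begin{bmatrix}8\\ 3\end{bmatrix},\qquad A = U\Sigma V^T = \frac{1}{\sqrt5}\begin{bmatrix}2 & 1\\ 1 & -2\end{bmatrix}\begin{bmatrix}\sqrt{10} & 0\\ 0 & 0\end{bmatrix}\frac{1}{\sqrt2}\begin{bmatrix}1 & 1\\ 1 & -1\end{bmatrix}$$

$$x = V\Sigma^+U^Tb = \frac{1}{\sqrt2}\begin{bmatrix}1 & 1\\ 1 & -1\end{bmatrix}\begin{bmatrix}\frac{1}{\sqrt{10}} & 0\\ 0 & 0\end{bmatrix}\frac{1}{\sqrt5}\begin{bmatrix}2 & 1\\ 1 & -2\end{bmatrix}\begin{bmatrix}8\\ 3\end{bmatrix} = \begin{bmatrix}1/5 & 1/10\\ 1/5 & 1/10\end{bmatrix}\begin{bmatrix}8\\ 3\end{bmatrix} = \begin{bmatrix}19/10\\ 19/10\end{bmatrix}$$

The system $2x + 2y = 8$, $x + y = 3$ has no exact solution; $A^+b$ gives the least square solution $x = y = 19/10$.

Matrix Approximation

- $A_i = U\Sigma_iV^T$, where $\Sigma_i$ is the rank-i version of $\Sigma$ (obtained by setting the last $m - i$ $\sigma$'s to zero)
- $A_i$ is the best rank-i approximation to A in the sense of Euclidean distance

$$A = \sigma_1u_1v_1^T + \sigma_2u_2v_2^T + \cdots + \sigma_mu_mv_m^T$$

- Storage saving: a rank-one matrix needs only $(m + n)$ numbers
- Operation saving: $m + n$
- Set the small $\sigma$'s to zero and back substitute (see next page for the application in image compression)

Image Compression

- As described in text p.352
- For grey scale images: $m \times n$ bytes
- After SVD, taking the most significant r terms:

$$A \approx \sigma_1u_1v_1^T + \sigma_2u_2v_2^T + \cdots + \sigma_ru_rv_r^T$$

- Only need to store $r(m + n + 1)$ numbers

[Figure: original 64×64 image and its reconstructions with r = 1, 3, 5, 10, 16 (no perceivable difference afterwards).]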
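The storage count and the truncated sum can be illustrated with a small sketch. It is not from the slides: the function names `svd_storage` and `rank_r_sum` are hypothetical, and the demonstration matrix is assumed to be the 2x2 full-rank example $A = \begin{bmatrix}2 & 2\\ -1 & 1\end{bmatrix}$ with $\sigma_1 = 2\sqrt2$, $\sigma_2 = \sqrt2$.

```python
import math

def svd_storage(m, n, r):
    """Numbers stored for r terms: each term needs u_i (m), v_i (n), sigma_i (1)."""
    return r * (m + n + 1)

def rank_r_sum(triples, r):
    """Sum of the first r rank-one terms sigma_i * u_i * v_i^T."""
    m, n = len(triples[0][1]), len(triples[0][2])
    A_r = [[0.0] * n for _ in range(m)]
    for sigma, u, v in triples[:r]:
        for i in range(m):
            for j in range(n):
                A_r[i][j] += sigma * u[i] * v[j]
    return A_r

# Storage for a 64x64 grey scale image versus the r values on the slide
full = 64 * 64
for r in (1, 3, 5, 10, 16):
    print(r, svd_storage(64, 64, r), full)

# Singular triples assumed from the 2x2 full-rank example A = [[2, 2], [-1, 1]]
s2 = math.sqrt(2)
triples = [
    (2 * s2, [1.0, 0.0], [1 / s2, 1 / s2]),
    (s2, [0.0, 1.0], [-1 / s2, 1 / s2]),
]
A1 = rank_r_sum(triples, 1)  # best rank-one approximation
A2 = rank_r_sum(triples, 2)  # all terms: recovers A up to rounding
print(A1, A2)
```

For the 64×64 case, r = 16 stores 2064 numbers against 4096 for the raw image, so the saving only holds while r stays well below $mn/(m+n+1)$.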