CMPT 334 Computer Organization

advertisement

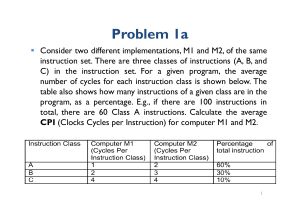

Chapter 4 The Processor (Pipelining)
[Adapted from Computer Organization and Design, 5th Edition, Patterson & Hennessy, © 2014, MK]

Improving Performance
• Ultimate goal: improve system performance
• One idea: pipeline the CPU
• Pipelining is a technique in which multiple instructions are overlapped in execution
• It relies on the fact that the various parts of the CPU aren't all used at the same time
• Let's look at an analogy

Sequential Laundry
• Four roommates need to do laundry
• How long does it take to do the laundry sequentially?
  ▫ Washer, dryer, "folder", and "storer" each take 30 minutes
  ▫ Total time: 8 hours for four loads (4 loads × 4 steps × 30 minutes)

Pipelined Laundry
• How long does it take if we can overlap the tasks?
  ▫ Only 3.5 hours! The first load needs 4 × 30 = 120 minutes, and each of the other three loads finishes 30 minutes after the one before it: 120 + 3 × 30 = 210 minutes

Pipelining Notes
• Pipelining doesn't help the latency of a single task; it helps the throughput of the entire workload
  ▫ How many instructions can we execute per second?
• Potential speedup = number of stages

MIPS Pipeline
• Five stages, one step per stage
  1. IF: Instruction fetch from memory
  2. ID: Instruction decode & register read
  3. EX: Execute operation or calculate address
  4. MEM: Access memory operand
  5. WB: Write result back to register

Stages of the Datapath
• Stage 1: Instruction Fetch
  ▫ No matter what the instruction, the 32-bit instruction word must first be fetched from memory
  ▫ Every time we fetch an instruction, we also increment the PC to prepare it for the next instruction fetch
    PC = PC + 4, to point to the next instruction
• Stage 2: Instruction Decode
  ▫ First, read the opcode to determine the instruction type and field lengths
  ▫ Second, read in data from all necessary registers
    For add, read two registers
    For addi, read one register
    For jal, no register read is necessary
• Stage 3: Execution
  ▫ Uses the ALU
  ▫ The real work of most instructions is done here: arithmetic, logic, etc.
  ▫ What about loads and stores, e.g., lw $t0, 40($t1)?
    The address we are accessing in memory is 40 + the contents of $t1
    We can use the ALU to do this addition in this stage
• Stage 4: Memory Access
  ▫ Only load and store instructions do anything during this stage; the others remain idle
• Stage 5: Register Write
  ▫ Most instructions write the result of some computation into a register
  ▫ Examples: arithmetic, logical, shifts, loads, slt
  ▫ What about stores, branches, jumps?
    They don't write anything into a register at the end, so they remain idle during this fifth stage (jal is an exception: it writes the return address into $ra)

Datapath Walkthrough: LW, SW
• lw $s3, 17($s1)
  ▫ Stage 1: fetch this instruction, increment PC
  ▫ Stage 2: decode to find it's a lw, then read register $s1
  ▫ Stage 3: add 17 to the value in register $s1 (retrieved in Stage 2)
  ▫ Stage 4: read the value from the memory address computed in Stage 3
  ▫ Stage 5: write the value read in Stage 4 into register $s3
• sw $s3, 17($s1)
  ▫ Stage 1: fetch this instruction, increment PC
  ▫ Stage 2: decode to find it's a sw, then read registers $s1 and $s3
  ▫ Stage 3: add 17 to the value in register $s1 (retrieved in Stage 2)
  ▫ Stage 4: write the value in register $s3 (retrieved in Stage 2) into the memory address computed in Stage 3
  ▫ Stage 5: go idle (nothing to write into a register)

Datapath Walkthrough: SLTI, ADD
• slti $s3, $s1, 17
  ▫ Stage 1: fetch this instruction, increment PC
  ▫ Stage 2: decode to find it's an slti, then read register $s1
  ▫ Stage 3: compare the value retrieved in Stage 2 with the integer 17
  ▫ Stage 4: go idle
  ▫ Stage 5: write the result of Stage 3 into register $s3
• add $s3, $s1, $s2
  ▫ Stage 1: fetch this instruction, increment PC
  ▫ Stage 2: decode to find it's an add, then read registers $s1 and $s2
  ▫ Stage 3: add the two values retrieved in Stage 2
  ▫ Stage 4: idle (nothing to write to memory)
  ▫ Stage 5: write the result of Stage 3 into register $s3

Pipeline Performance
• Assume the time for each stage is
  ▫ 100 ps for register read or write
  ▫ 200 ps for other stages
• Compare the pipelined datapath with the single-cycle datapath

Instr    | Instr fetch | Register read | ALU op | Memory access | Register write | Total time
lw       | 200 ps      | 100 ps        | 200 ps | 200 ps        | 100 ps         | 800 ps
sw       | 200 ps      | 100 ps        | 200 ps | 200 ps        |                | 700 ps
R-format | 200 ps      | 100 ps        | 200 ps |               | 100 ps         | 600 ps
beq      | 200 ps      | 100 ps        | 200 ps |               |                | 500 ps

• Single-cycle (Tc = 800 ps): the clock must fit the slowest instruction, lw
• Pipelined (Tc = 200 ps): the clock must fit the slowest stage

Pipeline Speedup
• If all stages are balanced
  ▫ i.e., all take the same time
  ▫ $$\text{Time between instructions}_{\text{pipelined}} = \frac{\text{Time between instructions}_{\text{nonpipelined}}}{\text{Number of stages}}$$
• If not balanced, speedup is less

Limits to Pipelining: Hazards
• Situations that prevent starting the next instruction in the next cycle
• Structure hazards
  ▫ A required resource is busy
• Data hazards
  ▫ Need to wait for a previous instruction to complete its data read/write
• Control hazards
  ▫ Deciding on a control action depends on a previous instruction

Data Hazards
• An instruction depends on completion of data access by a previous instruction
  ▫ add $s0, $t0, $t1
    sub $t2, $s0, $t3
  ▫ sub needs the new value of $s0 that add computes, so we must stall the pipeline until it is available

Exercise 4.8
Stage   | IF     | ID     | EX     | MEM    | WB
Latency | 250 ps | 350 ps | 150 ps | 300 ps | 200 ps

Instruction | R-type | beq | lw  | sw
Mix         | 45%    | 20% | 20% | 15%

• What is the clock cycle time in a pipelined and non-pipelined processor?
  ▫ Pipelined: 350 ps (the slowest stage, ID)
  ▫ Single-cycle: 1250 ps (the sum of all five stages)
• What is the total latency of an lw instruction in a pipelined and non-pipelined processor?
  ▫ Pipelined: 1750 ps (5 stages × 350 ps per cycle)
  ▫ Single-cycle: 1250 ps
• What is the utilization of the data memory?
  ▫ 35% (only lw and sw access it: 20% + 15%)
• What is the utilization of the write-register port of the "Registers" unit?
  ▫ 65% (R-type and lw write a register: 45% + 20%)
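The arithmetic behind the laundry analogy, the Pipeline Performance table, and the Exercise 4.8 answers can be checked with a few lines of Python. This is a minimal sketch under simplifying assumptions that are ours, not the slides'. Every stage takes exactly one clock cycle. The pipelined clock is set by the slowest stage. The single-cycle clock must fit an instruction that uses every stage (lw). There are no stalls. The helper names (`total_time`, `pipelined_latency`, and so on) are hypothetical.

```python
# Minimal sketch (assumptions ours): one stage per clock cycle, no stalls.

def single_cycle_time(stage_ps):
    # Single-cycle clock must fit an instruction that uses every stage (lw).
    return sum(stage_ps)

def pipelined_cycle_time(stage_ps):
    # Pipelined clock must fit the slowest stage.
    return max(stage_ps)

def pipelined_latency(stage_ps):
    # One instruction spends one full pipelined cycle in each stage.
    return len(stage_ps) * pipelined_cycle_time(stage_ps)

def total_time(n_tasks, n_stages, step, pipelined):
    # Sequential: each task runs all stages before the next one starts.
    # Pipelined: the first task takes n_stages steps; each later task
    # finishes one step after the previous one.
    if pipelined:
        return (n_stages + n_tasks - 1) * step
    return n_tasks * n_stages * step

def utilization(mix_percent, users):
    # Percentage of executed instructions that use a given unit.
    return sum(mix_percent[op] for op in users)

# Laundry analogy: 4 loads, 4 steps (wash, dry, fold, store) of 30 minutes each.
laundry_seq = total_time(4, 4, 30, pipelined=False)
laundry_pipe = total_time(4, 4, 30, pipelined=True)

# Pipeline Performance slide: IF, register read, ALU, memory, register write (ps).
perf = [200, 100, 200, 200, 100]

# Exercise 4.8: stage latencies (ps) and instruction mix (percent).
ex48 = [250, 350, 150, 300, 200]
mix = {"R-type": 45, "beq": 20, "lw": 20, "sw": 15}

print(f"laundry: {laundry_seq} min sequential vs {laundry_pipe} min pipelined")
print(f"perf Tc: {single_cycle_time(perf)} ps single-cycle vs {pipelined_cycle_time(perf)} ps pipelined")
print(f"4.8 Tc: {single_cycle_time(ex48)} ps single-cycle vs {pipelined_cycle_time(ex48)} ps pipelined")
print(f"4.8 lw latency: {single_cycle_time(ex48)} ps single-cycle vs {pipelined_latency(ex48)} ps pipelined")
print(f"4.8 data memory utilization: {utilization(mix, ['lw', 'sw'])}%")
print(f"4.8 register write port utilization: {utilization(mix, ['R-type', 'lw'])}%")
```

Running it reproduces each number in these notes:

- Laundry: 480 minutes sequential versus 210 minutes pipelined.
- Pipeline Performance example: 800 ps versus 200 ps.
- Exercise 4.8: 1250 ps versus 350 ps cycle time, 1250 ps versus 1750 ps lw latency, and 35% and 65% utilization.

Integer percentages are used for the mix so the utilization sums are exact.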
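The add/sub pair on the Data Hazards slide is a read-after-write dependence: without stalling, sub would read $s0 in ID before add writes it back in WB. Below is a minimal sketch of how such hazards could be flagged. It rests on assumptions not stated on the slides: a classic five-stage pipeline with no forwarding, and a register file that is written in the first half of a cycle and read in the second half. Under those assumptions, an instruction d positions after the one it depends on needs max(0, 3 - d) stall cycles. The `Instr` type and `raw_hazards` function are hypothetical illustrations.

```python
from typing import List, NamedTuple, Optional, Tuple

class Instr(NamedTuple):
    text: str
    dest: Optional[str]    # register written in WB (None for sw, beq, ...)
    srcs: Tuple[str, ...]  # registers read in ID

def raw_hazards(program: List[Instr]) -> List[Tuple[str, str, str, int]]:
    """Return (producer, consumer, register, stall_cycles) for each RAW hazard.

    Counting cycles so that instruction k is fetched in cycle k, it is in ID
    during cycle k + 1 and in WB during cycle k + 4. The consumer's ID may not
    come before the producer's WB, so a dependence at distance d needs
    max(0, 3 - d) stalls; only d = 1 and d = 2 need any. Stalls caused by
    earlier hazards are not taken into account in this simple version.
    """
    found = []
    for j, consumer in enumerate(program):
        shadowed = set()  # registers already matched to a nearer producer
        for i in range(j - 1, max(-1, j - 3), -1):  # nearest producer first
            reg = program[i].dest
            if reg in consumer.srcs and reg not in shadowed and reg != "$zero":
                shadowed.add(reg)
                found.append((program[i].text, consumer.text, reg, 3 - (j - i)))
    return found

program = [
    Instr("add $s0, $t0, $t1", "$s0", ("$t0", "$t1")),
    Instr("sub $t2, $s0, $t3", "$t2", ("$s0", "$t3")),
]

for producer, consumer, reg, stalls in raw_hazards(program):
    print(f"{consumer} reads {reg} written by {producer}: {stalls} stall cycle(s)")
```

For the two-instruction example it reports 2 stall cycles. Placing one independent instruction between add and sub would cut that to 1, and two independent instructions would remove the stall entirely.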