Exploiting Choice: Instruction Fetch and Issue on an Implementable


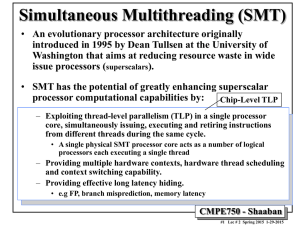

Dean M. Tullsen, Susan J. Eggers, Joel S. Emer, Henry M. Levy, Jack L. Lo, and Rebecca L. Stamm

Presented by Kim Ki Young @ DCSLab

Simultaneous Multithreading (SMT)
- A technique that permits multiple independent threads to issue multiple instructions each cycle to a superscalar processor's functional units
- Two major impediments to processor utilization
  - long latencies
  - limited per-thread parallelism

1. Demonstrate that the throughput gains of SMT are possible without extensive changes to a conventional, wide-issue superscalar processor
2. Show that SMT need not compromise single-thread performance
3. Use a detailed architecture model to analyze and relieve bottlenecks that did not exist in the more idealized model
4. Show how simultaneous multithreading creates an advantage previously unexploitable in other architectures

A projection of current superscalar design trends 3-5 years into the future

Changes necessary to support simultaneous multithreading
- Multiple program counters
- A separate return stack for each thread
- Per-thread instruction retirement, instruction queue flush, and trap mechanisms
- A thread id with each branch target buffer entry
- A larger register file

MIPSI
- MIPS-based simulator
- executes unmodified Alpha object code

Workload
- SPEC92 benchmark suite: five floating-point programs, two integer programs
- TeX
- Multiflow trace scheduling compiler

- With only a single thread, throughput is less than 2% below a superscalar without SMT support
- Peak throughput is 84% higher than the superscalar
- Three problems
  - IQ size
  - Fetch throughput
  - Lack of parallelism

Improve fetch throughput without increasing the fetch bandwidth
- alg.num1.num2
  - alg: fetch selection method
  - num1: number of threads that can fetch in one cycle
  - num2: maximum number of instructions fetched per thread in one cycle
- Partitioning the fetch unit
  - RR.1.8
  - RR.2.4, RR.4.2: some hardware addition
  - RR.2.8: additional logic is required

Fetch Policies
- BRCOUNT: favor threads that are least likely to be on a wrong path
- MISSCOUNT: favor threads that have the fewest outstanding D-cache misses
- ICOUNT: favor threads with the fewest instructions in decode
- IQPOSN: favor threads whose instructions are farther from the head of the IQ

Unblocking the Fetch Unit
- BIGQ: increase the IQ's size as long as we don't increase the search space (double the size, search only the first 32 entries)
- ITAG: do the I-cache tag lookup a cycle early

Two sources of issue slot waste
- Wrong-path instructions: result from mispredicted branches
- Optimistically issued instructions: result from a cache miss or bank conflict

Issue Algorithms
- OPT_LAST
- SPEC_LAST
- BRANCH_FIRST

- Issue Bandwidth: not a bottleneck
- Instruction Queue Size: not a bottleneck; experiments with larger queues increased throughput by less than 1%
- Fetch Bandwidth: prime candidate for bottleneck status; increasing the IQ size and the number of excess registers increased performance by another 7%
- Branch Prediction: SMT is less sensitive to it
- Speculative Execution: not a bottleneck, but eliminating it would be an issue
- Memory Throughput: infinite-bandwidth caches would increase throughput by only 3%
- Register File Size: no sharp drop-off point
- Fetch throughput is still a bottleneck

- Borrows heavily from conventional superscalar design, requiring little additional hardware support
- Minimizes the impact on single-thread performance, running only 2% slower in that scenario
- Achieves significant throughput improvements over the superscalar when many threads are running

- Intel Pentium 4, 2002: Hyper-Threading Technology (HTT), 30% speed improvement
- MIPS MT
- IBM POWER5, 2004: two-thread SMT engine
- Sun UltraSPARC T1, 2005: CMT, i.e. SMT + CMP (chip-level multiprocessing)
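To make the alg.num1.num2 notation and the ICOUNT policy from the fetch slides concrete, here is a minimal Python sketch of one fetch cycle. It is an illustration under stated assumptions, not the paper's simulator: `ThreadState`, `icount_select`, the `fetchable` field, and the rule of skipping threads that cannot fetch this cycle are all hypothetical names and simplifications introduced here. The `in_flight` field stands in for the slide's "instructions in decode" count.

```python
from dataclasses import dataclass


@dataclass
class ThreadState:
    tid: int
    in_flight: int   # instructions not yet issued (the ICOUNT metric; assumed field)
    fetchable: int   # instructions this thread could supply this cycle (assumed input)


def icount_select(threads, num1, num2, total_width=8):
    """Pick up to num1 threads with the fewest in-flight instructions and
    fetch at most num2 instructions from each, never exceeding total_width
    instructions in the cycle. Returns a list of (tid, n_fetched) pairs."""
    # Assumption: a thread that cannot fetch this cycle is not a candidate.
    candidates = [t for t in threads if t.fetchable > 0]
    # Lowest in-flight count first; ties broken by thread id for determinism.
    ranked = sorted(candidates, key=lambda t: (t.in_flight, t.tid))
    picks = []
    remaining = total_width
    for t in ranked[:num1]:
        n = min(num2, t.fetchable, remaining)
        if n == 0:
            break
        picks.append((t.tid, n))
        remaining -= n
    return picks


threads = [
    ThreadState(tid=0, in_flight=12, fetchable=8),
    ThreadState(tid=1, in_flight=3, fetchable=5),
    ThreadState(tid=2, in_flight=7, fetchable=8),
    ThreadState(tid=3, in_flight=1, fetchable=0),
]
print(icount_select(threads, num1=2, num2=8))  # ICOUNT.2.8 -> [(1, 5), (2, 3)]
print(icount_select(threads, num1=2, num2=4))  # ICOUNT.2.4 -> [(1, 4), (2, 4)]
```

In the 2.8 configuration the second thread only fills the slots the first thread left empty, which is why that scheme is listed as needing additional logic, while 2.4 statically splits the eight slots between two threads.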