Spectral Approaches to Nearest Neighbor Search

arXiv:1408.0751

Robert Krauthgamer (Weizmann Institute)

Joint with: Amirali Abdullah, Alexandr Andoni, Ravi Kannan

Les Houches, January 2015

Nearest Neighbor Search (NNS)

Preprocess: a set $P$ of $n$ points in $\mathbb{R}^d$

Query: given a query point $q$, report a point $p^* \in P$ with the smallest distance to $q$

Motivation

Generic setup:

Points model objects (e.g. images)

Distance models (dis)similarity measure

Application areas:

machine learning: k-NN rule

signal processing, vector quantization, bioinformatics, etc.

Distance can be:

Hamming, Euclidean, edit distance, earth-mover distance, …

[Figure: two 6×6 binary images, labeled $p^*$ and $q$]

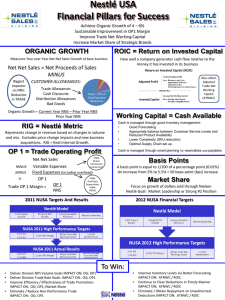

Curse of Dimensionality

All exact algorithms degrade rapidly with the dimension $d$

| Algorithm | Query time | Space |
| --- | --- | --- |
| Full indexing | $O(\log n \cdot d)$ | $n^{O(d)}$ (Voronoi diagram size) |
| No indexing (linear scan) | $O(n \cdot d)$ | $O(n \cdot d)$ |
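
As a point of reference for the "no indexing" row, here is a minimal NumPy sketch of an exact linear scan. The function name `linear_scan_nn` and the synthetic data are illustrative assumptions, not part of the talk.

```python
import numpy as np

def linear_scan_nn(P, q):
    """Exact nearest neighbor by brute force.

    P is an (n, d) array of data points, q a length-d query.
    Runs in O(n * d) time and needs no preprocessing beyond storing P.
    """
    dists = np.linalg.norm(P - q, axis=1)   # Euclidean distance to every point
    i = int(np.argmin(dists))
    return i, float(dists[i])

# Illustrative data (assumption): 1000 Gaussian points in 32 dimensions.
rng = np.random.default_rng(0)
P = rng.normal(size=(1000, 32))
q = P[17] + 0.01 * rng.normal(size=32)      # query planted next to point 17
idx, dist = linear_scan_nn(P, q)
```

The scan touches every coordinate of every point, which is the $O(n \cdot d)$ query cost that the indexing schemes in the rest of the talk try to beat.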

Approximate NNS

Given a query point $q$, report $p' \in P$ s.t. $\|p' - q\| \le c \cdot \min_{p^* \in P} \|p^* - q\|$

$c \ge 1$: approximation factor

randomized: return such $p'$ with probability $\ge 90\%$

Heuristic perspective: gives a set of candidates (hopefully small)
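
The approximation guarantee can be checked directly against a brute-force scan. The sketch below assumes Euclidean distance; the helper name `is_c_approximate` and the toy points are hypothetical.

```python
import numpy as np

def is_c_approximate(P, q, candidate_index, c):
    """True if P[candidate_index] is within factor c of the true nearest distance to q."""
    dists = np.linalg.norm(P - q, axis=1)
    return bool(dists[candidate_index] <= c * dists.min())

# Toy instance (assumption): three points on a line, query at 0.25.
P = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 0.0]])
q = np.array([0.25, 0.0])
exact_ok = is_c_approximate(P, q, 0, c=1.0)    # the true nearest neighbor
loose_ok = is_c_approximate(P, q, 1, c=4.0)    # distance 0.75 <= 4 * 0.25
too_far = is_c_approximate(P, q, 2, c=4.0)     # distance 2.75 >  4 * 0.25
```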

NNS algorithms

It’s all about space partitions!

Low-dimensional

[Arya-Mount’93], [Clarkson’94], [Arya-Mount-Netanyahu-Silverman-Wu’98], [Kleinberg’97], [Har-Peled’02], [Arya-Fonseca-Mount’11], …

High-dimensional

[Indyk-Motwani’98], [Kushilevitz-Ostrovsky-Rabani’98], [Indyk’98, ’01], [Gionis-Indyk-Motwani’99], [Charikar’02], [Datar-Immorlica-Indyk-Mirrokni’04], [Chakrabarti-Regev’04], [Panigrahy’06], [Ailon-Chazelle’06], [Andoni-Indyk’06], [Andoni-Indyk-Nguyen-Razenshteyn’14], [Andoni-Razenshteyn’15]

Low-dimensional

kd-trees,…

$c = 1 + \epsilon$

runtime: $\epsilon^{-O(d)} \cdot \log n$

High-dimensional

Locality-Sensitive Hashing

Crucial use of random projections

Johnson-Lindenstrauss Lemma: project to random subspace of dimension $O(\epsilon^{-2} \log n)$ for $1 + \epsilon$ approximation

Runtime: $n^{1/c}$ for $c$-approximation
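
A rough sketch of the random-projection step follows. It uses a scaled Gaussian matrix, a common stand-in for projecting onto a random subspace. The constant 8 in the target dimension and the name `jl_project` are assumptions made for illustration only.

```python
import numpy as np

def jl_project(X, target_dim, rng):
    """Map rows of X from d to target_dim dimensions with a scaled Gaussian matrix."""
    d = X.shape[1]
    R = rng.normal(size=(d, target_dim)) / np.sqrt(target_dim)
    return X @ R

rng = np.random.default_rng(1)
n, d, eps = 200, 2000, 0.5
m = int(np.ceil(8 * np.log(n) / eps**2))   # O(eps^-2 log n); the 8 is an assumed constant
X = rng.normal(size=(n, d))
Y = jl_project(X, m, rng)

# Compare distances from point 0 to all other points before and after projection.
orig = np.linalg.norm(X[1:] - X[0], axis=1)
proj = np.linalg.norm(Y[1:] - Y[0], axis=1)
ratios = proj / orig
```

The projection matrix is drawn without looking at the data, which is exactly the "data-oblivious" property that the data-aware methods later in the talk give up.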


Practice

Data-aware partitions

optimize the partition to your dataset

PCA-tree [Sproull’91, McNames’01, Verma-Kpotufe-Dasgupta’09]

randomized kd-trees [Silpa-Anan-Hartley’08, Muja-Lowe’09]

spectral/PCA/semantic/WTA hashing [Weiss-Torralba-Fergus’08, Wang-Kumar-Chang’09, Salakhutdinov-Hinton’09, Yagnik-Strelow-Ross-Lin’11]

Practice vs Theory

Data-aware projections often outperform (vanilla) random-projection methods

But no guarantees (correctness or performance)

JL generally optimal [Alon’03, Jayram-Woodruff’11]

Even for some NNS setups! [Andoni-Indyk-Patrascu’06]

Why do data-aware projections outperform random projections?

Algorithmic framework to study phenomenon?

Plan for the rest

Model

Two spectral algorithms

Conclusion


Our model

“low-dimensional signal + large noise” inside high dimensional space

Signal: $P \subset U$ for subspace $U \subset \mathbb{R}^d$ of dimension $k \ll d$

Data: each point is perturbed by a full-dimensional Gaussian noise $N_d(0, \sigma^2 I_d)$
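
To make the model concrete, here is a small generator for synthetic instances. The model allows arbitrary signal points inside $U$; drawing Gaussian coefficients and a random subspace is an assumption of this sketch, as is the function name.

```python
import numpy as np

def sample_noisy_subspace_data(n, d, k, sigma, rng):
    """Draw n signal points in a random k-dim subspace U of R^d, then add N(0, sigma^2 I_d) noise.

    Returns (noisy_data, signal, basis), where basis is a (d, k) orthonormal basis of U.
    """
    basis, _ = np.linalg.qr(rng.normal(size=(d, k)))   # orthonormal columns spanning U
    coeffs = rng.normal(size=(n, k))                    # assumed signal distribution
    signal = coeffs @ basis.T                           # rows lie in U, rank <= k
    noise = rng.normal(scale=sigma, size=(n, d))        # full-dimensional Gaussian noise
    return signal + noise, signal, basis

rng = np.random.default_rng(2)
n, d, k = 500, 400, 5
noisy, signal, basis = sample_noisy_subspace_data(n, d, k, sigma=d ** -0.25, rng=rng)
```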

Model properties

Data $\tilde{P} = P + G$

Query $\tilde{q} = q + g_q$ s.t.:

$\|q - p^*\| \le 1$ for “nearest neighbor” $p^*$

$\|q - p\| \ge 1 + \epsilon$ for everybody else

points in $P$ have at least unit norm

Noise entries $N(0, \sigma^2)$

$\sigma \approx 1/d^{1/4}$, up to factor $\mathrm{poly}(\epsilon^{-1} k \log n)$

Claim: exact nearest neighbor is still the same

Noise is large:

has magnitude $\sigma \sqrt{d} \approx d^{1/4} \gg 1$

top $k$ dimensions of $\tilde{P}$ capture sub-constant mass

JL would not work: after noise, gap very close to 1
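
The two "noise is large" statements can be sanity-checked numerically. The sketch below ignores the poly factor in $\sigma$ and uses the standard identity that independent $N(0, \sigma^2 I_d)$ noise on both points adds $2\sigma^2 d$ to the expected squared distance. The values of `d` and `eps` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(3)
d = 10_000
eps = 0.5                        # illustrative gap parameter (assumption)
sigma = d ** -0.25               # sigma ~ d^{-1/4}, poly(...) factor ignored

# Norm of one noise vector: concentrates near sigma * sqrt(d) = d^{1/4}.
g = rng.normal(scale=sigma, size=d)
noise_norm = float(np.linalg.norm(g))
predicted_norm = float(sigma * np.sqrt(d))

# Expected squared distances after noise is added to both the query and the data point.
near_sq = 1.0 + 2 * sigma**2 * d
far_sq = (1.0 + eps) ** 2 + 2 * sigma**2 * d
expected_gap = float(np.sqrt(far_sq / near_sq))   # ratio of root-mean-square distances
```

With these numbers the noise norm is about 10 while the original distances are about 1, and the far-to-near ratio drops from $1 + \epsilon = 1.5$ to roughly 1.003.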

Algorithms via PCA

Find the “signal subspace” $U$?

then can project everything to $U$ and solve NNS there

Use Principal Component Analysis (PCA)?

≈ extract top direction(s) from SVD

e.g., $k$-dimensional space $S$ that minimizes $\sum_{p \in \tilde{P}} d^2(p, S)$ over the noisy data $\tilde{P}$

If PCA removes noise “perfectly”, we are done:

$S = U$

Can reduce to $k$-dimensional NNS
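
The PCA objective above can be computed with a single SVD. The following sketch (uncentered, so $S$ passes through the origin) checks that the top-$k$ right singular subspace attains a cost equal to the sum of the discarded squared singular values and does no worse than a random subspace. The function names and the synthetic data are assumptions.

```python
import numpy as np

def pca_subspace(X, k):
    """Return a (d, k) orthonormal basis of the top-k right singular subspace and all singular values."""
    _, s, Vt = np.linalg.svd(X, full_matrices=False)
    return Vt[:k].T, s

def sum_sq_dist_to_subspace(X, S):
    """Sum over rows p of X of d^2(p, S), for S given by orthonormal columns."""
    resid = X - (X @ S) @ S.T
    return float(np.sum(resid ** 2))

rng = np.random.default_rng(4)
scales = np.array([5.0, 4.0, 3.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0])
X = rng.normal(size=(100, 10)) * scales          # three strong directions, seven weak ones
S, s = pca_subspace(X, 3)
pca_cost = sum_sq_dist_to_subspace(X, S)
tail_energy = float(np.sum(s[3:] ** 2))          # squared singular values that PCA discards

R, _ = np.linalg.qr(rng.normal(size=(10, 3)))    # a random 3-dim subspace for comparison
random_cost = sum_sq_dist_to_subspace(X, R)
```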

NNS performance as if we are in 𝑘 dimensions for full model?

Best we can hope for

dataset contains a “worst-case” 𝑘-dimensional instance

Reduction from dimension 𝑑 to 𝑘

Spoiler: Yes


PCA under noise fails

Does PCA find “signal subspace” $U$ under noise?

No

PCA minimizes $\sum_{p \in \tilde{P}} d^2(p, S)$ over the noisy data $\tilde{P}$

good only on “average”, not “worst-case”

weak signal directions overpowered by noise directions

typical noise direction contributes $\sum_{i=1}^{n} g_i^2 \sigma^2 = \Theta(n \sigma^2)$

1st Algorithm: intuition

Extract “well-captured points”

points with signal mostly inside top PCA space

should work for large fraction of points

Iterate on the rest


Iterative PCA

• Find top PCA subspace $S$

• $C$ = points well-captured by $S$

• Build NNS data structure on {$C$ projected onto $S$}

• Iterate on the remaining points, $P \setminus C$

Query: query each NNS data structure separately

To make this work:

Nearly no noise in $S$: ensuring $S$ close to $U$

$S$ determined by heavy-enough spectral directions (dimension may be less than $k$)

Capture only points whose signal fully in $S$

well-captured: distance to $S$ explained by noise only

Simpler model

Assume: small noise

$\tilde{p}_i = p_i + \alpha_i$, where $\|\alpha_i\| \le \alpha \ll \epsilon$

can be even adversarial

Algorithm:

• Find top-$k$ PCA subspace $S$

• $C$ = points well-captured by $S$: well-captured if $d(\tilde{p}, S) \le 2\alpha$

• Build NNS on {$C$ projected onto $S$}

• Iterate on remaining points, $\tilde{P} \setminus C$

Query: query each NNS separately

Claim 1: if $p^*$ captured by $C$, will find it in NNS

for any captured $\tilde{p}$: $\|\tilde{p}_S - \tilde{q}_S\| = \|\tilde{p} - \tilde{q}\| \pm 4\alpha = \|p - q\| \pm 5\alpha$

Claim 2: number of iterations is $O(\log n)$

$\sum_{\tilde{p} \in \tilde{P}} d^2(\tilde{p}, S) \le \sum_{\tilde{p} \in \tilde{P}} d^2(\tilde{p}, U) \le n \cdot \alpha^2$

for at most 1/4-fraction of points, $d^2(\tilde{p}, S) \ge 4\alpha^2$

hence constant fraction captured in each iteration
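
The simpler-model algorithm can be sketched in a few lines of NumPy. This is a sketch under stated assumptions, not the authors' implementation: a linear scan stands in for each $k$-dimensional NNS structure, candidates are re-ranked by true distance, and a safeguard (not in the slides) ends the loop if an iteration captures nothing. All function names are hypothetical.

```python
import numpy as np

def top_k_subspace(X, k):
    """(d, k) orthonormal basis of the top-k right singular subspace of X (uncentered PCA)."""
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    return Vt[:k].T

def build_iterative_pca(X, k, alpha):
    """Peel off well-captured points until none remain.

    Returns a list of (S, indices, projected) triples, one per iteration.
    """
    remaining = np.arange(X.shape[0])
    parts = []
    while remaining.size > 0:
        S = top_k_subspace(X[remaining], k)
        proj = X[remaining] @ S                                   # coordinates inside S
        resid = np.linalg.norm(X[remaining] - proj @ S.T, axis=1)
        captured = resid <= 2 * alpha                             # well-captured test
        if not captured.any():
            captured[:] = True                                    # safeguard (assumption)
        parts.append((S, remaining[captured], proj[captured]))
        remaining = remaining[~captured]
    return parts

def query_iterative_pca(parts, X, q):
    """Query every part in its own subspace, then keep the candidate closest in R^d."""
    best_idx, best_dist = -1, np.inf
    for S, idx, proj in parts:
        j = int(np.argmin(np.linalg.norm(proj - q @ S, axis=1)))  # stand-in for k-dim NNS
        true_dist = float(np.linalg.norm(X[idx[j]] - q))
        if true_dist < best_dist:
            best_idx, best_dist = int(idx[j]), true_dist
    return best_idx, best_dist

# Synthetic instance (assumption): strong 3-dim signal plus noise of norm at most alpha.
rng = np.random.default_rng(5)
n, d, k, alpha = 300, 50, 3, 0.01
B, _ = np.linalg.qr(rng.normal(size=(d, k)))
signal = 10.0 * rng.normal(size=(n, k)) @ B.T
noise = rng.normal(size=(n, d))
noise *= alpha * rng.uniform(size=(n, 1)) / np.linalg.norm(noise, axis=1, keepdims=True)
X = signal + noise
parts = build_iterative_pca(X, k, alpha)
q = X[5] + 0.001 * rng.normal(size=d)
found, dist = query_iterative_pca(parts, X, q)
```

Every point ends up in exactly one part, so the query cost is the number of parts times the cost of one low-dimensional search.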

Analysis of general model

Need to use randomness of the noise

Want to say that “signal” is stronger than “noise” (on average)

Use random matrix theory

$\tilde{P} = P + G$

$G$ is random $n \times d$ with entries $N(0, \sigma^2)$

All singular values $\lambda^2 \le \sigma^2 n \approx n/\sqrt{d}$

$P$ has rank $\le k$ and $\|P\|_F^2 \ge n$

important directions have $\lambda^2 \ge \Omega(n/k)$

can ignore directions with $\lambda^2 \ll \epsilon n/k$

Important signal directions stronger than noise!

Closeness of subspaces?

Trickier than singular values

Top singular vector not stable under perturbation!

Only stable if second singular value much smaller

How to even define “closeness” of subspaces?

To the rescue: Wedin’s sin-theta theorem

$\sin \theta(S, U) = \max_{x \in S,\ \|x\| = 1} \ \min_{y \in U} \|x - y\|$

[Figure: subspaces $S$ and $U$ at angle $\theta$]
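
The max-min definition of $\sin \theta(S, U)$ reduces to a spectral norm: for an orthonormal basis $Q_S$ of $S$ and $Q_U$ of $U$, the distance from $x = Q_S c$ to $U$ is $\|(I - Q_U Q_U^\top) Q_S c\|$, and maximizing over unit $c$ gives the largest singular value. A minimal sketch, with a hypothetical function name:

```python
import numpy as np

def sin_theta(S, U):
    """sin of the largest angle from span(S) to span(U).

    S is (d, l) and U is (d, k), each with linearly independent columns.
    """
    Qs, _ = np.linalg.qr(S)
    Qu, _ = np.linalg.qr(U)
    residual = Qs - Qu @ (Qu.T @ Qs)      # part of span(S) orthogonal to span(U)
    return float(np.linalg.norm(residual, 2))

# Example in R^3: U is the xy-plane, S is a line tilted by angle t out of that plane.
t = 0.3
U = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
S_tilted = np.array([[np.cos(t)], [0.0], [np.sin(t)]])
S_inside = np.array([[1.0], [1.0], [0.0]])
S_normal = np.array([[0.0], [0.0], [1.0]])
tilted_val = sin_theta(S_tilted, U)
inside_val = sin_theta(S_inside, U)
normal_val = sin_theta(S_normal, U)
```

Note that the quantity is not symmetric when the dimensions differ: a line inside a plane has $\sin \theta = 0$, while the plane is not contained in the line.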

Wedin’s sin-theta theorem

Developed by [Davis-Kahan’70], [Wedin’72]

Theorem:

Consider $\tilde{P} = P + G$

$S$ is top-$l$ subspace of $\tilde{P}$

$U$ is the $k$-space containing $P$

Then: $\sin \theta(S, U) \le \dfrac{\|G\|}{\lambda_l(\tilde{P})}$

Another way to see why we need to take directions with sufficiently heavy singular values

Additional issue: Conditioning

After an iteration, the noise is not random anymore!

non-captured points might be “biased” by capturing criterion

Fix: estimate top PCA subspace from a small sample of the data

Might be purely due to analysis

But does not sound like a bad idea in practice either

Performance of Iterative PCA

Can prove there are $O(\sqrt{d \log n})$ iterations

In each, we have NNS in $\le k$ dimensional space

Overall query time: $O\left(\frac{1}{\epsilon^{O(k)}} \cdot d \cdot \log^{3/2} n\right)$

Reduced to $O(\sqrt{d \log n})$ instances of $k$-dimension NNS!

2nd Algorithm: PCA-tree

Closer to algorithms used in practice

• Find top PCA direction $v$

• Partition into slabs $\perp v$

• Snap points to $\perp$ hyperplane

• Recurse on each slice

[Figure: slabs perpendicular to $v$, annotated ≈ $\epsilon/k$]

Query:

• follow all tree paths that may contain $p^*$

2 algorithmic modifications

Centering:

Need to use centered PCA (subtract average)

Otherwise errors from perturbations accumulate

Sparsification:

Need to sparsify the set of points in each node of the tree

Otherwise can get a “dense” cluster:

not enough variance in signal

lots of noise
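
The PCA-tree with the centering modification might look roughly as follows. This is a sketch under assumptions: slab width, depth limit, leaf size and search radius are free parameters chosen here for illustration, the sparsification step is omitted, and the dictionary layout and function names are hypothetical.

```python
import numpy as np

def build_pca_tree(X, idx, width, depth, max_depth, leaf_size=8):
    """Recursively split points into slabs along the top centered-PCA direction.

    X holds the current (already snapped) coordinates of the points whose
    original indices are in idx.
    """
    if depth >= max_depth or len(idx) <= leaf_size:
        return {"leaf": True, "idx": idx}
    mean = X.mean(axis=0)
    Xc = X - mean                                    # centering: subtract the average
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    v = Vt[0]                                        # top PCA direction
    t = Xc @ v                                       # coordinate of each point along v
    slab = np.floor(t / width).astype(int)           # slabs perpendicular to v
    snapped = Xc - np.outer(t, v)                    # snap onto the hyperplane orthogonal to v
    children = {}
    for s in np.unique(slab):
        mask = slab == s
        children[int(s)] = build_pca_tree(
            snapped[mask], idx[mask], width, depth + 1, max_depth, leaf_size)
    return {"leaf": False, "mean": mean, "v": v, "width": width, "children": children}

def query_pca_tree(node, q, radius):
    """Collect indices from every root-to-leaf path that may hold a point within radius of q."""
    if node["leaf"]:
        return [int(i) for i in node["idx"]]
    qc = q - node["mean"]
    t = float(qc @ node["v"])
    q_snapped = qc - t * node["v"]
    lo = int(np.floor((t - radius) / node["width"]))
    hi = int(np.floor((t + radius) / node["width"]))
    out = []
    for s in range(lo, hi + 1):                      # every slab the query ball can touch
        child = node["children"].get(s)
        if child is not None:
            out.extend(query_pca_tree(child, q_snapped, radius))
    return out

# Illustrative run (assumption): Gaussian data with a query planted next to point 42.
rng = np.random.default_rng(6)
X = rng.normal(size=(500, 20))
tree = build_pca_tree(X, np.arange(500), width=0.5, depth=0, max_depth=6)
q = X[42] + 0.01 * rng.normal(size=20)
candidates = query_pca_tree(tree, q, radius=0.5)
```

Snapping is an orthogonal projection, so it never increases distances. Any point within `radius` of the query in the original space therefore stays within `radius` at every level and is always among the returned candidates, which can then be re-ranked by true distance.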

Analysis

An “extreme” version of Iterative PCA Algorithm:

just use the top PCA direction: guaranteed to have signal!

Main lemma: the tree depth is $\le 2k$

because each discovered direction close to $U$

snapping: like orthogonalizing with respect to each one

cannot have too many such directions

Query runtime: $O\left((k/\epsilon)^{2k}\right)$

Overall performs like $O(k \cdot \log k)$-dimensional NNS!

Wrap-up

Why do data-aware projections outperform random projections?

Algorithmic framework to study phenomenon?

Here:

Model: “low-dimensional signal + large noise”

like NNS in low dimensional space

via “right” adaptation of PCA

Immediate questions:

Other, less-structured signal/noise models?

Algorithms with runtime dependent on spectrum?

Broader Q: Analysis that explains empirical success?