Slides

advertisement

Jamie Frost – Franks Society MT10

What is language?

What is a language?

Wikipedia

“A set of symbols of

communication and

the elements used to

manipulate them.”

OED

“The system of spoken or

written communication used

by a particular country,

people, community, etc.,

typically consisting of words

used within a regular

grammatical and syntactic

structure.”

What is a language

• An alphabet

The possible symbols that each ‘unit’ in the

language can take.

For human languages, the alphabet may be at a

character level.

Or we could choose it to be at a word level...

What is a language

• An alphabet

•...can form a ‘string’

Σ2 = Σ × Σ gives us all the possible pairs of symbols.

Σ* = {λ} ∪ Σ ∪ (Σ × Σ) ∪ (Σ × Σ × Σ) ∪ ...

is known as the Kleene Star, and gives us all possible

strings, i.e. containing any combination of symbols and

of any length.

What is a language

• An alphabet

•...can form a ‘string’

•...which can be constrained by a grammar

Any sensible language doesn’t allow a unrestricted

combination of symbols. Human languages are bounded

by some grammatical structure.

Mint.

Grammars

So how do we define a grammar?

Grammars limit our possible strings to certain forms.

These vary in expressiveness – the more expressible

they are, the harder it is to do certain common tasks

with them.

Tasks might include:

Expressiveness

• “finding the

grammatical structure

of a string given a

grammar”, or

•“does a string satisfy a

given grammar?”.

•“are two grammars

equivalent?”

Task Complexity

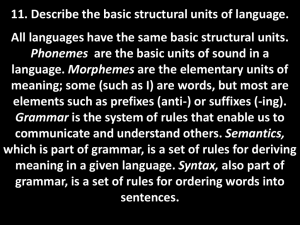

The Chomsky Hierarchy

In 1956, Noam Chomsky characterised languages according

to how ‘complex’ or expressible they are.

Grammar

Language

Type-0

Recursively Enumerable

Type-1

Context Sensitive

Type-2

Context Free

Type-3

Regular

This is known as the Chomsky Hierarchy.

A language that satisfies a given type is also a instance of all

the grammars above it.

A Formal Grammar

Consists of:

A start symbol (S ϵ N)

Terminal symbols (i.e. our

alphabet Σ)

1. S ⟶ i love T

2. T ⟶ T and T

3. T ⟶ smurfs

4. T ⟶ smurfettes

Non-terminal symbols (N)

Production rules

A Formal Grammar

Think of it as a game...

1. S ⟶ i love T

2. T ⟶ T and T

3. T ⟶ smurfs

4. T ⟶ smurfettes

A) Start with start symbol

S

B) We can use the production

rules the replace things.

⟶ i love T

⟶ i love T and T

⟶ i love smurfs and T

⟶ i love smurfs and T and T

⟶ i love smurfs and smurfs and T

⟶ i love smurfs and smurfs and smurfettes

C) We’re not allowed to

‘finish’ until we only have

terminal symbols.

Regular Grammars

The most restrictive.

The LHS of the production rules can only be a single

non-terminal.

The RHS of the production rules can be one of (a) a

single terminal symbol (b) a single non-terminal symbol

(c) a terminal followed by a non-terminal or (d) the

empty symbol.

The idea is that you don’t

have ‘memory’ of the

symbols you’ve

previously emitted in the

string.

Regular Grammars

Example

Example Generation:

S

⟶ aS

⟶ aaS

⟶ aaaT

⟶ aaabT

⟶aaabb

Notice we’re

always generating

at the end of the

string.

Regular Grammar

: If Σ = {a,b}, the language where two

b’s are never seen next to each other.

e.g. a, ababa, baa, b, aaa, ...

As a regular expression: L = a*(baa*)*(b|ϵ)

Often helpful to draw a picture:

a

b

a

This kind of

diagram is known

as a

‘nondeterministic

finite automaton’

or NFA.

Regular Grammar

We can use this picture to work out the regular

grammar:

a

b

a

It’s Voting Time...

The language of palindromes,

i.e. strings which are the same when

reversed, e.g. “madam”, “acrobats stab

orca”.

{ anbn | n ≥ 1 }

i.e. ab, aabb, aaabbb, aaaabbbb, ...

Neither are.

The problem is that we cannot ‘remember’ the symbols already emitted.

We can use something called the pumping lemma to check if a language is regular.

Context Free Grammars

The restriction on the RHS of the production rules is

now loosened; we can have any combination of nonterminals and terminals.

We still restrict the LHS however to a single nonterminal. This is why the grammar is known as

“context free”, since the production is not dependent

on the context in which it occurs.

While generating a string:

...

⟶ abXd

⟶ abyd

The production rule which allows the X to

become a y is not contingent on the

context, i.e. The preceding b or the

proceeding d.

Context Free Grammars

Examples

Example Generation:

S

⟶ aSa

⟶ acSca

⟶ acbca

Context Free Grammars

Examples

Example generation:

S

⟶ aSb

⟶ aaSbb

⟶ aaaSbbb

⟶ aaabbb

It’s Voting Time...

{ anbncn| n ≥ 1 }

i.e. abc, aabbcc, aaabbbccc, ...

Nope.

A bit harder to see this time.

Can use a variant of the Pumping Lemma called the Bar-Hillel Lemma to show it isn’t.

(But informal explanation as such: We could have non-terminals at the a-b and b-c boundary generating

these pairs, but since our language is context free these non-terminals expand independently of each

other, thus we can only ensure a and b have the same count, or b and c. And we can’t have a rule of the

form S-> X abc Y because then we can’t subsequently increase the number of b’s.)

Context-Sensitive Grammars

Now an expansion of a non-terminal is dependent on

the context it appears in.

Example generation:

S

⟶ aSBC

⟶ aaBCBC

⟶ aaBHBC

⟶ aaBBCC

⟶ aabBCC

⟶ aabbCC

⟶ aabbcC

⟶ aabbcc

i.e. a ‘C’ can change into a ‘c’ only when preceded by another ‘c’. Note

that this context (i.e. this preceding ‘c’) must remain unchanged.

Preservation of context is the only restriction in CSGs.

The Chomsky Hierarchy

Gram

mar

Language

Automaton

Type-0 Recursively Turing Machine

enumerable

Rules

α⟶β

Linear bounded Nondeterministic

Turing Machine

αAβ ⟶ αγβ

Type-2 Context

Free

Non-deterministic

Pushdown

Automaton

A⟶γ

Type-3 Regular

Finite State

Automaton

A ⟶ a and

A ⟶ aB

Type-1

Context

sensitive

The picture with circles and

arrows we saw earlier.

English as a CFG

Before we get on to classifying English according to the

Chomsky Hierarchy, let’s see how English might be

represented as a CFG.

Our starting non-terminal S is a sentence. Since

sentences operate independently syntactically, it’s

sufficient to examine grammar on a sentence level.

Our terminals/alphabet Σ is just a dictionary in the

literal sense.

Σ = { a, aardvark, .... , zebra, zoology, zyzzyva }

English as a CFG

Our non-terminals are ‘constituents’, such as noun phrases,

verb phrases, verbs, determiners, prepositional phrases, etc.

These can be subdivided into further constituents (e.g. NP =

noun phrase), or generate a terminals (e.g. V = verb)

Non-Terminal

Name

Example

NP

Noun Phrase

the cat

VP

Verb Phrase

chastised the politician

PP

Prepositional Phrase

with the broccoli

CONJ

Conjunction

and

V

Verb

chundered

ADV

Adverb

everywhere

English as a CFG

Can use an American style ‘top-down’ generative

form of grammar.

S ⟶ NP VP

NP ⟶ DT N

NP ⟶ PN

VP ⟶ VP PP

NP ⟶ NP PP

PP ⟶ P NP

NP ⟶ NP CONJ NP

DT ⟶ the

DT ⟶ a

N ⟶ monkey

N ⟶ student

N ⟶ telescope

PN ⟶ Corey

P ⟶ with

P ⟶ over

CONJ ⟶ and

CONJ ⟶ or

V ⟶ saw

V ⟶ ate

Ambiguity

Curiously, it’s possible to generate a sentence in

multiple ways!

S

⟶ NP VP

⟶ PN VP

⟶ Corey VP

⟶ Corey VP PP

⟶ Corey V NP PP

⟶ Corey saw NP PP

⟶ ...

⟶ Corey saw the monkey

with the telescope.

S

⟶ NP VP

⟶ PN VP

⟶ Corey VP

⟶ Corey V NP

⟶ Corey saw NP

⟶ Corey saw NP PP

⟶ ...

⟶ Corey saw the monkey

with the telescope.

What do each of these sentences

semantically mean?

Ambiguity

We say that a formal grammar that can yield the same string from multiple

derivations is ‘ambiguous’.

So what kind of language...

Is a Regular Grammar sufficient?

Chomsky demonstrates this by showing a particular part of

English grammar that behaves akin to a palindrome. We saw

earlier that palindromes are not regular.

But there’s a nicer more recent proof...

Embedded Structures (Yngve 60)

The cat likes tuna fish.

The cat the dog chased likes tuna fish.

The cat the dog the rat bit chased likes tuna fish.

The cat the dog the rat the elephant admired bit chased likes tuna fish.

Embedded Structures

The cat the dog the rat the elephant admired bit chased likes tuna fish.

If we let A = { the dog, the rat, the elephant } and

B = { admired, bit, chased } then we represent centre-embedding as

such:

But we already know from earlier that anbn is not regular!

So what kind of language...

Is a Context Free Grammar sufficient?

The fact constituents in a sentence are syntactically

independent of each other naturally supports a grammar that

is ‘context free’.

A number of scholars initially argued that number agreement

(e.g. “Which problem did your professor say was unsolvable?”)

couldn’t be captured by a CFG, but they were just being a bit

dim. This can easily be incorporated by just introducing a few

new non-terminals (e.g. NP_PLUR, NP_SING).

Swiss German

A number of languages, such as Dutch and Swiss

German, allow for cross-serial dependencies

...mer d’chind

...we

em Hans es huus

haend wele

the children/ACC Hans/DAT the house/ACC have

laa halfe aastriiche

wanted to let help paint.

DAT = Dative noun: the indirect object of a verb

(e.g. John gave Mary the book”).

ACC = Accusative noun: the direct object of a verb

(e.g. John gave Mary the book”).

Swiss German

Shieber (1985) notes that among such sentences, those with all accusative

NPs preceding all dative NPs, and all accusative-subcategorising verbs

preceding all dative-subcategorising verbs are acceptable.

...mer d’chind

...we

em Hans es huus

haend wele

the children/ACC Hans/DAT the house/ACC have

laa halfe aastriiche

wanted to let help paint.

The number of verbs requiring dative objects (halfe) must equal the

number of dative NPs (em Hans) and similarly for accusatives.

Can therefore put in form:

Swiss German

...mer d’chind

...we

em Hans es huus

haend wele

the children/ACC Hans/DAT the house/ACC have

laa halfe aastriiche

wanted to let help paint.

anbmcndm is not context free. It’s context-sensitive.

Summary

The Chomsky Hierarchy brings together different

grammar formalisms, listing them in increasing order of

expressiveness.

Languages don’t have to be human ones: they allow us to

generate strings with some given alphabet Σ, subject to

some grammatical constraints.

The least expressive languages are Regular Grammars,

which are insufficient to represent the English language.

But Context Free Grammars are insufficient to represent

languages with cross-serial dependencies, such as Swiss

German.

Questions?