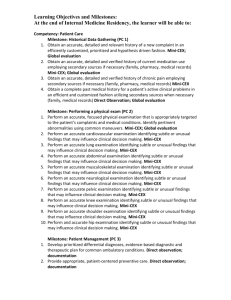

Using the Mini-Clinical Evaluation Exercise (Mini-CEX) as an assessment tool for medical students
April 29, 2011

Why consider the Mini-CEX?

Year 4 OSCE 2011 Overall Results
• Number sitting OSCE: 248
• Number passing: 245 (98.8%)

Competency Domains Reliably Measured and Other Competencies and Skills Measured

Competencies/Skills     Class Mean   SD   Requires Improvement   Alpha
Counselling             70           10   35                     .63
History-taking          65            5   38                     .72
Physical examination    78            8    3                     .75
Diagnosis               71           13   46                     .15
Management              66            8   53                     .02
Lab data                80           11   17                     .21
Communications          77            3    0                     .69
Professionalism         77            3    0                     .48

What is mini-CEX?
• Structured 10 min observation of a student performing specified tasks during routine practice
• Feedback session (10 min)
• Completion of a standardized one-page rating form

Mini-CEX: Types of tasks
• Focused history
• Physical examination
• Counselling
  – advise patient regarding management options
  – provide appropriate education
  – make recommendations that address patient’s concerns
• Clinical reasoning skills
  – diagnostic and therapeutic skills
• Case presentation

History-taking Form

Guidelines for marking

Reliability of the Mini-CEX
[Figure: Average composite ratings. Changes in reliability as a function of the observed number of encounters. Vertical axis: reliability, 0 to 1. Horizontal axis: number of encounters, 1 to 36.]

Implementing a Mini-CEX
• Orientation
  – Familiarize yourself with the mini-CEX rating form and definition of the components of the student’s performance you will be rating
• Schedule the mini-CEX
  – 1-2 / week
  – Allow 20 minutes for each assessment
  – Obtain patient permission
• Select the patient encounters to be observed
  – Year 3 “must see” list of medical conditions
    • new or existing medical problem
    • acute vs. chronic illness
    • different age groups and both genders
    • different clinical settings (e.g. office, hospital) if possible
  – Ask the student to perform the task without prompting about the possible diagnosis
    • perform an abdominal examination
    • Not: examine the patient for possible appendicitis

Assessment Process
• Avoid interrupting the student during the patient encounter
  – no questions, comments or suggestions
  – if you want to follow-up findings with patients, do this after the student is finished
• Conduct immediate feedback (10 minutes)
• Complete rating form
• Discuss rating or comments with student

Mini-CEX: Feedback
• Immediate
• Specific
• Limited to key issues
• Honest
• Fair
• Descriptive, not judgmental, e.g. “you did not examine X” NOT “you were way off base”
• Two-way process (inter-active)
• Start by asking the student some questions
  – how they felt they did with the patient
  – what findings they found
  – what they think is the most likely diagnosis
  – why they ordered a particular investigation or suggested a particular treatment
• Answers can stimulate specific feedback and also guide ratings of performance

Feedback challenge
• Easy when the student does well
• More difficult when the performance is poor
  – do not hesitate to point out area of weakness
  – multiple assessments with multiple examiners (reliability)
  – sampling performance across the spectrum of clinical situations (validity)

Mini-CEX: Feedback. Closing the loop
• Provide a recommendation
  – interviewing/ examination/ counselling skills/ management/ presentation
• Develop a specific action plan
  – allows student to act on the recommendation

Example of use of Mini-CEX
ER
• A man presents with abdominal pain
• The student performs a focused abdominal examination (10 minutes)
• The preceptor notices that the student did not examine the inguinal areas adequately
• Feedback is given
  – the preceptor demonstrates the correct technique
  – recommends a review of hernias in clinical skills textbook
  – suggests plan to practise exam technique

Summary: Key features of the Mini-CEX
• Direct assessment of actual patient care
• Allows assessment of performance
  – good evidence supporting mini-CEX’s validity and reliability
  – cumulatively can infer student’s competence
• Can be incorporated into daily activities
  – efficient use of resources
• Allows immediate and substantive feedback

Assessor’s training
• Paper-based orientation
  – Familiarize with the process, specific observation task and ratings form
  – All assessors who participated received this form of training
• Workshop* - video-based training
  – Videos exemplifying three levels of performance
  – Rated at the end on the form by all participants
*(Modeled after ABIM/NBME ‘Direct observation of Competence Training Program’, Holmboe et al., 2004)

Effects of training
• Assessors of the postgraduate trainees
  – Raters not trained in workshop were more lenient: 3.17 vs. 2.31; 6.17 vs. 4.85; 8.29 vs. 7.38
  – Both the scenario and training-group effects were significant

Reliability
• Generalizability-theory approach to reliability
  – Allows for estimating the variance-components attributable to the different factors of the measurement situation
  – Calculating G-coefficient (reliability coefficient)
  – Modeling the effect of changes in these factors (e.g., number of items needed to achieve certain level of precision)
• Number of mini-CEXs needed was the main factor followed through all studies (in one case, the effect of blueprinting was also explored)
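
Worked sketch: projecting reliability from the number of encounters

The modeling step described under Reliability can be illustrated with a small calculation. The sketch below assumes the simplest possible design, with students crossed with encounters and a single residual error term. The slides do not state which design the studies actually used. The function names and the variance components are hypothetical illustration values, not results from those studies.

```python
"""Sketch of a decision (D) study for a one-facet design: students x encounters.

All variance components used here are hypothetical illustration values,
not results from the studies summarized in these slides.
"""


def g_coefficient(var_student: float, var_residual: float, n_encounters: int) -> float:
    """Relative G coefficient for a mean score taken over n_encounters."""
    if n_encounters < 1:
        raise ValueError("n_encounters must be at least 1")
    # Averaging over n encounters divides the error variance by n.
    return var_student / (var_student + var_residual / n_encounters)


def encounters_needed(var_student, var_residual, target, max_encounters=100):
    """Smallest number of encounters whose G coefficient reaches target, else None."""
    for n in range(1, max_encounters + 1):
        # Small tolerance guards against floating-point rounding at the boundary.
        if g_coefficient(var_student, var_residual, n) >= target - 1e-12:
            return n
    return None


if __name__ == "__main__":
    # Hypothetical components: student variance 1.0, residual variance 3.0.
    for n in (1, 4, 8, 12):
        print(n, round(g_coefficient(1.0, 3.0, n), 2))
    print("encounters for G >= 0.8:", encounters_needed(1.0, 3.0, 0.8))
```

Under these assumptions the coefficient rises steeply over the first few encounters and then flattens. A real analysis would estimate the variance components from observed ratings and would usually include further facets such as assessor and case.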