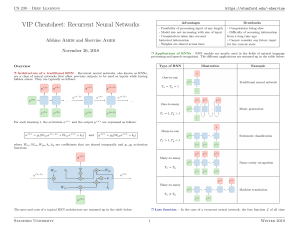

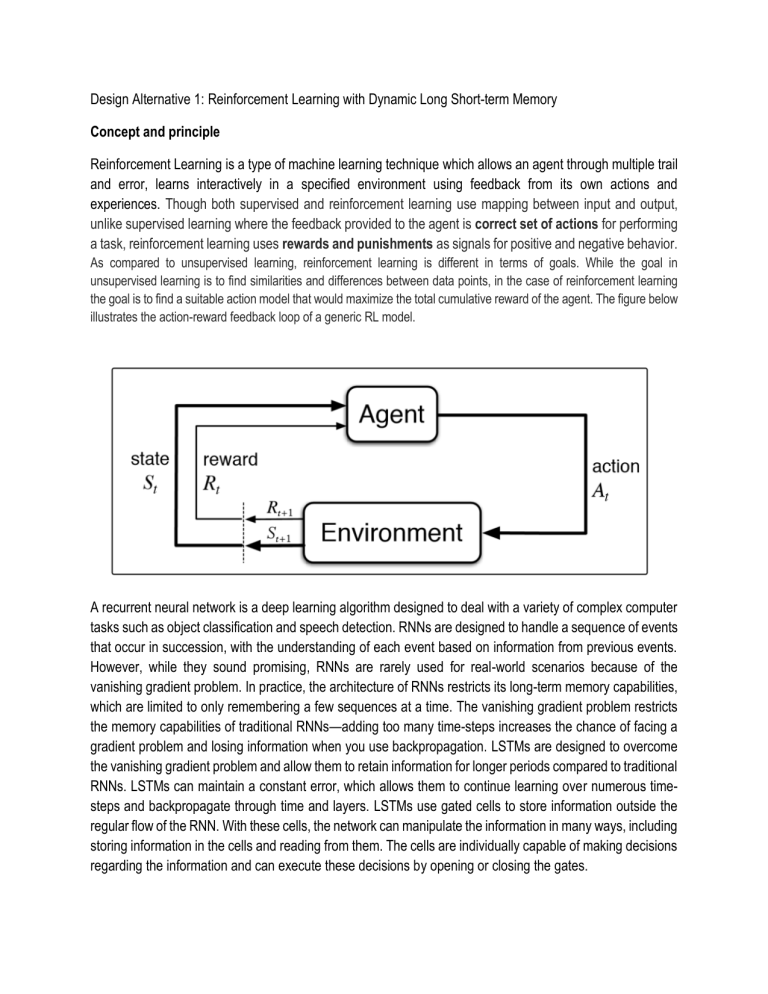

Design Alternative 1: Reinforcement Learning with Dynamic Long Short-term Memory

Concept and principle

Reinforcement learning (RL) is a machine learning technique in which an agent learns interactively in a specified environment through repeated trial and error, using feedback from its own actions and experiences. Both supervised and reinforcement learning map inputs to outputs, but the feedback differs: in supervised learning the agent is given the correct set of actions for performing a task, whereas reinforcement learning uses rewards and punishments as signals for positive and negative behavior. Reinforcement learning also differs from unsupervised learning in its goal. Unsupervised learning aims to find similarities and differences between data points, while reinforcement learning aims to find an action model that maximizes the agent's total cumulative reward. The figure below illustrates the action-reward feedback loop of a generic RL model.

A recurrent neural network (RNN) is a deep learning architecture used for a variety of complex tasks such as object classification and speech detection. RNNs are designed to handle a sequence of events that occur in succession, interpreting each event based on information from previous events. However, while they sound promising, traditional RNNs are rarely used for real-world scenarios because of the vanishing gradient problem: as more time steps are added, the gradients computed during backpropagation through time shrink, and information from early steps is lost. In practice, this restricts the long-term memory of a traditional RNN to only a few steps of a sequence at a time.
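The vanishing gradient effect described above can be illustrated numerically. The following is a minimal sketch, not part of the proposed design: it assumes a scalar RNN with the recurrence h_t = tanh(w * h_{t-1} + x_t), and the function name `gradient_through_time`, the weight `w = 0.5`, and the constant inputs are hypothetical values chosen only for illustration. It tracks how strongly the final hidden state still depends on the initial one as the sequence gets longer.

```python
import math


def gradient_through_time(w, inputs, h0=0.0):
    """Return |d h_t / d h_0| after each step of a scalar tanh RNN.

    Assumed recurrence (illustrative only): h_t = tanh(w * h_{t-1} + x_t).
    """
    h = h0
    grad = 1.0
    history = []
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
        history.append(abs(grad))
    return history


# Hypothetical setting: recurrent weight 0.5, fifty identical inputs.
grads = gradient_through_time(w=0.5, inputs=[0.1] * 50)
print(f"after  1 step : {grads[0]:.3e}")
print(f"after 10 steps: {grads[9]:.3e}")
print(f"after 50 steps: {grads[49]:.3e}")
```

Because every per-step factor has magnitude below 1 in this setting, the product shrinks geometrically with the number of steps, which is why early inputs contribute almost nothing to the weight updates.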
LSTMs are designed to overcome the vanishing gradient problem, which allows them to retain information for longer periods than traditional RNNs. LSTMs can maintain a more constant error signal, which lets them keep learning over many time steps as they backpropagate through time and layers. LSTMs use gated cells to store information outside the regular flow of the RNN. With these cells, the network can manipulate information in several ways, including storing information in the cells and reading from them. Each cell decides what to do with the information and carries out that decision by opening or closing its gates.

Combining the two yields an algorithm that adapts to its environment as it learns through reinforcement while retaining memory in the LSTM. This suits dynamic pricing, because the volatile price movement in the retail fresh produce market is caused by multiple factors, and the combined model could make good price suggestions for automation.

Design Methodology

Data processing requires feature scaling, for which either standardization or normalization can be used:

$$X_{std} = \frac{X - \mu_x}{\sigma_x}, \qquad X_{norm} = \frac{X - X_{min}}{X_{max} - X_{min}}$$

where $\mu_x$ represents the mean of vector $X$ and $\sigma_x$ represents the standard deviation of vector $X$. Furthermore, $X_{min}$ and $X_{max}$ refer to the minimum and maximum values of vector $X$, respectively. In the case of normalization, the numerator is never negative and the denominator is never smaller than the numerator, so the output is always a number between 0 and 1. Given that our methodology is based on LSTMs, which include sigmoid functions, we use normalization rather than standardization as the feature scaling method. This transformation can be done using MinMaxScaler from the scikit-learn Python library.

RNN process

An RNN translates the provided inputs into machine-readable vectors.
For example, if we use an RNN to classify a multi-word review as negative or positive, the RNN translates each word into a vector. The system then processes this sequence of vectors one by one, moving from the first vector to the next in sequential order. While processing, it passes information through the hidden state (memory) to the next step of the sequence.

RNN Information Flow

LSTMs have the same information flow as ordinary RNNs: data propagates forward through sequential steps. The difference between an ordinary RNN and an LSTM lies in the set of operations performed on the passed information and on the input value at each step. These operations let the LSTM keep or forget parts of the information flowing into that step. Information in an LSTM flows through its main components: the forget gate, the input gate, and the output gate, together with the cell state.

LSTM Information Flow
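The scaling and gate operations described above can be sketched end to end. This is a minimal illustration under stated assumptions, not the project's implementation: it uses a scalar LSTM cell (hidden size 1) with the standard gate equations, and the helper names `min_max_scale` and `lstm_step`, the weights in `params`, and the `prices` series are all hypothetical. The scaling is written by hand to keep the sketch dependency-free; in practice MinMaxScaler from scikit-learn would perform the same transformation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def min_max_scale(values):
    """Normalization: (x - min) / (max - min), giving values in [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant series has no range; map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell (illustrative, hidden size 1)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # cell state: keep part of the old, add part of the new
    h = o * math.tanh(c)     # hidden state passed on to the next step
    return h, c


# Hypothetical, untrained weights chosen only to make the sketch runnable.
params = {
    "wf": 0.5, "uf": 0.1, "bf": 1.0,
    "wi": 0.6, "ui": 0.2, "bi": 0.0,
    "wo": 0.4, "uo": 0.3, "bo": 0.0,
    "wg": 0.9, "ug": 0.5, "bg": 0.0,
}

# Hypothetical price series for one fresh-produce item.
prices = [2.10, 2.35, 2.20, 2.80, 2.60]
scaled = min_max_scale(prices)

h, c = 0.0, 0.0
for x in scaled:
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:.3f}  h={h:+.4f}  c={c:+.4f}")
```

The forget gate `f` scales how much of the previous cell state survives, the input gate `i` scales how much of the new candidate is written, and the output gate `o` controls how much of the cell state is exposed as the hidden state. A trained model would learn these weights rather than fix them by hand.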