CNNs & Bidirectional RNNs: Deep Learning Networks


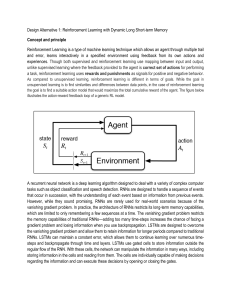

2. Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are a class of deep learning models designed for processing and analyzing visual data, particularly images. They have been widely successful in applications such as image recognition and object detection, and they are also used in natural language processing.

Key Concepts:

1. Objective of CNNs
   - Goal: CNNs aim to learn higher-order features in data through convolutions, making them well suited to image recognition and classification tasks.
   - Applications: They excel at identifying objects, faces, signs, and more in visual data. CNNs are also used in text analysis and sound analysis.
2. Biological Inspiration
   - Mimic Animal Vision: CNNs are inspired by the visual cortex in animals, which contains simple and complex cells.
3. Architecture Overview
   - 3D Structure: Neurons are organized in three dimensions (width, height, depth), matching the structure of image data.
   - Layer Types: Input layers, feature-extraction layers (convolution and pooling), and classification layers.
4. Convolutional Layers
   - Pattern Detection: Filters slide over the input to detect patterns in different parts of the data.
   - Parameter Sharing: The same filter weights are reused at every position of the input, which reduces the number of parameters to learn.
   - Activation Maps: Show where in the input a specific pattern was detected.
5. Pooling Layers
   - Downsampling: Reduce the spatial dimensions, which keeps the network manageable.
   - Prevent Overfitting: Help keep the network from becoming too specialized to the training data.
6. Fully Connected Layers
   - Generate Output: Compute the final output, providing class scores for recognition.
   - Connections: Each neuron connects to all neurons in the previous layer.
7. Batch Normalization
   - Speed up Training: Normalizes activations at each training step so they stay within a reasonable range.
   - Mean and Standard Deviation: Activations are rescaled using the mini-batch mean and standard deviation, which keeps internal values in a range that is easy to train on.
8. Hyperparameters
   - Filter Size: Determines how large the filters that slide over the input are.
   - Output Depth: Controls how many filters are used in each layer.
   - Stride: Determines how far the filters move at each step as they slide over the input.
   - Zero-padding: Adds zeros around the border of the input, which controls the spatial size of the output.

3. Bidirectional Recurrent Neural Networks (Bidirectional RNNs)

Introduction: Recurrent Neural Networks (RNNs) are foundational for sequence-related tasks, but traditional RNNs have a limitation: they are causal, meaning the state at a given time step depends only on past and present inputs. Bidirectional RNNs were introduced for scenarios where predicting the current output depends on future information, a requirement found in applications like speech and handwriting recognition. This section covers the concept, architecture, and applications of Bidirectional RNNs.

Need for Bidirectional RNNs: In many applications, predicting an output at a given time (e.g., a phoneme in speech recognition) may depend on future information, which traditional RNNs cannot use. Bidirectional RNNs become essential when dependencies in both the past and the future must be captured to disambiguate interpretations. For instance, in speech recognition, interpreting the current sound may require looking at the next few phonemes, and even the next few words, because of linguistic dependencies.

Applications and Success: Bidirectional RNNs have been successful in applications including handwriting recognition, speech recognition, and bioinformatics. Their ability to consider both past and future context makes them effective in tasks where understanding the current input requires a broader temporal context.

Extension to 2-Dimensional Input: Bidirectional RNNs can be extended to 2-dimensional inputs, such as images, by running RNNs in all four directions (up, down, left, right). This extension allows the model to capture local information while also considering long-range dependencies.
Compared to convolutional networks, RNNs on images are more computationally expensive, but they offer the advantage of long-range lateral interactions between features in the same feature map. The forward-propagation equations for such RNNs can be written in a form that shows a convolution computing the bottom-up input to each layer before the recurrent propagation across the feature map, which is the step that incorporates the lateral interactions.

In summary, Bidirectional RNNs address the limitations of traditional RNNs by considering both past and future information.
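
The bidirectional idea for 1-dimensional sequences can be illustrated with a minimal NumPy sketch. It assumes a plain tanh RNN cell, which the text above does not specify, and the function names (`rnn_pass`, `bidirectional_rnn`, `init_params`), the layer sizes, and the random weights are all hypothetical choices made for illustration. One RNN reads the sequence left to right, a second reads it right to left, and their hidden states are concatenated at each time step.

```python
import numpy as np

def rnn_pass(x, W_x, W_h, b, reverse=False):
    """Run a simple tanh RNN over x (shape [T, input_dim]).

    Returns the hidden state at every time step, shape [T, hidden_dim].
    With reverse=True the sequence is read from the last step to the first.
    """
    T = x.shape[0]
    hidden_dim = W_h.shape[0]
    h = np.zeros(hidden_dim)
    states = np.zeros((T, hidden_dim))
    steps = range(T - 1, -1, -1) if reverse else range(T)
    for t in steps:
        h = np.tanh(x[t] @ W_x + h @ W_h + b)
        states[t] = h  # stored at position t regardless of reading direction
    return states

def bidirectional_rnn(x, fwd_params, bwd_params):
    """Concatenate forward (past context) and backward (future context) states."""
    h_fwd = rnn_pass(x, *fwd_params)
    h_bwd = rnn_pass(x, *bwd_params, reverse=True)
    return np.concatenate([h_fwd, h_bwd], axis=1)

def init_params(rng, input_dim, hidden_dim):
    """Small random weights; illustrative only, not a trained model."""
    W_x = rng.normal(scale=0.1, size=(input_dim, hidden_dim))
    W_h = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
    b = np.zeros(hidden_dim)
    return W_x, W_h, b

rng = np.random.default_rng(0)
T, input_dim, hidden_dim = 5, 3, 4  # assumed toy sizes
x = rng.normal(size=(T, input_dim))
fwd = init_params(rng, input_dim, hidden_dim)
bwd = init_params(rng, input_dim, hidden_dim)

out = bidirectional_rnn(x, fwd, bwd)
print(out.shape)  # (5, 8): hidden_dim forward + hidden_dim backward per step
```

In this sketch, the first four columns of `out[t]` summarize inputs up to and including step `t`, while the last four summarize inputs from step `t` to the end of the sequence. A downstream output layer that reads `out[t]` therefore sees both past and future context, which a causal RNN cannot provide.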