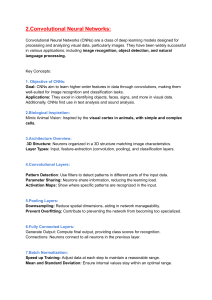

Multi-Task Transformer for Stock Market Trend Prediction KS by Kushal Shah This paper proposes a new method for predicting stock market trends using deep learning models, specifically transformers. The approach leverages a large amount of data through data paneling and uses a multi-task technique to speed up optimization and improve accuracy. Issues faced Indispensable part of a nation's economy Efficient market hypothesis suggests one cannot outperform the market and earn profits consistently in the long run Some theories also suggest that predicting stock price from past performance is not possible. Some use regression approaches but statistical models are unreliable. While maximum investing organizations use classification methods as they are concerned more dabout the trend. Different Models for Stock Market Prediction Exponential Smoothing Model It is a statistical model used to predict future stock prices based on past data where recent observations have higher weight than older ones. Neural Networks ARIMA A commonly used model for time-series prediction, using a combination of autoregression, integrated, and moving average components. Neural networks have been widely used for stock market prediction due to their ability to learn complex nonlinear relationships between input variables and output variables. Deep Learning for Stock Market Prediction Different types of Artificial Neural Networks are used for stock market prediction such as ANN , CNN and RNN. RNNs work better with time series data so they are therefore used for stock forecasting and managing sequence dependencies. Due to vanishing and exploding gradient descent LSTM and GRU networks (Also a type of RNN) are preferred over classical RNNs Recurrent Neural Network (RNN) From the structure, it is evident that the input provided to the RNN is taken one by one, and each output is then passed to the next cell. This helps to keep long-term dependencies, making RNNs a preferred choice over Artificial Neural Networks (ANNs) and Convolutional Neural Networks (CNNs) for tasks such as stock forecasting, machine translation, sentiment analysis, and more. The primary reason for this preference is that in such tasks, previous context at every input is very important. The ability of RNNs to learn from sequential data by taking into account previous inputs helps in capturing long-term dependencies and patterns in the data. This makes RNNs a powerful tool for timeseries analysis and forecasting. LSTM (Long Short-Term Memory) LSTM (Long Short-Term Memory) is a type of Recurrent Neural Network (RNN) that is designed to solve the problem of vanishing and exploding gradients, which are common issues in traditional RNNs. The vanishing gradient problem occurs when the gradients of the loss function with respect to the weights become very small during backpropagation, making it difficult for the network to update the weights properly. LSTM solves this problem by introducing a gating mechanism that controls the flow of information in the network. Limitations of LSTMs 1. Sequential processing: LSTMs process the input sequence sequentially, which means that they cannot take advantage of parallel processing like Transformers 2. Long-term dependencies: While LSTMs are designed to capture long-term dependencies in the input sequence, they can still have difficulty with capturing dependencies that are spread out over very long distances. 3. Limited context: LSTMs have a limited context window, which means that they can only process a fixed number of elements at a time. Transformers In the multi-headed attention layer, the input sequence is transformed into a set of queries, keys, and values, which are then used to compute multiple attention heads. Each attention head is essentially a scaled dot-product attention mechanism, which computes the weighted sum of the values, where the attention weights are computed based on the similarity between the queries and keys. After computing the attention heads, they are concatenated and passed through a linear projection layer, which produces the final output of the multi-headed attention layer. This output is then fed to the feedforward layer, which applies a non-linear transformation to the input before passing it to the next layer of the network. The multi-headed attention layer in the decoder of a Transformer architecture is a critical component for generating high-quality output sequences from input sequences. By attending to both the encoder output and the previously generated tokens in the decoder, the model is able to capture complex relationships between the input and output sequences, and generate more accurate and coherent output sequences. A unified framework for both regression and classification tasks for the stock market is proposed. The framework uses a transformer model, where its output is passed through fully connected layers for regression. The encoder and decoder outputs of the transformer are concatenated and passed through fully connected, batch normalization and softmax layers for trend prediction. The two tasks work in tandem, limiting the search space of each other and resulting in a simultaneous decrease in their respective losses. This approach leads to improved performance in both tasks. How Transformers Improve Stock Market Predictions 1 Multi-Task Learning Architecture Transformers combine multiple tasks centered on stock market prediction in the same architecture, harnessing a broad range of data holdings and potentially leading to more significant accuracy. Adapting Attention Mechanism 2 Transformers adapt their attention to inputs and thus capture more nuances than traditional machine learning models. 3 Superior Performance Transformers outperform traditional statistical models like ARIMA, LSTM, and Random Forest in stock market prediction accuracy, according to a variety of studies. THANK YOU