Assidere Necesse Est

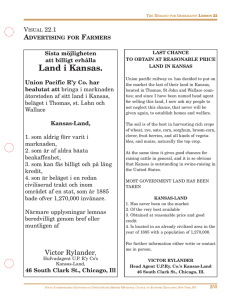

advertisement