2 .

advertisement

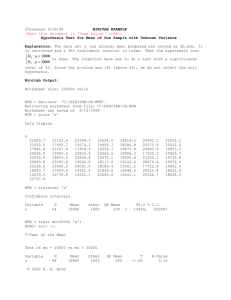

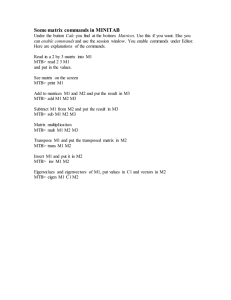

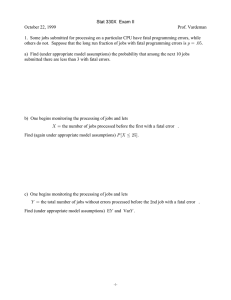

252y0731 4/25/07 (Page layout view!)

ECO252 QBA2 THIRD HOUR EXAM April 16, 2007
Name KEY Student number_____________

I. (8 points) Do all the following (2 points each unless noted otherwise). Make Diagrams! Show your work! $x \sim N(13, 7.2)$

Material in italics below is a description of the diagrams you were asked to make or a general explanation and will not be part of your written solution. The $x$ and $z$ diagrams should look similar. If you know what you are doing, you only need one diagram for each problem.

General comment - I can't give you much credit for an answer with a negative probability or a probability above one, because there is no such thing!!! In all these problems we must find values of $z$ corresponding to our values of $x$ before we do anything else. A diagram for $z$ will help us because, if areas on both sides of zero are shaded, we will add probabilities, while, if an area on one side of zero is shaded and it does not begin at zero, we will subtract probabilities. Note: All the graphs shown here are missing a vertical line. They are also to scale. A hand drawn graph should exaggerate the distances of the points from the mean. Note also that, because of the rounding error necessary to use a conventional normal table, the results for $x$ will disagree with results for $z$, especially for small values of $z$.

1. $P(x \le 0) = P\left(z \le \frac{0 - 13}{7.2}\right) = P(z \le -1.81) = P(z \le 0) - P(-1.81 \le z \le 0) = .5 - .4649 = .0351$

This is how we usually find a p-value for a left-sided test when the distribution of the test ratio is Normal. For $z$ make a diagram. Draw a Normal curve with a mean at 0. Indicate the mean by a vertical line! Shade the entire area below -1.81. Because this is entirely on the left side of zero, we must subtract the area between -1.81 and zero from the area below zero. If you wish, make a completely separate diagram for $x$. Draw a Normal curve with a mean at 13. Indicate the mean by a vertical line! Shade the area below 0.
This area is completely to the left of the mean (13), so we subtract the smaller area between zero and the mean from the larger area below the mean.

2. $P(6 \le x \le 20) = P\left(\frac{6 - 13}{7.2} \le z \le \frac{20 - 13}{7.2}\right) = P(-0.97 \le z \le 0.97) = 2P(0 \le z \le 0.97) = 2(.3340) = .6680$

For $z$ make a diagram. Draw a Normal curve with a mean at 0. Indicate the mean by a vertical line! Shade the entire area between -0.97 and 0.97. Because this is on both sides of zero, we must add the area between -0.97 and zero to the area from zero to 0.97. If you wish, make a completely separate diagram for $x$. Draw a Normal curve with a mean at 13. Indicate the mean by a vertical line! Shade the area from 6 to 20. This area is on both sides of the mean (13), so we add the area between 6 and the mean to the area between the mean and 20.

3. $P(-1 \le x \le 12) = P\left(\frac{-1 - 13}{7.2} \le z \le \frac{12 - 13}{7.2}\right) = P(-1.94 \le z \le -0.14) = P(-1.94 \le z \le 0) - P(-0.14 \le z \le 0) = .4738 - .0557 = .4181$

For $z$ make a diagram. Draw a Normal curve with a mean at 0. Indicate the mean by a vertical line! Shade the area between -1.94 and -0.14. Because this is entirely on the left side of zero, we must subtract the area between -0.14 and zero from the larger area between -1.94 and zero. If you wish, make a completely separate diagram for $x$. Draw a Normal curve with a mean at 13. Indicate the mean by a vertical line! Shade the area between -1 and 12. This area is completely to the left of the mean (13), so we subtract the smaller area between 12 and the mean from the larger area between -1 and the mean.

4. $x_{.145}$ (Find $z_{.145}$ first)

Solution: $x_{.145}$ is, by definition, the value of $x$ with a probability of 14.5% above it. Make a diagram. The diagram for $z$ will show an area with a probability of 85.5% below $z_{.145}$. It is split by a vertical line at zero into two areas. The lower one has a probability of 50% and the upper one a probability of 85.5% - 50% = 35.5%.
The upper tail of the distribution above $z_{.145}$ has a probability of 14.5%, so that the entire area above 0 adds to 35.5% + 14.5% = 50%. From the diagram, we want one point $z_{.145}$ so that $P(z \le z_{.145}) = .855$ or $P(0 \le z \le z_{.145}) = .3550$. If we try to find this point on the Normal table, the closest we can come is $P(0 \le z \le 1.06) = .3554$. So we will use $z_{.145} = 1.06$, though 1.05 would be acceptable. Since $x \sim N(13, 7.2)$, the diagram for $x$ would show 85.5% probability split in two regions on either side of 13 with probabilities of 50% below 13 and 35.5% above 13 and below $x_{.145}$, and with 14.5% above $x_{.145}$. $z_{.145} = 1.06$, so the value of $x$ can then be written $x_{.145} = \mu + z_{.145}\sigma = 13 + 1.06(7.2) = 13 + 7.63 = 20.63$.

To check this: $P(x \le 20.63) = P\left(z \le \frac{20.63 - 13}{7.2}\right) = P(z \le 1.06) = P(z \le 0) + P(0 \le z \le 1.06) = .5 + .3554 = .8554 \approx 85.5\%$.

Or $P(x \ge 20.63) = P\left(z \ge \frac{20.63 - 13}{7.2}\right) = P(z \ge 1.06) = P(z \ge 0) - P(0 \le z \le 1.06) = .5 - .3554 = .1446 \approx 14.5\%$.

II. (22+ points) Do all the following (2 points each unless noted otherwise). Do not answer a question 'yes' or 'no' without giving reasons. Show your work when appropriate. Use a 5% significance level except where indicated otherwise.

1. Turn in your computer problems 2 and 3 marked as requested in the Take-home. (5 points, 2 point penalty for not doing.)

2. (Dummeldinger) As part of a study to investigate the effect of helmet design on football injuries, head width measurements were taken for 30 subjects randomly selected from each of 3 groups (high school football players, college football players and college students who do not play football, so that there are a total of 90 observations) with the object of comparing the typical head widths of the three groups. Before the data was compared, researchers ran two tests on it. They first ran a Lilliefors test and found that the null hypothesis was not rejected. They then ran a Bartlett test and found that the null hypothesis was not rejected. On the basis of these results they should use the following method.
a) The Kruskal-Wallis test.
b) *One-way ANOVA
c) The Friedman test
d) Two-Way ANOVA
e) Chi-square test [7]
Explanation: The null hypothesis of the Lilliefors test is that the distribution is Normal. The null hypothesis of the Bartlett test is equal variances. These two results are the requirements of a one-way ANOVA to compare the means of the three groups.

3. The coefficient of determination
a) Is the square of the sample correlation
b) Cannot be negative
c) Gives the fraction of the variation in the dependent variable explained by the independent variable.
d) *All of the above.
e) None of the above.
Explanation: The coefficient of determination $R^2 = \frac{SSR}{SST} = \frac{S_{xy}^2}{SS_x SS_y}$ is the ratio of two sums of squares, which must be positive quantities. The correlation is the positive or negative square root of $\frac{S_{xy}^2}{SS_x SS_y}$.

4. The Kruskal-Wallis test is an extension of which of the following for two independent samples?
a) Pooled-variance t test
b) Paired-sample t test
c) *(Mann-Whitney-) Wilcoxon rank sum test
d) Wilcoxon signed ranks test
e) A test for comparison of means for samples with unequal variances
f) None of the above.
Explanation: The Wilcoxon rank sum test ranks all the numbers in the samples, just like the Kruskal-Wallis test. Both are tests for comparison of medians.

5. One-way ANOVA is an extension of which of the following for two independent samples?
a) *Pooled-variance t test
b) Paired-sample t test
c) (Mann-Whitney-) Wilcoxon rank sum test
d) Wilcoxon signed ranks test
e) A test for comparison of means for samples with unequal variances
f) None of the above. [13]
Explanation: The variances are pooled in the pooled-variance t test because equal variances is an assumption of ANOVA. Both are tests for comparison of means.

Exhibit 1: As part of an evaluation program, a sporting goods retailer wanted to compare the downhill coasting speeds of 4 brands of bicycles. She took 3 of each brand and determined their maximum downhill speeds.
The results are presented in miles per hour in the table below. She assumes that the Normal distribution is not applicable and uses a rank test. She treats the columns as independent random samples.

Barth   Tornado   Reiser   Shaw
43      37        41       43
46      38        45       45
43      39        42       46

6. What are the null and alternative hypotheses?
Solution: $H_0$: All medians equal. $H_1$: Not all medians equal.

7. Construct a table of ranks for the data in Exhibit 1.

       Barth         Tornado       Reiser        Shaw
       x1    r1      x2    r2      x3    r3      x4    r4
       43    7.0     37    1.0     41    4.0     43    7.0
       46   11.5     38    2.0     45    9.5     45    9.5
       43    7.0     39    3.0     42    5.0     46   11.5
Sum         25.5           6.0          18.5          28.0

8. Complete the test using a 5% significance level (3) [20]
Now, compute the Kruskal-Wallis statistic
$H = \frac{12}{n(n+1)} \sum_i \frac{SR_i^2}{n_i} - 3(n+1) = \frac{12}{12(13)}\left(\frac{25.5^2}{3} + \frac{6.0^2}{3} + \frac{18.5^2}{3} + \frac{28.0^2}{3}\right) - 3(13) = \frac{1}{13}(604.1667) - 39 = 7.4744$.
This problem is too large for the K-W table, so we compare $H$ to $\chi^{2(3)}_{.05} = 7.8147$. Since the computed statistic is below the table statistic, do not reject $H_0$.

Exhibit 2: (Webster) A placement director is trying to find out if a student's GPA can influence how many job offers the student receives. Data is assembled and run on Minitab, with the results below.

The regression equation is
Jobs = - 0.248 + 1.27 GPA

Predictor      Coef   SE Coef       T       P
Constant    -0.2481    0.7591   -0.33   0.752
GPA          1.2716    0.2859    4.45   0.002

S = 1.03225   R-Sq = 71.2%   R-Sq(adj) = 67.6%

Analysis of Variance
Source           DF       SS       MS       F       P
Regression        1   21.076   21.076   19.78   0.002
Residual Error    8    8.524    1.066
Total             9   29.600

9. In Exhibit 2, how many job offers would you predict for someone with a GPA of 3?
Solution: Jobs = - 0.248 + 1.27(3) = 3.562

10. In Exhibit 2, what percent of the variation in job offers is explained by variation of GPA? (1)
Solution: 71.2%

11. For the regression in Exhibit 2 to give us 'good' estimates of the coefficients,
a) The variances of 'Jobs' and 'GPA' must be equal.
b)* The amount of variation of 'Jobs' around the regression line must be independent of 'GPA.'
c) 'Jobs' must be independent of 'GPA.'
d) The p-value for 'Constant' must be higher than the p-value for 'GPA.'
e) All of the above must be true.
f) None of the above need to be true. [25]

12. Assuming that the Total of 29.600 in the ANOVA in Exhibit 2 is correct, but that you cannot read any other part of the printout, what are the largest and smallest values that the Regression Sum of Squares could take? [27]
Solution: 0 to 29.600.

13. A corporation wishes to test the effectiveness of 3 different designs of pollution-control devices. It installs each type of device in each of its 6 factories for one week during each month of the year, for a total of 216 measurements. Pollution in the smoke is measured and an ANOVA is begun. Let factor A be months. Factor B will be factories and Factor C will be the devices. Fill in the following table. Note that we have too little data to compute interaction ABC. The Total sum of squares is 106. SSA is 44, SSB is 11, SSC is 21, SSAB is 9, SSAC is 7 and SSBC is 3. Use a significance level of 1%. Do we have enough information to decide which of the devices is best? (Do not answer this without showing your work!) (6) [33]

Row   Factor   SS   DF   MS   F   F01   Significant?
1     A
2     B
3     C
4     AB
5     AC
6     BC
7     Within
8     Total

Solution:

Row   Factor    SS    DF        MS         F    F05   Sig. at 5%    F01   Sig. at 1%
1     A         44    11    4.0000    40.000   1.88   s            2.41   s
2     B         11     5    2.2000    22.000   2.30   s            3.19   s
3     C         21     2   10.5000   105.000   3.08   s            4.80   s
4     AB         9    55    0.1636     1.636   1.47   s            1.76   ns
5     AC         7    22    0.3182     3.182   1.64   s            2.00   s
6     BC         3    10    0.3000     3.000   1.96   s            2.47   s
7     Within    11   110    0.1000
8     Total    106   215

The SS column must add, so the missing number is 11. As is implied by the diagram and your experience with 2-way ANOVA, there is no ABC interaction. 12 months means 11 A df, 6 plants means 5 B df, 3 devices means 2 C df. AB df are 11(5) = 55, AC df are 11(2) = 22, BC df are 5(2) = 10. Total df is 12(6)3 - 1 = 216 - 1 = 215. Within df can be gotten by making sure that the DF column adds or by noting 11(5)2 = 110. MS is SS divided by DF. F is MS divided by MSW = 0.1000. All six F ratios are significant at the 5% level. At the 1% level every F except the one for AB (1.636 < 1.76) still exceeds its table value, so the Factor C (device) means do differ significantly. The table alone, however, contains no device means, so it does not tell us which device is best.

Exhibit 3: A plant manager is concerned about the rising blood pressure of employees and believes that it is related to the level of satisfaction that they get out of their jobs. A random sample of 10 employees rates their satisfaction on a 1 to 60 scale and their blood pressure is tested.

Row   Satisfaction   BloodPr
1     34             124
2     23             128
3     19             157
4     43             133
5     56             116
6     47             125
7     32             147
8     16             167
9     55             110
10    25             156

We also know that Sum of Satisfaction = 350, Sum of BloodPr = 1363, Sum of squares (uncorrected) of Satisfaction = 14170 and Sum of squares (uncorrected) of BloodPr = 189113. It is also true that the sum of the first eight numbers of the product of Satisfaction and Blood is 35609.

14. a) Finish the computation of $\sum xy$ (1).
The sum of the first eight numbers of the product of Satisfaction and Blood is 35609. So $\sum xy$ = 35609 + 110(55) + 156(25) = 35609 + 6050 + 3900 = 45559.

b) Try to do a regression explaining blood pressure as a result of job satisfaction (4). Please do not recompute things that I have already done for you.
We now have $n = 10$, $\sum xy = 45559$, $\sum y = 1363$, $\sum x = 350$, $\sum x^2 = 14170$ and $\sum y^2 = 189113$. We can begin by computing $\bar{x} = \frac{350}{10} = 35$ and $\bar{y} = \frac{1363}{10} = 136.3$. Now it's time for spare parts calculations:
$SS_x = \sum x^2 - n\bar{x}^2 = 14170 - 10(35)^2 = 1920$,
$SST = SS_y = \sum y^2 - n\bar{y}^2 = 189113 - 10(136.3)^2 = 3336.10$,
$S_{xy} = \sum xy - n\bar{x}\bar{y} = 45559 - 10(35)(136.3) = -2146$.
$b_1 = \frac{S_{xy}}{SS_x} = \frac{-2146}{1920} = -1.1177$ means $b_0 = \bar{Y} - b_1\bar{X} = 136.3 - (-1.1177)(35) = 175.4195$.
So our regression equation is $\hat{Y} = 175.4195 - 1.1177X$ or $Y = 175.4195 - 1.1177X + e$.

c) Find the slope and test its significance (4).
$SSR = b_1 S_{xy} = (-1.1177)(-2146) = 2398.5842$, $df = n - 2 = 8$.
$s_e^2 = \frac{SSE}{n-2} = \frac{SST - SSR}{n-2} = \frac{SS_y - b_1 S_{xy}}{n-2} = \frac{3336.10 - 2398.5842}{8} = 117.1895$, so $s_e = \sqrt{117.1895} = 10.8254$.
$s_{b_1}^2 = s_e^2\left(\frac{1}{SS_x}\right) = \frac{117.1895}{1920} = 0.06104$. So $s_{b_1} = \sqrt{0.06104} = 0.24706$.
To test $H_0: \beta_1 = 0$ against $H_1: \beta_1 \ne 0$ use $t = \frac{b_1 - 0}{s_{b_1}} = \frac{-1.117}{0.24706} = -4.5211$. We compare this with $t^{(8)}_{.05} = 1.860$. Since the absolute value of our computed t is larger than the table t, we reject the null hypothesis. (The two-sided 5% value, $t^{(8)}_{.025} = 2.306$, leads to the same conclusion.)

d) Do the prediction or confidence interval as appropriate for the blood pressure of the highly satisfied employee (satisfaction level is 57) that you hired last month. (3) [45]
Recall that $\bar{x} = 35$, $SS_x = 1920.00$, $s_e^2 = 117.1895$ and $\hat{Y} = 175.4195 - 1.1177X = 175.4195 - 1.1177(57) = 111.71$.
The prediction interval is $Y_0 = \hat{Y}_0 \pm t\, s_Y$, where
$s_Y^2 = s_e^2\left(\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{SS_x} + 1\right) = 117.1895\left(\frac{1}{10} + \frac{(57 - 35)^2}{1920} + 1\right) = 117.1895\left(0.1 + \frac{484}{1920} + 1\right) = 117.1895(1.35208) = 158.450$.
This implies that $s_Y = \sqrt{158.450} = 12.5876$. Now $t^{(8)}_{.05} = 1.860$, and we put the whole mess together:
$Y_0 = \hat{Y}_0 \pm t\, s_Y = 111.71 \pm 1.860(12.5876) = 111.71 \pm 23.41$.
(With the two-sided 95% value $t^{(8)}_{.025} = 2.306$ the half-width is 2.306(12.5876) = 29.03.)
A prediction interval is appropriate for a one-shot situation like this one. A confidence interval is an interval for the average response.

ECO252 QBA2 THIRD EXAM April 16, 2007
TAKE HOME SECTION
Name: _________________________
Student Number: _________________________
Class days and time : _________________________

Please Note: Computer problems 2 and 3 should be turned in with the exam (2). In problem 2, the 2 way ANOVA table should be checked. The three F tests should be done with a 5% significance level and you should note whether there was (i) a significant difference between drivers, (ii) a significant difference between cars and (iii) significant interaction. In problem 3, you should show on your third graph where the regression line is. Check what your text says about normal probability plots and analyze the plot you did. Explain the results of the t and F tests using a 5% significance level. (2)

III Do the following.
(20+ points) Note: Look at 252thngs (252thngs) on the syllabus supplement part of the website before you start (and before you take exams). Show your work! State $H_0$ and $H_1$ where appropriate. You have not done a hypothesis test unless you have stated your hypotheses, run the numbers and stated your conclusion. (Use a 95% confidence level unless another level is specified.) Answers without reasons or accompanying calculations usually are not acceptable. Neatness and clarity of explanation are expected. This must be turned in when you take the in-class exam. Note that from now on neatness means paper neatly trimmed on the left side if it has been torn, multiple pages stapled and paper written on only one side.

To personalize these data, let the last digit of your student number be $a$.

1) (Ken Black) A national travel organization wanted to determine whether there is a significant difference between the cost of premium gas in various regions of the country. The following data was gathered in July 2006. Because the brand of gas may confuse the results, data is blocked by brand.

Data Display
Row   Brand   City 1   City 2   City 3   City 4   City 5
1     A       3.47     3.40     3.38     3.32     3.50
2     B       3.43     3.41     3.42     3.35     3.44
3     C       3.44     3.41     3.43     3.36     3.45
4     D       3.46     3.45     3.40     3.30     3.45
5     E       3.46     3.40     3.39     3.39     3.48
6     F       3.44     3.43     3.42     3.39     3.49

Mark your problem 'VERSION $a$.' If $a$ is zero, use the original numbers. If $a$ is 1 through 4, add $\frac{a}{100}$ to the city 4 column. If $a$ is 5 through 9, add $0.04 - \frac{a}{100}$ to the city 5 column. Example: Seymour Butz' number is 090909, so he adds $0.04 - \frac{9}{100} = -.05$ to column 5 (subtracts .05) and gets the table below.

Version 9
Row   Brand   City 1   City 2   City 3   City 4   City 5
1     A       3.47     3.40     3.38     3.32     3.45
2     B       3.43     3.41     3.42     3.35     3.39
3     C       3.44     3.41     3.43     3.36     3.40
4     D       3.46     3.45     3.40     3.30     3.40
5     E       3.46     3.40     3.39     3.39     3.43
6     F       3.44     3.43     3.42     3.39     3.44

a) Do a 2-way ANOVA on these data and explain what hypotheses you test and what the conclusions are. Show your work.
(6)
b) Using your results from a) present two different confidence intervals for the difference between numbers of defects for the best and worst city and for the defects from the best and second best brands. Explain which of the intervals show a significant difference and why. (3)
c) What other method could we use on these data to see if city makes a difference while allowing for cross-classification? Under what circumstances would we use it? Try it and tell what it tests and what it shows. (3)
d) (Extra credit) Check your results on the computer. The Minitab setup for both 2-way ANOVA and the corresponding non-parametric method is the same as the extra credit in the third graded assignment or the third computer problem. You may want to use and compare the Statistics pull-down menu and ANOVA or Nonparametrics to start with. Explain your results. (4) [12]

2) A local official wants to know how land value affects home price. The official collects the following data. 'Home price' is the dependent variable and 'Land Value' is the independent variable. Both are in thousands of dollars. If you don't know what dependent and independent variables are, stop work until you find out.

Row   HomePr   LandVal
1     67       7.0
2     63       6.9
3     60       5.5
4     54       3.7
5     58       5.9
6     36       3.8
7     76       8.9
8     87       9.6
9     89       9.9
10    92       10.0

To personalize the data let $g$ be the second to last digit in your student number. Mark your problem 'VERSION $g$.' Subtract $g$ from 92, which is the last home price.
a) Compute the regression equation $\hat{Y} = b_0 + b_1 x$ to predict the home price on the basis of land value. (3) You may check your results on the computer, but let me see real or simulated hand calculations.
b) Compute $R^2$. (2)
c) Compute $s_e$. (2)
d) Compute $s_{b_0}$ and do a significance test on $b_0$. (2)
e) Use your equation to predict the price of the average home that is on land worth $9000. Make this into a 95% interval. (3)
f) Make a graph of the data.
Show the trend line and the data points clearly. If you are not willing to do this neatly and accurately, don't bother. (2) [26]

3) A sales manager is trying to find out what method of payment works best. Salespersons are randomly assigned to payment by commission, salary or bonus. Data for units sold in a week is below.

Row   Commission   Salary   Bonus
1     25           25       28
2     35           25       41
3     20           22       21
4     30           20       31
5     25           25       26
6     30           23       26
7     26           22       46
8     17           25       32
9     31           20       27
10    30           26       35
11                 24       23
12                          22
13                          26

To personalize the data let $g$ be the second to last digit in your student number. Subtract $2g$ from 46 in the last column. Mark your problem 'VERSION $g$.' Example: Payme Well's student number is 000090, so she subtracts 18 and gets the following numbers.

Version 9
Row   Commission   Salary   Bonus
1     25           25       28
2     35           25       41
3     20           22       21
4     30           20       31
5     25           25       26
6     30           23       26
7     26           22       28
8     17           25       32
9     31           20       27
10    30           26       35
11                 24       23
12                          22
13                          26

a) State your null hypothesis and test it by doing a 1-way ANOVA on these data and explain whether the test tells us that payment method matters or not. (4)
b) Using your results from a) present individual and Tukey intervals for the difference between earnings for all three possible contrasts. Explain (i) under what circumstances you would use each interval and (ii) whether the intervals show a significant difference. (2)
c) What other method could we use on these data to see if payment method makes a difference? Under what circumstances would we use it? Try it and tell what it tests and what it shows. (3) [37]
d) (Extra Credit) Do a Levene test on these data and explain what it tests and shows. (4)
f) (Extra credit) Check your results on the computer. The Minitab setup for 1-way ANOVA can either be unstacked (in columns) or stacked (one column for data and one for column name). There is a Tukey option. The corresponding non-parametric methods require the stacked presentation.
You may want to use and compare the Statistics pull-down menu and ANOVA or Nonparametrics to start with. You may also want to try the Mood median test and the test for equal variances which are on the menu categories above. Even better, do a normality test on your columns. Use the Statistics pull-down menu to find the normality tests. The Kolmogorov-Smirnov option is actually Lilliefors. The ANOVA menu can check for equality of variances. In light of these tests was the 1-way ANOVA appropriate? You can get descriptions of unfamiliar tests by using the Help menu and the alphabetic command list or the Stat guide. (Up to 7) [12]

A 5% significance level is assumed throughout this section.

1) (Ken Black) A national travel organization wanted to determine whether there is a significant difference between the cost of premium gas in various regions of the country. The following data was gathered in July 2006. Because the brand of gas may confuse the results, data is blocked by brand.

a) Do a 2-way ANOVA on these data and explain what hypotheses you test and what the conclusions are. Show your work. (6)

An example of 2-way ANOVA with one measurement per cell is in 252anovaex3. Note that all three solutions below are essentially identical. To save space the row and column giving column and row counts is omitted. In row 1 the mean price for brand A was $\frac{17.07}{5} = 3.414$. The sum of squares for the first row is $3.47^2 + 3.40^2 + 3.38^2 + 3.32^2 + 3.50^2 = 58.2977$. The mean price squared was $3.414^2 = 11.6554$. The number at the bottom of the mean column and on the right of the mean row, 3.41867, is the overall mean, which can be found by averaging over the row or column or over the 30 original numbers. In every case we seem to reject the similarity of city means but not the similarity of brand means.
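The spare-parts arithmetic described above can be cross-checked with a short script. What follows is only a sketch in plain Python, not part of the original key: the function name `two_way_anova` and the dictionary keys are hypothetical, and the data are the Version 0 prices. It works at full precision, so its sums of squares agree with the Minitab printout for Version 0 rather than with the rounded hand table.

```python
# Two-way ANOVA with one observation per cell (Version 0 prices).
# Rows are brands A-F, columns are City 1-5, as in the exam table.
prices = [
    [3.47, 3.40, 3.38, 3.32, 3.50],  # A
    [3.43, 3.41, 3.42, 3.35, 3.44],  # B
    [3.44, 3.41, 3.43, 3.36, 3.45],  # C
    [3.46, 3.45, 3.40, 3.30, 3.45],  # D
    [3.46, 3.40, 3.39, 3.39, 3.48],  # E
    [3.44, 3.43, 3.42, 3.39, 3.49],  # F
]


def two_way_anova(table):
    """Hypothetical helper: sums of squares, MSW and F ratios, no replication."""
    r, c = len(table), len(table[0])
    n = r * c
    grand = sum(map(sum, table)) / n
    row_means = [sum(row) / c for row in table]
    col_means = [sum(row[j] for row in table) / r for j in range(c)]
    sst = sum((x - grand) ** 2 for row in table for x in row)
    ssr = c * sum((m - grand) ** 2 for m in row_means)   # brands
    ssc = r * sum((m - grand) ** 2 for m in col_means)   # cities
    ssw = sst - ssr - ssc                                 # residual ("Within")
    msw = ssw / ((r - 1) * (c - 1))
    return {
        "SST": sst, "SSR": ssr, "SSC": ssc, "SSW": ssw, "MSW": msw,
        "F_rows": (ssr / (r - 1)) / msw,
        "F_cols": (ssc / (c - 1)) / msw,
    }


anova = two_way_anova(prices)
for name, value in anova.items():
    print(f"{name:7s}{value:.7f}")
```

Comparing `F_rows` with the table value F(5,20) = 2.71 and `F_cols` with F(4,20) = 2.87 gives the same decisions as the hand solution: brand means are not shown to differ, city means are.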
Solution: Version 0

Row      Brand   City 1    City 2    City 3    City 4    City 5    Sum      SS        Mean      Meansq
1        A       3.47      3.40      3.38      3.32      3.50      17.07    58.2977   3.414     11.6554
2        B       3.43      3.41      3.42      3.35      3.44      17.05    58.1455   3.410     11.6281
3        C       3.44      3.41      3.43      3.36      3.45      17.09    58.4187   3.418     11.6827
4        D       3.46      3.45      3.40      3.30      3.45      17.06    58.2266   3.412     11.6417
5        E       3.46      3.40      3.39      3.39      3.48      17.12    58.6262   3.424     11.7238
6        F       3.44      3.43      3.42      3.39      3.49      17.17    58.9671   3.434     11.7924
Sum              20.70     20.50     20.44     20.11     20.81     102.56   350.682   3.41867   70.1241
SS               71.4162   70.0436   69.6342   67.4087   72.1791   350.682
Mean             3.45000   3.41667   3.40667   3.35167   3.46833   3.41867
Meansq           11.9025   11.6736   11.6054   11.2337   12.0293   58.4445

$SST = \sum x^2 - n\bar{x}^2 = 350.682 - 30(3.41867)^2 = 350.682 - 350.6191 = 0.0629$
$SSR = C\sum \bar{x}_{i.}^2 - n\bar{x}^2 = 5(70.1241) - 30(3.41867)^2 = 350.6205 - 350.6191 = 0.0014$
$SSC = R\sum \bar{x}_{.j}^2 - n\bar{x}^2 = 6(58.4445) - 30(3.41867)^2 = 350.6667 - 350.6191 = 0.0479$

Source    SS       DF   MS         F          F.05              H0
Rows      0.0014    5   0.00028     0.41 ns   F(5,20) = 2.71    Row means equal
Columns   0.0479    4   0.011975   17.61 s    F(4,20) = 2.87    Column means equal
Within    0.0136   20   0.00068
Total     0.0629   29

$s = \sqrt{MSW} = .0261$

Solution: Version 4

Row      Brand   City 1    City 2    City 3    City 4    City 5    Sum      SS        Mean      Meansq
1        A       3.47      3.40      3.38      3.36      3.50      17.11    58.5649   3.422     11.7101
2        B       3.43      3.41      3.42      3.39      3.44      17.09    58.4151   3.418     11.6827
3        C       3.44      3.41      3.43      3.40      3.45      17.13    58.6891   3.426     11.7375
4        D       3.46      3.45      3.40      3.34      3.45      17.10    58.4922   3.420     11.6964
5        E       3.46      3.40      3.39      3.43      3.48      17.16    58.8990   3.432     11.7786
6        F       3.44      3.43      3.42      3.43      3.49      17.21    59.2399   3.442     11.8474
Sum              20.70     20.50     20.44     20.35     20.81     102.8    352.300   3.42667   70.4527
SS               71.4162   70.0436   69.6342   69.0271   72.1791   352.300
Mean             3.45000   3.41667   3.40667   3.39167   3.46833   3.42667
Meansq           11.9025   11.6736   11.6054   11.5034   12.0293   58.7142

$SST = \sum x^2 - n\bar{x}^2 = 352.300 - 30(3.42667)^2 = 352.300 - 352.2620 = 0.0380$
$SSR = C\sum \bar{x}_{i.}^2 - n\bar{x}^2 = 5(70.4527) - 30(3.42667)^2 = 352.2627 - 352.2620 = 0.0007$
$SSC = R\sum \bar{x}_{.j}^2 - n\bar{x}^2 = 6(58.7142) - 30(3.42667)^2 = 352.2852 - 352.2620 = 0.0232$

Source    SS       DF   MS        F          F.05              H0
Rows      0.0007    5   0.00014   0.197 ns   F(5,20) = 2.71    Row means equal
Columns   0.0232    4   0.0058    8.169 s    F(4,20) = 2.87    Column means equal
Within    0.0141   20   0.00071
Total     0.0380   29

$s = \sqrt{MSW} = .0266$

Solution: Version 9

Row      Brand   City 1    City 2    City 3    City 4    City 5    Sum      SS        Mean      Meansq
1        A       3.47      3.40      3.38      3.32      3.45      17.02    57.9502   3.404     11.5872
2        B       3.43      3.41      3.42      3.35      3.39      17.00    57.8040   3.400     11.5600
3        C       3.44      3.41      3.43      3.36      3.40      17.04    58.0762   3.408     11.6145
4        D       3.46      3.45      3.40      3.30      3.40      17.01    57.8841   3.402     11.5736
5        E       3.46      3.40      3.39      3.39      3.43      17.07    58.2807   3.414     11.6554
6        F       3.44      3.43      3.42      3.39      3.44      17.12    58.6206   3.424     11.7238
Sum              20.70     20.50     20.44     20.11     20.51     102.26   348.616   3.40867   69.7145
SS               71.4162   70.0436   69.6342   67.4087   70.1131   348.616
Mean             3.45000   3.41667   3.40667   3.35167   3.41833   3.40867
Meansq           11.9025   11.6736   11.6054   11.2337   11.6850   58.1002

$SST = \sum x^2 - n\bar{x}^2 = 348.616 - 30(3.40867)^2 = 348.616 - 348.5709 = 0.04506$
$SSR = C\sum \bar{x}_{i.}^2 - n\bar{x}^2 = 5(69.7145) - 30(3.40867)^2 = 348.5725 - 348.5709 = 0.00156$
$SSC = R\sum \bar{x}_{.j}^2 - n\bar{x}^2 = 6(58.1002) - 30(3.40867)^2 = 348.6012 - 348.5709 = 0.03030$

Source    SS        DF   MS        F          F.05              H0
Rows      0.00156    5   0.00031    0.473 ns  F(5,20) = 2.71    Row means equal
Columns   0.03030    4   0.00758   11.48 s    F(4,20) = 2.87    Column means equal
Within    0.01320   20   0.00066
Total     0.04506   29

$s = \sqrt{MSW} = .0257$

Note that $s$ is essentially the same for all three versions so there is no point in sticking to separate solutions for b).

b) Using your results from a) present two different confidence intervals for the difference between numbers of defects for the best and worst city and for the defects from the best and second best brands. Explain which of the intervals show a significant difference and why. (3)

Solution: Since I blew the statement of this section, but no one complained, full credit will be given for any sets of meaningful comparison of rows and columns.
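As a rough numerical illustration of the kind of comparison b) asks for, the sketch below evaluates Scheffé and individual (Bonferroni with m = 1) half-widths for Version 0. It assumes one measurement per cell, so a difference between two row means has standard error $\sqrt{2MSW/C}$ and a difference between two column means has $\sqrt{2MSW/R}$; the Scheffé multipliers are $\sqrt{(R-1)F_{(R-1,20)}}$ and $\sqrt{(C-1)F_{(C-1,20)}}$. MSW, the F values and $t_{.025}^{(20)} = 2.086$ are the figures quoted in this key. The choice of which means to compare (highest and lowest city, two highest brands) and all variable names are assumptions made for illustration only.

```python
import math

R, C = 6, 5                   # brands (rows) and cities (columns), one price per cell
MSW = 0.00068                 # within mean square from the Version 0 hand table
F_ROWS, F_COLS = 2.71, 2.87   # F.05(5,20) and F.05(4,20) as quoted in the solution
T_025_20 = 2.086              # t.025 with 20 df, used for the individual interval

se_rows = math.sqrt(2 * MSW / C)   # std. error of a difference of two row means
se_cols = math.sqrt(2 * MSW / R)   # std. error of a difference of two column means

half_width = {
    "scheffe_rows": math.sqrt((R - 1) * F_ROWS) * se_rows,
    "scheffe_cols": math.sqrt((C - 1) * F_COLS) * se_cols,
    "individual_rows": T_025_20 * se_rows,
    "individual_cols": T_025_20 * se_cols,
}

# Illustrative contrasts (an assumption): City 5 vs City 4, brand F vs brand E.
city_diff = 3.46833 - 3.35167
brand_diff = 3.434 - 3.424

for name, hw in half_width.items():
    diff = city_diff if name.endswith("cols") else brand_diff
    verdict = "significant" if abs(diff) > hw else "not significant"
    print(f"{name:16s} {diff:.4f} +/- {hw:.4f}  {verdict}")
```

Under these assumptions the city difference exceeds both half-widths while the brand difference falls inside both, which matches the ANOVA finding that cities differ and brands do not.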
The intervals presented in the outline were:

Scheffé Confidence Interval
If we desire intervals that will simultaneously be valid for a given confidence level for all possible intervals between means, use the following formulas. For row means, use $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm \sqrt{(R-1)F_{(R-1,\,RC(P-1))}}\sqrt{\frac{2MSW}{PC}}$. For column means, use $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm \sqrt{(C-1)F_{(C-1,\,RC(P-1))}}\sqrt{\frac{2MSW}{PR}}$. Note that if $P = 1$ we replace $RC(P-1)$ with $(R-1)(C-1)$. We have 6 rows and 5 columns with 1 measurement per cell. So $R - 1 = 5$, $C - 1 = 4$ and $RC(P-1)$ is replaced by 5(4) = 20. We already know that $s = \sqrt{MSW} = .026$. The row mean contrast is $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm \sqrt{5F_{(5,20)}}\sqrt{\frac{2MSW}{5}}$. The column mean contrast is $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm \sqrt{4F_{(4,20)}}\sqrt{\frac{2MSW}{6}}$. We already know that the 5% values are $F_{(4,20)} = 2.87$ and $F_{(5,20)} = 2.71$.

Bonferroni Confidence Interval
With $m = 1$ this is an individual interval, not simultaneously valid at 95%. Note that if $P = 1$ we replace $RC(P-1)$ with $(R-1)(C-1)$. We have 6 rows and 5 columns with 1 measurement per cell. So $R - 1 = 5$, $C - 1 = 4$ and $RC(P-1)$ is replaced by 5(4) = 20. The row mean contrast is $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm t^{(20)}_{\alpha/2}\sqrt{\frac{2MSW}{5}}$ and the column mean contrast is $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm t^{(20)}_{\alpha/2}\sqrt{\frac{2MSW}{6}}$. $t^{(20)}_{.025} = 2.086$.

Tukey Confidence Interval
These are sort of a loose version of the Scheffé intervals. Note that if $P = 1$ we replace $RC(P-1)$ with $(R-1)(C-1)$. We have 6 rows and 5 columns with 1 measurement per cell. So $R - 1 = 5$, $C - 1 = 4$ and $RC(P-1)$ is replaced by 5(4) = 20. The row mean contrast is $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm q^{(6,20)}_{\alpha}\sqrt{\frac{MSW}{5}}$ and the column mean contrast is $\mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm q^{(5,20)}_{\alpha}\sqrt{\frac{MSW}{6}}$. The Tukey table is shown below and, to match these formulas, it seems that the values we need are $q^{(6,20)}_{.05} = 4.45$ and $q^{(5,20)}_{.05} = 4.23$.

df = 20
m       2      3      4      5      6      7      8      9      10
0.05    2.95   3.58   3.96   4.23   4.45   4.62   4.77   4.90   5.01
0.01    4.02   4.64   5.02   5.29   5.51   5.69   5.84   5.97   6.09

c) What other method could we use on these data to see if city makes a difference while allowing for cross-classification? Under what circumstances would we use it? Try it and tell what it tests and what it shows.
(3)
Solution: The Friedman method is similar in intent to 2-way ANOVA with one measurement per cell. It is used to compare medians when the underlying distribution cannot be considered Normal. The data is ranked within rows. If the cities are $x_1$ through $x_5$ and the ranks are $r_1$ through $r_5$ we can make the tables below.

Solution: Version 4

Brand   x1     r1     x2     r2     x3     r3     x4     r4     x5     r5
A       3.47   4      3.40   3.0    3.38   2      3.36   1.0    3.50   5.0
B       3.43   4      3.41   2.0    3.42   3      3.39   1.0    3.44   5.0
C       3.44   4      3.41   2.0    3.43   3      3.40   1.0    3.45   5.0
D       3.46   5      3.45   3.5    3.40   2      3.34   1.0    3.45   3.5
E       3.46   4      3.40   2.0    3.39   1      3.43   3.0    3.48   5.0
F       3.44   4      3.43   2.5    3.42   1      3.43   2.5    3.49   5.0
Sum            25            15.0          12            9.5           28.5

The sums of ranks sum to 90, which checks out as $\sum SR_i = \frac{rc(c+1)}{2} = \frac{6(5)(6)}{2} = 90$. The Friedman statistic is
$\chi_F^2 = \frac{12}{rc(c+1)}\sum_i SR_i^2 - 3r(c+1) = \frac{12}{6(5)(6)}\left(25^2 + 15^2 + 12^2 + 9.5^2 + 28.5^2\right) - 3(6)(6) = \frac{2}{30}(1896.5) - 108 = 126.43333 - 108 = 18.4333$.
This has the chi-squared distribution with 4 degrees of freedom. The upper right corner of the chi-squared table appears below. Since the computed value of $\chi^2$ exceeds any value on the table, we can say that the p-value is below .005 and reject the null hypothesis of equal medians for cities.

Degrees of Freedom   1         2         3         4
0.005                7.87946   10.5966   12.8382   14.8603
0.010                6.63491    9.2103   11.3449   13.2767
0.025                5.02389    7.3778    9.3484   11.1433
0.050                3.84146    5.9915    7.8147    9.4877
0.100                2.70554    4.6052    6.2514    7.7794

Solution: Version 0

Brand   x1     r1     x2     r2     x3     r3     x4     r4     x5     r5
A       3.47   4      3.40   3.0    3.38   2.0    3.32   1.0    3.50   5.0
B       3.43   4      3.41   2.0    3.42   3.0    3.35   1.0    3.44   5.0
C       3.44   4      3.41   2.0    3.43   3.0    3.36   1.0    3.45   5.0
D       3.46   5      3.45   3.5    3.40   2.0    3.30   1.0    3.45   3.5
E       3.46   4      3.40   3.0    3.39   1.5    3.39   1.5    3.48   5.0
F       3.44   4      3.43   3.0    3.42   2.0    3.39   1.0    3.49   5.0
Sum            25            16.5          13.5          6.5           28.5

The sums of ranks sum to 90, which checks out as $\sum SR_i = \frac{rc(c+1)}{2} = \frac{6(5)(6)}{2} = 90$. The Friedman statistic is
$\chi_F^2 = \frac{12}{rc(c+1)}\sum_i SR_i^2 - 3r(c+1) = \frac{12}{6(5)(6)}\left(25^2 + 16.5^2 + 13.5^2 + 6.5^2 + 28.5^2\right) - 3(6)(6) = \frac{2}{30}(1934) - 108 = 128.9333 - 108 = 20.93$.
This has the chi-squared distribution with 4 degrees of freedom. The upper right corner of the chi-squared table appears above. Since the computed value of $\chi^2$ exceeds any value on the table, we can say that the p-value is below .005 and reject the null hypothesis of equal medians for cities.

Solution: Version 9

Brand   x1     r1     x2     r2     x3     r3     x4     r4     x5     r5
A       3.47   5.0    3.40   3      3.38   2.0    3.32   1.0    3.45   4.0
B       3.43   5.0    3.41   3      3.42   4.0    3.35   1.0    3.39   2.0
C       3.44   5.0    3.41   3      3.43   4.0    3.36   1.0    3.40   2.0
D       3.46   5.0    3.45   4      3.40   2.5    3.30   1.0    3.40   2.5
E       3.46   5.0    3.40   3      3.39   1.5    3.39   1.5    3.43   4.0
F       3.44   4.5    3.43   3      3.42   2.0    3.39   1.0    3.44   4.5
Sum            29.5          19            16            6.5           19

The sums of ranks sum to 90, which checks out as $\sum SR_i = \frac{rc(c+1)}{2} = \frac{6(5)(6)}{2} = 90$. The Friedman statistic is
$\chi_F^2 = \frac{12}{rc(c+1)}\sum_i SR_i^2 - 3r(c+1) = \frac{12}{6(5)(6)}\left(29.5^2 + 19^2 + 16^2 + 6.5^2 + 19^2\right) - 3(6)(6) = \frac{2}{30}(1890.50) - 108 = 126.0333 - 108 = 18.033$.
This has the chi-squared distribution with 4 degrees of freedom. The upper right corner of the chi-squared table appears above. Since the computed value of $\chi^2$ exceeds any value on the table, we can say that the p-value is below .005 and reject the null hypothesis of equal medians for cities.

d) (Extra credit) Check your results on the computer. The Minitab setup for both 2-way ANOVA and the corresponding non-parametric method is the same as the extra credit in the third graded assignment or the third computer problem. You may want to use and compare the Statistics pull-down menu and ANOVA or Nonparametrics to start with. Explain your results. (4) [12]

Solution: Note that because of rounding and small numbers there is quite a bit of difference between the numbers in the ANOVAs here and above. There is no difference in conclusions and little in the value of the standard errors. The Friedman test results are essentially identical.

Version 0
MTB > WSave "C:\Documents and Settings\RBOVE\My Documents\Minitab\252x0703101.MTW";
SUBC> Replace.
Saving file as: 'C:\Documents and Settings\RBOVE\My Documents\Minitab\251x07031-01.MTW'
MTB > print c1-c6

Data Display
Row  Brand  City 1  City 2  City 3  City 4  City 5
1    A      3.47    3.40    3.38    3.32    3.50
2    B      3.43    3.41    3.42    3.35    3.44
3    C      3.44    3.41    3.43    3.36    3.45
4    D      3.46    3.45    3.40    3.30    3.45
5    E      3.46    3.40    3.39    3.39    3.48
6    F      3.44    3.43    3.42    3.39    3.49

MTB > Stack c2-c6 c50;             #Puts all columns into C50 and column labels into C52.
SUBC> Subscripts c11;              #This could be done by hand, but pull-down
SUBC> UseNames.                    #menus are easy to use.
MTB > STACK c1 c1 c1 c1 c1 c51     #Stacks row labels in 51
MTB > print c50 - c52

Data Display
Row  C50   Rowno  City
1    3.47  A      City 1
2    3.40  A      City 2
3    3.38  A      City 3
4    3.32  A      City 4
5    3.50  A      City 5
6    3.43  B      City 1
7    3.41  B      City 2
8    3.42  B      City 3
9    3.35  B      City 4
10   3.44  B      City 5
11   3.44  C      City 1
12   3.41  C      City 2
13   3.43  C      City 3
14   3.36  C      City 4
15   3.45  C      City 5
16   3.46  D      City 1
17   3.45  D      City 2
18   3.40  D      City 3
19   3.30  D      City 4
20   3.45  D      City 5
21   3.46  E      City 1
22   3.40  E      City 2
23   3.39  E      City 3
24   3.39  E      City 4
25   3.48  E      City 5
26   3.44  F      City 1
27   3.43  F      City 2
28   3.42  F      City 3
29   3.39  F      City 4
30   3.49  F      City 5

MTB > Twoway C50 C51 C52;
SUBC> Means C51 C52.

Two-way ANOVA: C50 versus Rowno, City
Source  DF  SS         MS         F      P
Rowno   5   0.0020267  0.0004053  0.63   0.677   #High p-value. Do not reject equal firm means.
City    4   0.0485133  0.0121283  18.94  0.000   #Low p-value. Reject equal city means.
Error   20  0.0128067  0.0006403
Total   29  0.0633467
S = 0.02530   R-Sq = 79.78%   R-Sq(adj) = 70.69%

Rowno  Mean
1      3.414
2      3.410
3      3.418
4      3.412
5      3.424
6      3.434
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 3.400 to 3.460]

City    Mean
City 1  3.45000
City 2  3.41667
City 3  3.40667
City 4  3.35167
City 5  3.46833
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 3.360 to 3.480]

MTB > Friedman C50 C52 C51.

Friedman Test: C50 versus City blocked by Rowno
S = 20.93  DF = 4  P = 0.000   #Low p-value. Reject equal city medians.
S = 21.29  DF = 4  P = 0.000 (adjusted for ties)

City    N  Est Median  Sum of Ranks
City 1  6  3.4375      25.0
City 2  6  3.4095      16.5
City 3  6  3.4025      13.5
City 4  6  3.3525      6.5
City 5  6  3.4555      28.5
Grand median = 3.4115

Version 4
Results for: 252x07031-01a4.MTW
MTB > Stack c2-c6 c10;
SUBC> Subscripts c11;
SUBC> UseNames.
MTB > STACK c1 c1 c1 c1 c1 c12
MTB > Twoway c10 c12 c11;
SUBC> Means c12 c11.

Two-way ANOVA: C10 versus C12, C11
Source  DF  SS         MS         F     P
C12     5   0.0020267  0.0004053  0.63  0.677   #High p-value. Do not reject equal firm means.
C11     4   0.0240333  0.0060083  9.38  0.000   #Low p-value. Reject equal city means.
Error   20  0.0128067  0.0006403
Total   29  0.0388667
S = 0.02530   R-Sq = 67.05%   R-Sq(adj) = 52.22%

C12  Mean
A    3.422
B    3.418
C    3.426
D    3.420
E    3.432
F    3.442
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 3.400 to 3.460]

C11     Mean
City 1  3.45000
City 2  3.41667
City 3  3.40667
City 4  3.39167
City 5  3.46833
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 3.390 to 3.480]

MTB > Friedman c50 c52 c51.

Friedman Test: C50 versus C52 blocked by C51
S = 18.43  DF = 4  P = 0.001   #Low p-value. Reject equal city medians.
S = 18.75  DF = 4  P = 0.001 (adjusted for ties)

C52     N  Est Median  Sum of Ranks
City 1  6  3.4375      25.0
City 2  6  3.4095      15.0
City 3  6  3.4025      12.0
City 4  6  3.3925      9.5
City 5  6  3.4555      28.5
Grand median = 3.4195

Version 9
MTB > WOpen "C:\Documents and Settings\RBOVE\My Documents\Minitab\252x0703101a9.MTW".
Retrieving worksheet from file: 'C:\Documents and Settings\RBOVE\My Documents\Minitab\252x07031-01a9.MTW'
Worksheet was saved on Wed Apr 04 2007
Results for: 252x07031-01a9.MTW
MTB > Stack c2-c6 c10;
SUBC> Subscripts c12;
SUBC> UseNames.
MTB > stack c1 c1 c1 c1 c1 c11
MTB > Twoway c10 c11 c12;
SUBC> Means c11 c12.

Two-way ANOVA: C10 versus C11, C12
Source  DF  SS         MS         F      P
C11     5   0.0020267  0.0004053  0.63   0.677   #High p-value. Do not reject equal firm means.
C12     4   0.0307133  0.0076783  11.99  0.000   #Low p-value.
#Reject equal city means.
Error   20  0.0128067  0.0006403
Total   29  0.0455467
S = 0.02530   R-Sq = 71.88%   R-Sq(adj) = 59.23%

C11  Mean
A    3.404
B    3.400
C    3.408
D    3.402
E    3.414
F    3.424
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 3.380 to 3.440]

C12     Mean
City 1  3.45000
City 2  3.41667
City 3  3.40667
City 4  3.35167
City 5  3.41833
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 3.360 to 3.480]

MTB > Friedman c50 c52 c51.

Friedman Test: C50 versus C52 blocked by C51
S = 18.03  DF = 4  P = 0.001   #Low p-value. Reject equal city medians.
S = 18.50  DF = 4  P = 0.001 (adjusted for ties)

C52     N  Est Median  Sum of Ranks
City 1  6  3.4375      29.5
City 2  6  3.4095      19.0
City 3  6  3.4025      16.0
City 4  6  3.3525      6.5
City 5  6  3.4055      19.0
Grand median = 3.4015

2) A local official wants to know how land value affects home price. The official collects the following data. 'Home price' is the dependent variable and 'Land Value' is the independent variable. Both are in thousands of dollars. If you don't know what dependent and independent variables are, stop work until you find out.

Row  HomePr  LandVal
1    67      7.0
2    63      6.9
3    60      5.5
4    54      3.7
5    58      5.9
6    36      3.8
7    76      8.9
8    87      9.6
9    89      9.9
10   92      10.0

To personalize the data let g be the second to last digit in your student number. Mark your problem 'VERSION g.' Subtract g from 92, which is the last home price.

a) Compute the regression equation \(Y = b_0 + b_1 x\) to predict the home price on the basis of land value. (3) You may check your results on the computer, but let me see real or simulated hand calculations.
Version 0

i     Y     X      X^2      XY       Y^2
1     67    7.0    49.00    469.0    4489
2     63    6.9    47.61    434.7    3969
3     60    5.5    30.25    330.0    3600
4     54    3.7    13.69    199.8    2916
5     58    5.9    34.81    342.2    3364
6     36    3.8    14.44    136.8    1296
7     76    8.9    79.21    676.4    5776
8     87    9.6    92.16    835.2    7569
9     89    9.9    98.01    881.1    7921
10    92    10.0   100.00   920.0    8464
Sum   682   71.2   559.18   5225.2   49364

To summarize: \(n = 10\), \(\sum X = 71.2\), \(\sum Y = 682\), \(\sum XY = 5225.2\), \(\sum X^2 = 559.18\) and \(\sum Y^2 = 49364\). \(df = n - 2 = 10 - 2 = 8\).

Means: \(\bar{X} = \frac{\sum X}{n} = \frac{71.2}{10} = 7.12\), \(\bar{Y} = \frac{\sum Y}{n} = \frac{682}{10} = 68.2\).

Spare Parts:
\(SS_x = \sum X^2 - n\bar{X}^2 = 559.18 - 10(7.12)^2 = 52.236\)
\(SS_y = \sum Y^2 - n\bar{Y}^2 = 49364 - 10(68.2)^2 = 2851.6 = SST\) (Total Sum of Squares)
\(S_{xy} = \sum XY - n\bar{X}\bar{Y} = 5225.2 - 10(7.12)(68.2) = 369.36\)

Coefficients: \(b_1 = \frac{S_{xy}}{SS_x} = \frac{\sum XY - n\bar{X}\bar{Y}}{\sum X^2 - n\bar{X}^2} = \frac{369.36}{52.236} = 7.0710\) and \(b_0 = \bar{Y} - b_1\bar{X} = 68.2 - 7.0710(7.12) = 17.85458\).

So our equation is \(\hat{Y} = 17.85458 + 7.0710X\).

Check:
Regression Analysis: HomePr versus LandVal
The regression equation is
HomePr = 17.9 + 7.07 LandVal

Predictor  Coef    SE Coef  T     P
Constant   17.855  5.665    3.15  0.014
LandVal    7.0710  0.7576   9.33  0.000

S = 5.47564   R-Sq = 91.6%   R-Sq(adj) = 90.5%

Analysis of Variance
Source          DF  SS      MS      F      P
Regression      1   2611.7  2611.7  87.11  0.000
Residual Error  8   239.9   30.0
Total           9   2851.6

Unusual Observations
Obs  LandVal  HomePr  Fit    SE Fit  Residual  St Resid
4    3.7      54.00   44.02  3.12    9.98      2.22R

b) Compute \(R^2\). (2)
Please do not repeat any calculations from a), but recall the following.
Spare Parts:
\(SS_x = \sum X^2 - n\bar{X}^2 = 559.18 - 10(7.12)^2 = 52.236\)
\(SS_y = \sum Y^2 - n\bar{Y}^2 = 49364 - 10(68.2)^2 = 2851.6 = SST\) (Total Sum of Squares)
\(S_{xy} = \sum XY - n\bar{X}\bar{Y} = 5225.2 - 10(7.12)(68.2) = 369.36\)
\(b_1 = \frac{S_{xy}}{SS_x} = \frac{369.36}{52.236} = 7.0710\), \(b_0 = \bar{Y} - b_1\bar{X} = 68.2 - 7.0710(7.12) = 17.85458\)

So \(SSR = b_1 S_{xy} = 7.0710(369.36) = 2611.745\), and \(R^2 = \frac{SSR}{SST} = \frac{b_1 S_{xy}}{SS_y} = \frac{2611.745}{2851.6} = .9159\), or \(R^2 = \frac{S_{xy}^2}{SS_x SS_y} = \frac{369.36^2}{52.236(2851.6)} = .9159\).

c) Compute \(s_e\). (2)
\(SSE = SST - SSR = 2851.6 - 2611.745 = 239.855\), so \(s_e^2 = \frac{SSE}{n-2} = \frac{239.855}{8} = 29.9819\) and \(s_e = \sqrt{29.9819} = 5.47557\). This is more accurate than the next calculation.

Note also that if \(R^2\) is available, \(s_e^2 = \frac{SS_y(1 - R^2)}{n-2} = \frac{2851.6(1 - .9159)}{8} = 29.97745\) and \(s_e = \sqrt{29.97745} = 5.47517\).
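The hand calculations in parts a) through c) can be cross-checked with a short script. This is only a sketch in Python (not part of the Minitab work in this key); the function name simple_ols and the dictionary keys are made up for illustration. It uses the Version 0 data and the same spare-parts formulas as above.

```python
import math

# Version 0 data from the problem statement (both in thousands of dollars).
home_price = [67, 63, 60, 54, 58, 36, 76, 87, 89, 92]              # Y
land_value = [7.0, 6.9, 5.5, 3.7, 5.9, 3.8, 8.9, 9.6, 9.9, 10.0]   # X


def simple_ols(x, y):
    """Hypothetical helper: spare parts, coefficients, R^2 and s_e for Y = b0 + b1*X."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    ss_x = sum(v * v for v in x) - n * x_bar ** 2                 # SSx
    ss_y = sum(v * v for v in y) - n * y_bar ** 2                 # SSy = SST
    s_xy = sum(a * b for a, b in zip(x, y)) - n * x_bar * y_bar   # Sxy
    b1 = s_xy / ss_x
    b0 = y_bar - b1 * x_bar
    ssr = b1 * s_xy                                               # regression sum of squares
    r_sq = ssr / ss_y
    s_e = math.sqrt((ss_y - ssr) / (n - 2))                       # standard error of estimate
    return {"SSx": ss_x, "SSy": ss_y, "Sxy": s_xy,
            "b1": b1, "b0": b0, "R2": r_sq, "s_e": s_e}


fit = simple_ols(land_value, home_price)
for name, value in fit.items():
    print(f"{name:>4} = {value:.4f}")
```

Because the script carries full precision for \(b_1\), its \(s_e\) agrees with the Minitab value of S rather than with the slightly rounded hand result.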
d) Compute \(s_{b_0}\) and do a significance test on \(b_0\). (2)
Recall \(\bar{X} = 7.12\) and \(SS_x = 52.236\). We are now testing \(H_0: \beta_0 = \beta_{00}\) against \(H_1: \beta_0 \neq \beta_{00}\) with \(t = \frac{b_0 - \beta_{00}}{s_{b_0}}\). We are using \(t^{(n-2)}_{\alpha/2} = t^{(8)}_{.025} = 2.306\).

\[ s_{b_0}^2 = s_e^2\left(\frac{1}{n} + \frac{\bar{X}^2}{SS_x}\right) = 29.9819\left(\frac{1}{10} + \frac{7.12^2}{52.236}\right) = 29.9819(1.07049) = 32.0953. \]

So \(b_0 = 17.85458\) and \(s_{b_0} = \sqrt{32.0953} = 5.6653\). If we are testing \(H_0: \beta_0 = 0\) against \(H_1: \beta_0 \neq 0\), \(t = \frac{b_0 - 0}{s_{b_0}} = \frac{17.85458}{5.6653} = 3.152\). Since the rejection region is above 2.306 and below -2.306, we reject the null hypothesis and say that \(\beta_0\) is significant. A confidence interval would be \(\beta_0 = b_0 \pm t_{\alpha/2}\, s_{b_0} = 17.855 \pm 2.306(5.6653) = 17.855 \pm 13.064\). Since this confidence interval does not include zero, we reject the null hypothesis of insignificance. Note that at the 1% significance level we could not reject the null hypothesis.

e) Use your equation to predict the price of the average home that is on land worth $9000. Make this into a 95% interval. (3)
Solution: Recall that \(n = 10\), \(\bar{X} = 7.12\), \(SS_x = 52.236\), \(s_e^2 = 29.9819\) (or \(s_e = \sqrt{29.9819} = 5.47557\)), that both the dependent and independent variables are measured in thousands and that our equation is \(\hat{Y} = 17.85458 + 7.0710X\).

So if \(X_0 = 9\), \(\hat{Y}_0 = 17.85458 + 7.0710(9) = 81.49\). Since we are concerned with an average value, we do not use the prediction interval. The formula for the Confidence Interval is \(\mu_{Y_0} = \hat{Y}_0 \pm t\, s_{\hat{Y}}\), where

\[ s_{\hat{Y}}^2 = s_e^2\left(\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{SS_x}\right) = 29.9819\left(\frac{1}{10} + \frac{(9 - 7.12)^2}{52.236}\right) = 29.9819(0.1 + 0.06766) = 29.9819(0.16766) = 5.02683, \]

or \(s_{\hat{Y}} = \sqrt{5.02683} = 2.2421\). We already know that \(t^{(n-2)}_{\alpha/2} = t^{(8)}_{.025} = 2.306\) and that \(\hat{Y}_0 = 81.49\). So our confidence interval is \(\mu_{Y_0} = \hat{Y}_0 \pm t\, s_{\hat{Y}} = 81.49 \pm 2.306(2.2421) = 81.49 \pm 5.17\), so that we can say that we are 95% sure that the average house standing on $9 (thousand) worth of land will sell for between $76.32 and $86.66 (thousand).

f) Make a graph of the data. Show the regression line and the data points clearly. If you are not willing to do this neatly and accurately, don't bother.
(2) [26]

[Figure: Fitted Line: HomePrice versus LandVal]

Version 9
Because time was short, I was only able to run one other version of the regression, and that only on the computer, but because it is the most extreme version, all other results should fall between the results below and the results above. The run follows with interpretation. This is a run of my 'exec' 252sols. If you wish to check your results, you are welcome to use it. As you can see, it is easy to run and internally documented. To prepare your data, put y in c1 and x in c2. Then store it using the files pull-down menu and 'save worksheet.' This is to establish a path for Minitab to find 252sols. Give Minitab the command echo in order to be able to track what it is doing. Then download 252sols.txt from the courses server to your storage location, and start it with the Minitab command exec '252sols.txt'. Here is the annotated output.

MTB > WOpen "C:\Documents and Settings\RBOVE\My Documents\Minitab\252x0703201a9.MTW".
Retrieving worksheet from file: 'C:\Documents and Settings\RBOVE\My Documents\Minitab\252x07032-01a9.MTW'
Worksheet was saved on Thu Apr 05 2007
A worksheet of the same name is already open... current worksheet overwritten.
MTB > Save "C:\Documents and Settings\RBOVE\My Documents\Minitab\252x0703201a9.MTW";
SUBC> Replace.
Saving file as: 'C:\Documents and Settings\RBOVE\My Documents\Minitab\252x07032-01a9.MTW'
Existing file replaced.
MTB > #252sols Fakes simple ols regression by hand
MTB > regress c1 1 c2

Regression Analysis: HomePr versus LandVal
The regression equation is
HomePr = 20.5 + 6.57 LandVal

Predictor  Coef    SE Coef  T     P
Constant   20.488  5.644    3.63  0.007
LandVal    6.5748  0.7548   8.71  0.000

S = 5.45503   R-Sq = 90.5%   R-Sq(adj) = 89.3%

Analysis of Variance
Source          DF  SS      MS      F      P
Regression      1   2258.0  2258.0  75.88  0.000
Residual Error  8   238.1   29.8
Total           9   2496.1

Unusual Observations
Obs  LandVal  HomePr  Fit    SE Fit  Residual  St Resid
4    3.7      54.00   44.81  3.10    9.19      2.05R
6    3.8      36.00   45.47  3.04    -9.47     -2.09R
R denotes an observation with a large standardized residual.

MTB > let c3=c2*c2      #Put y in c1, x in c2
MTB > let c4=c1*c2      #c3 is x squared, #c4 is xy
MTB > let c5=c1*c1      #c5 is y squared
MTB > let k1=sum(c1)    #k1 is sum of y
MTB > let k2=sum(c2)    #k2 is sum of x
MTB > let k3=sum(c3)    #k3 is sum of x squared
MTB > let k4=sum(c4)    #k4 is sum of xy
MTB > let k5=sum(c5)    #k5 is sum of y squared
MTB > print c1-c5

Data Display
Row  HomePr  LandVal  C3      C4     C5
1    67      7.0      49.00   469.0  4489
2    63      6.9      47.61   434.7  3969
3    60      5.5      30.25   330.0  3600
4    54      3.7      13.69   199.8  2916
5    58      5.9      34.81   342.2  3364
6    36      3.8      14.44   136.8  1296
7    76      8.9      79.21   676.4  5776
8    87      9.6      92.16   835.2  7569
9    89      9.9      98.01   881.1  7921
10   83      10.0     100.00  920.0  8464

MTB > let k17=count(c1)       #k17 is n
MTB > let k21=k2/k17          #k21 is mean of x
MTB > let k22=k1/k17          #k22 is mean of y
MTB > let k18=k21*k21*k17
MTB > let k18=k3-k18          #k18 is SSx
MTB > let k19=k22*k22*k17
MTB > let k19=k5-k19          #k19 is SSy
MTB > let k20=k21*k22*k17
MTB > let k20=k4-k20          #k20 is Sxy
MTB > print k1-k5

Data Display
K1  673.000   #k1 is sum of y
K2  71.2000   #k2 is sum of x
K3  559.180   #k3 is sum of x squared
K4  5225.20   #k4 is sum of xy
K5  49364.0   #k5 is sum of y squared
MTB > print k17-k22

Data Display
K17  10.0000   #k17 is n
K18  52.2360   #k18 is SSx
K19  4071.10   #k19 is SSy
K20  433.440   #k20 is Sxy
K21  7.12000   #k21 is mean of x
K22  67.3000   #k22 is mean of y

MTB > end

3) A sales manager is trying to find out what method of payment works best. Salespersons are randomly assigned to payment by commission, salary or bonus. Data for units sold in a week is below.

Row  Commission  Salary  Bonus
1    25          25      28
2    35          25      41
3    20          22      21
4    30          20      31
5    25          25      26
6    30          23      26
7    26          22      46
8    17          25      32
9    31          20      27
10   30          26      35
11               24      23
12                       22
13                       26

To personalize the data let g be the second to last digit in your student number. Subtract 2g from 46 in the last column. Mark your problem 'VERSION g.' Example: Payme Well's student number is 000090, so she subtracts 18 and gets the following numbers.

Version 9
Row  Commission  Salary  Bonus
1    25          25      28
2    35          25      41
3    20          22      21
4    30          20      31
5    25          25      26
6    30          23      26
7    26          22      28
8    17          25      32
9    31          20      27
10   30          26      35
11               24      23
12                       22
13                       26

a) State your null hypothesis and test it by doing a 1-way ANOVA on these data and explain whether the test tells us that payment method matters or not. (4)

Version 0: You should be able to do the calculations below. Only the three columns of numbers were given to us.

Payment Method
Line                  Commission   Salary     Bonus      Sum
1                     25           25         28
2                     35           25         41
3                     20           22         21
4                     30           20         31
5                     25           25         26
6                     30           23         26
7                     26           22         46
8                     17           25         32
9                     31           20         27
10                    30           26         35
11                                 24         23
12                                            22
13                                            26
Sum                   269        + 257      + 384      = 910       (sum of x_ij)
n_j                   10         + 11       + 13       = 34        (n)
Mean x-bar_j          26.9         23.3636    29.5385    26.7647   (overall mean x-bar)
SS (sum of x^2)       7501       + 6049     + 12002    = 25552     (sum of x_ij^2)
(x-bar_j)^2           723.61       545.8595   872.5207

Note that all the squared means were gotten by going back to the original ratios used to calculate the means.
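The sums in the table above are all that the one-way ANOVA needs. As a rough cross-check, here is a sketch in Python (not the method used in this key, which relies on hand calculation and Minitab); the function name one_way_anova is invented for illustration. It builds the between, within and total sums of squares for the Version 0 data using the same computational formulas, \(SST = \sum x_{ij}^2 - n\bar{x}^2\) and \(SSB = \sum n_j \bar{x}_j^2 - n\bar{x}^2\).

```python
# Version 0 data from the problem statement (units sold in a week).
commission = [25, 35, 20, 30, 25, 30, 26, 17, 31, 30]
salary = [25, 25, 22, 20, 25, 23, 22, 25, 20, 26, 24]
bonus = [28, 41, 21, 31, 26, 26, 46, 32, 27, 35, 23, 22, 26]


def one_way_anova(*groups):
    """Hypothetical helper: sums of squares and F for a one-way ANOVA."""
    n = sum(len(g) for g in groups)          # total observations
    m = len(groups)                          # number of columns
    grand_mean = sum(sum(g) for g in groups) / n
    sst = sum(v * v for g in groups for v in g) - n * grand_mean ** 2
    ssb = sum(len(g) * (sum(g) / len(g)) ** 2 for g in groups) - n * grand_mean ** 2
    ssw = sst - ssb
    msb = ssb / (m - 1)
    msw = ssw / (n - m)
    return {"SST": sst, "SSB": ssb, "SSW": ssw,
            "MSB": msb, "MSW": msw, "F": msb / msw,
            "df_between": m - 1, "df_within": n - m}


anova = one_way_anova(commission, salary, bonus)
for name, value in anova.items():
    print(f"{name:>10} = {value:.4f}")

# 3.30 is the 5% table value for F(2,31) quoted in this key, not computed here.
print("reject equal means at 5%:", anova["F"] > 3.30)
```

Swapping 46 for 28 in the bonus list gives the Version 9 data.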
\[ SST = \sum x_{ij}^2 - n\bar{x}^2 = 25552 - 34(26.7647)^2 = 25552 - 24355.8824 = 1196.1177 \]

\[ SSB = \sum n_j \bar{x}_{.j}^2 - n\bar{x}^2 = 10(26.9)^2 + 11(23.3636)^2 + 13(29.5385)^2 - 34(26.7647)^2 \]
\[ = 10(723.61) + 11(545.8595) + 13(872.5207) - 24355.8824 = 7236.1 + 6004.4545 + 11342.7691 - 24355.8824 = 24583.3236 - 24355.8824 = 227.4413 \]

Source    SS          DF   MS         F          F.05               H0
Between   227.4413    2    113.7207   3.6393 s   F(2,31) = 3.30     Column means equal
Within    968.6765    31   31.2476
Total     1196.1177   33

Because our computed F statistic exceeds the 5% table value, we reject the null hypothesis of equal means for each payment method and conclude that method of payment matters. This was verified by the Minitab printout.

MTB > AOVOneway c1-c3;
SUBC> Tukey 5;
SUBC> Fisher 5.

One-way ANOVA: Commission, Salary, Bonus
Source  DF  SS      MS     F     P
Factor  2   227.4   113.7  3.64  0.038
Error   31  968.7   31.2
Total   33  1196.1
S = 5.590   R-Sq = 19.01%   R-Sq(adj) = 13.79%

Level       N   Mean    StDev
Commission  10  26.900  5.425
Salary      11  23.364  2.111
Bonus       13  29.538  7.412
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 21.0 to 31.5]
Pooled StDev = 5.590

b) Using your results from a) present individual and Tukey intervals for the difference between earnings for all three possible contrasts. Explain (i) under what circumstances you would use each interval and (ii) whether the intervals show a significant difference. (2)

Let's start with \(s = \sqrt{MSW} = \sqrt{31.2476} = 5.5899\), \(\bar{x}_1 = 26.9\), \(\bar{x}_2 = 23.3636\), \(\bar{x}_3 = 29.5385\), \(n_1 = 10\), \(n_2 = 11\) and \(n_3 = 13\). There are \(m = 3\) columns and the ANOVA printout gives us \(n - m = 31\) for the within degrees of freedom. From our tables we find \(t^{(31)}_{.025} = 2.040\) and \(q^{(3,31)}_{.05} = 3.49\).

The outline gives the following for these two intervals.

Contrast 1-2
Individual Confidence Interval: If we desire a single interval, we use the formula for the difference between two means when the variance is known.
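For example, if we want the difference between means of column 1 and column 2:
\[ \mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm t^{(n-m)}_{\alpha/2}\, s\sqrt{\frac{1}{n_1} + \frac{1}{n_2}} = (26.9 - 23.3636) \pm 2.040\sqrt{31.2476\left(\frac{1}{10} + \frac{1}{11}\right)} \]
\[ = 3.54 \pm 2.040\sqrt{5.96545} = 3.54 \pm 2.040(2.442) = 3.54 \pm 4.98 \text{ ns} \]
Tukey Confidence Interval: This applies to all possible differences.
\[ \mu_1 - \mu_2 = (\bar{x}_1 - \bar{x}_2) \pm q^{(m,n-m)}_{\alpha}\, \frac{s}{\sqrt{2}}\sqrt{\frac{1}{n_1} + \frac{1}{n_2}} = (26.9 - 23.3636) \pm 3.49\sqrt{\frac{31.2476}{2}\left(\frac{1}{10} + \frac{1}{11}\right)} \]
\[ = 3.54 \pm 3.49\sqrt{\tfrac{1}{2}(5.96545)} = 3.54 \pm 3.49(1.727) = 3.54 \pm 6.03 \text{ ns} \]

Contrast 1-3
Individual Confidence Interval: If we desire a single interval, we use the formula for the difference between two means when the variance is known. Here we want the difference between means of column 1 and column 3.
\[ \mu_1 - \mu_3 = (\bar{x}_1 - \bar{x}_3) \pm t^{(n-m)}_{\alpha/2}\, s\sqrt{\frac{1}{n_1} + \frac{1}{n_3}} = (26.9 - 29.5385) \pm 2.040\sqrt{31.2476\left(\frac{1}{10} + \frac{1}{13}\right)} \]
\[ = -2.63 \pm 2.040\sqrt{5.52842} = -2.63 \pm 2.040(2.351) = -2.63 \pm 4.80 \text{ ns} \]
Tukey Confidence Interval: This applies to all possible differences.
\[ \mu_1 - \mu_3 = (\bar{x}_1 - \bar{x}_3) \pm q^{(m,n-m)}_{\alpha}\, \frac{s}{\sqrt{2}}\sqrt{\frac{1}{n_1} + \frac{1}{n_3}} = (26.9 - 29.5385) \pm 3.49\sqrt{\frac{31.2476}{2}\left(\frac{1}{10} + \frac{1}{13}\right)} \]
\[ = -2.63 \pm 3.49\sqrt{\tfrac{1}{2}(5.52842)} = -2.63 \pm 3.49(1.662) = -2.63 \pm 5.80 \text{ ns} \]

Contrast 2-3
Individual Confidence Interval: If we desire a single interval, we use the formula for the difference between two means when the variance is known. Here we want the difference between means of column 2 and column 3.
\[ \mu_2 - \mu_3 = (\bar{x}_2 - \bar{x}_3) \pm t^{(n-m)}_{\alpha/2}\, s\sqrt{\frac{1}{n_2} + \frac{1}{n_3}} = (23.3636 - 29.5385) \pm 2.040\sqrt{31.2476\left(\frac{1}{11} + \frac{1}{13}\right)} \]
\[ = -6.16 \pm 2.040\sqrt{5.24435} = -6.16 \pm 2.040(2.290) = -6.16 \pm 4.67 \text{ s} \]
Tukey Confidence Interval: This applies to all possible differences.
\[ \mu_2 - \mu_3 = (\bar{x}_2 - \bar{x}_3) \pm q^{(m,n-m)}_{\alpha}\, \frac{s}{\sqrt{2}}\sqrt{\frac{1}{n_2} + \frac{1}{n_3}} = (23.3636 - 29.5385) \pm 3.49\sqrt{\frac{31.2476}{2}\left(\frac{1}{11} + \frac{1}{13}\right)} \]
\[ = -6.16 \pm 3.49\sqrt{\tfrac{1}{2}(5.24435)} = -6.16 \pm 3.49(1.619) = -6.16 \pm 5.65 \text{ s} \]
Only the interval for contrast 2-3 excludes zero, so only the difference between salary and bonus is significant.

The remainder of the Minitab printout for this problem follows.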
Tukey 95% Simultaneous Confidence Intervals
All Pairwise Comparisons
Individual confidence level = 98.04%

Commission subtracted from:
        Lower   Center  Upper
Salary  -9.547  -3.536  2.474
Bonus   -3.147  2.638   8.424
[Character plot of the intervals; axis -12.0 to 6.0]

Salary subtracted from:
        Lower  Center  Upper
Bonus   0.540  6.175   11.810
[Character plot of the interval; axis -12.0 to 6.0]

Fisher 95% Individual Confidence Intervals
All Pairwise Comparisons
Simultaneous confidence level = 88.04%

Commission subtracted from:
        Lower   Center  Upper
Salary  -8.518  -3.536  1.445
Bonus   -2.157  2.638   7.434
[Character plot of the intervals; axis -6.0 to 12.0]

Salary subtracted from:
        Lower  Center  Upper
Bonus   1.504  6.175   10.845
[Character plot of the interval; axis -6.0 to 12.0]

c) What other method could we use on these data to see if payment method makes a difference? Under what circumstances would we use it? Try it and tell what it tests and what it shows. (3) [37]

The alternative to one-way ANOVA for situations in which the parent distribution is not Normal is the Kruskal-Wallis test. The original data is replaced by ranks.

Data Display
Row       Commission  Rank   Salary  Rank   Bonus  Rank
          x1          r1     x2      r2     x3     r3
1         25          14.5   25      14.5   28     24.0
2         35          31.5   25      14.5   41     33.0
3         20          3.0    22      7.0    21     5.0
4         30          26.0   20      3.0    31     28.5
5         25          14.5   25      14.5   26     20.0
6         30          26.0   23      9.5    26     20.0
7         26          20.0   22      7.0    46     34.0
8         17          1.0    25      14.5   32     30.0
9         31          28.5   20      3.0    27     23.0
10        30          26.0   26      20.0   35     31.5
11                           24      11.0   23     9.5
12                                          22     7.0
13                                          26     20.0
Rank Sum              191            118.5         285.5
n_i                   10             11            13

Note that the sum of the first thirty-four numbers is \(\frac{34(35)}{2} = 595\), so that we can check our ranking by noting that 191 + 118.5 + 285.5 = 595.
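The ranking and the check above can be reproduced with a few lines of code. This is a sketch in Python (the key itself uses hand ranking and Minitab); the helper name average_ranks is made up for illustration. It pools the three Version 0 columns, gives tied values the average of the positions they occupy, sums the ranks by column, and then forms the usual Kruskal-Wallis statistic \(H = \frac{12}{n(n+1)}\sum \frac{SR_i^2}{n_i} - 3(n+1)\) from those sums, without the adjustment for ties.

```python
def average_ranks(values):
    """Hypothetical helper: ranks 1..n, with ties sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1   # average of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


# Version 0 data from the problem statement.
groups = {
    "Commission": [25, 35, 20, 30, 25, 30, 26, 17, 31, 30],
    "Salary": [25, 25, 22, 20, 25, 23, 22, 25, 20, 26, 24],
    "Bonus": [28, 41, 21, 31, 26, 26, 46, 32, 27, 35, 23, 22, 26],
}

pooled = [v for g in groups.values() for v in g]
ranks = average_ranks(pooled)

rank_sums = {}
position = 0
for name, g in groups.items():
    rank_sums[name] = sum(ranks[position:position + len(g)])
    position += len(g)

n = len(pooled)
check_total = n * (n + 1) / 2            # sum of the first n integers
h_stat = 12 / (n * (n + 1)) * sum(
    rank_sums[name] ** 2 / len(groups[name]) for name in groups
) - 3 * (n + 1)

print(rank_sums, sum(rank_sums.values()), check_total)
print(f"H = {h_stat:.4f}")
```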
\[ H = \frac{12}{n(n+1)} \sum_i \frac{SR_i^2}{n_i} - 3(n+1) = \frac{12}{34(35)}\left[\frac{191^2}{10} + \frac{118.5^2}{11} + \frac{285.5^2}{13}\right] - 3(35) \]
\[ = \frac{12}{34(35)}\left[3648.1 + 1276.5682 + 6270.0192\right] - 105 = \frac{12(11194.6874)}{34(35)} - 105 = 112.8876 - 105 = 7.8876. \]

The design of the data is too large for the Kruskal-Wallis table, so we compare this with \(\chi^{2(2)}_{.05} = 5.9915\). Since the computed chi-squared exceeds the table value, we can reject the null hypothesis of equal medians. The computer agrees. The Minitab printout follows.

MTB > Kruskal-Wallis c4 c5.

Kruskal-Wallis Test: CSB0 versus Pay0
Kruskal-Wallis Test on CSB0
Pay0        N   Median  Ave Rank  Z
Bonus       13  27.00   22.0      2.06
Commission  10  28.00   19.1      0.60
Salary      11  24.00   10.8      -2.72
Overall     34          17.5
H = 7.89  DF = 2  P = 0.019
H = 7.97  DF = 2  P = 0.019 (adjusted for ties)

d) (Extra Credit) Do a Levene test on these data and explain what it tests and shows. (4)
The Levene test is a test for equal variances. The original data is below.

Row  Commission  Salary  Bonus
1    25          25      28
2    35          25      41
3    20          22      21
4    30          20      31
5    25          25      26
6    30          23      26
7    26          22      46
8    17          25      32
9    31          20      27
10   30          26      35
11               24      23
12                       22
13                       26

To find the median, put the data in order and pick the center number or an average of the two center numbers. We find that the median of Commission is 28.00, the median of Salary is 24.00 and the median of Bonus is 27.00. We subtract the column median from each column.

Row  Commission  Salary  Bonus
1    -3          1       1
2    7           1       14
3    -8          -2      -6
4    2           -4      4
5    -3          1       -1
6    2           -1      -1
7    -2          -2      19
8    -11         1       5
9    3           -4      0
10   2           2       8
11               0       -4
12                       -5
13                       -1

We now take absolute values.

Row  Commission  Salary  Bonus
1    3           1       1
2    7           1       14
3    8           2       6
4    2           4       4
5    3           1       1
6    2           1       1
7    2           2       19
8    11          1       5
9    3           4       0
10   2           2       8
11               0       4
12                       5
13                       1

An ANOVA is done on these 3 columns.

Source    SS      DF   MS     F          F.05              H0
Between   79.2    2    39.6   2.538 ns   F(2,31) = 3.30    Column means equal
Within    485.1   31   15.6
Total     564.3   33

Because we cannot reject the null hypothesis, we cannot say that the variances are not equal. As long as we use the Levene test, Minitab agrees.
However, the more powerful Bartlett test causes us to reject the null hypothesis of equal variances if the data is Normally distributed.

Test for Equal Variances: Commission, Salary, Bonus
95% Bonferroni confidence intervals for standard deviations
            N   Lower    StDev    Upper
Commission  10  3.45609  5.42525  11.5454
Salary      11  1.36993  2.11058  4.2692
Bonus       13  4.96227  7.41188  13.8626

Bartlett's Test (normal distribution)
Test statistic = 12.69, p-value = 0.002
Levene's Test (any continuous distribution)
Test statistic = 2.53, p-value = 0.096

f) (Extra Credit) Check your results on the computer. The Minitab setup for 1-way ANOVA can either be unstacked (in columns) or stacked (one column for data and one for column name). There is a Tukey option. The corresponding non-parametric methods require the stacked presentation. You may want to use and compare the Statistics pull-down menu and ANOVA or Nonparametrics to start with. You may also want to try the Mood median test and the test for equal variances which are on the menu categories above. Even better, do a normality test on your columns. Use the Statistics pull-down menu to find the normality tests. The Kolmogorov-Smirnov option is actually Lilliefors. The ANOVA menu can check for equality of variances. In light of these tests was the 1-way ANOVA appropriate? You can get descriptions of unfamiliar tests by using the Help menu and the alphabetic command list or the Stat guide. (Up to 7) [12]

Most of this was done above. The printout below is for the Normality tests and Mood's median test.

----- 4/18/2007 1:42:53 PM -----
Welcome to Minitab, press F1 for help.
Retrieving worksheet from file: 'C:\Documents and Settings\RBOVE\My Documents\Minitab\252x07031-03-v0.MTW'
Worksheet was saved on Tue Apr 17 2007
Results for: 252x07031-03-v0.MTW
MTB > Save "C:\Documents and Settings\RBOVE\My Documents\Minitab\252x07031-03-v0.MTW";
SUBC> Replace.
Saving file as: 'C:\Documents and Settings\RBOVE\My Documents\Minitab\252x07031-03-v0.MTW'
Existing file replaced.
MTB > NormTest c1;
SUBC> KSTest.
[Figure: Probability Plot of Commission]
MTB > NormTest c2;
SUBC> KSTest.
[Figure: Probability Plot of Salary]
MTB > NormTest c3;
SUBC> KSTest.
[Figure: Probability Plot of Bonus]

Unfortunately, this is hard to read in this format. In the little boxes the last item is the p-value for the test and in each case the p-value is reported as '> .150.' Because this p-value is above any significance level we might use, we cannot reject the null hypothesis of Normality.

MTB > WOpen "C:\Documents and Settings\RBOVE\My Documents\Minitab\252x0703103.MTW".
Retrieving worksheet from file: 'C:\Documents and Settings\RBOVE\My Documents\Minitab\252x07031-03.MTW'
Worksheet was saved on Wed Apr 04 2007
Results for: 252x07031-03.MTW
MTB > print c5 c6

Data Display
Row  Pay0        C6
1    Commission  14.5
2    Commission  31.5
3    Commission  3.0
4    Commission  26.0
5    Commission  14.5
6    Commission  26.0
7    Commission  20.0
8    Commission  1.0
9    Commission  28.5
10   Commission  26.0
11   Salary      14.5
12   Salary      14.5
13   Salary      7.0
14   Salary      3.0
15   Salary      14.5
16   Salary      9.5
17   Salary      7.0
18   Salary      14.5
19   Salary      3.0
20   Salary      20.0
21   Salary      11.0
22   Bonus       24.0
23   Bonus       33.0
24   Bonus       5.0
25   Bonus       28.5
26   Bonus       20.0
27   Bonus       20.0
28   Bonus       34.0
29   Bonus       30.0
30   Bonus       23.0
31   Bonus       31.5
32   Bonus       9.5
33   Bonus       7.0
34   Bonus       20.0

MTB > Mood C6 c5.

Mood Median Test: C6 versus Pay0
Mood median test for C6
Chi-Square = 11.53  DF = 2  P = 0.003

Pay0        N<=  N>  Median  Q3-Q1
Bonus       3    10  23.0    16.0
Commission  4    6   23.0    15.0
Salary      10   1   11.0    7.5
[Character plot of individual 95.0% CIs for the medians; axis 7.0 to 28.0]
Overall median = 17.3

The Mood test gives us a low p-value which means that the medians are not similar.
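The chi-square reported by the Mood median test can be recovered from the two rows of counts in the printout above, since the test is an ordinary chi-square test on the table of counts at or below the overall median versus counts above it. The following is a sketch in Python under that reading of the printout; the function name chi_square_2xk is invented for illustration, and the counts are copied from the N<= and N> columns rather than recomputed from the data.

```python
# (count <= overall median, count > overall median), copied from the Minitab printout.
counts = {"Bonus": (3, 10), "Commission": (4, 6), "Salary": (10, 1)}


def chi_square_2xk(table):
    """Hypothetical helper: Pearson chi-square for a 2 x k table of counts."""
    below_total = sum(below for below, _ in table.values())
    above_total = sum(above for _, above in table.values())
    n = below_total + above_total
    statistic = 0.0
    for below, above in table.values():
        column_total = below + above
        for observed, row_total in ((below, below_total), (above, above_total)):
            expected = row_total * column_total / n   # E = (row total)(column total)/n
            statistic += (observed - expected) ** 2 / expected
    return statistic


mood_chi_square = chi_square_2xk(counts)
degrees_of_freedom = len(counts) - 1      # (2 - 1)(k - 1)
print(f"Chi-Square = {mood_chi_square:.2f}, DF = {degrees_of_freedom}")
```

With 2 degrees of freedom the 5% table value is 5.9915, so the computed statistic falls well inside the rejection region.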
Because we have concluded that the data is Normally distributed, we have to go with the Bartlett test, which says the variances are unequal. If we had only the Levene result and the Normality tests, we could use ANOVA, but the Bartlett test says that we have unequal variances. Under the circumstances, we have a quandary and would probably go with the result of the Mood or Kruskal-Wallis test. In any case, we can conclude that the means are different and that we are probably better off using commissions.

Version 9
MTB > print c11 - c13

Data Display
Row  Co0  Sa0  Bo9
1    25   25   28
2    35   25   41
3    20   22   21
4    30   20   31
5    25   25   26
6    30   23   26
7    26   22   28
8    17   25   32
9    31   20   27
10   30   26   35
11        24   23
12             22
13             26

MTB > AOVOneway c11-c13;
SUBC> Tukey 5;
SUBC> Fisher 5.

One-way ANOVA: Co0, Sa0, Bo9        #Note that we do not reject equal means.
Source  DF  SS     MS    F     P
Factor  2   143.0  71.5  3.28  0.051
Error   31  675.1  21.8
Total   33  818.1
S = 4.667   R-Sq = 17.48%   R-Sq(adj) = 12.15%

Level  N   Mean    StDev
Co0    10  26.900  5.425
Sa0    11  23.364  2.111
Bo9    13  28.154  5.520
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 21.0 to 30.0]
Pooled StDev = 4.667

Tukey 95% Simultaneous Confidence Intervals
All Pairwise Comparisons
Individual confidence level = 98.04%

Co0 subtracted from:
     Lower   Center  Upper
Sa0  -8.554  -3.536  1.481
Bo9  -3.576  1.254   6.084
[Character plot of the intervals; axis -5.0 to 10.0]

Sa0 subtracted from:
     Lower  Center  Upper
Bo9  0.086  4.790   9.495
[Character plot of the interval; axis -5.0 to 10.0]

Fisher 95% Individual Confidence Intervals
All Pairwise Comparisons
Simultaneous confidence level = 88.04%

Co0 subtracted from:
     Lower   Center  Upper
Sa0  -7.695  -3.536  0.622
Bo9  -2.750  1.254   5.257
[Character plot of the intervals; axis -5.0 to 10.0]

Sa0 subtracted from:
     Lower  Center  Upper
Bo9  0.891  4.790   8.689
[Character plot of the interval; axis -5.0 to 10.0]

MTB > vartest c11-c13;
SUBC> unstacked.

Test for Equal Variances: Co0, Sa0, Bo9
95% Bonferroni confidence intervals for standard deviations
     N   Lower    StDev    Upper
Co0  10  3.45609  5.42525  11.5454
Sa0  11  1.36993  2.11058  4.2692
Bo9  13  3.69589  5.52036  10.3248

Bartlett's Test (normal distribution)
Test statistic = 8.75, p-value = 0.013
Levene's Test (any continuous distribution)
Test statistic = 2.25, p-value = 0.123

MTB > Stack c11 c12 c13 c14;
SUBC> Subscripts c15;
SUBC> UseNames.
MTB > rank c14 c16
MTB > unstack c16 c17 c18 c19;
SUBC> subscripts c15;
SUBC> varnames.
MTB > Kruskal-Wallis c14 c15

Kruskal-Wallis Test: CSB9 versus Pay 9
Kruskal-Wallis Test on CSB9
Pay 9    N   Median  Ave Rank  Z
Bo9      13  27.00   21.6      1.88
Co0      10  28.00   19.6      0.79
Sa0      11  24.00   10.8      -2.72
Overall  34          17.5
H = 7.64  DF = 2  P = 0.022
H = 7.73  DF = 2  P = 0.021 (adjusted for ties)

MTB > print c11 c17 c12 c18 c13 c19

Data Display
Row  Co0  C16_Bo9  Sa0  C16_Co0  Bo9  C16_Sa0
1    25   24.5     25   14.5     28   14.5
2    35   34.0     25   32.5     41   14.5
3    20   5.0      22   3.0      21   7.0
4    30   29.5     20   27.0     31   3.0
5    25   20.0     25   14.5     26   14.5
6    30   20.0     23   27.0     26   9.5
7    26   24.5     22   20.0     28   7.0
8    17   31.0     25   1.0      32   14.5
9    31   23.0     20   29.5     27   3.0
10   30   32.5     26   27.0     35   20.0
11        9.5      24            23   11.0
12        7.0                    22
13        20.0                   26

MTB > copy c11-c13 c31-c33
* NOTE * Column lengths not equal.

We are setting up for a Levene test.
MTB > name k31 'medco0a'
MTB > name k32 'medsal0a'
MTB > name k33 'medbon9a'
MTB > let k31 = median(c31)
MTB > let k32 = median(c32)
MTB > let k33 = median(c33)
MTB > print k31-k33

Data Display
medco0a   28.0000
medsal0a  24.0000
medbon9a  27.0000

MTB > let c31 = c31 - k31
MTB > let c32 = c32 - k32
MTB > let c33 = c33 - k33
MTB > describe c31 - c33

Descriptive Statistics: Co0a, Sal0a, Bon9a
Variable  N   N*  Mean    SE Mean  StDev  Minimum  Q1      Median  Q3
Co0a      10  0   -1.10   1.72     5.43   -11.00   -4.25   0.00    2.25
Sal0a     11  0   -0.636  0.636    2.111  -4.000   -2.000  0.000   1.000
Bon9a     13  0   1.15    1.53     5.52   -6.00    -2.50   0.00    4.50

Variable  Maximum
Co0a      7.00
Sal0a     2.000
Bon9a     14.00

MTB > print c31 - c33

Data Display
Row  Co0a  Sal0a  Bon9a
1    -3    1      1
2    7     1      14
3    -8    -2     -6
4    2     -4     4
5    -3    1      -1
6    2     -1     -1
7    -2    -2     1
8    -11   1      5
9    3     -4     0
10   2     2      8
11         0      -4
12                -5
13                -1

MTB > let c31 = abs(c31)
MTB > let c32 = abs(c32)
MTB > let c33 = abs(c33)
MTB > print c31-c33

Data Display
Row  Co0a  Sal0a  Bon9a
1    3     1      1
2    7     1      14
3    8     2      6
4    2     4      4
5    3     1      1
6    2     1      1
7    2     2      1
8    11    1      5
9    3     4      0
10   2     2      8
11         0      4
12                5
13                1

MTB > AOVO c31 - c33

One-way ANOVA: Co0a, Sal0a, Bon9a
Source  DF  SS      MS     F     P
Factor  2   42.24   21.12  2.25  0.123   #This is the last act of the Levene Test.
Error   31  291.20  9.39                 #The high p-value means we cannot reject
Total   33  333.44                       #the null hypothesis of equal means
S = 3.065   R-Sq = 12.67%   R-Sq(adj) = 7.03%

Level  N   Mean   StDev
Co0a   10  4.300  3.199
Sal0a  11  1.727  1.272
Bon9a  13  3.923  3.904
[Character plot of individual 95% CIs for mean, based on pooled StDev; axis 0.0 to 6.0]
Pooled StDev = 3.065
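The Minitab steps above (subtract each column's median, take absolute values, run a one-way ANOVA on the results) can be collected into one small routine. This is a sketch in Python, not part of the Minitab session; the function name levene_f is made up for illustration, and it implements the median-centered version of the test exactly as described in this key.

```python
import statistics

# Version 9 columns from the Minitab printout.
co0 = [25, 35, 20, 30, 25, 30, 26, 17, 31, 30]
sa0 = [25, 25, 22, 20, 25, 23, 22, 25, 20, 26, 24]
bo9 = [28, 41, 21, 31, 26, 26, 28, 32, 27, 35, 23, 22, 26]
# Version 0 differs only in the seventh bonus value (46 instead of 28).
bo0 = [28, 41, 21, 31, 26, 26, 46, 32, 27, 35, 23, 22, 26]


def levene_f(*groups):
    """Hypothetical helper: F ratio from a one-way ANOVA on |x - column median|."""
    abs_dev = [[abs(v - statistics.median(g)) for v in g] for g in groups]
    n = sum(len(g) for g in abs_dev)
    m = len(abs_dev)
    grand_mean = sum(sum(g) for g in abs_dev) / n
    means = [sum(g) / len(g) for g in abs_dev]
    ssb = sum(len(g) * (mean - grand_mean) ** 2 for g, mean in zip(abs_dev, means))
    ssw = sum((v - mean) ** 2 for g, mean in zip(abs_dev, means) for v in g)
    return (ssb / (m - 1)) / (ssw / (n - m))


f_version_9 = levene_f(co0, sa0, bo9)
f_version_0 = levene_f(co0, sa0, bo0)
print(f"Levene F, Version 9: {f_version_9:.2f}")
print(f"Levene F, Version 0: {f_version_0:.2f}")
```

Both ratios fall below the 5% table value of 3.30 for F(2,31) quoted in this key, so in neither version does the Levene test reject equal variances.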