multi-ex


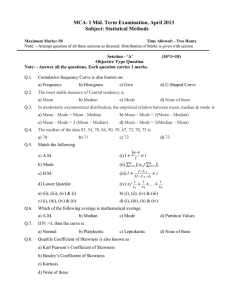

BSc Mining Engineering
Mining and Metallurgical Engineering Department
Multivariate Statistics 2003: Exercises
Dr SaMeH

1. The data for multivariate analysis should always be examined and tested for normality and homogeneity of variances.
a. Why?
b. Explain, with sketches, two methods to assess the normality of the variables.

Answer:

(a) The main reasons for these tests are to (1) determine the suitability of the data for analysis, (2) decide whether transformations are necessary, and (3) decide what form of the data to use.

(b) Testing for normality and homogeneity

Normality of variables is assessed by either statistical or graphical methods. Two components of normality are skewness and kurtosis. Skewness has to do with the symmetry of the distribution; a skewed variable is one whose mean is not in the centre of the distribution. Kurtosis has to do with the peakedness of a distribution; a non-normal distribution is either too peaked (with short, thick tails) or too flat (with long, thin tails). Figure 1 shows a normal distribution, distributions with skewness, and distributions with non-normal kurtosis. Normality can be tested with normal probability plots of the variables, tests of skewness and kurtosis, chi-square goodness-of-fit tests and/or histograms.

(1) Coefficient of skewness: the coefficient of skewness is often calculated to determine whether the distribution is symmetrical or whether it tails to the left (negative skewness) or to the right (positive skewness). Because a normal distribution is symmetrical, departures from symmetry measured by the skewness indicate departures from normality.

(2) Coefficient of kurtosis: the coefficient of kurtosis, CK, is a measure of the flatness of a distribution and may be tested. For a normal distribution, CK has a value of 0.263. This value applies to the percentile coefficient of kurtosis (half the interquartile range divided by the range between the 10th and 90th percentiles); the moment coefficient of kurtosis of a normal distribution is 3.
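Both coefficients can be computed directly from data. The following is a minimal Python sketch using only the standard library, assuming the moment definition of skewness (mean cubed deviation divided by σ³) and the percentile definition of kurtosis (half the interquartile range divided by the 10th to 90th percentile range), which is the definition that gives 0.263 for a normal distribution. The function names are hypothetical, and sample percentiles depend on the interpolation method (here the default "exclusive" method of `statistics.quantiles`).

```python
from statistics import NormalDist, fmean, pstdev, quantiles


def moment_skewness(values):
    """Moment coefficient of skewness: mean cubed deviation / sigma**3.

    0 for a symmetrical distribution, > 0 when it tails to the right,
    < 0 when it tails to the left.
    """
    m = fmean(values)
    s = pstdev(values)  # population standard deviation (divisor n)
    if s == 0:
        raise ValueError("skewness is undefined for constant data")
    return fmean([(v - m) ** 3 for v in values]) / s ** 3


def percentile_kurtosis(q1, q3, p10, p90):
    """Percentile coefficient of kurtosis: semi-interquartile range
    divided by the 10th-90th percentile range."""
    return ((q3 - q1) / 2) / (p90 - p10)


def sample_percentile_kurtosis(values):
    """Estimate the percentile kurtosis from data using sample quartiles
    and deciles (statistics.quantiles, default 'exclusive' method)."""
    q = quantiles(values, n=4)   # [Q1, median, Q3]
    d = quantiles(values, n=10)  # [P10, P20, ..., P90]
    return percentile_kurtosis(q[0], q[2], d[0], d[8])


# Theoretical value for a standard normal distribution, from its exact quantiles.
nd = NormalDist()
kappa_normal = percentile_kurtosis(
    nd.inv_cdf(0.25), nd.inv_cdf(0.75), nd.inv_cdf(0.10), nd.inv_cdf(0.90)
)
print(round(kappa_normal, 3))  # 0.263
```

A sample value of the percentile coefficient well above 0.263 points to a flatter-than-normal distribution, and a value well below it to a more peaked one.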
Figure 1: Normal distribution, distributions with skewness, and distributions with kurtosis. Panels: (a) normal; (b) positive skewness; (c) negative skewness; (d) positive kurtosis; (e) negative kurtosis.

2. Explain when and why we use the following: the correlation coefficient, PCA and FA.

Answer:

Correlation coefficient ρ

The correlation coefficient ρ is the statistic most commonly used to summarise the relationship between two variables. It is calculated from:

$$\rho = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i - m_x)(y_i - m_y)}{\sigma_x \sigma_y}$$

where n is the number of data values; x_1, …, x_n and y_1, …, y_n are the data values of the first and second variables; m_x and m_y are their mean values; and σ_x and σ_y are their standard deviations.

The correlation coefficient measures the strength of the linear relationship between two variables and takes values from -1.0 (perfect negative, or inverse, correlation) to +1.0 (perfect positive, or direct, correlation). A value of ρ = 0.0 indicates no linear correlation.

Multivariate statistics methods

The best-known multivariate analysis techniques are principal component analysis (PCA), factor analysis (FA), cluster analysis and canonical analysis. The first two, PCA and FA, are applied to a single set of variables when one is interested in discovering which variables in the set form coherent subsets that are relatively independent of one another. Variables are combined into factors, which are thought to reflect the underlying processes that created the correlation among the variables. Interpreting the results of these methods requires a good understanding of the physical meaning of the problem.

PCA

Principal component analysis (PCA) is a multivariate technique for examining relationships among several quantitative variables by forming new variables that are linear composites of the original variables.
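A minimal Python sketch of the correlation formula, together with PCA for the simplest case of two variables. It assumes population standard deviations (divisor n, matching the 1/n in the formula) and standardised variables for the PCA; for two variables the eigenvectors of the correlation matrix have a closed form, so no linear-algebra library is needed. The data pairs and function names are hypothetical, for illustration only.

```python
import math
from statistics import fmean, pstdev


def correlation(x, y):
    """rho = (1/n) * sum((x_i - m_x) * (y_i - m_y)) / (sigma_x * sigma_y).

    sigma_x and sigma_y are population standard deviations (divisor n),
    consistent with the 1/n factor in the formula.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2 values)")
    m_x, m_y = fmean(x), fmean(y)
    s_x, s_y = pstdev(x), pstdev(y)
    if s_x == 0 or s_y == 0:
        raise ValueError("rho is undefined for a constant variable")
    cov = fmean([(xi - m_x) * (yi - m_y) for xi, yi in zip(x, y)])
    return cov / (s_x * s_y)


def pca_two_variables(x, y):
    """PCA of two standardised variables from their 2x2 correlation matrix.

    R = [[1, rho], [rho, 1]] has eigenvalues 1 + rho and 1 - rho with
    eigenvectors (1, 1)/sqrt(2) and (1, -1)/sqrt(2). Returns a list of
    (eigenvalue, component scores) pairs, largest eigenvalue first.
    """
    rho = correlation(x, y)
    m_x, m_y = fmean(x), fmean(y)
    s_x, s_y = pstdev(x), pstdev(y)
    zx = [(v - m_x) / s_x for v in x]  # standardised x
    zy = [(v - m_y) / s_y for v in y]  # standardised y
    # Each component is a linear composite of the standardised variables.
    pc_sum = [(a + b) / math.sqrt(2) for a, b in zip(zx, zy)]
    pc_diff = [(a - b) / math.sqrt(2) for a, b in zip(zx, zy)]
    components = [(1 + rho, pc_sum), (1 - rho, pc_diff)]
    components.sort(key=lambda c: c[0], reverse=True)  # largest variance first
    return components


# Hypothetical paired measurements, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
print(correlation(x, y))
for eigenvalue, scores in pca_two_variables(x, y):
    print(eigenvalue, [round(s, 3) for s in scores])
```

The sketch also shows two properties of PCA: two variables yield two components, and the component scores are uncorrelated, with variances equal to the eigenvalues. With more variables one would use a general eigen-decomposition instead of the closed form.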
The maximum number of new variables that can be formed equals the number of original variables, and the new variables are uncorrelated with one another. The procedure is therefore used when one is interested in summarising data and detecting linear relationships. In other words, PCA seeks the minimum number of variables that contain the maximum amount of information and determines which variables are strongly interrelated.

FA

Factor analysis (FA) is a generic name for a class of multivariate statistical methods whose primary purpose is data reduction and summarisation. Broadly speaking, it analyses the interrelationships among a large number of variables and then explains these variables in terms of their common underlying dimensions (factors). The general purpose of factor-analytic techniques is to condense (summarise) the information contained in a number of original variables into a smaller set of new composite dimensions (factors) with a minimum loss of information.

3. Summary statistics exercise

A frequency table records how often observed values fall within a certain interval or class. Table 1 gives lead (Pb) grades measured from 1 m drill-core samples.

Required:

1. Draw histograms.
2. Calculate the frequency table.
3. Draw the frequency histogram.
4. Draw the inverse cumulative histogram of Pb grades.
5. Determine the minimum, maximum, range, mean, median, mode, variance and standard deviation.
Table 1: Pb grades from 1 m drill-core samples

8.72 0.44 2.16 0.64 3.04 6.28 9.76 1.64 1.00 4.12 4.50 1.96 4.84 0.84 0.80 0.90 7.12 7.70 1.20 2.16 0.72 6.30 8.80 1.48 1.10 0.56 0.72 1.40 7.90 0.96 0.44 6.92 1.44 5.96 2.00 1.68 1.30 0.76 8.36 1.32 0.56 7.39 0.56 8.70 7.80 0.60 1.32 1.80 9.80 3.00 2.60 5.20

Answer:

| Pb grade range | Frequency | Cumulative % |
|---|---|---|
| 0-0.5 | 2 | 3.85 |
| 0.5-1.0 | 13 | 28.85 |
| 1.0-1.5 | 8 | 44.23 |
| 1.5-2.0 | 5 | 53.85 |
| 2.0-2.5 | 2 | 57.69 |
| 2.5-3.0 | 2 | 61.54 |
| 3.0-3.5 | 1 | 63.46 |
| 3.5-4.0 | 0 | 63.46 |
| 4.0-4.5 | 2 | 67.31 |
| 4.5-5.0 | 1 | 69.23 |
| 5.0-5.5 | 1 | 71.15 |
| 5.5-6.0 | 1 | 73.08 |
| 6.0-6.5 | 2 | 76.92 |
| 6.5-7.0 | 1 | 78.85 |
| 7.0-7.5 | 2 | 82.69 |
| 7.5-8.0 | 3 | 88.46 |
| 8.0-8.5 | 1 | 90.38 |
| 8.5-9.0 | 3 | 96.15 |
| 9.0-9.5 | 0 | 96.15 |
| 9.5-10.0 | 2 | 100.00 |

Summary statistics

| Statistic | Value |
|---|---|
| Number of observations | 52 |
| Mean | 3.4475 |
| Median | 1.88 |
| Mode | 0.56 |
| Standard deviation | 3.07794 |
| Sample variance | 9.47372 |
| Range | 9.36 |
| Minimum | 0.44 |
| Maximum | 9.8 |
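The frequency table and summary statistics can be reproduced with a short Python sketch using only the standard library. It assumes 0.5-wide classes that include their upper bound (so 1.00 is counted in 0.5-1.0, which reproduces the frequencies in the answer), sample (n - 1) variance and standard deviation, and positive grades. It also assumes the inverse cumulative histogram plots, for each class, the percentage of samples whose grade exceeds the class's lower bound. The function names are hypothetical; the rows can be passed to any plotting tool to draw the histograms.

```python
import math
from statistics import fmean, median, mode, stdev, variance

# Pb grades of the 52 drill-core samples in Table 1.
PB_GRADES = [
    8.72, 0.44, 2.16, 0.64, 3.04, 6.28, 9.76, 1.64, 1.00, 4.12, 4.50, 1.96, 4.84,
    0.84, 0.80, 0.90, 7.12, 7.70, 1.20, 2.16, 0.72, 6.30, 8.80, 1.48, 1.10, 0.56,
    0.72, 1.40, 7.90, 0.96, 0.44, 6.92, 1.44, 5.96, 2.00, 1.68, 1.30, 0.76, 8.36,
    1.32, 0.56, 7.39, 0.56, 8.70, 7.80, 0.60, 1.32, 1.80, 9.80, 3.00, 2.60, 5.20,
]


def frequency_table(values, width=0.5):
    """Return (lower, upper, count, cumulative %, % above lower) per class.

    Classes are (lower, upper]: a value equal to an upper bound is counted
    in the class ending at that bound. Dividing by 0.5 is exact in binary
    floating point, so boundary values are classified reliably.
    """
    n = len(values)
    n_classes = math.ceil(max(values) / width)
    counts = [0] * n_classes
    for v in values:
        k = max(math.ceil(v / width) - 1, 0)  # index of the (lower, upper] class
        counts[k] += 1
    rows, running = [], 0
    for k, c in enumerate(counts):
        above = 100 * (n - running) / n  # inverse cumulative: % of samples > lower bound
        running += c
        rows.append((k * width, (k + 1) * width, c, 100 * running / n, above))
    return rows


def summary_statistics(values):
    return {
        "n": len(values),
        "mean": fmean(values),
        "median": median(values),
        "mode": mode(values),
        "std": stdev(values),          # sample standard deviation (divisor n - 1)
        "variance": variance(values),  # sample variance (divisor n - 1)
        "range": max(values) - min(values),
        "min": min(values),
        "max": max(values),
    }


for lo, hi, count, cum, above in frequency_table(PB_GRADES):
    print(f"{lo:4.1f}-{hi:4.1f}  {count:3d}  {cum:6.2f}  {above:6.2f}")
print(summary_statistics(PB_GRADES))
```

Because the mean (3.45) is well above the median (1.88), the sketch's output is also consistent with the strong positive skewness visible in the frequency table.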