Cisco ASR9000 Enterprise L2VPN for Metro-Ethernet, DC-WAN, WAN-Core, and Government and Public Networks Implementation Guide

January 16, 2015

Building Architectures to Solve Business Problems


Service Provider Segment

© 2015 Cisco Systems, Inc. All rights reserved.

CONTENTS

Preface

CHAPTER 1 Implementation Overview

CHAPTER 2 Enterprise L2VPN Transport Design

Small Scale Network Design and Implementation

Provider Edge and Provider Transport Configuration

Fast Failure Detection Using Bidirectional Forwarding Detection

Fast Convergence Using Remote Loop Free Alternate Fast Reroute

Provider Edge and Provider Routers Transport Configurations

Provider Edge Router Transport Configuration

Provider Router Transport Configuration

Core Network Quality of Service (QoS) Operation and Implementation

Provider Edge and Provider Routers Core QoS Configuration

Large Scale Network Design and Implementation

Using Core Network Hierarchy to Improve Scaling

Large Scale Hierarchical Core and Aggregation Networks with Hierarchy

Fast Convergence Using BGP Prefix Independent Convergence

Route Reflector Operation and Configuration

Route Reflector Configuration

CHAPTER 3 Enterprise L2VPN Services Design

Pseudo-wire

Virtual Private LAN Service (VPLS)

Virtual Forwarding Instance (VFI)

Provider Backbone Bridging Ethernet VPN (PBB-EVPN)

Backbone Edge Bridge (BEB)

Backbone Core Bridge (BCB)

Ethernet VPN Instance (EVI)

E-Line (EPL & EVPL)

Ethernet Private LAN (EP-LAN) and Ethernet Virtual Private LAN (EVP-LAN)

E-TREE (EP-TREE/ EVP-TREE)

CHAPTER 4 Provider Edge-Customer Edge Design Options

Inter-Chassis Communication Protocol

Ethernet Access

Hub and Spoke Using MC-LAG Active/Active

G.8032 Ring Access

nV Access

nV Satellite Simple Rings

nV Satellite L2 Fabric

nV Cluster

MPLS Access Using Pseudo-wire Head-end (PWHE)

CHAPTER 5 Provider Edge User Network Interface

QoS Implementation with MPLS access

QoS Implementation with Ethernet Hub and Spoke Access

QoS Implementation with G.8032 Access

QoS Implementation with Network Virtualization Access

CHAPTER 6 Virtual Private LAN Service (VPLS) Label-Switched Multicast (LSM)

Preface

The Enterprise Layer 2 Virtual Private Network (L2VPN) architecture enables a single physical network to support multiple virtual L2 networks. From the end-user perspective, each virtual network appears to be a dedicated L2 network that has its own Quality of Service (QoS) and access policies.

This functionality supports numerous applications, including:

• Meeting requirements to separate departments within an organization.

• Sharing workload and resources, and supporting disaster recovery, by interconnecting two or more Data Centers of an enterprise.

• Extending L2 connectivity between enterprise branches and Data Centers at different locations.

• Realizing economic benefits by collapsing multiple existing networks onto one physical infrastructure, while maintaining L2 isolation and policy implementations on the different networks.

• Maintaining interconnectivity among multiple campuses and access to external networks such as the Internet and Internet2.

For each of these applications where a separate dedicated network is required, a virtual L2 network offers the following key benefits over a non-virtualized infrastructure or separate physical networks:

• Reduced cost. Instead of using expensive dedicated WAN links, a single network supports multiple user groups with virtual networks, which enables greater statistical multiplexing and bandwidth services with higher utilization.

• Simpler management. A single network enables simpler management and operation of Ethernet Operations, Administration, and Maintenance (EOAM) protocols.

• Built-in security. Security between virtual networks is built in, without the need for complex Access Control Lists (ACLs) to restrict access for each user group.

• Improved availability. Consolidating network resources into a single, higher-scale virtualized infrastructure makes improved high availability feasible, including clustering of devices and multi-homing.

Authors

• Chris Lewis

• Saurabh Chopra

• Javed Asghar


CHAPTER 1

Implementation Overview

An end-to-end enterprise virtual network infrastructure requires the following primary components:

• Layer 2 (L2) instances on the edge router devices that bind the interface toward an enterprise branch or campus router to the L2 Virtual Private Network (L2VPN).

• Multiprotocol Label Switching (MPLS) for label-based forwarding in the network core so that forwarding does not rely on L2 addresses in the virtual network.

Table 1-1 lists terminology concerned with the MPLS L2VPN architecture.

Table 1-1 Terms used in MPLS L2VPN Architecture

| Term | Explanation |
|---|---|
| Ethernet Virtual Connection (EVC) | This is the logical representation of an Ethernet service, defined as an association between two or more User Network Interfaces (UNIs) that identifies a point-to-point or multipoint-to-multipoint path within the core network. |
| Ethernet Flow Point (EFP) | An Ethernet service endpoint. An EFP classifies frames from the same physical port to one of the multiple service instances associated with that port, based on user-defined criteria. |
| Label Distribution Protocol (LDP) | This protocol is used on each link in the MPLS core network to distribute labels associated with prefixes; labels are locally significant to each link. |
| Provider Router | This type of router, also called a Label Switching Router (LSR), runs an Interior Gateway Protocol (IGP) and Label Distribution Protocol (LDP). |
| Provider Edge Router | This type of router, also called an edge router, imposes and removes MPLS labels and runs Interior Gateway Protocol (IGP), LDP, L2VPN instances and Multiprotocol Border Gateway Protocol (MP-BGP). |
| Customer Edge Router | This type of router is the demarcation device in a provider-managed VPN service. It is possible to connect a LAN to the provider edge router directly. If multiple networks exist at a customer location, a customer edge router simplifies the task of connecting the networks to L2VPN. |

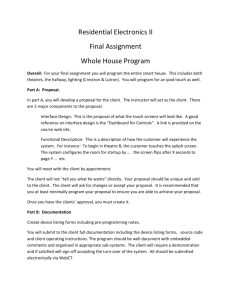

Figure 1-1 summarizes the three most common options used to virtualize Enterprise L2 WANs.

Figure 1-1 Transport Options for L2 WAN Virtualization

(Figure panels: 1, Self Deployed IP/MPLS Backbone, with CE routers at Sites 1-3 and PE and P routers in a customer-deployed IP and/or MPLS backbone; 2, SP-managed “Ethernet” Service, with customer-managed backbones at each site joined through a provider Ethernet service; 3, SP-managed “IP VPN” Service, with customer-managed backbones at each site joined through a provider MPLS VPN service and EVCs.)

This guide focuses on Option 1 shown in Figure 1-1, the enterprise owned-and-operated MPLS L2VPN model.

Figure 1-2 shows how the components combine to create an MPLS L2VPN service and support multiple L2VPNs on the physical infrastructure. In Figure 1-2, a provider router connects two provider edge routers. The packet flow is from left to right.

Figure 1-2 Major MPLS L2VPN Components and Packet Flow

(Figure labels: PE and P routers, EVC, EFP. Layer 2 VPN Packet Format: 4 Byte IGP Label, 4 Byte VC Label, Original Packet.)

• The provider edge router on the left serves three groups, each using its own virtual network. Each provider edge router has three L2VPN instances (red, green, and blue), and each L2 instance is for the exclusive use of one group using a virtual infrastructure.

• When a packet arrives on the provider edge router on the left, the router imposes two labels on the packet. BGP or LDP assigns the inner (VC) label, and its value is constant as the packet traverses the network. The inner label value identifies the L2VPN instance on the egress provider edge so that the L2 frame can be forwarded to the corresponding destination interface. LDP assigns the outer (IGP) label, and its value changes as the packet traverses the network to the destination provider edge router.
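The two-label packet format can be sketched in Python. This is a minimal illustration, assuming the standard 4-byte label stack entry layout from RFC 3032 (20-bit label, 3-bit EXP, 1-bit bottom-of-stack flag, 8-bit TTL). The function names and the label values 24001 and 16005 are hypothetical and do not come from this guide.

```python
import struct


def encode_label_entry(label, exp, bottom_of_stack, ttl):
    """Pack one 4-byte MPLS label stack entry (RFC 3032 layout)."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= exp < 8:
        raise ValueError("EXP must fit in 3 bits")
    if not 0 <= ttl < 256:
        raise ValueError("TTL must fit in 8 bits")
    word = (label << 12) | (exp << 9) | (int(bottom_of_stack) << 8) | ttl
    return struct.pack("!I", word)


def build_l2vpn_packet(igp_label, vc_label, exp, frame, ttl=255):
    """Prepend the outer (IGP) and inner (VC) labels to an L2 frame."""
    outer = encode_label_entry(igp_label, exp, False, ttl)
    # The inner (VC) label is the last entry, so it carries the bottom-of-stack flag.
    inner = encode_label_entry(vc_label, exp, True, ttl)
    return outer + inner + frame


# Hypothetical label values, for illustration only.
packet = build_l2vpn_packet(igp_label=24001, vc_label=16005, exp=5, frame=b"\x00" * 64)
print(len(packet))  # 64-byte frame plus two 4-byte labels
```

Only the outer entry changes hop by hop; the inner entry and the original frame are carried unchanged to the egress provider edge router.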


CHAPTER 2

Enterprise L2VPN Transport Design

This chapter focuses on the use of Cisco Aggregation Services Routers 9000 Series (ASR 9000) as provider and provider edge routers in the Multiprotocol Label Switching L2 Virtual Private Network (MPLS L2VPN) architecture. See Figure 1-2.

You can use this architecture design to implement network infrastructures that connect virtual networks among Data Centers, branch offices, and campuses over a variety of WAN connectivity options.

In this architecture design, Data Centers (whether branch or campus) are considered customer edge routers. The design requires that provider and provider edge routers be configured with the following connectivity control and data plane options:

• Ethernet hub-and-spoke or ring

• Network virtualization (nV)

• Pseudo-wire Head-end for MPLS access

Enterprise L2 virtualization requires a common MPLS transport infrastructure in order to implement multiple virtualized L2 networks. This MPLS transport must be resilient and equipped with fast convergence and failure detection mechanisms. The architecture design also requires that the MPLS transport scale for future expansion. Two options for incorporating provider and provider edge routers into the MPLS L2VPN transport infrastructure are:

• A flat Label Distribution Protocol (LDP) domain, which is appropriate for smaller MPLS L2VPN deployments (700-1000 devices).

• A hierarchical design that uses RFC 3107 labeled Border Gateway Protocol (BGP) to segment provider and provider edge domains into separate Interior Gateway Protocol (IGP) domains, which supports scaling the infrastructure beyond 50,000 devices.

This chapter examines topics common to small and large network implementations as they pertain to

small network design. It includes topics on additional technologies needed to enable small networks to

support large numbers of users.

Small Scale Network Design and Implementation

Figure 2-1 shows the small network deployment topology.

Figure 2-1 Small Deployment Topology

(Figure labels: Data Center, Pre-Aggregation Nodes, Core Nodes, Core and Aggregation IP/MPLS Domain, nV, Ethernet, Campus/Branch.)

You can implement a domain that includes a few hundred provider and provider edge routers using single IGP and LDP instances. In Figure 2-1, the Data Center is on the left and the network extends across the WAN to the branch and campus locations.

There are various components involved in a small network design to achieve end-to-end MPLS transport

and instantiate L2 services seamlessly. These components are described in the following sections.

Provider Edge and Provider Transport Configuration

Transport networks, composed of provider and provider edge routers, carry traffic from multiple L2VPNs at one location to another location. Transport networks require reachability and label-based forwarding across the transport domain, along with fast failure detection and convergence. Bidirectional Forwarding Detection (BFD) is used for fast failure detection. Fast convergence uses Remote Loop Free Alternate Fast Reroute (rLFA FRR). These methods are described in the sections that follow.

Transport implementation requires provider and provider edge routers configured to use IGP for

reachability. These devices also use LDP to exchange labels for prefixes advertised and learned from

IGP. The devices maintain a Label Forwarding Information Base (LFIB) to make forwarding decisions.

When sending L2 traffic from a branch or campus router to a remote location, provider edge routers

encapsulate traffic in MPLS headers, using a label corresponding to the remote provider edge router.

Intermediate devices examine the top label on the MPLS header, perform label swapping, and use LFIB

to forward traffic toward the remote provider edge router. Provider routers forward packets using only

labels. This enables the establishment and use of label-switched paths (LSPs) when a provider edge

router forwards VPN traffic to another location.
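The label-swapping behavior described above can be sketched as a lookup on the top label only. This is a simplified illustration, not the router's data structures: the router names, label values, and table layout are hypothetical, and the pop at the last provider router assumes penultimate-hop popping, which this section does not discuss.

```python
# Hypothetical per-router LFIB: incoming top label -> (action, outgoing label, next hop).
LFIB = {
    "P1": {24001: ("swap", 24017, "P2")},
    # Assumption: the last provider router pops the transport label (penultimate-hop popping).
    "P2": {24017: ("pop", None, "PE2")},
}


def forward(router, label_stack):
    """Apply one LFIB lookup on the top label and return (next_hop, new_stack)."""
    top, rest = label_stack[0], label_stack[1:]
    action, out_label, next_hop = LFIB[router][top]
    if action == "swap":
        return next_hop, [out_label] + rest
    if action == "pop":
        return next_hop, rest
    raise ValueError(f"unknown action {action!r}")


def trace(first_hop, label_stack):
    """Follow the label-switched path until a router without an LFIB entry is reached."""
    hops = []
    router = first_hop
    while router in LFIB:
        router, label_stack = forward(router, label_stack)
        hops.append((router, list(label_stack)))
    return hops


# The ingress PE pushes [outer transport label, inner VC label]; values are illustrative.
path = trace("P1", [24001, 16005])
for hop, stack in path:
    print(hop, stack)
```

The inner label (16005 here) is never examined by the provider routers, which is why they need no knowledge of the L2VPN instances.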

Fast Failure Detection Using Bidirectional Forwarding Detection

Link failure detection in the core normally occurs through loss of signal on the interface. This is not sufficient for BGP because BGP neighbors are typically not on the same segment. A link failure (signal loss) at one BGP peer can remain undetected by the other BGP peer. Absent other failure detection methods, convergence occurs only when a BGP timer expires, which is too slow. BFD is a lightweight, fast "hello" protocol that speeds remote link failure detection.

Provider edge and provider routers use BFD as a failure detection mechanism on the core interfaces; BFD informs the IGP about link or node failure within milliseconds. BFD peers send BFD control packets to each other at negotiated intervals on the interfaces enabled with BFD. If a BFD peer does not receive a control packet before the configured dead timer (also measured in milliseconds) expires, the BFD session is torn down and the IGP is rapidly informed about the failure. The IGP immediately tears down the adjacency with the neighbor and switches traffic to an alternate path. This enables failure detection within milliseconds.
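As a rough guide to the timing, the dead timer can be estimated as the control packet interval multiplied by the multiplier. The sketch below assumes both peers use the same values (in BFD the negotiated interval and the remote multiplier determine the actual figure); the function name is hypothetical, and the 15 ms interval and multiplier of 3 are the values used later in this chapter.

```python
def bfd_detection_time_ms(interval_ms, multiplier):
    """Estimate the BFD detection time, assuming symmetric timers on both peers."""
    if interval_ms <= 0 or multiplier <= 0:
        raise ValueError("interval and multiplier must be positive")
    return interval_ms * multiplier


# 15 ms interval with a multiplier of 3, as configured in this chapter.
detection_ms = bfd_detection_time_ms(15, 3)
print(detection_ms)
```

With these values a failure is declared after roughly three missed control packets, before any IGP or BGP timer would react.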


Fast Convergence Using Remote Loop Free Alternate Fast Reroute

After BFD detects a failure, the next step is to "fast converge" the network to an alternate path. For IGP

prefixes, Loop Free Alternate (LFA) enables fast convergence. The type of LFA depends on the network

topology. The first type, called simply LFA, is suitable for hub-and-spoke topologies. The second type

is called remote LFA (rLFA) and is suitable for ring topologies.

• Loop Free Alternate Fast Reroute (LFA FRR) calculates the backup path for each prefix in the IGP routing table. If a failure is detected, the router immediately switches to the appropriate backup path in about 50 milliseconds. Only loop-free paths are candidates for backup paths.

• rLFA FRR works differently because it is designed for cases, such as rings, where an alternate physical path exists but no directly connected neighbor offers a loop-free alternate. In the rLFA case, automatic LDP tunnels are set up to provide LFAs for all network nodes.

Without LFA or rLFA FRR, a router calculates the alternate path only after a failure is detected, which results in delayed convergence. With LFA or rLFA FRR, the alternate paths are calculated in advance to enable faster convergence. Provider and provider edge devices have alternate paths calculated for all prefixes in the IGP table and can use rLFA FRR to quickly reroute if a primary path fails.
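Why a ring needs rLFA can be illustrated with the basic loop-free condition from RFC 5286: a neighbor N of router S is a loop-free alternate for destination D when dist(N, D) < dist(N, S) + dist(S, D). The following sketch is an illustration under that assumption, not the router's algorithm; the topologies, node names, and uniform link cost of 10 are hypothetical.

```python
import heapq


def shortest_distances(graph, source):
    """Dijkstra shortest-path distances from source over a dict-of-dicts graph."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue
        for neighbor, cost in graph[node].items():
            nd = d + cost
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                heapq.heappush(heap, (nd, neighbor))
    return dist


def loop_free_alternates(graph, s, d):
    """Return neighbors of s that satisfy the basic LFA inequality for destination d."""
    dist_s = shortest_distances(graph, s)
    alternates = []
    for n, cost in graph[s].items():
        dist_n = shortest_distances(graph, n)
        if cost + dist_n[d] == dist_s[d]:
            continue  # n is a primary next hop, not a backup
        if dist_n[d] < dist_n[s] + dist_s[d]:
            alternates.append(n)
    return alternates


# Hypothetical triangle (hub-and-spoke style): the third node is a usable backup.
triangle = {
    "S": {"N": 10, "D": 10},
    "N": {"S": 10, "D": 10},
    "D": {"S": 10, "N": 10},
}
# Hypothetical five-node ring: A's other neighbor E would send traffic for B back through A.
ring = {
    "A": {"B": 10, "E": 10},
    "B": {"A": 10, "C": 10},
    "C": {"B": 10, "D": 10},
    "D": {"C": 10, "E": 10},
    "E": {"D": 10, "A": 10},
}

print(loop_free_alternates(triangle, "S", "D"))
print(loop_free_alternates(ring, "A", "B"))
```

In the ring, no directly connected neighbor qualifies for that destination, which is the situation where a tunnel to a more distant node provides the backup path.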

Provider Edge and Provider Routers Transport Configurations

This section describes how to configure provider edge and provider router transport to support fast

failure detection and fast convergence.

Provider Edge Router Transport Configuration

Provider edge router configuration includes enabling an IGP, either Intermediate System to Intermediate System (IS-IS) or Open Shortest Path First (OSPF), to exchange core and aggregation reachability, and enabling LDP to exchange labels on the core-facing interfaces. A loopback interface is also advertised in the IGP because the L2 services are instantiated using Loopback0, as mentioned in Chapter 3, “Enterprise L2VPN Services Design.” Using the loopback address improves reliability; the loopback interface is always up when the router is up, unlike physical interfaces that can have link failures.

Configure BFD on core-facing interfaces using a 15-millisecond "hello" interval and a multiplier of three to enable fast failure detection in the transport network. rLFA FRR is used under IS-IS Level 2 for fast convergence if a transport network failure occurs. BGP Prefix Independent Convergence (PIC) is configured for fast convergence of BGP prefixes if a remote provider edge router becomes unreachable.

Table 2-1 details the provider edge router transport configuration.

Table 2-1 Provider Edge Router Transport Configuration

| Provider Edge Router Transport Configuration | Description |
|---|---|
| interface Loopback0 | Loopback Interface for BGP VPNv4 neighbors. |
| ipv4 address 100.111.11.1 255.255.255.255 | |
| ipv6 address 2001:100:111:11::1/128 | |
| ! | |
| interface TenGigE0/0/0/0 | Core interface. |
| ipv4 address 10.11.1.0 255.255.255.254 | |
| ! | |
| router isis core | Enters router IS-IS configuration. |
| net 49.0100.1001.1101.1001.00 | Assigns network address to the IS-IS process. |
| address-family ipv4 unicast | Enters IPv4 address-family for IS-IS. |
| metric-style wide | Metric style wide generates new-style type-length-value (TLV) with wider metric fields for IPv4. |
| ! | |
| address-family ipv6 unicast | Enters IPv6 address-family for IS-IS. |
| metric-style wide | Metric style wide generates new-style TLV with wider metric fields for IPv6. |
| ! | |
| interface Loopback0 | Configures IS-IS for Loopback interface. |
| passive | Makes loopback passive to avoid sending unnecessary "hello" packets on it. |
| address-family ipv4 unicast | Enters IPv4 address-family for loopback. |
| ! | |
| address-family ipv6 unicast | Enters IPv6 address-family for loopback. |
| ! | |
| ! | |
| interface TenGigE0/0/0/0 | Configures IS-IS for the Ten Gigabit Ethernet (TenGigE0/0/0/0) interface. |
| circuit-type level-2-only | Configures IS-IS circuit-type on the interface. |
| bfd minimum-interval 15 | Configures minimum interval between sending BFD "hello" packets to the neighbor. |
| bfd multiplier 3 | Configures BFD multiplier. |
| bfd fast-detect ipv4 | Enables BFD to detect failures in the path between adjacent forwarding engines. |
| address-family ipv4 unicast | Enters the IPv4 address-family for Ten Gigabit Ethernet (TenGigE) interface. |
| metric 10 | Configures IS-IS metric for interface. |
| fast-reroute per-prefix level 2 | Enables per prefix FRR for Level-2 prefixes. |
| fast-reroute per-prefix remote-lfa tunnel mpls-ldp | Configures an FRR path that redirects traffic to a remote LFA tunnel. |
| mpls ldp sync | Enables MPLS LDP sync to ensure LDP comes up on link before link is used for forwarding to avoid packet loss. |
| ! | |
| ! | |
| mpls ldp | Enters MPLS LDP configuration mode. |
| log | |
| graceful-restart | |
| ! | |
| router-id 100.111.11.1 | Configures router-id for LDP. |
| ! | |
| interface TenGigE0/0/0/0 | Enables LDP on TenGigE0/0/0/0. |
| address-family ipv4 | |
| ! | |

Provider Router Transport Configuration

The provider router transport configuration includes enabling an IGP (IS-IS or OSPF) to exchange core and aggregation reachability. It also includes enabling LDP to exchange labels on core-facing interfaces. Provider routers do not need to know VPN addresses because they interpret only core and aggregation prefixes in the transport network. Provider routers swap labels based on the top label of the packet, which corresponds to the remote provider edge router, and use the label forwarding information base (LFIB) to complete the provider edge-to-provider edge label-switched path (LSP). rLFA FRR is used under IS-IS Level 2 for fast convergence if a transport network failure occurs.

Table 2-2 details the provider router transport configuration.

Table 2-2 Provider Router Transport Configuration

| Provider Router Transport Configuration | Description |
|---|---|
| interface TenGigE0/0/0/0 | Core interface connecting to provider edge. |
| ipv4 address 10.11.1.1 255.255.255.254 | |
| ! | |
| interface TenGigE0/0/0/1 | Core interface connecting to core MPLS network. |
| ipv4 address 10.2.1.4 255.255.255.254 | |
| ! | |
| router isis core | Enters router IS-IS configuration. |
| net 49.0100.1001.1100.2001.00 | Assigns network address to the IS-IS process. |
| address-family ipv4 unicast | Enters IPv4 address-family for IS-IS. |
| metric-style wide | Metric style wide generates new-style TLV with wider metric fields for IPv4. |
| ! | |
| interface Loopback0 | Configures IS-IS for loopback interface. |
| passive | Makes loopback passive to avoid sending unnecessary "hello" packets on it. |
| address-family ipv4 unicast | Enters IPv4 address-family for loopback. |
| ! | |
| ! | |
| interface TenGigE0/0/0/0 | Configures IS-IS for TenGigE0/0/0/0 interface. |
| circuit-type level-2-only | Configures IS-IS circuit-type on the interface. |
| bfd minimum-interval 15 | Configures minimum interval between sending BFD "hello" packets to the neighbor. |
| bfd multiplier 3 | Configures BFD multiplier. |
| bfd fast-detect ipv4 | Enables BFD to detect failures in the path between adjacent forwarding engines. |
| address-family ipv4 unicast | Enters the IPv4 address-family for TenGigE interface. |
| metric 10 | Configures IS-IS metric for interface. |
| mpls ldp sync | Enables MPLS LDP sync to ensure LDP comes up on link before link is used for forwarding to avoid packet loss. |
| ! | |
| ! | |
| interface TenGigE0/0/0/1 | Configures IS-IS for TenGigE0/0/0/1 interface. |
| circuit-type level-2-only | Configures IS-IS circuit-type on the interface. |
| bfd minimum-interval 15 | Configures minimum interval between sending BFD "hello" packets to the neighbor. |
| bfd multiplier 3 | Configures BFD multiplier. |
| bfd fast-detect ipv4 | Enables BFD to detect failures in the path between adjacent forwarding engines. |
| address-family ipv4 unicast | Enters the IPv4 address-family for TenGigE interface. |
| metric 10 | Configures IS-IS metric for interface. |
| fast-reroute per-prefix level 2 | Enables per prefix FRR for Level-2 prefixes. |
| fast-reroute per-prefix remote-lfa tunnel mpls-ldp | Configures an FRR path that redirects traffic to a remote LFA tunnel. |
| mpls ldp sync | Enables MPLS LDP sync to ensure LDP comes up on link before link is used for forwarding to avoid packet loss. |
| ! | |
| ! | |
| mpls ldp | Enters MPLS LDP configuration mode. |
| log | |
| neighbor | |
| graceful-restart | |
| ! | |
| router-id 100.111.2.1 | Configures router-id for LDP. |
| interface TenGigE0/0/0/0 | Enables LDP on TenGigE0/0/0/0. |
| ! | |
| interface TenGigE0/0/0/1 | Enables LDP on TenGigE0/0/0/1. |
| ! | |
| ! | |

Core Network Quality of Service (QoS) Operation and Implementation

Virtual enterprise networks consist of traffic types that include voice, video, critical applications traffic,

and end-user web traffic. This traffic requires different priorities and treatments based on their

characteristics and their business significance. In the MPLS core network, QoS ensures proper treatment

when transporting the virtual network's traffic. This section describes this configuration.

As discussed in previous sections, the MPLS header is imposed on enterprise virtual network traffic at ingress to the MPLS network on provider edge routers. When the labeled traffic is transported in the core network, the QoS implementation uses the 3-bit MPLS EXP field (values 0-7) in the MPLS header for proper QoS treatment. The Differentiated Services (DiffServ) Per-Hop Behavior (PHB), which defines packet-forwarding properties associated with different traffic classes, is divided into the following:

• Expedited Forwarding: used for traffic requiring low loss, low latency, low jitter, and assured bandwidth

• Assured Forwarding: allows four classes with certain buffer and bandwidth

• Best Effort: best-effort forwarding

This section describes the MPLS Uniform QoS model. This model maps the differentiated services code point (DSCP) marking of traffic received from the customer router on a provider edge to the corresponding MPLS experimental (EXP) bits. Table 2-3 shows the mapping used for different traffic classes to PHB, DSCP, and MPLS EXP.

Table 2-3 Traffic Class Mapping

| Traffic Class | PHB | DSCP | MPLS EXP |
|---|---|---|---|
| Network Management | AF | 56 | 7 |
| Network Control Protocols | AF | 48 | 6 |
| Enterprise Voice and Real-time | EF | 46 | 5 |
| Enterprise Video Distribution | AF | 32 | 4 |
| Enterprise Telepresence | AF | 24 | 3 |
| Enterprise Critical, In Contract | AF | 16 | 2 |
| Enterprise Critical, Out of Contract | AF | 8 | 1 |
| Enterprise Best Effort | BE | 0 | 0 |
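Every DSCP and MPLS EXP pair in Table 2-3 is consistent with taking the three most significant bits of the 6-bit DSCP value. The short Python check below illustrates that arithmetic; it is an observation about the table values, not a statement of how the router implements the marking, and the function and dictionary names are hypothetical.

```python
def dscp_to_exp(dscp):
    """Return the top three bits of a 6-bit DSCP value."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp >> 3


# (DSCP, MPLS EXP) pairs copied from Table 2-3.
TABLE_2_3 = {
    "Network Management": (56, 7),
    "Network Control Protocols": (48, 6),
    "Enterprise Voice and Real-time": (46, 5),
    "Enterprise Video Distribution": (32, 4),
    "Enterprise Telepresence": (24, 3),
    "Enterprise Critical, In Contract": (16, 2),
    "Enterprise Critical, Out of Contract": (8, 1),
    "Enterprise Best Effort": (0, 0),
}

for traffic_class, (dscp, exp) in TABLE_2_3.items():
    print(f"{traffic_class}: DSCP {dscp} -> EXP {dscp_to_exp(dscp)} (table says {exp})")
```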

The QoS configuration includes class-maps for the different traffic classes listed in Table 2-3, each matching the corresponding MPLS EXP value.

As outlined in Table 2-4, in the policy-map the class-map for real-time traffic, CMAP-RT-EXP, is configured with the highest priority, level 1. It is also policed to ensure low latency expedited forwarding. The rest of the classes are assigned their respective required bandwidth. Weighted random early detection (WRED) is used as a congestion-avoidance mechanism for the EXP 1 and EXP 2 traffic in the enterprise critical class, CMAP-EC-EXP. The policy-map is applied to the provider edge and provider router core interfaces in the egress direction across the MPLS network.
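The effect of giving EXP 1 lower WRED thresholds than EXP 2 can be sketched with the textbook RED drop curve: no drops below the minimum threshold, a linear ramp between the thresholds, and all packets dropped at or above the maximum. This is an illustration only. The linear ramp and the maximum probability of 1.0 are assumptions rather than documented platform behavior, the function name is hypothetical, and the thresholds (40/50 ms for EXP 1, 80/100 ms for EXP 2) are the values configured in Table 2-4.

```python
def wred_drop_probability(avg_queue, min_threshold, max_threshold, max_probability=1.0):
    """Textbook RED drop probability for a given average queue depth."""
    if min_threshold >= max_threshold:
        raise ValueError("min_threshold must be below max_threshold")
    if avg_queue < min_threshold:
        return 0.0
    if avg_queue >= max_threshold:
        return 1.0
    return max_probability * (avg_queue - min_threshold) / (max_threshold - min_threshold)


# Thresholds in milliseconds of queue depth, taken from Table 2-4.
THRESHOLDS_MS = {1: (40, 50), 2: (80, 100)}

for depth_ms in (30, 45, 90):
    for exp, (low, high) in THRESHOLDS_MS.items():
        p = wred_drop_probability(depth_ms, low, high)
        print(f"queue {depth_ms} ms, EXP {exp}: drop probability {p:.2f}")
```

At any queue depth between 40 ms and 80 ms, only out-of-contract (EXP 1) traffic is subject to early drops, which protects the in-contract (EXP 2) traffic in the same class.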

Provider Edge and Provider Routers Core QoS Configuration

Table 2-4 details the provider edge and provider routers core QoS configuration.

Table 2-4    Provider Edge and Provider Routers Core QoS Configuration

Configuration                               Explanation
class-map match-any CMAP-EC-EXP             Class-map for the enterprise critical traffic.
 match mpls experimental topmost 1 2        Matches MPLS experimental 1 or 2 in the topmost MPLS header.
 end-class-map
!
class-map match-any CMAP-ENT-Tele-EXP       Class-map for enterprise telepresence traffic.
 match mpls experimental topmost 3          Matches MPLS experimental 3 in the topmost MPLS header.
 end-class-map
!
class-map match-any CMAP-Video-EXP          Class-map for video traffic.
 match mpls experimental topmost 4          Matches MPLS experimental 4 in the topmost MPLS header.
 end-class-map
!
class-map match-any CMAP-RT-EXP             Class-map for real-time traffic.
 match mpls experimental topmost 5          Matches MPLS experimental 5 in the topmost MPLS header.
 end-class-map
!
class-map match-any CMAP-CTRL-EXP           Class-map for control traffic.
 match mpls experimental topmost 6          Matches MPLS experimental 6 in the topmost MPLS header.
 end-class-map
!
class-map match-any CMAP-NMgmt-EXP          Class-map for network management traffic.
 match mpls experimental topmost 7          Matches MPLS experimental 7 in the topmost MPLS header.
 end-class-map
!
!
policy-map PMAP-NNI-E                       Policy-map configuration for a 10G link.
 class CMAP-RT-EXP                          Matches the real-time class.
  priority level 1                          Defines top priority 1 for the class for low-latency queuing.
  police rate 1 gbps                        Polices the priority class.
  !
 !
 class CMAP-CTRL-EXP                        Assigns the desired bandwidth to the class.
  bandwidth 200 mbps
 !
 class CMAP-NMgmt-EXP
  bandwidth 500 mbps
 !
 class CMAP-Video-EXP
  bandwidth 2 gbps
 !
 class CMAP-EC-EXP
  bandwidth 1 gbps
  random-detect exp 2 80 ms 100 ms          Uses WRED for the enterprise critical class, for both EXP 1 and
  random-detect exp 1 40 ms 50 ms           EXP 2, for congestion avoidance. EXP 1 is dropped earlier.
 !
 class CMAP-ENT-Tele-EXP
  bandwidth 2 gbps
 !
 class class-default
 !
 end-policy-map
!
interface TenGigE0/0/0/0                    Core interface on provider or provider edge.
 service-policy output PMAP-NNI-E           Egress service policy on the interface.
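A quick way to sanity-check a policy-map like PMAP-NNI-E is to add up the rates it reserves and compare them with the link rate. The sketch below does this for the values shown in Table 2-4, assuming a 10 Gbps link (as stated in the table) and treating the policer rate of the priority class as its ceiling. The variable names are hypothetical, and how the unreserved capacity is shared among classes depends on the platform scheduler, which this sketch does not model.

```python
LINK_MBPS = 10_000  # 10G core link, per the policy-map explanation

# Rates from the policy-map, in Mbps.
RESERVED_MBPS = {
    "CMAP-RT-EXP (police rate)": 1_000,
    "CMAP-CTRL-EXP": 200,
    "CMAP-NMgmt-EXP": 500,
    "CMAP-Video-EXP": 2_000,
    "CMAP-EC-EXP": 1_000,
    "CMAP-ENT-Tele-EXP": 2_000,
}

total_reserved_mbps = sum(RESERVED_MBPS.values())
unreserved_mbps = LINK_MBPS - total_reserved_mbps

# The configured rates must not oversubscribe the link.
assert total_reserved_mbps <= LINK_MBPS
```

With these values, 6,700 Mbps is reserved or policed and 3,300 Mbps is left unreserved.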

Large Scale Network Design and Implementation

When an MPLS network includes more than 1000 devices, implementing a hierarchical network design

is recommended. In this guide, the hierarchical network design uses labeled BGP, as defined in

RFC 3107. Figure 2-2 shows a network with hierarchy.

Figure 2-2    Core Network with Hierarchy to Improve Scaling

[Figure: aggregation network IP/MPLS domains (aggregation nodes serving the data center, Ethernet/nV access, and campus/branch sites) connect through core nodes in the core network IP/MPLS domain. An LDP LSP runs within each domain, and an iBGP (eBGP) hierarchical LSP runs end to end.]

Using Core Network Hierarchy to Improve Scaling

The main challenges of a large network implementation result from network size. The routing and
forwarding tables in individual provider and provider edge devices grow with the number of network
nodes, and running all nodes in one IGP/LDP domain makes this worse. In an MPLS environment, unlike
in an all-IP environment, every service node needs a /32 network address as a node identifier. Because
the /32 addresses cannot be summarized, link-state databases grow linearly as devices are added to the
MPLS network.

The labeled BGP mechanism, defined in RFC 3107, can be used so that link-state databases in core
network devices do not have to learn the /32 addresses of all MPLS routers in the access and aggregation
domains. The mechanism effectively moves prefixes from the IGP link-state database into the BGP table.
Labeled BGP, implemented in the MPLS transport network, introduces hierarchy in the network to
provide better scalability and convergence. Labeled BGP ensures that each device receives only the
information it needs to provide end-to-end transport.


Large-scale MPLS transport networks used to transport virtual network traffic can be divided into two
IGP areas or levels. The core network is configured as Intermediate System to Intermediate System
(IS-IS) Level 2 (L2) or as the Open Shortest Path First (OSPF) backbone area. The aggregation network
is configured as IS-IS Level 1 (L1) or as an OSPF non-backbone area. Another option is to run different
IGP processes in the core and aggregation networks. No redistribution occurs between core and
aggregation IGP levels, areas, or processes, which reduces the size of the routers' routing and
forwarding tables in each domain and provides better scalability and faster convergence. Running an
IGP within each area enables intra-area reachability, and LDP is used to build intra-area LSPs.

Because route information is not redistributed between different IGP levels and areas, provider edge
devices need a mechanism to reach provider edge device loopbacks in other areas and levels and to send
VPN traffic. Labeled BGP enables inter-area reachability and establishes an end-to-end LSP between
provider edge routers. Devices that are connected to both aggregation and core domains are called Area
Border Routers (ABRs). ABRs run labeled interior BGP (iBGP) sessions with provider edge routers in
their local aggregation domain and serve as route reflectors for those provider edges. Provider edge
routers advertise their loopback addresses (used for L2VPN neighboring) and the corresponding labels
to the local route reflector ABRs using labeled iBGP. ABRs also run labeled iBGP sessions with a route
reflector device in the core domain, which reflects provider edge router loopback addresses and labels
learned from one ABR client to other ABR clients without changing the next-hop or other attributes.
ABRs learn provider edge router loopback addresses and labels from other aggregation domains and
advertise them to provider edge routers in their local aggregation domain.

ABRs use next-hop-self when advertising routes to provider edge routers in the local aggregation
domain and to route reflectors in the core domain. As a result, provider edge routers learn remote
provider edge loopback addresses and labels with the local ABR as the BGP next-hop, and ABRs learn
remote provider edge loopback addresses with the remote ABR as the BGP next-hop. Provider edge
routers use two transport labels when sending labeled VPN traffic to the MPLS cloud: one label for the
remote provider edge router and another label for its BGP next-hop (the local ABR). The top label, for
the BGP next-hop local ABR, is learned from the local IGP and LDP. The label below that, for the
remote provider edge router, is learned through labeled iBGP with the local ABR. Intermediate devices
across the different domains perform label swapping based on the top label in received MPLS packets.

This achieves an end-to-end hierarchical LSP without running the entire network in a single IGP/LDP
domain. Devices learn only the necessary information, such as prefixes in their local domain and
remote provider edge loopback addresses, which makes labeled BGP scalable for large networks.
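The label stack described above can be sketched in a few lines of Python. The sketch models only what the text states: the ingress provider edge pushes a VC label, a labeled-BGP label for the remote provider edge, and an LDP label toward the local ABR, and transit routers swap only the top label. The function names and all label values are hypothetical.

```python
from typing import List


def build_label_stack(ldp_label_to_local_abr: int,
                      bgp_label_for_remote_pe: int,
                      vc_label: int) -> List[int]:
    """Return the stack pushed by the ingress PE, top label first."""
    return [ldp_label_to_local_abr, bgp_label_for_remote_pe, vc_label]


def swap_top_label(stack: List[int], new_top: int) -> List[int]:
    """Model a transit router: swap the top label, leave the rest untouched."""
    return [new_top] + stack[1:]


# Hypothetical label values, chosen only for illustration.
stack = build_label_stack(ldp_label_to_local_abr=24001,
                          bgp_label_for_remote_pe=24100,
                          vc_label=16010)
after_transit = swap_top_label(stack, new_top=24002)
```

The transit swap leaves the BGP and VC labels unchanged, which is why core devices do not need routes to remote provider edge loopbacks.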

Figure 2-3    Core Network and Aggregation Large Scale Network with Hierarchy

[Figure: aggregation networks (IS-IS Level 1 or OSPF non-backbone area) attach to the core network (IS-IS Level 2 or OSPF backbone area) through ABRs that act as route reflectors with next-hop-self. The ABRs and the core RR exchange BGP IPv4+label routes. An LDP LSP runs within each domain, and an iBGP hierarchical LSP runs end to end between the data center, Ethernet/nV, and campus/branch sites. Label stacks shown: VC label, remote PE label, and local RR ABR label in the first aggregation domain and in the core; VC label and remote PE label in the final aggregation domain.]

Large Scale Hierarchical Core and Aggregation Networks with Hierarchy

To implement the ABR, provider edge, and core route reflector transport configuration for large-scale
MPLS VPNs, provider edge routers are configured in IS-IS Level-1 (or an OSPF non-backbone area).
ABR interfaces that face the aggregation domain are configured as IS-IS Level-1 (or OSPF non-backbone
area), and ABR interfaces that face the core domain are configured as IS-IS Level-2 (or OSPF backbone
area). Core route reflector interfaces remain in IS-IS Level-2 (or the OSPF backbone area). Each
provider edge is configured with a labeled iBGP session to its local ABR, with the ABR acting as route
reflector. The core route reflector is configured with labeled BGP peering to all ABRs. LDP is
configured in the same way as in the smaller network. The ABR is configured with next-hop-self toward
both its provider edge and core labeled BGP peers to achieve the hierarchical LSP. BFD is used on all
interfaces as a fast failure detection mechanism. BGP PIC is configured for fast convergence of IPv4
prefixes learned through labeled iBGP. rLFA FRR is configured under IS-IS to provide fast convergence
of IGP-learned prefixes.

ABR loopbacks are required in both the aggregation and core domains because they are used for labeled
BGP peering with provider edges in the local aggregation domain as well as with the route reflector in
the core domain. To achieve this, ABR loopbacks are kept in both IS-IS Level-1 and Level-2 (or in the
OSPF backbone area).

Fast Convergence Using BGP Prefix Independent Convergence

For BGP prefixes, fast convergence is achieved using BGP PIC, in which BGP calculates a primary best
path and an alternate best path and installs both in the routing table as the primary and backup paths.
This functionality is similar to rLFA FRR, which is described in the preceding section. If the BGP
next-hop remote provider edge becomes unreachable, BGP immediately switches to the pre-installed
alternate path instead of recalculating the path after the failure. If the BGP next-hop remote provider
edge is alive but a path to it fails, IGP rLFA FRR handles fast convergence to the alternate path,
and BGP updates the IGP next-hop for the remote provider edge.
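The idea of a pre-installed backup path can be sketched as follows. In this simplified Python model, several prefixes share one object that holds the primary and backup next hops, so a single switch on failure repairs all of them without any per-prefix recalculation. The class name, the next-hop names `ABR-1` and `ABR-2`, and the second prefix are hypothetical; the model is not a description of the router's internal data structures.

```python
class PathList:
    """Primary and backup BGP next hops shared by many prefixes."""

    def __init__(self, primary: str, backup: str) -> None:
        self.primary = primary
        self.backup = backup
        self.active = primary

    def next_hop_down(self, next_hop: str) -> None:
        """Switch to the pre-installed backup if the active next hop fails."""
        if next_hop == self.active:
            self.active = self.backup


# Two prefixes point at the same shared path list.
shared = PathList(primary="ABR-1", backup="ABR-2")
rib = {"100.111.7.8/32": shared, "100.111.7.9/32": shared}

# One failure event repairs every prefix that uses the shared path list.
shared.next_hop_down("ABR-1")
active_next_hops = {prefix: entry.active for prefix, entry in rib.items()}
```

Because the switch happens once on the shared object, the repair time in this model does not depend on how many prefixes use the path.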

Table 2-5 details the provider edge transport configuration.

Table 2-5    Provider Edge Transport Configuration

Configuration                                                 Description
router isis agg-acc                                           Enters router IS-IS configuration for the provider edge.
 net 49.0100.1001.1100.7008.00                                Defines the NET address.
 is-type level-1                                              Defines the is-type as Level-1 for the provider edge in the aggregation domain.
 address-family ipv4 unicast                                  Enters the IPv4 address-family for IS-IS.
  metric-style wide                                           Generates new-style TLVs with wider metric fields for IPv4.
 !
 interface Loopback0                                          Configures IS-IS for the loopback interface.
  passive                                                     Makes the loopback passive to avoid sending unnecessary hellos on it.
  point-to-point
  address-family ipv4 unicast                                 Enters the IPv4 address-family for the loopback.
  !
 interface TenGigE0/2/0/0                                     Configures IS-IS for the TenGigE0/2/0/0 interface.
  bfd minimum-interval 15                                     Configures the minimum interval between BFD hello packets sent to the neighbor.
  bfd multiplier 3                                            Configures the BFD multiplier.
  bfd fast-detect ipv4                                        Enables BFD to detect failures in the path between adjacent forwarding engines.
  point-to-point                                              Configures a point-to-point IS-IS interface.
  address-family ipv4 unicast                                 Enters the IPv4 address-family for the TenGigE interface.
   fast-reroute per-prefix level 2                            Enables per-prefix FRR for Level-2 prefixes.
   fast-reroute per-prefix remote-lfa tunnel mpls-ldp         Configures an FRR path that redirects traffic to a remote LFA tunnel.
   metric 10                                                  Configures the IS-IS metric for the interface.
   mpls ldp sync                                              Enables MPLS LDP sync so that LDP comes up on the link before the link is used for forwarding, which avoids packet loss.
  !
 !
router bgp 101                                                Enters router BGP configuration mode.
 !
 address-family ipv4 unicast                                  Enters the IPv4 address-family.
  additional-paths receive                                    Configures the capability to receive multiple paths for a prefix from capable peers.
  additional-paths send                                       Configures the capability to send multiple paths for a prefix to capable peers.
  additional-paths selection route-policy add-path-to-ibgp    Enables BGP PIC functionality with the appropriate route-policy to calculate backup paths.
 !
 session-group intra-as                                       Configures a session-group to define parameters that are address-family independent.
  remote-as 101                                               Specifies the remote-as as the AS number of the route reflector.
  update-source Loopback0                                     Specifies Loopback0 as the update-source for BGP communication.
 !
 neighbor-group ABR                                           Enters neighbor-group configuration mode.
  use session-group intra-as                                  Imports the address-family-independent session-group parameters.
  address-family ipv4 labeled-unicast                         Enables the labeled BGP address-family for the neighbor-group.
 !
 neighbor 100.111.3.1                                         Configures the ABR loopback as neighbor.
  use neighbor-group ABR                                      Inherits the neighbor-group ABR parameters.
 !
!
route-policy add-path-to-ibgp                                 Configures the route-policy used in BGP PIC.
 set path-selection backup 1 install                          Configured to install one backup path.
end-policy
mpls ldp                                                      Enters MPLS LDP configuration mode.
 log
  neighbor
  graceful-restart
 !
 router-id 100.111.7.8                                        Configures the router-id for LDP.
 interface TenGigE0/2/0/0                                     Enables LDP on TenGigE0/2/0/0.
 !
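The BFD settings used on the core-facing interface determine how quickly a failure is declared. As a rough sketch, and assuming that both ends use the same values and that the configured interval is in milliseconds, the detection time is the interval multiplied by the multiplier. The function name is hypothetical.

```python
def bfd_detection_time_ms(min_interval_ms: int, multiplier: int) -> int:
    """Approximate BFD detection time: missed-packet budget times the interval."""
    if min_interval_ms <= 0 or multiplier <= 0:
        raise ValueError("interval and multiplier must be positive")
    return min_interval_ms * multiplier


# Values from the interface configuration: bfd minimum-interval 15, bfd multiplier 3.
detection_ms = bfd_detection_time_ms(min_interval_ms=15, multiplier=3)
```

With the configured values this gives a detection time of about 45 ms under the stated assumptions.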

Table 2-6 details the ABR transport configuration.

Table 2-6    ABR Transport Configuration

Configuration                                                 Description
router isis agg-acc                                           Enters router IS-IS configuration for the ABR.
 net 49.0100.1001.1100.3001.00                                Defines the NET address.
 address-family ipv4 unicast                                  Enters the IPv4 address-family for IS-IS.
  metric-style wide                                           Generates new-style TLVs with wider metric fields for IPv4.
 !
 interface Loopback0                                          Configures IS-IS for the loopback interface.
  passive                                                     Makes the loopback passive to avoid sending unnecessary hellos on it.
  point-to-point
  address-family ipv4 unicast                                 Enters the IPv4 address-family for the loopback.
  !
 interface TenGigE0/2/0/0                                     Configures IS-IS for the TenGigE0/2/0/0 interface.
  circuit-type level-1                                        Configures the aggregation-facing interface as an IS-IS Level-1 interface.
  bfd minimum-interval 15                                     Configures the minimum interval between BFD hello packets sent to the neighbor.
  bfd multiplier 3                                            Configures the BFD multiplier.
  bfd fast-detect ipv4                                        Enables BFD to detect failures in the path between adjacent forwarding engines.
  point-to-point                                              Configures a point-to-point IS-IS interface.
  address-family ipv4 unicast
   fast-reroute per-prefix level 2                            Enables per-prefix FRR for Level-2 prefixes.
   fast-reroute per-prefix remote-lfa tunnel mpls-ldp         Configures an FRR path that redirects traffic to a remote LFA tunnel.
   metric 10                                                  Configures the IS-IS metric for the interface.
   mpls ldp sync                                              Enables MPLS LDP sync so that LDP comes up on the link before the link is used for forwarding, which avoids packet loss.
  !
 !
 interface TenGigE0/2/0/1                                     Configures IS-IS for the TenGigE0/2/0/1 interface.
  circuit-type level-2-only                                   Configures the core-facing interface as an IS-IS Level-2 interface.
  bfd minimum-interval 15                                     Configures the minimum interval between BFD hello packets sent to the neighbor.
  bfd multiplier 3                                            Configures the BFD multiplier.
  bfd fast-detect ipv4                                        Enables BFD to detect failures in the path between adjacent forwarding engines.
  point-to-point                                              Configures a point-to-point IS-IS interface.
  address-family ipv4 unicast
   fast-reroute per-prefix level 2                            Enables per-prefix FRR for Level-2 prefixes.
   fast-reroute per-prefix remote-lfa tunnel mpls-ldp         Configures an FRR path that redirects traffic to a remote LFA tunnel.
   metric 10                                                  Configures the IS-IS metric for the interface.
   mpls ldp sync                                              Enables MPLS LDP sync so that LDP comes up on the link before the link is used for forwarding, which avoids packet loss.
  !
 !
router bgp 101                                                Enters router BGP configuration mode.
 !
 address-family ipv4 unicast                                  Enters the IPv4 address-family.
  additional-paths receive                                    Configures the capability to receive multiple paths for a prefix from capable peers.
  additional-paths send                                       Configures the capability to send multiple paths for a prefix to capable peers.
  additional-paths selection route-policy add-path-to-ibgp    Enables BGP PIC functionality with the appropriate route-policy to calculate backup paths.
 !
 session-group intra-as                                       Configures a session-group to define parameters that are address-family independent.
  remote-as 101                                               Specifies the remote-as as the AS number of the iBGP peers.
  update-source Loopback0                                     Specifies Loopback0 as the update-source for BGP communication.
 !
 neighbor-group PE                                            Enters neighbor-group PE configuration mode.
  use session-group intra-as                                  Imports the address-family-independent session-group parameters.
  address-family ipv4 labeled-unicast                         Enables the labeled BGP address-family for the neighbor-group.
   route-reflector-client                                     Configures the provider edge neighbor-group as route-reflector client.
   next-hop-self                                              Sets next-hop-self for prefixes advertised to the provider edge.
 !
 neighbor-group CORE                                          Enters neighbor-group CORE configuration mode.
  use session-group intra-as                                  Imports the address-family-independent session-group parameters.
  address-family ipv4 labeled-unicast                         Enables the labeled BGP address-family for the neighbor-group.
   next-hop-self                                              Sets next-hop-self for prefixes advertised to the CORE route reflector.
 !
 neighbor 100.111.7.8                                         Configures the provider edge loopback as neighbor.
  use neighbor-group PE                                       Inherits the neighbor-group PE parameters.
 !
 neighbor 100.111.11.3                                        Configures the CORE route reflector loopback as neighbor.
  use neighbor-group CORE                                     Inherits the neighbor-group CORE parameters.
 !
!
route-policy add-path-to-ibgp                                 Configures the route-policy used in BGP PIC.
 set path-selection backup 1 install                          Configured to install one backup path.
end-policy
mpls ldp                                                      Enters MPLS LDP configuration mode.
 log
  neighbor
  graceful-restart
 !
 router-id 100.111.3.1                                        Configures the router-id for LDP.
 interface TenGigE0/2/0/0                                     Enables LDP on TenGigE0/2/0/0.
 !
 interface TenGigE0/2/0/1                                     Enables LDP on TenGigE0/2/0/1.
 !
!

Route Reflector Operation and Configuration

Route reflectors address the scalability and overhead issues of the full mesh of iBGP sessions that the
iBGP split-horizon rule otherwise requires. When a device is assigned as a route reflector and provider
edge devices are assigned as its clients, the split-horizon rule is relaxed on the route reflector, which
enables it to advertise the prefixes received from one client provider edge to another client provider
edge. Provider edges need to maintain iBGP sessions only with the route reflectors to send and receive
updates. The route reflector reflects updates received from one provider edge to the other provider
edges in the network, eliminating the requirement for an iBGP full mesh.

By default, a route reflector does not change the next-hop or any other prefix attributes. Prefixes
received by provider edges still have the remote provider edges as next-hop, not the route reflectors,
so provider edges can send traffic directly to remote provider edges. This eliminates the requirement
to have the route reflectors in the data path, so the route reflectors can be dedicated to the route
reflection function.
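The scaling benefit of route reflection can be made concrete by counting sessions. The sketch below compares a full iBGP mesh with a design in which every client peers only with the route reflectors. The function names are hypothetical, and the assumption that multiple route reflectors are fully meshed with each other is an illustrative choice, not something this guide specifies.

```python
def full_mesh_sessions(n_routers: int) -> int:
    """Number of iBGP sessions in a full mesh of n routers."""
    return n_routers * (n_routers - 1) // 2


def route_reflector_sessions(n_clients: int, n_reflectors: int = 1) -> int:
    """Each client peers with every RR; the RRs are assumed fully meshed."""
    return n_clients * n_reflectors + full_mesh_sessions(n_reflectors)


mesh_100 = full_mesh_sessions(100)
rr_100 = route_reflector_sessions(100)
rr_100_redundant = route_reflector_sessions(100, n_reflectors=2)
```

For 100 provider edges, the full mesh needs 4,950 sessions, while a single route reflector needs 100 and a redundant pair needs 201 under the stated assumption.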

Route Reflector Configuration

This section describes the ASR 1000 route reflector configuration, which includes configuring a
peer-group for router BGP. Provider edges that have the same update policies (such as update-group
and remote-as) can be grouped into the same peer group, which simplifies peer configuration and
enables more efficient updating. The peer-group is made a route reflector client so that the route
reflector can reflect routes received from one client provider edge to the other client provider edges.

Table 2-7 details the CORE route reflectors transport configuration.

Table 2-7    CORE Route Reflectors Transport Configuration

Configuration                                                 Description
router isis agg-acc                                           Enters router IS-IS configuration for the core route reflector.
 net 49.0100.1001.1100.1103.00                                Defines the NET address.
 address-family ipv4 unicast                                  Enters the IPv4 address-family for IS-IS.
  metric-style wide                                           Generates new-style TLVs with wider metric fields for IPv4.
 !
 interface Loopback0                                          Configures IS-IS for the loopback interface.
  passive                                                     Makes the loopback passive to avoid sending unnecessary hello packets on it.
  point-to-point
  address-family ipv4 unicast                                 Enters the IPv4 address-family for the loopback.
  !
 interface TenGigE0/2/0/0                                     Configures IS-IS for the TenGigE0/2/0/0 interface.
  circuit-type level-2-only                                   Configures the core interface as an IS-IS Level-2 interface.
  bfd minimum-interval 15                                     Configures the minimum interval between BFD hello packets sent to the neighbor.
  bfd multiplier 3                                            Configures the BFD multiplier.
  bfd fast-detect ipv4                                        Enables BFD to detect failures in the path between adjacent forwarding engines.
  point-to-point                                              Configures a point-to-point IS-IS interface.
  address-family ipv4 unicast
   fast-reroute per-prefix level 2                            Enables per-prefix FRR for Level-2 prefixes.
   fast-reroute per-prefix remote-lfa tunnel mpls-ldp         Configures an FRR path that redirects traffic to a remote LFA tunnel.
   metric 10                                                  Configures the IS-IS metric for the interface.
   mpls ldp sync                                              Enables MPLS LDP sync so that LDP comes up on the link before the link is used for forwarding, which avoids packet loss.
  !
 !
router bgp 101                                                Enters router BGP configuration mode.
 !
 address-family ipv4 unicast                                  Enters the IPv4 address-family.
  additional-paths receive                                    Configures the capability to receive multiple paths for a prefix from capable peers.
  additional-paths send                                       Configures the capability to send multiple paths for a prefix to capable peers.
  additional-paths selection route-policy add-path-to-ibgp    Enables BGP PIC functionality with the appropriate route-policy to calculate backup paths.
 !
 session-group intra-as                                       Configures a session-group to define parameters that are address-family independent.
  remote-as 101                                               Specifies the remote-as as the AS number of the iBGP peers.
  update-source Loopback0                                     Specifies Loopback0 as the update-source for BGP communication.
 !
 !
 neighbor-group ABR                                           Enters neighbor-group ABR configuration mode.
  use session-group intra-as                                  Imports the address-family-independent session-group parameters.
  address-family ipv4 labeled-unicast                         Enables the labeled BGP address-family for the neighbor-group.
   route-reflector-client                                     Configures the ABR neighbor-group as route-reflector client.
 !
 neighbor 100.111.11.3                                        Configures the ABR loopback as neighbor.
  use neighbor-group ABR                                      Inherits the neighbor-group ABR parameters.
 !
!
mpls ldp                                                      Enters MPLS LDP configuration mode.
 log
  neighbor
  graceful-restart
 !
 router-id 100.111.2.1                                        Configures the router-id for LDP.
 interface TenGigE0/2/0/0                                     Enables LDP on TenGigE0/2/0/0.
 !

The preceding sections describe how to implement a hierarchical transport network using labeled BGP as
a scalable solution for a large-scale network, with fast failure detection and fast convergence
mechanisms. This solution avoids unnecessary resource usage, simplifies network implementation, and
achieves faster convergence for large networks.

Transport configuration, including rLFA, transport QoS, and provider configuration, remains the same
in concept and configuration as described in Small Scale Network Design and Implementation, page 2-1.


CHAPTER 3

Enterprise L2VPN Services Design

This chapter describes how to implement enterprise L2VPN services over the MPLS-based transport
infrastructure described in the previous chapter. Enterprise L2VPN services provide end-to-end L2
connectivity between two or more locations of an enterprise. The UNI interface that connects the
provider edge and customer edge devices is called an attachment circuit (AC) and can be a physical or
virtual port. In a virtualized L2 network, the ingress provider edge router receives L2 Ethernet frames
from the branch or campus router on the attachment circuit and encapsulates them with MPLS labels
before sending them to the remote provider edge(s). The remote provider edge(s) in turn remove the
labels, extract the original L2 frames, and forward them to the destination interface. This type of L2
connectivity across the MPLS domain can be point-to-point or multipoint and can be achieved as
described below.

Pseudo-wire

A pseudo-wire provides a point-to-point connection between two enterprise locations and emulates a
wire that carries L2 frames over an underlying core MPLS network. A pseudo-wire is instantiated on
the provider edge devices, and the attachment circuits are attached to it. Whenever a pseudo-wire is
configured between a pair of provider edge routers, a targeted LDP session is established between them.
Provider edge routers exchange virtual circuit (VC) labels using this targeted LDP session. When an
MPLS packet is received from the core, the egress provider edge router uses this VC label to identify
the pseudo-wire and forward the frame to the corresponding AC.

When an ingress provider edge router receives L2 Ethernet frames on the AC that connects to a branch,
campus, or data center router, it encapsulates them with two labels. The bottom label is the VC label,
and the top label, called the transport label, belongs to the remote provider edge's loopback interface.
When the egress provider edge router receives the MPLS packets, it checks the pseudo-wire VC label,
removes the MPLS header, and forwards the original L2 frames to the corresponding AC that connects to
a branch, campus, or data center router. The customer edge routers can see each other as CDP neighbors
and can form IGP adjacencies between them. The pseudo-wire can be implemented as described below
(Figure 3-1).

Figure 3-1    Pseudo-Wire

[Figure: a CPE (branch/campus router) attaches to a PE (ASR 9000) at each end; a pseudowire between the two PEs crosses the MPLS transport network.]

Table 3-1 details the provider edge router pseudo-wire configuration.


Table 3-1    Provider Edge Pseudo-Wire Configuration

Configuration                                           Description
interface GigabitEthernet100/0/0/40.100 l2transport     L2 customer attachment circuit.
 encapsulation default
!
l2vpn                                                   Enters L2VPN configuration mode.
 xconnect group PW                                      Enters the name of the cross-connect group.
  p2p PW                                                Enters a name for the point-to-point cross-connect.
   interface GigabitEthernet100/0/0/40.100              Specifies the attachment circuit.
   neighbor ipv4 100.111.3.1 pw-id 100                  Configures the PW neighbor and VC ID.

A similar configuration is applied on the remote provider edge with the corresponding neighbor address.

Note

The VC ID is the pseudo-wire identifier and is unique per pseudo-wire on a provider edge. It must be
the same on both provider edges.
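The label handling described for the pseudo-wire can be sketched as follows. The ingress provider edge pushes the transport label on top of the VC label, and the egress provider edge uses the VC label, at the bottom of the stack, to find the attachment circuit. The function names and label values are hypothetical; the attachment circuit name is the one used in Table 3-1.

```python
from typing import Dict, List, Tuple

# A packet is modeled as (label stack with the top label first, original L2 frame).
Packet = Tuple[List[int], bytes]


def ingress_encapsulate(frame: bytes, transport_label: int, vc_label: int) -> Packet:
    """Ingress PE: transport label on top, VC label at the bottom."""
    return ([transport_label, vc_label], frame)


def egress_forward(packet: Packet, vc_to_ac: Dict[int, str]) -> Tuple[str, bytes]:
    """Egress PE: map the VC label to its AC and return the original frame."""
    labels, frame = packet
    vc_label = labels[-1]
    return vc_to_ac[vc_label], frame


# Hypothetical VC label bound to the attachment circuit from Table 3-1.
vc_to_ac = {16010: "GigabitEthernet100/0/0/40.100"}
packet = ingress_encapsulate(b"l2-frame", transport_label=24001, vc_label=16010)
ac, frame = egress_forward(packet, vc_to_ac)
```

The frame that leaves the egress attachment circuit is byte-for-byte the frame that entered the ingress one, which is what lets the customer edge routers behave as directly connected neighbors.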

A pseudo-wire provides a point-to-point connection between two enterprise locations. To achieve
multipoint connectivity between various enterprise locations, VPLS and PBB-EVPN are deployed as
described below.

Virtual Private LAN Service (VPLS)

VPLS is a multipoint L2VPN technology that connects two or more enterprise locations in a single
LAN-like bridge domain over the MPLS transport infrastructure. Multiple enterprise locations in a VPLS
domain can communicate with each other over the VPLS core. This is achieved by using a virtual
forwarding instance (VFI) and attaching the local ACs and a full mesh of pseudo-wires between provider
edges to it, as described below (Figure 3-2).

Figure 3-2    VPLS

[Figure: three PEs (ASR 9000), each with an attached CPE (branch/campus router), are interconnected through the VPLS core by a full mesh of EoMPLS pseudo-wires.]

Virtual Forwarding Instance (VFI)

A VFI is created on the provider edge router for each L2VPN instance or VPLS instance. It acts like a

virtual bridge for a given VPLS instance. Provider edge routers establish a full mesh of pseudo-wires

and exchange VC labels (using targeted LDP session), with all the other provider edge routers in the

same VPLS instance and attach these pseudo-wires to the VFI. Provider edge routers also connect local

ACs in that VPLS instance to the same VFI by adding the VFI instance and customer attachment circuits

to the same bridge domain.

When a frame is received from an attachment circuit, the ingress provider edge router learns its source MAC address and updates the MAC address table of the associated VFI. The provider edge router then forwards based on the destination MAC address: frames with unknown unicast, broadcast, or multicast destination MAC addresses are flooded to all the remote provider edges in the same VFI. Flooding is achieved by sending one copy of the frame to each remote provider edge in the same VPLS instance on its corresponding point-to-point pseudo-wire. For each remote provider edge, the ingress provider edge encapsulates the L2 Ethernet frame with two MPLS labels: the bottom label is the MPLS VC label and the top label is the label for the remote provider edge loopback. When a remote provider edge receives the MPLS packet, it checks the VC label to map the packet to the correct VFI, removes the MPLS header, and examines the original L2 header. It records the source MAC address of the frame in the MAC address table of that VFI, with the pseudo-wire on which the frame was received as the egress interface, and then forwards the L2 frame to the attachment circuits. After this initial flooding, all provider edge routers have populated MAC address tables, which they use for subsequent forwarding.

When a provider edge receives L2 frames with known unicast destination MAC addresses, it forwards them on the pseudo-wire to the corresponding remote provider edge router, encapsulating each L2 Ethernet frame with the MPLS VC label as the bottom label and the label for the remote provider edge as the top label. The different enterprise locations connect to the same VPLS-based virtual bridge domain and function as if they were in a shared, LAN-like environment.
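The two-label encapsulation described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the class and function names are hypothetical, the label values are invented for the example, and the frame is treated as an opaque byte string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PseudoWire:
    remote_pe: str        # loopback of the remote provider edge
    vc_label: int         # VC label for this pseudo-wire (bottom of stack)
    transport_label: int  # label toward the remote PE loopback (top of stack)


def encapsulate(frame: bytes, pw: PseudoWire):
    """Ingress PE: return (label_stack, payload) with the top label listed first."""
    return [pw.transport_label, pw.vc_label], frame


def decapsulate(label_stack, payload, vc_to_vfi):
    """Egress PE: the bottom (VC) label selects the VFI; the L2 frame is unchanged."""
    vfi = vc_to_vfi[label_stack[-1]]
    return vfi, payload


# Hypothetical label values, for illustration only.
pw = PseudoWire("100.111.3.1", vc_label=16010, transport_label=24001)
stack, payload = encapsulate(b"customer-frame", pw)
vfi, frame = decapsulate(stack, payload, {16010: "VPLS"})
print(stack, vfi, frame)
```

The egress lookup uses only the bottom label, which mirrors the text: the VC label maps the packet to the VFI, while the top label only carries the packet to the remote provider edge.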

Note

As a split horizon rule, a provider edge never forwards a frame received from a pseudo-wire in a VFI back to the same pseudo-wire or to other pseudo-wires in the same VFI. This prevents forwarding loops.
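The learning, flooding, and split horizon behavior described in this section can be summarized with a toy Python model of a single VFI. It is a sketch, not the ASR 9000 implementation: the class name, port names, and MAC addresses are assumptions made up for the example.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class Vfi:
    """Toy model of one VPLS bridge domain on a provider edge."""

    def __init__(self, attachment_circuits, pseudowires):
        self.acs = set(attachment_circuits)
        self.pws = set(pseudowires)
        self.mac_table = {}  # MAC address -> port (AC or pseudo-wire) it was learned on

    def forward(self, in_port, src_mac, dst_mac):
        """Learn the source MAC, then return the sorted list of egress ports."""
        self.mac_table[src_mac] = in_port
        # The low-order bit of the first octet marks broadcast and multicast.
        is_group = int(dst_mac.split(":")[0], 16) & 1
        known_port = self.mac_table.get(dst_mac)
        if not is_group and known_port is not None:
            candidates = {known_port}          # known unicast
        else:
            candidates = self.acs | self.pws   # flood
        candidates.discard(in_port)            # never send back out the ingress port
        if in_port in self.pws:
            candidates -= self.pws             # split horizon between pseudo-wires
        return sorted(candidates)


# Hypothetical ports: one attachment circuit and two pseudo-wires.
vfi = Vfi(["Te0/0/0/2.876"], ["pw-100.111.3.1", "pw-100.111.11.1"])
flooded = vfi.forward("Te0/0/0/2.876", "00:aa:00:00:00:01", "00:bb:00:00:00:02")
reply = vfi.forward("pw-100.111.3.1", "00:bb:00:00:00:02", "00:aa:00:00:00:01")
bcast = vfi.forward("pw-100.111.3.1", "00:bb:00:00:00:02", BROADCAST)
print(flooded, reply, bcast)
```

In this run the first frame has an unknown destination and is flooded to both pseudo-wires, the reply is sent only to the attachment circuit where the destination was learned, and the broadcast arriving on a pseudo-wire reaches only the attachment circuit.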

Table 3-2 details the provider edge router VPLS configuration.

Table 3-2    Provider Edge VPLS Configuration

Provider Edge VPLS Configuration            Description
interface TenGigE0/0/0/2.876 l2transport    L2 customer attachment circuit.
 encapsulation dot1q 876
!
l2vpn                                       Enters L2VPN configuration mode.
 bridge group L2VPN                         Enters configuration mode for the "L2VPN" named bridge group.
                                            A bridge group organizes bridge domains.
  bridge-domain VPLS                        Enters configuration mode for the "VPLS" named bridge domain.
   interface Te0/0/0/2.876                  Specifies the attachment circuit.
   vfi VPLS                                 Configures the virtual forwarding interface (VFI) and enters
                                            L2VPN bridge group bridge domain VFI configuration mode.
    neighbor 100.111.3.1 pw-id 876          Specifies the IP address of the cross-connect peers.
    neighbor 100.111.11.1 pw-id 876         The pseudo-wire ID can be the same for all peers.
    neighbor 100.111.11.2 pw-id 876
!

Note

The pseudo-wire ID of each VFI should be unique on the provider edge and should be the same on all the provider edges for the same VPLS instance.
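As an illustration of this rule, the short Python sketch below derives the neighbor statements that each provider edge would need for one VPLS instance. The helper name is hypothetical, and the local loopback 100.111.5.1 is an assumed value; the other addresses and the pseudo-wire ID 876 come from Table 3-2.

```python
def vfi_neighbor_lines(local_pe, all_pes, pw_id):
    """Return the 'neighbor ... pw-id ...' lines one PE needs for a VPLS instance."""
    return [f"neighbor {pe} pw-id {pw_id}" for pe in all_pes if pe != local_pe]


# 100.111.5.1 is an assumed loopback for the local provider edge.
pes = ["100.111.5.1", "100.111.3.1", "100.111.11.1", "100.111.11.2"]
lines = vfi_neighbor_lines("100.111.5.1", pes, 876)
for line in lines:
    print(line)
```

Running the helper for each provider edge in turn yields the same pseudo-wire ID everywhere and a neighbor list that omits only the local router.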

VPLS provides multipoint connectivity between the enterprise locations; however, it requires a full mesh of pseudo-wires to be set up between the provider edge routers in the same VPLS instance. PBB-EVPN provides an alternative solution that achieves multipoint connectivity between the provider edge devices by using the BGP control plane with an additional address family, "evpn". See the following section for details.

Provider Backbone Bridging Ethernet VPN (PBB-EVPN)

PBB-EVPN provides multipoint L2VPN connectivity between different enterprise locations.

PBB-EVPN provides a scalable solution by using the BGP control plane to establish multipoint connectivity across the MPLS transport instead of using a full mesh of pseudo-wires, as VPLS does.

Provider edge routers in the PBB-EVPN network have unique MAC addresses called backbone MAC (B-MAC) addresses, and they advertise these B-MACs with their corresponding labels using the BGP address family "evpn". Provider edge devices install the B-MAC addresses learned through BGP, together with their corresponding labels and next hops, in their forwarding tables. L2 frames received from customers are encapsulated with a PBB header whose source and destination MAC addresses are the B-MACs of the source and destination provider edge routers, respectively. The MPLS labels corresponding to the destination B-MAC address are then imposed, and the frame is forwarded to the remote provider edge. Customer L2 information, including the source MAC address, destination MAC address, and VLANs, is kept intact.
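The forwarding steps above can be sketched in Python as a lookup in a B-MAC table followed by PBB encapsulation. This is a simplified model under stated assumptions: all names, B-MAC addresses, and label values are hypothetical, and the service identifier carried in a real PBB header is left out for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BmacRoute:
    label: int     # MPLS label advertised with the B-MAC in BGP
    next_hop: str  # BGP next hop, that is, the remote provider edge


@dataclass(frozen=True)
class PbbFrame:
    b_dst: str             # B-MAC of the destination provider edge
    b_src: str             # B-MAC of the local provider edge
    customer_frame: bytes  # original L2 frame, carried unchanged


def encapsulate(customer_frame, local_bmac, remote_bmac, bmac_table):
    """Wrap the customer frame in a PBB header and pick the MPLS label and next hop."""
    route = bmac_table[remote_bmac]
    pbb = PbbFrame(b_dst=remote_bmac, b_src=local_bmac, customer_frame=customer_frame)
    return route.label, route.next_hop, pbb


# Hypothetical B-MAC table as it might look after BGP "evpn" routes are received.
bmac_table = {"0011.0022.0033": BmacRoute(label=24010, next_hop="100.111.3.1")}
label, next_hop, pbb = encapsulate(
    b"customer-frame", "00aa.00bb.00cc", "0011.0022.0033", bmac_table
)
print(label, next_hop, pbb.b_dst, pbb.b_src)
```

The customer frame is stored untouched inside the PBB frame, which reflects the statement that customer MAC addresses and VLANs are preserved end to end.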

Figure 3-3    PBB-EVPN

[Figure: three CPE (Branch/Campus Router) sites attached to PEs (ASR 9000) around the PBB-EVPN Core; each PE is labeled with a BEB toward the CPE and a BCB toward the core, and the core is labeled with B-MAC addresses.]

Note

Advertising backbone MAC addresses (B-MAC) in BGP instead of customer MAC addresses (C-MAC) reduces the number of BGP MAC advertisement routes.

PBB-EVPN has three components: the Backbone Edge Bridge (BEB), the Backbone Core Bridge (BCB), and the Ethernet VPN instance (EVI). Each of these components is described in detail below.

Backbone Edge Bridge (BEB)

The Backbone Edge Bridge (BEB) is the bridge domain on the provider edge router that faces the customer. The interfaces on the provider edge routers that connect to the customer edge are part of the BEB. The BEB is connected to the BCB (described below) with a service instance identifier called the ISID. The BEB adds a PBB header to the L2 frames received from the customer; the header includes the source B-MAC (the local provider edge backbone MAC), the destination B-MAC (the destination provider edge B-MAC), and the ISID configured for the BEB. It then forwards the frame to the BCB. BEBs belonging to the same L2VPN network are configured with the same ISID value across all the provider edges. The BEB is responsible for learning the MAC addresses of the local and remote enterprise users and forwarding traffic accordingly, as described below.

•

If an L2 frame is received on a local interface connected to the customer edge, its source MAC address is recorded in the MAC address table with the local interface as the next hop.

•