
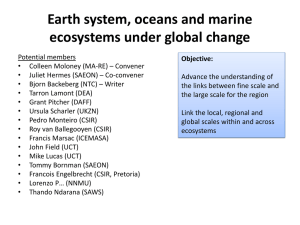

ITW 2002, Bangalore, India, October 20-25, 2002

Algorithms for Computing the Capacity of Markov Channels with Side Information

Shailendra K. Singh

Department of ECE

Indian Institute of Science

Bangalore, 560012, India

Vinod Sharma

Department of ECE

Indian Institute of Science

Bangalore, 560012, India

shail@pal.ece.iisc.ernet.in

vinod@ece.iisc.ernet.in

I. INTRODUCTION

Consider a finite state, irreducible Markov channel $\{S_k\}$. At time $k$ the channel output $Y_k$ depends only on the channel input $X_k$ and the channel state $S_k$. Let $U_k$ and $V_k$ be the CSIT (channel state information at transmitter) and the CSIR (CSI at receiver), respectively. In this paper we provide algorithms to compute the capacity of the Markov channel under various assumptions on CSIT and CSIR where the assumption $P(S_k = s \mid U_1^k) = P(S_k = s \mid U_k)$ in [1] is not necessarily satisfied. Our algorithms are based on the extension of ideas developed in [3] to the channels with feedback.

11. DISCRETE C HANNEL

In this section we further assume perfect CSIR i.e., V, = S,

and U,,= g(S;-d+l)where g is a deterministic function of

A

%-,,+I =

(Sn-d+l ,...,&). Under these assumptions, we

show that the capacity achieving distribution of X, can he

made a function of the conditional distribution p , of S, giveu

rr; i.e., of

p,(s)

= P(S, = s

I V;).

(1)

converges a.s. i ~ sn + m, for any power allocation policy 7(,).

This limit equals the capacity if y(.) , a function of p , is the

OPA policy with respect to the limiting distribution p of pi.

Theorem 2 With the above assumptions on CSIT and CSIR,

the channel cnpacity of the finite state Markov fading channel

with AWGN noise and avemge power constrain P is

"P

where p is the limiting distribution of pn and (4) wnuerges

0 , s . to (5) for the OPA policy.

Etom the Lagrange multiplier and the Kuhn-Tucker conditions

[2] we get that the optimal power allocation policy y(.) should

.

and y(.) 2 0, for all p for which p ( p ) > 0. Solving (6)

for the minimum X such that the average power constraint

J , y ( p ) d p ( p ) 5 P is satisfied gives the optimal power allom

tion policy.

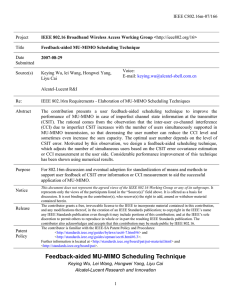

IV. EXAMPLES

Consider an AWGN channel with the fade values so =

1.0,SI = 0.5, sz = 0.01, s3 = 0.001 and let the average power

constaiut P he 20db. The capacity with perfect CSIT and

CSIR is 1.81 hits. We will take a 1 bit CSIT ( Uk = 0 if

4 E {.OOl,O.Ol} else 1) and perfect CSIR and the delay in

the feedback of the CSI to the transmitter d . Now the assump

and this limit exists a.s. The sup in (2) is taken over all tions in [l]are violated. Then the capacity of this channel with

d = 0 is Co = 1.786 bits and with d = 1 is CI = 1.699 bits.

distributions on the input alphabet.

Theorem 1 With the above mentioned assumptions on CSIT Fig. 1 shows the convergence of (4) for d = 0 and d = 1.

and perfect CSIR, the channel cnpacity of the finite state

Markov channel is

p,, can be computed recursively and { p n } is a regenerative

process which converges in distribution. We also show that

the capacity of the channel is

C

=

1

SUP

W ; Y IS = s,p)p(s)dAp)

(3)

p P(XlP)

where fi is the limiting distribution of p , and (21 converges

a.s. to (31.

111. AWGN C HANNEL

WITH

.

FADING

In this section we consider a Markov fading channel with additive white Gaussian noise (AWGN) with mean zero and variance one. The X, satisfies long term average power constraint

E[X:] 5 P. Here we assume that the receiver has the perfect

knowledge of instantaneous SNR, i.e. V, = SnE(lX,lz I V;]

and the CSIT may be imperfect i.e. U, = g ( x - d + l , Z , )

where g is a deterministic function and { Z , } is an iid noise

sequence. As in the previous section here we show that the

OPA (Optimal Power Allocation Policy) can be made a function of the conditional distribution pn, defined in (1). We

show that

(4)
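The paper states that the conditional distribution in (1) can be computed recursively but does not spell out the recursion. The following is a minimal sketch of one such recursion, a standard predict-and-condition filter, under the simplifying assumption that the CSIT depends on the current state only, $U_n = g(S_n)$. The transition matrix `T`, the state indexing, and the function names are hypothetical and chosen for illustration; only the 1-bit quantizer mirrors the example in Section IV.

```python
import numpy as np

# Hypothetical 4-state transition matrix (not from the paper);
# T[i, j] = P(S_{n+1} = j | S_n = i), each row sums to one.
T = np.array([
    [0.7, 0.2, 0.1, 0.0],
    [0.2, 0.6, 0.1, 0.1],
    [0.1, 0.1, 0.6, 0.2],
    [0.0, 0.1, 0.2, 0.7],
])

def g(state):
    """1-bit CSIT as in the example: 0 for the two weakest fades
    (assumed here to be state indices 2 and 3), 1 otherwise."""
    return 0 if state in (2, 3) else 1

def update_belief(p_prev, T, g, u):
    """One step of p_n(s) = P(S_n = s | U_1, ..., U_n), assuming U_n = g(S_n)."""
    predicted = p_prev @ T  # P(S_n = s | U_1, ..., U_{n-1})
    # Keep only the states that are consistent with the observed CSIT value u.
    mask = np.array([g(s) == u for s in range(len(p_prev))], dtype=float)
    unnormalized = predicted * mask
    total = unnormalized.sum()
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return unnormalized / total

def filter_beliefs(p0, T, g, observations):
    """Run the recursion over a CSIT sequence and return every p_n."""
    p = np.asarray(p0, dtype=float)
    beliefs = []
    for u in observations:
        p = update_belief(p, T, g, u)
        beliefs.append(p)
    return beliefs

beliefs = filter_beliefs(np.full(4, 0.25), T, g, [1, 1, 0])
```

Each returned vector is a point in the probability simplex over channel states. An empirical histogram of such vectors along a long simulated path is one way to approximate the limiting distribution of $p_n$ that appears in the capacity expressions.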

0-7803-7629-3/02/$10.00 © 2002 IEEE


Figure 1: Convergence of Mutual Information to the capacity in Gaussian case
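The final step of Section III, choosing the Lagrange multiplier so that the average power constraint is met, can be illustrated numerically. The sketch below does not reproduce (6). It assumes, for illustration only, that the limiting distribution of $p_n$ is approximated by a finite set of belief vectors `beliefs` with weights `mu`, that the fade values act as power gains, and that the rate in state $s$ with transmit power $\gamma$ is $\frac{1}{2}\log_2(1 + s\gamma)$. Under those assumptions the stationarity condition for each belief $p$ is $\sum_s p(s)\, s / (1 + s\gamma(p)) = \lambda$ when $\gamma(p) > 0$, with constant factors absorbed into $\lambda$. All function names and the two belief vectors are hypothetical.

```python
import numpy as np

def power_for_multiplier(p, gains, lam, gamma_max=1e9):
    """Solve sum_s p(s) * gains[s] / (1 + gains[s] * gamma) = lam for gamma >= 0.
    The left-hand side is decreasing in gamma, so bisection applies."""
    def marginal(gamma):
        return float(np.sum(p * gains / (1.0 + gains * gamma)))
    if marginal(0.0) <= lam:
        return 0.0  # Kuhn-Tucker case: this belief receives no power
    lo, hi = 0.0, gamma_max
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if marginal(mid) > lam:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def allocate_power(beliefs, mu, gains, P):
    """Bisect on the multiplier; return per-belief powers whose mu-average
    does not exceed P (smallest feasible multiplier, up to tolerance)."""
    def average_power(lam):
        return sum(m * power_for_multiplier(p, gains, lam)
                   for p, m in zip(beliefs, mu))
    # At lam = max gain every belief gets zero power, so hi is always feasible.
    lo, hi = 1e-12, float(np.max(gains))
    for _ in range(100):
        lam = 0.5 * (lo + hi)
        if average_power(lam) > P:
            lo = lam
        else:
            hi = lam
    return np.array([power_for_multiplier(p, gains, hi) for p in beliefs])

# Fade values from Section IV, treated as power gains (an assumption).
gains = np.array([1.0, 0.5, 0.01, 0.001])
# Two hypothetical belief vectors, equally likely under the assumed mu.
beliefs = [np.array([0.5, 0.5, 0.0, 0.0]), np.array([0.0, 0.0, 0.5, 0.5])]
mu = [0.5, 0.5]
P = 100.0  # 20 dB relative to the unit noise variance of Section III

gamma = allocate_power(beliefs, mu, gains, P)
```

Because the average power is non-increasing in the multiplier, returning the powers at the feasible end of the bracket corresponds to the "minimum $\lambda$" wording in the text. The resulting numbers depend entirely on the assumed beliefs and weights and are not the paper's results.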

REFERENCES

[1] G. Caire and S. Shamai, On the Capacity of Some Channels with Channel State Information, IEEE Transactions on Information Theory, Vol. 42, 1996, pp. 868-886.

[2] T. Cover and J. Thomas, Elements of Information Theory, Wiley, New York, 1991.

[3] V. Sharma and S. K. Singh, Entropy Rate and Mutual Information in the Regenerative Setup with Application to Markov Channels, Proc. IEEE Int. Symp. on Information Theory 2001, Washington DC, USA, June 24-29, 2001.
