Understanding how effective interventions work: psychologically enriched evaluation


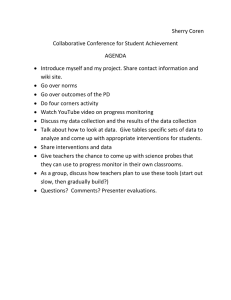

Symposium outline
1. Effective interventions: the research base and professional practice 1 (Tony Cline)
2. The role of reading self-concepts in early literacy: individual children’s progress (Ben Hayes)
3. An evaluation of a brief solution focused teacher coaching intervention (Sue Bennett)
4. The work of an outreach service supporting children with complex needs (Louise Tuersley-Dixon)
5. Effective interventions: the research base and professional practice 2 (Seán Cameron)

The future pattern of evaluation research?
A cultural ethos in which interventions must be justified
+ Less national funding for large scale evaluation
+ Increasing recognition that such evaluation activity will usually present only a partial picture
= An enhanced role for local evaluation studies with a higher profile

Key features of the studies in the symposium
• A range of LA/EPS settings
– Kent, Barnet, Wigan
• A range of forms of intervention
– Pupil reading, teacher coaching, outreach service support
• A range of evaluation designs
– Experimental study comparing different interventions, comparison of pre- and post-intervention ratings, framework analysis of intervention session plans

Challenges of conceptualising evaluation solely in terms of the question “What works?”
• Achieving a consensus on the definition of a desired outcome
• Resolving tension between treatment fidelity and sensitivity to context
• Implementing randomisation of intervention trials in the context of an equal treatment ethos
• Disentangling complex interactions of setting, programme and outcome

Evaluating outcome and process
• So a full answer to the core question, “Does it work?”, requires an understanding of the intervention process.
• Process evaluation involves other questions (Oakley et al., 2006):
– How do participants and stakeholders perceive the intervention?
– How are different components of the intervention implemented?
– What impact do different contextual factors have?
– If the intervention is varied in terms of duration, staffing or equipment, how is its impact affected?
– In what ways do the effects of the intervention vary between subgroups of participants?

If practitioners and policy makers are to take any notice of it, evaluative research must:
• Achieve both summative and formative objectives.
• Support transfer through a sound (and explicit) theory as well as through a generalisable sample.
• Make explicit reference to contextual variation when the findings are reported. (NB. Is the researcher part of, and influenced by, the context?)
• Speak to current concerns (fashions?) in educational politics and practice. (Remember that this is true for researchers too: what is seen to “work” depends on what it is fashionable to study.)

What then do we mean by “psychologically enriched evaluation”?
• Evaluation that addresses “how?” and “why?” questions as well as “what?” questions, developing a theory of change as well as collecting data that chart the fact of change.
• Evaluation that explores the experience of involvement as well as the impact of involvement.
• Evaluation that draws on techniques and instruments from Psychology to investigate issues arising in the practice of others.

Some websites illustrating these points
• http://whatworkswell.standards.dcsf.gov.uk
• http://www.bestevidence.org.uk
• http://eppi.ioe.ac.uk
• http://ies.ed.gov/ncee/wwc

References and further reading
Elliott, J. and Kushner, S. (2007). The need for a manifesto for educational programme evaluation. Cambridge Journal of Education, 37, (3), 321-336.
Issitt, J. and Kyriacou, C. (2009). Epistemological problems in establishing an evidence base for classroom practice. The Psychology of Education Review, 33, (1), 47-52.
Oakley, A., Strange, V., Bonnell, C. et al. (2006). Process evaluation in randomised controlled trials of complex interventions. British Medical Journal, 332, 413-416.
Tymms, P., Merrell, C. and Coe, R. (2008). Educational policies and RCTs. The Psychology of Education Review, 32, (2), 3-30. (With commentary by eight peer reviewers.)