
IV. Neural Network Learning

10/25/09


A. Neural Network Learning

Supervised Learning

• Produce desired outputs for training inputs

• Generalize reasonably & appropriately to other inputs

• Good example: pattern recognition

• Feedforward multilayer networks

Feedforward Network

[Figure: feedforward network with an input layer, hidden layers, and an output layer]

Typical Artificial Neuron

[Figure: artificial neuron. The inputs are multiplied by connection weights and combined linearly, with a threshold, to give the net input (local field); an activation function maps the net input to the output]

Equations

Net input:

$$h_i = \sum_{j=1}^{n} w_{ij} s_j - \theta, \qquad \mathbf{h} = \mathbf{W}\mathbf{s} - \boldsymbol{\theta}$$

Neuron output:

$$s'_i = \sigma(h_i), \qquad \mathbf{s}' = \sigma(\mathbf{h})$$
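The net-input and output equations can be sketched in a few lines of Python. This is only an illustration: the function names, the example numbers, the choice of a logistic activation, and the use of one threshold per neuron (`theta[i]`) are assumptions, not part of the slides.

```python
import math

def net_input(W, s, theta):
    """h_i = sum_j W[i][j] * s[j] - theta[i] (one threshold per neuron is assumed)."""
    return [sum(w_ij * s_j for w_ij, s_j in zip(row, s)) - th
            for row, th in zip(W, theta)]

def logistic(h):
    """One possible activation function sigma (an assumption here)."""
    return 1.0 / (1.0 + math.exp(-h))

def layer_output(W, s, theta, sigma=logistic):
    """s'_i = sigma(h_i), applied to every neuron of the layer."""
    return [sigma(h_i) for h_i in net_input(W, s, theta)]

# Hypothetical two-neuron layer with two inputs.
W = [[1.0, -1.0], [0.5, 0.5]]
s = [1.0, 0.0]
theta = [0.0, 0.5]
h = net_input(W, s, theta)          # net inputs (local fields)
s_next = layer_output(W, s, theta)  # neuron outputs
```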

Single-Layer Perceptron

[Figure: single-layer perceptron]

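Variables

[Figure: perceptron with inputs x_1, …, x_j, …, x_n and weights w_1, …, w_j, …, w_n feeding a summation Σ that, with threshold θ, gives the net input h; the step function Θ maps h to the output y]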

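Single Layer Perceptron Equations

Binary threshold activation function:

$$\sigma(h) = \Theta(h) = \begin{cases} 1, & \text{if } h > 0 \\ 0, & \text{if } h \le 0 \end{cases}$$

Hence,

$$y = \begin{cases} 1, & \text{if } \sum_j w_j x_j > \theta \\ 0, & \text{otherwise} \end{cases} \;=\; \begin{cases} 1, & \text{if } \mathbf{w}\cdot\mathbf{x} > \theta \\ 0, & \text{if } \mathbf{w}\cdot\mathbf{x} \le \theta \end{cases}$$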
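As a small illustration of the threshold rule, the sketch below computes y = Θ(w·x − θ) and uses it as an AND gate. The function names and the particular weights and threshold (w = (1, 1), θ = 1.5) are illustrative assumptions.

```python
def step(h):
    """Binary threshold activation: 1 if h > 0, else 0."""
    return 1 if h > 0 else 0

def perceptron_output(w, x, theta):
    """y = Theta(w . x - theta)."""
    return step(sum(w_j * x_j for w_j, x_j in zip(w, x)) - theta)

# Hypothetical weights that make the perceptron behave as an AND gate.
and_outputs = [perceptron_output([1, 1], [a, b], 1.5)
               for a in (0, 1) for b in (0, 1)]
# Inputs are visited in the order (0,0), (0,1), (1,0), (1,1).
```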

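2D Weight Vector

$$\mathbf{w}\cdot\mathbf{x} = \|\mathbf{w}\|\,\|\mathbf{x}\|\cos\varphi, \qquad \cos\varphi = \frac{v}{\|\mathbf{x}\|}, \qquad \mathbf{w}\cdot\mathbf{x} = \|\mathbf{w}\|\,v$$

$$\mathbf{w}\cdot\mathbf{x} > \theta \iff \|\mathbf{w}\|\,v > \theta \iff v > \theta / \|\mathbf{w}\|$$

[Figure: weight vector w in the (w1, w2) plane; v is the projection of x onto w and φ the angle between them; the line at distance θ/‖w‖ from the origin separates the + region from the – region]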

N-Dimensional Weight Vector

[Figure: the weight vector w is the normal vector of the separating hyperplane, which divides the + region from the – region]

Goal of Perceptron Learning

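• Suppose we have training patterns $\mathbf{x}^1, \mathbf{x}^2, \ldots, \mathbf{x}^P$ with corresponding desired outputs $y^1, y^2, \ldots, y^P$

• where $\mathbf{x}^p \in \{0, 1\}^n$, $y^p \in \{0, 1\}$

• We want to find $\mathbf{w}$, $\theta$ such that $y^p = \Theta(\mathbf{w}\cdot\mathbf{x}^p - \theta)$ for p = 1, …, P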

Treating Threshold as Weight

$$h = \sum_{j=1}^{n} w_j x_j - \theta = -\theta + \sum_{j=1}^{n} w_j x_j$$

Let $x_0 = -1$ and $w_0 = \theta$. Then

$$h = w_0 x_0 + \sum_{j=1}^{n} w_j x_j = \sum_{j=0}^{n} w_j x_j = \tilde{\mathbf{w}}\cdot\tilde{\mathbf{x}}$$

[Figure: perceptron in which the threshold θ is replaced by an extra input x_0 = –1 with weight w_0 = θ]

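Augmented Vectors

$$\tilde{\mathbf{w}} = (\theta, w_1, \ldots, w_n)^{\mathsf T}, \qquad \tilde{\mathbf{x}}^p = (-1, x_1^p, \ldots, x_n^p)^{\mathsf T}$$

We want $y^p = \Theta(\tilde{\mathbf{w}}\cdot\tilde{\mathbf{x}}^p)$, p = 1, …, P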
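A quick numerical check that the augmented form gives the same net input as the original one. The helper names and the example values are illustrative assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def augment_weights(w, theta):
    """w~ = (theta, w_1, ..., w_n)."""
    return [theta] + list(w)

def augment_input(x):
    """x~ = (-1, x_1, ..., x_n)."""
    return [-1] + list(x)

# Hypothetical weights, threshold, and input pattern.
w, theta, x = [2.0, -1.0], 0.5, [1, 1]
h_plain = dot(w, x) - theta                               # w . x - theta
h_aug = dot(augment_weights(w, theta), augment_input(x))  # w~ . x~
```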

Reformulation as Positive Examples

We have positive ($y^p = 1$) and negative ($y^p = 0$) examples

Want $\tilde{\mathbf{w}}\cdot\tilde{\mathbf{x}}^p > 0$ for positive, $\tilde{\mathbf{w}}\cdot\tilde{\mathbf{x}}^p \le 0$ for negative

Let $\mathbf{z}^p = \tilde{\mathbf{x}}^p$ for positive, $\mathbf{z}^p = -\tilde{\mathbf{x}}^p$ for negative

Want $\tilde{\mathbf{w}}\cdot\mathbf{z}^p \ge 0$, for p = 1, …, P

Hyperplane through origin with all $\mathbf{z}^p$ on one side

Adjustment of Weight Vector

[Figure: the example vectors z1, …, z11 scattered around the origin]

Outline of Perceptron Learning Algorithm

1. initialize weight vector randomly

2. until all patterns classified correctly, do:

a) for p = 1, …, P do:

1) if $\mathbf{z}^p$ classified correctly, do nothing

2) else adjust weight vector to be closer to correct classification

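Weight Adjustment

[Figure: adding $\eta\mathbf{z}^p$ to the weight vector $\tilde{\mathbf{w}}$ gives $\tilde{\mathbf{w}}'$, and adding it again gives $\tilde{\mathbf{w}}''$]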

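Improvement in Performance

If $\tilde{\mathbf{w}}\cdot\mathbf{z}^p < 0$,

$$\begin{aligned} \tilde{\mathbf{w}}'\cdot\mathbf{z}^p &= (\tilde{\mathbf{w}} + \eta\mathbf{z}^p)\cdot\mathbf{z}^p \\ &= \tilde{\mathbf{w}}\cdot\mathbf{z}^p + \eta\,\mathbf{z}^p\cdot\mathbf{z}^p \\ &= \tilde{\mathbf{w}}\cdot\mathbf{z}^p + \eta\,\|\mathbf{z}^p\|^2 \\ &> \tilde{\mathbf{w}}\cdot\mathbf{z}^p \end{aligned}$$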
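The outline and the update w̃′ = w̃ + ηz^p can be put together as a minimal sketch. Several details are assumptions made for illustration: the function names, the learning rate, the epoch cap, the seeded random initialization, and treating w̃·z^p = 0 as misclassified (a slightly stricter test than w̃·z^p ≥ 0, chosen so that positive examples end up with w̃·x̃^p > 0).

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def perceptron_learning(patterns, targets, eta=0.1, max_epochs=1000, seed=0):
    """Return augmented weights (theta, w_1, ..., w_n), or None if not converged."""
    rng = random.Random(seed)
    # z^p = x~^p for positive examples, -x~^p for negative examples.
    z = []
    for x, y in zip(patterns, targets):
        x_aug = [-1.0] + [float(v) for v in x]
        z.append(x_aug if y == 1 else [-v for v in x_aug])
    w = [rng.uniform(-1.0, 1.0) for _ in range(len(z[0]))]
    for _ in range(max_epochs):
        mistakes = 0
        for zp in z:
            if dot(w, zp) <= 0:  # assumption: a zero dot product counts as a mistake
                w = [w_i + eta * z_i for w_i, z_i in zip(w, zp)]
                mistakes += 1
        if mistakes == 0:        # every pattern is on the correct side
            return w
    return None

def predict(w, x):
    """y = Theta(w~ . x~) with x~ = (-1, x_1, ..., x_n)."""
    return 1 if dot(w, [-1.0] + [float(v) for v in x]) > 0 else 0

# Logical AND is linearly separable, so the loop should terminate.
patterns = [[0, 0], [0, 1], [1, 0], [1, 1]]
targets = [0, 0, 0, 1]
w = perceptron_learning(patterns, targets)
```

On a problem that is not linearly separable (XOR, for instance) this sketch simply gives up after `max_epochs` and returns `None`.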

Perceptron Learning Theorem

• If there is a set of weights that will solve the problem,

• then the PLA will eventually find it

• (for a sufficiently small learning rate)

• Note: only applies if positive & negative examples are linearly separable

NetLogo Simulation of Perceptron Learning

Run Perceptron-Geometry.nlogo

Classification Power of Multilayer Perceptrons

• Perceptrons can function as logic gates

• Therefore MLP can form intersections, unions, differences of linearly-separable regions

• Classes can be arbitrary hyperpolyhedra

• Minsky & Papert criticism of perceptrons

• No one succeeded in developing a MLP learning algorithm

Hyperpolyhedral Classes


Credit Assignment Problem

[Figure: feedforward network with input layer, hidden layers, and output layer; the output is compared with the desired output]

How do we adjust the weights of the hidden layers?

NetLogo Demonstration of Back-Propagation Learning

Run Artificial Neural Net.nlogo

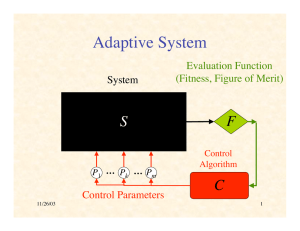

Adaptive System

[Figure: adaptive system. The system S is scored by an evaluation function F (fitness, figure of merit); a control algorithm C sets the control parameters P_1 … P_k … P_m]

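Gradient

$\partial F / \partial P_k$ measures how F is altered by variation of $P_k$

$$\nabla F = \left( \frac{\partial F}{\partial P_1}, \ldots, \frac{\partial F}{\partial P_k}, \ldots, \frac{\partial F}{\partial P_m} \right)^{\mathsf T}$$

$\nabla F$ points in direction of maximum local increase in F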

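Gradient Ascent on Fitness Surface

[Figure: fitness surface with + and – regions; the gradient ascent path follows ∇F]

Gradient Ascent by Discrete Steps

[Figure: fitness surface with + and – regions; ∇F is followed in discrete steps]

Gradient Ascent is Local But Not Shortest

[Figure: fitness surface with + and – regions]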

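Gradient Ascent Process

$$\dot{\mathbf{P}} = \eta \nabla F(\mathbf{P})$$

Change in fitness:

$$\dot{F} = \frac{dF}{dt} = \sum_{k=1}^{m} \frac{\partial F}{\partial P_k} \frac{dP_k}{dt} = \sum_{k=1}^{m} (\nabla F)_k \dot{P}_k$$

$$\dot{F} = \nabla F \cdot \dot{\mathbf{P}}$$

$$\dot{F} = \nabla F \cdot \eta \nabla F = \eta \|\nabla F\|^2 \ge 0$$

Therefore gradient ascent increases fitness (until reaches 0 gradient)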
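A minimal sketch of gradient ascent by discrete steps, P ← P + η∇F(P), on a made-up fitness function. The quadratic F, its maximum at (1, −2), the step size, and the step count are all illustrative assumptions; the continuous-time argument only carries over to discrete steps when η is small enough.

```python
def fitness(P):
    """Hypothetical fitness surface with a single maximum at (1, -2)."""
    return -((P[0] - 1.0) ** 2) - (P[1] + 2.0) ** 2

def grad_fitness(P):
    """Analytic gradient of the fitness above."""
    return [-2.0 * (P[0] - 1.0), -2.0 * (P[1] + 2.0)]

def gradient_ascent(P, eta=0.1, steps=100):
    """Repeat P <- P + eta * grad F(P), recording the fitness after each step."""
    history = [fitness(P)]
    for _ in range(steps):
        g = grad_fitness(P)
        P = [p + eta * g_k for p, g_k in zip(P, g)]
        history.append(fitness(P))
    return P, history

P_final, history = gradient_ascent([0.0, 0.0])
```

With this step size the recorded fitness never decreases, and the parameters approach the maximum.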

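General Ascent in Fitness

Note that any adaptive process $\mathbf{P}(t)$ will increase fitness provided:

$$0 < \dot{F} = \nabla F \cdot \dot{\mathbf{P}} = \|\nabla F\|\,\|\dot{\mathbf{P}}\| \cos\varphi$$

where $\varphi$ is angle between $\nabla F$ and $\dot{\mathbf{P}}$

Hence we need $\cos\varphi > 0$, or $\varphi < 90^\circ$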

General Ascent on Fitness Surface

[Figure: fitness surface with + and – regions, showing ∇F and an ascent path]

Fitness as Minimum Error

Suppose for Q different inputs we have target outputs $\mathbf{t}^1, \ldots, \mathbf{t}^Q$

Suppose for parameters $\mathbf{P}$ the corresponding actual outputs are $\mathbf{y}^1, \ldots, \mathbf{y}^Q$

Suppose $D(\mathbf{t}, \mathbf{y}) \in [0, \infty)$ measures difference between target & actual outputs

Let $E^q = D(\mathbf{t}^q, \mathbf{y}^q)$ be error on qth sample

$$\text{Let } F(\mathbf{P}) = -\sum_{q=1}^{Q} E^q(\mathbf{P}) = -\sum_{q=1}^{Q} D[\mathbf{t}^q, \mathbf{y}^q(\mathbf{P})]$$

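Gradient of Fitness

$$\nabla F = \nabla\left( -\sum_q E^q \right) = -\sum_q \nabla E^q$$

$$\begin{aligned} \frac{\partial E^q}{\partial P_k} &= \frac{\partial}{\partial P_k} D(\mathbf{t}^q, \mathbf{y}^q) = \sum_j \frac{\partial D(\mathbf{t}^q, \mathbf{y}^q)}{\partial y_j^q} \frac{\partial y_j^q}{\partial P_k} \\ &= \frac{d D(\mathbf{t}^q, \mathbf{y}^q)}{d \mathbf{y}^q} \cdot \frac{\partial \mathbf{y}^q}{\partial P_k} \\ &= \nabla_{\mathbf{y}^q} D(\mathbf{t}^q, \mathbf{y}^q) \cdot \frac{\partial \mathbf{y}^q}{\partial P_k} \end{aligned}$$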

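Jacobian Matrix

$$\text{Define Jacobian matrix } \mathbf{J}^q = \begin{pmatrix} \dfrac{\partial y_1^q}{\partial P_1} & \cdots & \dfrac{\partial y_1^q}{\partial P_m} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial y_n^q}{\partial P_1} & \cdots & \dfrac{\partial y_n^q}{\partial P_m} \end{pmatrix}$$

Note $\mathbf{J}^q \in \Re^{n \times m}$ and $\nabla D(\mathbf{t}^q, \mathbf{y}^q) \in \Re^{n \times 1}$

$$\text{Since } (\nabla E^q)_k = \frac{\partial E^q}{\partial P_k} = \sum_j \frac{\partial y_j^q}{\partial P_k} \frac{\partial D(\mathbf{t}^q, \mathbf{y}^q)}{\partial y_j^q},$$

$$\therefore\; \nabla E^q = (\mathbf{J}^q)^{\mathsf T}\, \nabla D(\mathbf{t}^q, \mathbf{y}^q)$$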

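Derivative of Squared Euclidean Distance

$$\text{Suppose } D(\mathbf{t}, \mathbf{y}) = \|\mathbf{t} - \mathbf{y}\|^2 = \sum_i (t_i - y_i)^2$$

$$\begin{aligned} \frac{\partial D(\mathbf{t}, \mathbf{y})}{\partial y_j} &= \frac{\partial}{\partial y_j} \sum_i (t_i - y_i)^2 = \sum_i \frac{\partial (t_i - y_i)^2}{\partial y_j} \\ &= \frac{d (t_j - y_j)^2}{d y_j} = -2 (t_j - y_j) \end{aligned}$$

$$\therefore\; \frac{d D(\mathbf{t}, \mathbf{y})}{d \mathbf{y}} = 2 (\mathbf{y} - \mathbf{t})$$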

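Gradient of Error on qth Input

$$\begin{aligned} \frac{\partial E^q}{\partial P_k} &= \frac{d D(\mathbf{t}^q, \mathbf{y}^q)}{d \mathbf{y}^q} \cdot \frac{\partial \mathbf{y}^q}{\partial P_k} \\ &= 2 (\mathbf{y}^q - \mathbf{t}^q) \cdot \frac{\partial \mathbf{y}^q}{\partial P_k} \\ &= 2 \sum_j (y_j^q - t_j^q) \frac{\partial y_j^q}{\partial P_k} \end{aligned}$$

$$\nabla E^q = 2 (\mathbf{J}^q)^{\mathsf T} (\mathbf{y}^q - \mathbf{t}^q)$$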
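The identity ∇E^q = 2(J^q)ᵀ(y^q − t^q) can be checked numerically on a toy system. The system below, y_j(P) = tanh(Σ_k M[j][k]·P[k]) with a fixed matrix M, is purely hypothetical (it is not the network from the slides), as are the names and numbers; it was chosen only because its Jacobian is easy to write down.

```python
import math

# Hypothetical system with n = 2 outputs and m = 3 parameters.
M = [[1.0, -2.0, 0.5],
     [0.3, 0.8, -1.0]]

def outputs(P):
    """y_j(P) = tanh(sum_k M[j][k] * P[k])."""
    return [math.tanh(sum(m * p for m, p in zip(row, P))) for row in M]

def jacobian(P):
    """J[j][k] = dy_j/dP_k = (1 - y_j^2) * M[j][k]."""
    return [[(1.0 - y_j * y_j) * m for m in row]
            for y_j, row in zip(outputs(P), M)]

def error(P, t):
    """E = ||t - y||^2."""
    return sum((t_j - y_j) ** 2 for t_j, y_j in zip(t, outputs(P)))

def grad_error(P, t):
    """Gradient of E computed as 2 J^T (y - t)."""
    y, J = outputs(P), jacobian(P)
    return [2.0 * sum(J[j][k] * (y[j] - t[j]) for j in range(len(y)))
            for k in range(len(P))]

def numeric_grad(P, t, eps=1e-6):
    """Central finite differences, used only as a cross-check."""
    g = []
    for k in range(len(P)):
        up, dn = list(P), list(P)
        up[k] += eps
        dn[k] -= eps
        g.append((error(up, t) - error(dn, t)) / (2.0 * eps))
    return g

P, t = [0.2, -0.1, 0.4], [0.5, -0.5]
analytic, numeric = grad_error(P, t), numeric_grad(P, t)
```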

Recap

$$\dot{\mathbf{P}} = \eta \sum_q (\mathbf{J}^q)^{\mathsf T} (\mathbf{t}^q - \mathbf{y}^q)$$

To know how to decrease the differences between actual & desired outputs, we need to know elements of Jacobian, $\partial y_j^q / \partial P_k$, which says how jth output varies with kth parameter (given the qth input)

The Jacobian depends on the specific form of the system, in this case, a feedforward neural network

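Multilayer Notation

[Figure: chain of layers. The input x^q enters as s^1; weight matrices W^1, W^2, …, W^(L–2), W^(L–1) connect the successive layers s^1, s^2, …, s^(L–1), s^L; the output is y^q = s^L]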

Notation

• L layers of neurons labeled 1, …, L

• $N_l$ neurons in layer l

• $\mathbf{s}^l$ = vector of outputs from neurons in layer l

• input layer $\mathbf{s}^1 = \mathbf{x}^q$ (the input pattern)

• output layer $\mathbf{s}^L = \mathbf{y}^q$ (the actual output)

• $\mathbf{W}^l$ = weights between layers l and l+1

• Problem: find how outputs $y_i^q$ vary with weights $W_{jk}^l$ (l = 1, …, L–1)

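Typical Neuron

[Figure: neuron i in layer l. The inputs s_1^(l–1), …, s_j^(l–1), …, s_N^(l–1) are weighted by W_i1^(l–1), …, W_ij^(l–1), …, W_iN^(l–1) and summed to give h_i^l; σ maps h_i^l to the output s_i^l]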

Error Back-Propagation

We will compute $\dfrac{\partial E^q}{\partial W_{ij}^{l}}$ starting with last layer (l = L − 1) and working back to earlier layers (l = L − 2, …, 1)

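Delta Values

Convenient to break derivatives by chain rule:

$$\frac{\partial E^q}{\partial W_{ij}^{l-1}} = \frac{\partial E^q}{\partial h_i^l} \frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

$$\text{Let } \delta_i^l = \frac{\partial E^q}{\partial h_i^l}$$

$$\text{So } \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l \frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$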

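Output-Layer Neuron

[Figure: output neuron i. The inputs s_1^(L–1), …, s_j^(L–1), …, s_N^(L–1) are weighted by W_i1^(L–1), …, W_ij^(L–1), …, W_iN^(L–1) and summed to give h_i^L; σ gives s_i^L = y_i^q, which is compared with the target t_i^q to form E^q]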

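Output-Layer Derivatives (1)

$$\begin{aligned} \delta_i^L &= \frac{\partial E^q}{\partial h_i^L} = \frac{\partial}{\partial h_i^L} \sum_k (s_k^L - t_k^q)^2 \\ &= \frac{d (s_i^L - t_i^q)^2}{d h_i^L} \\ &= 2 (s_i^L - t_i^q) \frac{d s_i^L}{d h_i^L} \\ &= 2 (s_i^L - t_i^q)\, \sigma'(h_i^L) \end{aligned}$$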

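Output-Layer Derivatives (2)

$$\frac{\partial h_i^L}{\partial W_{ij}^{L-1}} = \frac{\partial}{\partial W_{ij}^{L-1}} \sum_k W_{ik}^{L-1} s_k^{L-1} = s_j^{L-1}$$

$$\therefore\; \frac{\partial E^q}{\partial W_{ij}^{L-1}} = \delta_i^L s_j^{L-1}$$

where $\delta_i^L = 2 (s_i^L - t_i^q)\, \sigma'(h_i^L)$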

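Hidden-Layer Neuron

[Figure: hidden neuron i in layer l. The inputs s_1^(l–1), …, s_j^(l–1), …, s_N^(l–1) are weighted by W_i1^(l–1), …, W_ij^(l–1), …, W_iN^(l–1) and summed to give h_i^l; σ gives s_i^l, which feeds the neurons s_1^(l+1), …, s_k^(l+1), …, s_N^(l+1) of the next layer through W_1i^l, …, W_ki^l, …, W_Ni^l and so affects E^q]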

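Hidden-Layer Derivatives (1)

$$\text{Recall } \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l \frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

$$\delta_i^l = \frac{\partial E^q}{\partial h_i^l} = \sum_k \frac{\partial E^q}{\partial h_k^{l+1}} \frac{\partial h_k^{l+1}}{\partial h_i^l} = \sum_k \delta_k^{l+1} \frac{\partial h_k^{l+1}}{\partial h_i^l}$$

$$\frac{\partial h_k^{l+1}}{\partial h_i^l} = \frac{\partial \sum_m W_{km}^l s_m^l}{\partial h_i^l} = \frac{\partial\, W_{ki}^l s_i^l}{\partial h_i^l} = W_{ki}^l \frac{d \sigma(h_i^l)}{d h_i^l} = W_{ki}^l\, \sigma'(h_i^l)$$

$$\therefore\; \delta_i^l = \sum_k \delta_k^{l+1} W_{ki}^l\, \sigma'(h_i^l) = \sigma'(h_i^l) \sum_k \delta_k^{l+1} W_{ki}^l$$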

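Hidden-Layer Derivatives (2)

$$\frac{\partial h_i^l}{\partial W_{ij}^{l-1}} = \frac{\partial}{\partial W_{ij}^{l-1}} \sum_k W_{ik}^{l-1} s_k^{l-1} = \frac{d\, W_{ij}^{l-1} s_j^{l-1}}{d W_{ij}^{l-1}} = s_j^{l-1}$$

$$\therefore\; \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l s_j^{l-1}$$

where $\delta_i^l = \sigma'(h_i^l) \sum_k \delta_k^{l+1} W_{ki}^l$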

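Derivative of Sigmoid

$$\text{Suppose } s = \sigma(h) = \frac{1}{1 + \exp(-\alpha h)} \quad \text{(logistic sigmoid)}$$

$$\begin{aligned} D_h s &= D_h [1 + \exp(-\alpha h)]^{-1} = -[1 + \exp(-\alpha h)]^{-2}\, D_h (1 + e^{-\alpha h}) \\ &= -(1 + e^{-\alpha h})^{-2} (-\alpha e^{-\alpha h}) = \alpha \frac{e^{-\alpha h}}{(1 + e^{-\alpha h})^2} \\ &= \alpha \frac{1}{1 + e^{-\alpha h}} \cdot \frac{e^{-\alpha h}}{1 + e^{-\alpha h}} = \alpha s \left( \frac{1 + e^{-\alpha h}}{1 + e^{-\alpha h}} - \frac{1}{1 + e^{-\alpha h}} \right) \\ &= \alpha s (1 - s) \end{aligned}$$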
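The result σ′(h) = αs(1 − s) is easy to sanity-check against a finite-difference derivative. The function names, the gain α = 2, and the sample points are illustrative assumptions.

```python
import math

def sigmoid(h, alpha=1.0):
    """Logistic sigmoid with gain alpha."""
    return 1.0 / (1.0 + math.exp(-alpha * h))

def sigmoid_prime(h, alpha=1.0):
    """Closed form derived above: alpha * s * (1 - s)."""
    s = sigmoid(h, alpha)
    return alpha * s * (1.0 - s)

def numeric_derivative(f, h, eps=1e-6):
    """Central finite difference, used only as a cross-check."""
    return (f(h + eps) - f(h - eps)) / (2.0 * eps)

alpha = 2.0
gaps = [abs(sigmoid_prime(h, alpha)
            - numeric_derivative(lambda u: sigmoid(u, alpha), h))
        for h in (-2.0, 0.0, 1.5)]
```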

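Summary of Back-Propagation Algorithm

$$\text{Output layer: } \delta_i^L = 2 \alpha s_i^L (1 - s_i^L)(s_i^L - t_i^q), \qquad \frac{\partial E^q}{\partial W_{ij}^{L-1}} = \delta_i^L s_j^{L-1}$$

$$\text{Hidden layers: } \delta_i^l = \alpha s_i^l (1 - s_i^l) \sum_k \delta_k^{l+1} W_{ki}^l, \qquad \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l s_j^{l-1}$$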
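The summary formulas translate fairly directly into code. The sketch below is one possible reading, not a reference implementation: it uses 0-based layer indices (so `weights[l]` connects `s[l]` to `s[l+1]`), has no thresholds (as in the multilayer equations above), and the layer sizes, seed, and sample pattern are made up. A finite-difference helper is included as a cross-check.

```python
import math
import random

ALPHA = 1.0  # sigmoid gain (assumed value)

def sigma(h):
    return 1.0 / (1.0 + math.exp(-ALPHA * h))

def forward(weights, x):
    """Return the outputs of every layer; s[0] is the input pattern."""
    s = [list(x)]
    for W in weights:
        s.append([sigma(sum(w * v for w, v in zip(row, s[-1]))) for row in W])
    return s

def error(weights, x, t):
    """E^q = sum_i (s_i^L - t_i)^2."""
    return sum((y_i - t_i) ** 2 for y_i, t_i in zip(forward(weights, x)[-1], t))

def backprop(weights, x, t):
    """Return dE/dW for every weight matrix (same shapes as weights)."""
    s = forward(weights, x)
    # Output layer: delta_i = 2 * alpha * s_i * (1 - s_i) * (s_i - t_i)
    delta = [2.0 * ALPHA * s_i * (1.0 - s_i) * (s_i - t_i)
             for s_i, t_i in zip(s[-1], t)]
    grads = [None] * len(weights)
    for l in range(len(weights) - 1, -1, -1):
        # dE/dW_ij = delta_i * s_j, with s_j from the layer feeding these weights
        grads[l] = [[d_i * s_j for s_j in s[l]] for d_i in delta]
        if l > 0:
            # Hidden layer: delta_i = alpha * s_i * (1 - s_i) * sum_k delta_k * W_ki
            W = weights[l]
            delta = [ALPHA * s[l][i] * (1.0 - s[l][i])
                     * sum(delta[k] * W[k][i] for k in range(len(W)))
                     for i in range(len(s[l]))]
    return grads

def numeric(weights, x, t, l, i, j, eps=1e-6):
    """Central finite-difference estimate of dE/dW[l][i][j]."""
    old = weights[l][i][j]
    weights[l][i][j] = old + eps
    up = error(weights, x, t)
    weights[l][i][j] = old - eps
    dn = error(weights, x, t)
    weights[l][i][j] = old
    return (up - dn) / (2.0 * eps)

# Hypothetical network: 3 inputs, two hidden layers (4 and 3 neurons), 2 outputs.
rng = random.Random(0)
sizes = [3, 4, 3, 2]
weights = [[[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
           for n_in, n_out in zip(sizes, sizes[1:])]
x, t = [0.2, -0.4, 0.9], [1.0, 0.0]
grads = backprop(weights, x, t)
```

A gradient-descent step on this pattern would then subtract η times each entry of `grads` from the corresponding weight.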

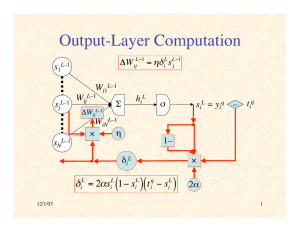

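Output-Layer Computation

$$\Delta W_{ij}^{L-1} = \eta\, \delta_i^L s_j^{L-1}$$

$$\delta_i^L = 2 \alpha s_i^L (1 - s_i^L)(t_i^q - s_i^L)$$

Here $\delta_i^L$ is written with $(t_i^q - s_i^L)$ rather than $(s_i^L - t_i^q)$, i.e. as the negative of $\partial E^q / \partial h_i^L$, so that adding $\Delta W_{ij}^{L-1}$ decreases the error.

[Figure: data flow for the output-layer update. s_j^(L–1) is weighted by W_ij^(L–1) and summed to h_i^L; σ gives s_i^L = y_i^q; δ_i^L is formed from t_i^q – s_i^L, 1 – s_i^L, s_i^L, and 2α, then multiplied by η and s_j^(L–1) to give ΔW_ij^(L–1)]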

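Hidden-Layer Computation

$$\Delta W_{ij}^{l-1} = \eta\, \delta_i^l s_j^{l-1}$$

$$\delta_i^l = \alpha s_i^l (1 - s_i^l) \sum_k \delta_k^{l+1} W_{ki}^l$$

[Figure: data flow for the hidden-layer update. The deltas δ_1^(l+1), …, δ_k^(l+1), …, δ_N^(l+1) of layer l+1 are weighted by W_1i^l, …, W_ki^l, …, W_Ni^l and summed, multiplied by α, s_i^l, and 1 – s_i^l to give δ_i^l, and then by η and s_j^(l–1) to give ΔW_ij^(l–1)]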

Training Procedures

• Batch Learning

– on each epoch (pass through all the training pairs),

– weight changes for all patterns accumulated

– weight matrices updated at end of epoch

– accurate computation of gradient

• Online Learning

– weights are updated after back-prop of each training pair

– usually randomize order for each epoch

– approximation of gradient

• Doesn’t make much difference

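The two procedures differ only in when the weights change. The sketch below contrasts them on a deliberately tiny, hypothetical problem: a single weight w with output y = w·x and error E = (y − t)², rather than a full network. The data, learning rate, epoch count, and seed are assumptions.

```python
import random

def grad(w, x, t):
    """dE/dw for E = (w*x - t)^2."""
    return 2.0 * (w * x - t) * x

def train_batch(data, w=0.0, eta=0.05, epochs=200):
    """Accumulate the gradient over all pairs, then update once per epoch."""
    for _ in range(epochs):
        total = sum(grad(w, x, t) for x, t in data)
        w -= eta * total
    return w

def train_online(data, w=0.0, eta=0.05, epochs=200, seed=0):
    """Update after every pair, visiting pairs in a fresh random order each epoch."""
    rng = random.Random(seed)
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            w -= eta * grad(w, x, t)
    return w

# Hypothetical training pairs generated by t = 2x, so the ideal weight is 2.
data = [(x, 2.0 * x) for x in (0.5, 1.0, 1.5)]
w_batch = train_batch(data)
w_online = train_online(data)
```

On this toy problem both variants end up at essentially the same weight.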

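Summation of Error Surfaces

[Figure: total error surface E shown as the sum of the per-pattern surfaces E1 and E2]

Gradient Computation in Batch Learning

[Figure: error surfaces E, E1, and E2]

Gradient Computation in Online Learning

[Figure: error surfaces E, E1, and E2]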

Testing Generalization

[Figure: within the domain, the available data is split into training data and test data]

Problem of Rote Learning

[Figure: error plotted against epoch, with one curve for error on training data and one for error on test data, and a marker labeled "stop training here"]

Improving Generalization

[Figure: within the domain, the available data is split into training data, validation data, and test data]
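One common way to act on "stop training here" is to watch the error on held-out validation data and keep the epoch where it was lowest. The sketch below is an assumption about how that might be done, not something the slides specify: the `patience` rule (give up after a few epochs without improvement) and the error values are made up.

```python
def best_stopping_epoch(validation_errors, patience=3):
    """Return the epoch with the lowest validation error seen before
    `patience` consecutive epochs pass without improvement."""
    best_epoch, best_err = 0, float("inf")
    for epoch, err in enumerate(validation_errors):
        if err < best_err:
            best_epoch, best_err = epoch, err
        elif epoch - best_epoch >= patience:
            break  # validation error has stopped improving
    return best_epoch

# Hypothetical validation errors: falling at first, then rising (rote learning).
val = [0.9, 0.6, 0.45, 0.40, 0.42, 0.47, 0.55, 0.39]
stop_at = best_stopping_epoch(val)
```

With these numbers the search stops at epoch 6 and reports epoch 3, so the later dip at epoch 7 is never seen; that is the usual trade-off of a patience rule.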

A Few Random Tips

• Too few neurons and the ANN may not be able to decrease the error enough

• Too many neurons can lead to rote learning

• Preprocess data to:

– standardize

– eliminate irrelevant information

– capture invariances

– keep relevant information

• If stuck in local min., restart with different random weights

Run Example BP Learning


Beyond Back-Propagation

• Adaptive Learning Rate

• Adaptive Architecture

– Add/delete hidden neurons

– Add/delete hidden layers

• Radial Basis Function Networks

• Recurrent BP

• Etc., etc., etc.…


What is the Power of Artificial Neural Networks?

• With respect to Turing machines?

• As function approximators?

Can ANNs Exceed the “Turing Limit”?

• There are many results, which depend sensitively on assumptions; for example:

• Finite NNs with real-valued weights have super-Turing power (Siegelmann & Sontag '94)

• Recurrent nets with Gaussian noise have sub-Turing power (Maass & Sontag '99)

• Finite recurrent nets with real weights can recognize all languages, and thus are super-Turing (Siegelmann '99)

• Stochastic nets with rational weights have super-Turing power (but only P/POLY, BPP/log*) (Siegelmann '99)

• But computing classes of functions is not a very relevant way to evaluate the capabilities of neural computation

A Universal Approximation Theorem

Suppose f is a continuous function on $[0,1]^n$

Suppose σ is a nonconstant, bounded, monotone increasing real function on ℜ.

For any ε > 0, there is an m such that $\exists\, \mathbf{a} \in \Re^m$, $\mathbf{b} \in \Re^m$, $\mathbf{W} \in \Re^{m \times n}$ such that if

$$F(x_1, \ldots, x_n) = \sum_{i=1}^{m} a_i\, \sigma\!\left( \sum_{j=1}^{n} W_{ij} x_j + b_i \right)$$

[i.e., $F(\mathbf{x}) = \mathbf{a} \cdot \sigma(\mathbf{W}\mathbf{x} + \mathbf{b})$]

then $|F(\mathbf{x}) - f(\mathbf{x})| < \varepsilon$ for all $\mathbf{x} \in [0,1]^n$

(see, e.g., Haykin, N.Nets 2/e, 208–9)
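The form F(x) = a·σ(Wx + b) can be evaluated directly. In the sketch below b has one entry per hidden unit, as the vector form requires; the logistic σ and the two-unit example (which approximates a bump on the middle of [0, 1]) are illustrative assumptions and say nothing about how good parameters would be found.

```python
import math

def sigma(h):
    """Logistic function: nonconstant, bounded, and monotone increasing."""
    return 1.0 / (1.0 + math.exp(-h))

def F(x, a, W, b):
    """F(x) = sum_i a_i * sigma(sum_j W[i][j] * x[j] + b[i])."""
    return sum(a_i * sigma(sum(w * x_j for w, x_j in zip(row, x)) + b_i)
               for a_i, row, b_i in zip(a, W, b))

# Hypothetical parameters with m = 2 hidden units and n = 1 input:
# the difference of two shifted sigmoids is close to 1 near x = 0.5
# and close to 0 near x = 0 and x = 1.
a = [1.0, -1.0]
W = [[20.0], [20.0]]
b = [-5.0, -15.0]
middle, left, right = F([0.5], a, W, b), F([0.0], a, W, b), F([1.0], a, W, b)
```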

One Hidden Layer is Sufficient

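• Conclusion: One hidden layer is sufficient to approximate any continuous function arbitrarily closely

[Figure: one-hidden-layer network. The inputs x_1, …, x_n and a constant input 1 (weights b_1, …) feed m hidden units Σσ through weights up to W_mn; the hidden outputs are weighted by a_1, a_2, …, a_m and summed by a linear output unit Σ]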

The Golden Rule of Neural Nets

Neural Networks are the second-best way to do everything!

IVB
