

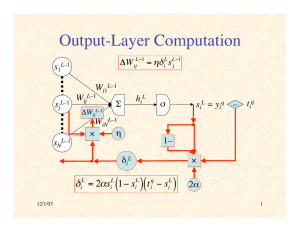

Part 7: Neural Networks & Learning (11/29/04)

Multilayer Notation

[Figure: layered network. The input pattern $x^q$ enters layer 1 (output $s^1$); weight matrices $W^1, W^2, \ldots, W^{L-2}, W^{L-1}$ connect successive layers with outputs $s^2, \ldots, s^{L-1}, s^L$; the final output is $y^q$.]

Notation

• $L$ layers of neurons labeled $1, \ldots, L$
• $N_l$ neurons in layer $l$
• $s^l$ = vector of outputs from neurons in layer $l$
• input layer: $s^1 = x^q$ (the input pattern)
• output layer: $s^L = y^q$ (the actual output)
• $W^l$ = weights between layers $l$ and $l+1$
• Problem: find how outputs $y_i^q$ vary with weights $W_{jk}^l$ ($l = 1, \ldots, L-1$)

Typical Neuron

[Figure: neuron $i$ in layer $l$. Inputs $s_1^{l-1}, \ldots, s_j^{l-1}, \ldots, s_N^{l-1}$ arrive through weights $W_{i1}^{l-1}, \ldots, W_{ij}^{l-1}, \ldots, W_{iN}^{l-1}$, are summed to give $h_i^l$, and pass through $\sigma$ to give the output $s_i^l$.]

Error Back-Propagation

We will compute $\partial E^q / \partial W_{ij}^l$ starting with the last layer ($l = L-1$) and working back to earlier layers ($l = L-2, \ldots, 1$).

Delta Values

It is convenient to break the derivatives up by the chain rule:

$$\frac{\partial E^q}{\partial W_{ij}^{l-1}} = \frac{\partial E^q}{\partial h_i^l}\,\frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

Let

$$\delta_i^l = \frac{\partial E^q}{\partial h_i^l}$$

So

$$\frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l\,\frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

Output-Layer Neuron

[Figure: output neuron $i$. Inputs $s_1^{L-1}, \ldots, s_j^{L-1}, \ldots, s_N^{L-1}$ arrive through weights $W_{i1}^{L-1}, \ldots, W_{ij}^{L-1}, \ldots, W_{iN}^{L-1}$, are summed to give $h_i^L$, and pass through $\sigma$ to give $s_i^L = y_i^q$, which is compared with the target $t_i^q$ to give the error $E^q$.]

Output-Layer Derivatives (1)

$$\delta_i^L = \frac{\partial E^q}{\partial h_i^L} = \frac{\partial}{\partial h_i^L}\sum_k \left(s_k^L - t_k^q\right)^2 = \frac{d\left(s_i^L - t_i^q\right)^2}{d h_i^L} = 2\left(s_i^L - t_i^q\right)\frac{d s_i^L}{d h_i^L} = 2\left(s_i^L - t_i^q\right)\sigma'\!\left(h_i^L\right)$$

Output-Layer Derivatives (2)

$$\frac{\partial h_i^L}{\partial W_{ij}^{L-1}} = \frac{\partial}{\partial W_{ij}^{L-1}}\sum_k W_{ik}^{L-1} s_k^{L-1} = s_j^{L-1}$$

$$\therefore\ \frac{\partial E^q}{\partial W_{ij}^{L-1}} = \delta_i^L\, s_j^{L-1}, \quad \text{where } \delta_i^L = 2\left(s_i^L - t_i^q\right)\sigma'\!\left(h_i^L\right)$$

Hidden-Layer Neuron

[Figure: hidden neuron $i$ in layer $l$. Inputs $s_1^{l-1}, \ldots, s_j^{l-1}, \ldots, s_N^{l-1}$ arrive through weights $W_{i1}^{l-1}, \ldots, W_{ij}^{l-1}, \ldots, W_{iN}^{l-1}$ to give $h_i^l$ and $s_i^l$; the output $s_i^l$ feeds neurons $1, \ldots, k, \ldots, N$ of layer $l+1$ through weights $W_{1i}^l, \ldots, W_{ki}^l, \ldots, W_{Ni}^l$, which in turn contribute to $E^q$.]

Hidden-Layer Derivatives (1)

Recall

$$\frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l\,\frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

$$\delta_i^l = \frac{\partial E^q}{\partial h_i^l} = \sum_k \frac{\partial E^q}{\partial h_k^{l+1}}\,\frac{\partial h_k^{l+1}}{\partial h_i^l} = \sum_k \delta_k^{l+1}\,\frac{\partial h_k^{l+1}}{\partial h_i^l}$$

$$\frac{\partial h_k^{l+1}}{\partial h_i^l} = \frac{\partial \sum_m W_{km}^l s_m^l}{\partial h_i^l} = W_{ki}^l\,\frac{\partial s_i^l}{\partial h_i^l} = W_{ki}^l\,\frac{d\,\sigma\!\left(h_i^l\right)}{d h_i^l} = W_{ki}^l\,\sigma'\!\left(h_i^l\right)$$

$$\therefore\ \delta_i^l = \sum_k \delta_k^{l+1} W_{ki}^l\,\sigma'\!\left(h_i^l\right) = \sigma'\!\left(h_i^l\right)\sum_k \delta_k^{l+1} W_{ki}^l$$

Hidden-Layer Derivatives (2)

$$\frac{\partial h_i^l}{\partial W_{ij}^{l-1}} = \frac{\partial}{\partial W_{ij}^{l-1}}\sum_k W_{ik}^{l-1} s_k^{l-1} = \frac{d\left(W_{ij}^{l-1} s_j^{l-1}\right)}{d W_{ij}^{l-1}} = s_j^{l-1}$$

$$\therefore\ \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l\, s_j^{l-1}, \quad \text{where } \delta_i^l = \sigma'\!\left(h_i^l\right)\sum_k \delta_k^{l+1} W_{ki}^l$$

Derivative of Sigmoid

Suppose $s = \sigma(h) = \dfrac{1}{1 + \exp(-h)}$ (logistic sigmoid). Then

$$D_h s = D_h\left[1 + \exp(-h)\right]^{-1} = -\left[1 + \exp(-h)\right]^{-2} D_h\!\left(1 + e^{-h}\right) = -\left(1 + e^{-h}\right)^{-2}\left(-e^{-h}\right) = \frac{e^{-h}}{\left(1 + e^{-h}\right)^2}$$

$$= \frac{1}{1 + e^{-h}}\cdot\frac{e^{-h}}{1 + e^{-h}} = s\left(\frac{1 + e^{-h}}{1 + e^{-h}} - \frac{1}{1 + e^{-h}}\right) = s(1 - s)$$

Summary of Back-Propagation Algorithm

Output layer:

$$\delta_i^L = 2\, s_i^L\left(1 - s_i^L\right)\left(s_i^L - t_i^q\right), \qquad \frac{\partial E^q}{\partial W_{ij}^{L-1}} = \delta_i^L\, s_j^{L-1}$$

Hidden layers:

$$\delta_i^l = s_i^l\left(1 - s_i^l\right)\sum_k \delta_k^{l+1} W_{ki}^l, \qquad \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l\, s_j^{l-1}$$

Output-Layer Computation

$$\Delta W_{ij}^{L-1} = \eta\,\delta_i^L\, s_j^{L-1}, \qquad \delta_i^L = 2\, s_i^L\left(1 - s_i^L\right)\left(t_i^q - s_i^L\right)$$

Here $\eta$ is the learning rate, and $\delta_i^L$ is written with $(t_i^q - s_i^L)$ rather than $(s_i^L - t_i^q)$, so it is the negative of the derivative in the summary and adding $\Delta W_{ij}^{L-1}$ moves the weights down the error gradient.

[Figure: data-flow diagram for output neuron $i$. The output $s_i^L = y_i^q$ is subtracted from $t_i^q$; the difference is multiplied by $s_i^L$, by $1 - s_i^L$, and by 2 to form $\delta_i^L$, which is multiplied by $\eta$ and by each input $s_j^{L-1}$ to give $\Delta W_{ij}^{L-1}$.]

Hidden-Layer Computation

$$\Delta W_{ij}^{l-1} = \eta\,\delta_i^l\, s_j^{l-1}, \qquad \delta_i^l = s_i^l\left(1 - s_i^l\right)\sum_k \delta_k^{l+1} W_{ki}^l$$

[Figure: data-flow diagram for hidden neuron $i$. The deltas $\delta_1^{l+1}, \ldots, \delta_k^{l+1}, \ldots, \delta_N^{l+1}$ from layer $l+1$ are weighted by $W_{1i}^l, \ldots, W_{ki}^l, \ldots, W_{Ni}^l$ and summed; the sum is multiplied by $s_i^l$ and $1 - s_i^l$ to form $\delta_i^l$, which is multiplied by $\eta$ and by each input $s_j^{l-1}$ to give $\Delta W_{ij}^{l-1}$.]

Training Procedures

• Batch Learning
– on each epoch (pass through all the training pairs),
– weight changes for all patterns are accumulated
– weight matrices are updated at end of epoch
– accurate computation of gradient
• Online Learning
– weights are updated after back-prop of each training pair
– usually randomize order for each epoch
– approximation of gradient
• Doesn't make much difference

Summation of Error Surfaces

[Figure: error surfaces $E_1$ and $E_2$ and their sum $E$.]

Gradient Computation in Batch Learning

[Figure: error surfaces $E$, $E_1$, $E_2$.]

Gradient Computation in Online Learning

[Figure: error surfaces $E$, $E_1$, $E_2$.]

The Golden Rule of Neural Nets

Neural Networks are the second-best way to do everything!

VIII. Review of Key Concepts

Complex Systems

• Many interacting elements
• Local vs. global order: entropy
• Scale (space, time)
• Phase space
• Difficult to understand
• Open systems

Many Interacting Elements

• Massively parallel
• Distributed information storage & processing
• Diversity
– avoids premature convergence
– avoids inflexibility

Complementary Interactions

• Positive feedback / negative feedback
• Amplification / stabilization
• Activation / inhibition
• Cooperation / competition
• Positive / negative correlation

Emergence & Self-Organization

• Microdecisions lead to macrobehavior
• Circular causality (macro / micro feedback)
• Coevolution
– predator/prey, Red Queen effect
– gene/culture, niche construction, Baldwin effect

Pattern Formation

• Excitable media
• Amplification of random fluctuations
• Symmetry breaking
• Specific difference vs. generic identity
• Automatically adaptive

Stigmergy

• Continuous (quantitative)
• Discrete (qualitative)
• Coordinated algorithm
– non-conflicting
– sequentially linked

Emergent Control

• Stigmergy
• Entrainment (distributed synchronization)
• Coordinated movement
– through attraction, repulsion, local alignment
– in concrete or abstract space
• Cooperative strategies
– nice & forgiving, but reciprocal
– evolutionarily stable strategy

Attractors

• Classes
– point attractor
– cyclic attractor
– chaotic attractor
• Basin of attraction
• Imprinted patterns as attractors
– pattern restoration, completion, generalization, association

Wolfram's Classes

• Class I: point
• Class II: cyclic
• Class III: chaotic
• Class IV: complex (edge of chaos)
– persistent state maintenance
– bounded cyclic activity
– global coordination of control & information
– order for free

Energy / Fitness Surface

• Descent on energy surface / ascent on fitness surface
• Lyapunov theorem to prove asymptotic stability / convergence
• Soft constraint satisfaction / relaxation
• Gradient (steepest) ascent / descent
• Adaptation & credit assignment

Biased Randomness

• Exploration vs. exploitation
• Blind variation & selective retention
• Innovation vs. incremental improvement
• Pseudo-temperature
• Diffusion
• Mixed strategies

Natural Computation

• Tolerance to noise, error, faults, damage
• Generality of response
• Flexible response to novelty
• Adaptability
• Real-time response
• Optimality is secondary

Student Course Evaluation! (Do it online)
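Supplementary sketch for the back-propagation summary in these notes: the delta recursion can be checked numerically against finite differences. The Python below is a minimal illustration, not the course's code. It assumes the logistic sigmoid with unit gain, the squared error E = Σ (s_k − t_k)², no bias terms, and weight matrices stored as nested lists with `W[i][j]` connecting neuron j of one layer to neuron i of the next. All function names and the example numbers are hypothetical.

```python
import math

def sigmoid(h):
    """Logistic sigmoid; its derivative is s * (1 - s)."""
    return 1.0 / (1.0 + math.exp(-h))

def forward(weights, x):
    """Return all layer outputs [s^1, ..., s^L], with s^1 = x (the input pattern)."""
    outputs = [list(x)]
    for W in weights:
        prev = outputs[-1]
        h = [sum(w_ij * s_j for w_ij, s_j in zip(row, prev)) for row in W]
        outputs.append([sigmoid(h_i) for h_i in h])
    return outputs

def error(weights, x, t):
    """Squared error summed over the output neurons."""
    y = forward(weights, x)[-1]
    return sum((y_i - t_i) ** 2 for y_i, t_i in zip(y, t))

def backprop(weights, x, t):
    """Return dE/dW for every weight matrix via the delta recursion."""
    outputs = forward(weights, x)
    # Output layer: delta_i = 2 s_i (1 - s_i) (s_i - t_i)
    delta = [2.0 * s * (1.0 - s) * (s - t_i) for s, t_i in zip(outputs[-1], t)]
    grads = [None] * len(weights)
    for l in range(len(weights) - 1, -1, -1):
        s_prev = outputs[l]
        # dE/dW_ij = delta_i * s_j, with s_j taken from the preceding layer
        grads[l] = [[d_i * s_j for s_j in s_prev] for d_i in delta]
        if l > 0:
            W = weights[l]
            # Hidden layer: delta_i = s_i (1 - s_i) * sum_k delta_k W_ki
            delta = [s_i * (1.0 - s_i) * sum(delta[k] * W[k][i] for k in range(len(W)))
                     for i, s_i in enumerate(s_prev)]
    return grads

def numeric_grad(weights, x, t, l, i, j, eps=1e-6):
    """Central finite-difference estimate of dE/dW[l][i][j]."""
    W = [[row[:] for row in M] for M in weights]
    W[l][i][j] += eps
    e_plus = error(W, x, t)
    W[l][i][j] -= 2.0 * eps
    e_minus = error(W, x, t)
    return (e_plus - e_minus) / (2.0 * eps)

# Hypothetical 2-3-1 network and a single training pair
W_example = [[[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]],
             [[0.3, -0.1, 0.2]]]
x_example = [1.0, 0.5]
t_example = [1.0]
grads = backprop(W_example, x_example, t_example)
```

Comparing `grads[l][i][j]` with `numeric_grad(W_example, x_example, t_example, l, i, j)` for each weight is a common sanity check: the two should agree to several decimal places if the deltas are propagated correctly.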
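Supplementary sketch for the Training Procedures slide: the difference between batch and online learning is only in when the weight changes are applied. The example below is a simplified illustration under stated assumptions, not the course's implementation. It uses a single logistic output neuron (so only the output-layer rule ΔW = η δ s with δ = 2 s (1 − s)(t − s) is needed), a constant first input acting as a bias, and the OR function as toy data. The learning rate, epoch count, seed, and all names are hypothetical choices.

```python
import math
import random

def sigmoid(h):
    return 1.0 / (1.0 + math.exp(-h))

def output(w, x):
    """Output s of a single logistic neuron with weight vector w."""
    return sigmoid(sum(w_j * x_j for w_j, x_j in zip(w, x)))

def total_error(w, data):
    """Sum of squared errors over all training pairs."""
    return sum((output(w, x) - t) ** 2 for x, t in data)

def weight_change(w, x, t, eta):
    """Delta W_j = eta * delta * x_j, with delta = 2 s (1 - s) (t - s)."""
    s = output(w, x)
    delta = 2.0 * s * (1.0 - s) * (t - s)
    return [eta * delta * x_j for x_j in x]

def train_batch(w, data, eta, epochs):
    """Accumulate changes over the whole epoch, then update once."""
    w = list(w)
    for _ in range(epochs):
        acc = [0.0] * len(w)
        for x, t in data:
            dw = weight_change(w, x, t, eta)
            acc = [a + d for a, d in zip(acc, dw)]
        w = [w_j + a for w_j, a in zip(w, acc)]
    return w

def train_online(w, data, eta, epochs, seed=0):
    """Update after every training pair, in a fresh random order each epoch."""
    w = list(w)
    rng = random.Random(seed)
    pairs = list(data)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for x, t in pairs:
            dw = weight_change(w, x, t, eta)
            w = [w_j + d for w_j, d in zip(w, dw)]
    return w

# Toy data: OR function; the leading 1.0 is a constant bias input
data = [([1.0, 0.0, 0.0], 0.0), ([1.0, 0.0, 1.0], 1.0),
        ([1.0, 1.0, 0.0], 1.0), ([1.0, 1.0, 1.0], 1.0)]
w0 = [0.0, 0.0, 0.0]
w_batch = train_batch(w0, data, eta=0.5, epochs=200)
w_online = train_online(w0, data, eta=0.5, epochs=200)
```

Calling `total_error(w_batch, data)` and `total_error(w_online, data)` and comparing them with `total_error(w0, data)` shows both procedures reducing the error on this toy problem, in line with the slide's remark that the choice doesn't make much difference.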