Adaptive System S F C

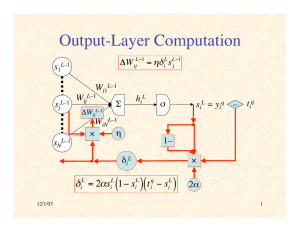

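Adaptive System

[Figure: block diagram of a system S with control parameters $P_1, \dots, P_k, \dots, P_m$, an evaluation function F (fitness, figure of merit), and a control algorithm C.]

11/26/03

Gradient

$\dfrac{\partial F}{\partial P_k}$ measures how $F$ is altered by variation of $P_k$.

$$\nabla F = \begin{pmatrix} \dfrac{\partial F}{\partial P_1} \\ \vdots \\ \dfrac{\partial F}{\partial P_k} \\ \vdots \\ \dfrac{\partial F}{\partial P_m} \end{pmatrix}$$

$\nabla F$ points in the direction of maximum increase in $F$.

Gradient Ascent on Fitness Surface

[Figure: fitness surface with high (+) and low (–) regions, the gradient $\nabla F$, and a gradient ascent path.]

Gradient Ascent by Discrete Steps

[Figure: fitness surface with high (+) and low (–) regions and $\nabla F$, ascended in discrete steps.]

Gradient Ascent is Local But Not Shortest

[Figure: fitness surface with high (+) and low (–) regions.]

Gradient Ascent Process

$$\dot{\mathbf{P}} = \eta \nabla F(\mathbf{P})$$

Change in fitness:

$$\dot{F} = \frac{dF}{dt} = \sum_{k=1}^{m} \frac{\partial F}{\partial P_k} \frac{dP_k}{dt} = \sum_{k=1}^{m} (\nabla F)_k \dot{P}_k$$

$$\dot{F} = \nabla F \cdot \dot{\mathbf{P}}$$

$$\dot{F} = \nabla F \cdot \eta \nabla F = \eta \|\nabla F\|^2 \ge 0$$

Therefore gradient ascent increases fitness (until it reaches zero gradient).

General Ascent in Fitness

Note that any parameter adjustment process $\mathbf{P}(t)$ will increase fitness provided:

$$0 < \dot{F} = \nabla F \cdot \dot{\mathbf{P}} = \|\nabla F\| \, \|\dot{\mathbf{P}}\| \cos\varphi$$

where $\varphi$ is the angle between $\nabla F$ and $\dot{\mathbf{P}}$.

Hence we need $\cos\varphi > 0$, or $\varphi < 90^\circ$.

General Ascent on Fitness Surface

[Figure: fitness surface with high (+) and low (–) regions and $\nabla F$.]

Fitness as Minimum Error

Suppose for $Q$ different inputs we have target outputs $\mathbf{t}^1, \dots, \mathbf{t}^Q$.

Suppose for parameters $\mathbf{P}$ the corresponding actual outputs are $\mathbf{y}^1, \dots, \mathbf{y}^Q$.

Suppose $D(\mathbf{t}, \mathbf{y}) \in [0, \infty)$ measures the difference between target & actual outputs.

Let $E^q = D(\mathbf{t}^q, \mathbf{y}^q)$ be the error on the $q$th sample.

$$\text{Let } F(\mathbf{P}) = -\sum_{q=1}^{Q} E^q(\mathbf{P}) = -\sum_{q=1}^{Q} D[\mathbf{t}^q, \mathbf{y}^q(\mathbf{P})]$$

Gradient of Fitness

$$\nabla F = \nabla\left(-\sum_q E^q\right) = -\sum_q \nabla E^q$$

$$\begin{aligned}
\frac{\partial E^q}{\partial P_k} &= \frac{\partial}{\partial P_k} D(\mathbf{t}^q, \mathbf{y}^q) = \sum_j \frac{\partial D(\mathbf{t}^q, \mathbf{y}^q)}{\partial y_j^q} \frac{\partial y_j^q}{\partial P_k} \\
&= \frac{d D(\mathbf{t}^q, \mathbf{y}^q)}{d \mathbf{y}^q} \cdot \frac{\partial \mathbf{y}^q}{\partial P_k} \\
&= \nabla_{\mathbf{y}^q} D(\mathbf{t}^q, \mathbf{y}^q) \cdot \frac{\partial \mathbf{y}^q}{\partial P_k}
\end{aligned}$$

Jacobian Matrix

$$\text{Define Jacobian matrix } \mathbf{J}^q = \begin{pmatrix} \dfrac{\partial y_1^q}{\partial P_1} & \cdots & \dfrac{\partial y_1^q}{\partial P_m} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial y_n^q}{\partial P_1} & \cdots & \dfrac{\partial y_n^q}{\partial P_m} \end{pmatrix}$$

Note $\mathbf{J}^q \in \Re^{n \times m}$ and $\nabla D(\mathbf{t}^q, \mathbf{y}^q) \in \Re^{n \times 1}$.

$$\text{Since } (\nabla E^q)_k = \frac{\partial E^q}{\partial P_k} = \sum_j \frac{\partial y_j^q}{\partial P_k} \frac{\partial D(\mathbf{t}^q, \mathbf{y}^q)}{\partial y_j^q},$$

$$\therefore \nabla E^q = (\mathbf{J}^q)^{\mathsf{T}} \nabla D(\mathbf{t}^q, \mathbf{y}^q)$$

Derivative of Squared Euclidean Distance

Suppose $D(\mathbf{t}, \mathbf{y}) = \|\mathbf{t} - \mathbf{y}\|^2 = \sum_i (t_i - y_i)^2$.

$$\begin{aligned}
\frac{\partial D(\mathbf{t}, \mathbf{y})}{\partial y_j} &= \frac{\partial}{\partial y_j} \sum_i (t_i - y_i)^2 = \sum_i \frac{\partial (t_i - y_i)^2}{\partial y_j} \\
&= \frac{d (t_j - y_j)^2}{d y_j} = -2(t_j - y_j)
\end{aligned}$$

$$\therefore \frac{d D(\mathbf{t}, \mathbf{y})}{d \mathbf{y}} = 2(\mathbf{y} - \mathbf{t})$$

Gradient of Error on qth Input

$$\begin{aligned}
\frac{\partial E^q}{\partial P_k} &= \frac{d D(\mathbf{t}^q, \mathbf{y}^q)}{d \mathbf{y}^q} \cdot \frac{\partial \mathbf{y}^q}{\partial P_k} \\
&= 2(\mathbf{y}^q - \mathbf{t}^q) \cdot \frac{\partial \mathbf{y}^q}{\partial P_k} \\
&= 2 \sum_j (y_j^q - t_j^q) \frac{\partial y_j^q}{\partial P_k}
\end{aligned}$$

Recap

$$\dot{\mathbf{P}} = \eta \sum_q (\mathbf{J}^q)^{\mathsf{T}} (\mathbf{t}^q - \mathbf{y}^q)$$

(The constant factor 2 from the squared-error gradient is absorbed into $\eta$.)

To know how to decrease the differences between actual & desired outputs, we need to know the elements of the Jacobian, $\partial y_j^q / \partial P_k$, which say how the $j$th output varies with the $k$th parameter (given the $q$th input).

The Jacobian depends on the specific form of the system, in this case, a feedforward neural network.

Multilayer Notation

[Figure: layered network $\mathbf{x}^q \to \mathbf{s}^1 \to \mathbf{W}^1 \to \mathbf{s}^2 \to \mathbf{W}^2 \to \cdots \to \mathbf{W}^{L-2} \to \mathbf{s}^{L-1} \to \mathbf{W}^{L-1} \to \mathbf{s}^L \to \mathbf{y}^q$.]

Notation

- $L$ layers of neurons labeled $1, \dots, L$
- $N_l$ neurons in layer $l$
- $\mathbf{s}^l$ = vector of outputs from neurons in layer $l$
- input layer $\mathbf{s}^1 = \mathbf{x}^q$ (the input pattern)
- output layer $\mathbf{s}^L = \mathbf{y}^q$ (the actual output)
- $\mathbf{W}^l$ = weights between layers $l$ and $l+1$
- Problem: find out how outputs $y_i^q$ vary with weights $W_{jk}^l$ ($l = 1, \dots, L-1$)

Typical Neuron

[Figure: neuron $i$ in layer $l$. Inputs $s_1^{l-1}, \dots, s_j^{l-1}, \dots, s_N^{l-1}$ arrive through weights $W_{i1}^{l-1}, \dots, W_{ij}^{l-1}, \dots, W_{iN}^{l-1}$, are summed ($\Sigma$) to give $h_i^l$, and pass through $\sigma$ to give the output $s_i^l$.]

Error Back-Propagation

We will compute $\dfrac{\partial E^q}{\partial W_{ij}^l}$ starting with the last layer ($l = L-1$) and working back to earlier layers ($l = L-2, \dots, 1$).

Delta Values

Convenient to break derivatives by chain rule:

$$\frac{\partial E^q}{\partial W_{ij}^{l-1}} = \frac{\partial E^q}{\partial h_i^l} \frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

$$\text{Let } \delta_i^l = \frac{\partial E^q}{\partial h_i^l}$$

$$\text{So } \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l \frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

Output-Layer Neuron

[Figure: output neuron $i$. Inputs $s_1^{L-1}, \dots, s_j^{L-1}, \dots, s_N^{L-1}$ arrive through weights $W_{i1}^{L-1}, \dots, W_{ij}^{L-1}, \dots, W_{iN}^{L-1}$, are summed ($\Sigma$) to give $h_i^L$, and pass through $\sigma$ to give $s_i^L = y_i^q$, which is compared with the target $t_i^q$ to give $E^q$.]

Output-Layer Derivatives (1)

$$\begin{aligned}
\delta_i^L &= \frac{\partial E^q}{\partial h_i^L} = \frac{\partial}{\partial h_i^L} \sum_k (s_k^L - t_k^q)^2 \\
&= \frac{d (s_i^L - t_i^q)^2}{d h_i^L} = 2(s_i^L - t_i^q) \frac{d s_i^L}{d h_i^L} \\
&= 2(s_i^L - t_i^q)\, \sigma'(h_i^L)
\end{aligned}$$

Output-Layer Derivatives (2)

$$\frac{\partial h_i^L}{\partial W_{ij}^{L-1}} = \frac{\partial}{\partial W_{ij}^{L-1}} \sum_k W_{ik}^{L-1} s_k^{L-1} = s_j^{L-1}$$

$$\therefore \frac{\partial E^q}{\partial W_{ij}^{L-1}} = \delta_i^L s_j^{L-1}, \quad \text{where } \delta_i^L = 2(s_i^L - t_i^q)\, \sigma'(h_i^L)$$

Hidden-Layer Neuron

[Figure: hidden neuron $i$ in layer $l$. Inputs $s_1^{l-1}, \dots, s_j^{l-1}, \dots, s_N^{l-1}$ arrive through weights $W_{i1}^{l-1}, \dots, W_{ij}^{l-1}, \dots, W_{iN}^{l-1}$, are summed ($\Sigma$) to give $h_i^l$, and pass through $\sigma$ to give $s_i^l$. This output feeds the neurons $s_1^{l+1}, \dots, s_k^{l+1}, \dots, s_N^{l+1}$ of the next layer through weights $W_{1i}^l, \dots, W_{ki}^l, \dots, W_{Ni}^l$, which in turn determine $E^q$.]

Hidden-Layer Derivatives (1)

$$\text{Recall } \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l \frac{\partial h_i^l}{\partial W_{ij}^{l-1}}$$

$$\delta_i^l = \frac{\partial E^q}{\partial h_i^l} = \sum_k \frac{\partial E^q}{\partial h_k^{l+1}} \frac{\partial h_k^{l+1}}{\partial h_i^l} = \sum_k \delta_k^{l+1} \frac{\partial h_k^{l+1}}{\partial h_i^l}$$

$$\frac{\partial h_k^{l+1}}{\partial h_i^l} = \frac{\partial \sum_m W_{km}^l s_m^l}{\partial h_i^l} = \frac{\partial W_{ki}^l s_i^l}{\partial h_i^l} = W_{ki}^l \frac{d \sigma(h_i^l)}{d h_i^l} = W_{ki}^l\, \sigma'(h_i^l)$$

$$\therefore \delta_i^l = \sum_k \delta_k^{l+1} W_{ki}^l\, \sigma'(h_i^l) = \sigma'(h_i^l) \sum_k \delta_k^{l+1} W_{ki}^l$$

Hidden-Layer Derivatives (2)

$$\frac{\partial h_i^l}{\partial W_{ij}^{l-1}} = \frac{\partial}{\partial W_{ij}^{l-1}} \sum_k W_{ik}^{l-1} s_k^{l-1} = \frac{d\, W_{ij}^{l-1} s_j^{l-1}}{d W_{ij}^{l-1}} = s_j^{l-1}$$

$$\therefore \frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l s_j^{l-1}, \quad \text{where } \delta_i^l = \sigma'(h_i^l) \sum_k \delta_k^{l+1} W_{ki}^l$$

Derivative of Sigmoid

Suppose $s = \sigma(h) = \dfrac{1}{1 + \exp(-\alpha h)}$ (logistic sigmoid).

$$\begin{aligned}
D_h s &= D_h [1 + \exp(-\alpha h)]^{-1} = -[1 + \exp(-\alpha h)]^{-2}\, D_h (1 + e^{-\alpha h}) \\
&= -(1 + e^{-\alpha h})^{-2} (-\alpha e^{-\alpha h}) = \alpha \frac{e^{-\alpha h}}{(1 + e^{-\alpha h})^2} \\
&= \alpha \frac{1}{1 + e^{-\alpha h}} \cdot \frac{e^{-\alpha h}}{1 + e^{-\alpha h}} = \alpha s \left( \frac{1 + e^{-\alpha h}}{1 + e^{-\alpha h}} - \frac{1}{1 + e^{-\alpha h}} \right) \\
&= \alpha s (1 - s)
\end{aligned}$$

Summary of Back-Propagation Algorithm

Output layer:

$$\delta_i^L = 2 \alpha s_i^L (1 - s_i^L)(s_i^L - t_i^q)$$

$$\frac{\partial E^q}{\partial W_{ij}^{L-1}} = \delta_i^L s_j^{L-1}$$

Hidden layers:

$$\delta_i^l = \alpha s_i^l (1 - s_i^l) \sum_k \delta_k^{l+1} W_{ki}^l$$

$$\frac{\partial E^q}{\partial W_{ij}^{l-1}} = \delta_i^l s_j^{l-1}$$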
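
The summary formulas can be tried out numerically. The following is a minimal sketch in plain Python, not code from the slides. It assumes a network without bias terms, the logistic sigmoid with gain `ALPHA = 1.0`, and the squared Euclidean error used above. The function names (`forward`, `backprop`, `numeric_grad`, `train_step`), the 2-3-1 network, the toy samples, and the step size are all hypothetical choices made for illustration. `numeric_grad` is a central finite-difference estimate that can be compared against the back-propagated derivatives.

```python
import math

ALPHA = 1.0  # sigmoid gain (alpha in the slides); the value 1.0 is an assumption


def sigmoid(h):
    """Logistic sigmoid s = 1 / (1 + exp(-alpha * h))."""
    return 1.0 / (1.0 + math.exp(-ALPHA * h))


def forward(weights, x):
    """Return all layer outputs [s^1, ..., s^L], with s^1 = x (no biases)."""
    layers = [list(x)]
    for W in weights:  # W[i][j] links unit j of one layer to unit i of the next
        prev = layers[-1]
        layers.append([sigmoid(sum(w * s for w, s in zip(row, prev))) for row in W])
    return layers


def error(weights, x, t):
    """Squared Euclidean error E^q = ||t - y||^2 for one sample."""
    y = forward(weights, x)[-1]
    return sum((yi - ti) ** 2 for yi, ti in zip(y, t))


def backprop(weights, x, t):
    """Return dE^q/dW for every weight, in the same nested shape as weights."""
    layers = forward(weights, x)
    y = layers[-1]
    # Output layer: delta_i = 2 * alpha * s_i * (1 - s_i) * (s_i - t_i)
    delta = [2.0 * ALPHA * yi * (1.0 - yi) * (yi - ti) for yi, ti in zip(y, t)]
    grads = [None] * len(weights)
    for l in range(len(weights) - 1, -1, -1):
        prev = layers[l]
        # dE/dW_ij = delta_i * s_j, with s_j taken from the layer below
        grads[l] = [[d * s for s in prev] for d in delta]
        if l > 0:  # propagate the deltas back to the hidden layer below
            W = weights[l]
            delta = [
                ALPHA * prev[i] * (1.0 - prev[i])
                * sum(delta[k] * W[k][i] for k in range(len(W)))
                for i in range(len(prev))
            ]
    return grads


def numeric_grad(weights, x, t, l, i, j, eps=1e-6):
    """Central finite-difference estimate of dE^q/dW[l][i][j], for checking."""
    saved = weights[l][i][j]
    weights[l][i][j] = saved + eps
    e_plus = error(weights, x, t)
    weights[l][i][j] = saved - eps
    e_minus = error(weights, x, t)
    weights[l][i][j] = saved
    return (e_plus - e_minus) / (2.0 * eps)


def train_step(weights, samples, eta):
    """One batch step of gradient ascent on F = -sum_q E^q (weights change in place)."""
    batch = [backprop(weights, x, t) for x, t in samples]
    for l, W in enumerate(weights):
        for i, row in enumerate(W):
            for j in range(len(row)):
                row[j] -= eta * sum(g[l][i][j] for g in batch)


# Hypothetical 2-3-1 network and toy data, chosen only for illustration.
weights = [
    [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]],  # W^1: 3 hidden units x 2 inputs
    [[0.3, -0.1, 0.2]],                      # W^2: 1 output unit x 3 hidden units
]
samples = [([1.0, 0.0], [1.0]), ([0.0, 1.0], [0.0]), ([1.0, 1.0], [1.0])]

before = sum(error(weights, x, t) for x, t in samples)
for _ in range(200):
    train_step(weights, samples, eta=0.1)
after = sum(error(weights, x, t) for x, t in samples)
print("total error before:", before, "after:", after)
```

Ascending the fitness $F = -\sum_q E^q$ is the same as descending the total error, which is why `train_step` subtracts the summed error gradient. The step size 0.1 is an arbitrary small value; larger steps are not guaranteed to increase fitness, since the ascent argument above holds for the continuous-time process.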