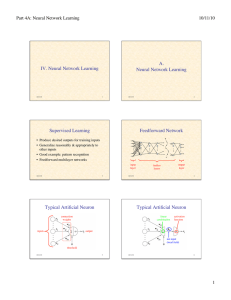

A. II. Neural Network Learning Neural Network Learning Supervised Learning

advertisement

Part 4A: Neural Network Learning

3/11/15

A.

Neural Network Learning

II. Neural Network Learning

1

3/11/15

input

layer

3/11/15

3

...

output

layer

hidden

layers

3/11/15

Typical Artificial Neuron

4

Typical Artificial Neuron

connection

weights

inputs

...

...

• Produce desired outputs for training inputs

• Generalize reasonably & appropriately to

other inputs

• Good example: pattern recognition

• Feedforward multilayer networks

...

Feedforward Network

...

Supervised Learning

2

...

3/11/15

linear

combination

activation

function

output

net input

(local field)

threshold

3/11/15

5

3/11/15

6

1

Part 4A: Neural Network Learning

3/11/15

Equations

Single-Layer Perceptron

...

s"i = σ ( hi )

Neuron output:

...

# n

&

hi = %% ∑ w ij s j (( − θ

$ j=1

'

h = Ws − θ

Net input:

s" = σ (h)

€

3/11/15

7

3/11/15

8

€

Single Layer Perceptron

Equations

Variables

Binary threshold activation function :

%1, if h > 0

σ ( h ) = Θ( h ) = &

'0, if h ≤ 0

x1

w1

xj

h

Σ

wj

y

Θ

wn

$1, if ∑ w x > θ

j j

j

Hence, y = %

&0, otherwise

€

xn

$1, if w ⋅ x > θ

=%

&0, if w ⋅ x ≤ θ

θ

3/11/15

9

3/11/15

10

€

2D Weight Vector

N-Dimensional Weight Vector

w2

w ⋅ x = w x cos φ

€

x

–

v

cos φ =

x

+

+

w

w

separating

hyperplane

w⋅ x = w v

φ

€

€

v

w⋅ x > θ

⇔ w v >θ

w1

–

θ

w

⇔v >θ w

3/11/15

€

normal

vector

11

3/11/15

12

€

2

Part 4A: Neural Network Learning

3/11/15

Treating Threshold as Weight

Goal of Perceptron Learning

#n

&

h = %%∑ w j x j (( − θ

$ j=1

'

• Suppose we have training patterns x1, x2,

…, xP with corresponding desired outputs

y1, y2, …, yP

• where xp ∈ {0, 1}n, yp ∈ {0, 1}

• We want to find w, θ such that

yp = Θ(w∙xp – θ) for p = 1, …, P

3/11/15

x1

w1

xj

xj

14

#θ&

% (

w

˜ =% 1(

w

% ! (

% (

$ wn '

wj

wn

xn

= −θ + ∑ w j x j

j=1

h

Σ

Θ

y

# −1&

% p(

x1

x˜ p = % (

%!(

% p(

$ xn '

€

Let x 0 = −1 and w 0 = θ

n

j=1

˜ ⋅ x˜ p ), p = 1,…,P

We want y p = Θ( w

n

˜ ⋅ x˜

h = w 0 x 0 + ∑ w j x j =∑ w j x j = w

j= 0

€

3/11/15

€

θ

Augmented Vectors

n

θ = w0

y

Θ

3/11/15

#n

&

h = %%∑ w j x j (( − θ

$ j=1

'

w1

wj

wn

Treating Threshold as Weight

x1

j=1

h

Σ

xn

13

x0 = –1

n

= −θ + ∑ w j x j

€

15

€

3/11/15

16

€

€

Reformulation as Positive

Examples

Adjustment of Weight Vector

We have positive (y p = 1) and negative (y p = 0) examples

z5

z1

˜ ⋅ x˜ p > 0 for positive, w

˜ ⋅ x˜ p ≤ 0 for negative

Want w

€

€

€

€

z9

z10 11

z

z6

p

p

p

z2

p

Let z = x˜ for positive, z = −x˜ for negative

z3

z8

z4

z7

˜ ⋅ z p ≥ 0, for p = 1,…,P

Want w

Hyperplane through origin with all z p on one side

3/11/15

17

3/11/15

18

€

3

Part 4A: Neural Network Learning

3/11/15

Outline of

Perceptron Learning Algorithm

Weight Adjustment

1. initialize weight vector randomly

zp

2. until all patterns classified correctly, do:

€ €

€

€

€

a) for p = 1, …, P do:

1) if zp classified correctly, do nothing

€

2) else adjust weight vector to be closer to correct

classification

3/11/15

19

Improvement in Performance

20

• If there is a set of weights that will solve the

problem,

• then the PLA will eventually find it

• (for a sufficiently small learning rate)

• Note: only applies if positive & negative

examples are linearly separable

! ⋅ z p + ηz p ⋅ z p

=w

2

! ⋅ zp

>w

3/11/15

3/11/15

Perceptron Learning Theorem

! ! ⋅ z p = (w

! + ηz p ) ⋅ z p

w

! ⋅ zp +η zp

=w

p

˜ "" ηz

w

ηz p

˜"

w

˜

w

21

3/11/15

22

Classification Power of

Multilayer Perceptrons

• Perceptrons can function as logic gates

• Therefore MLP can form intersections,

unions, differences of linearly-separable

regions

• Classes can be arbitrary hyperpolyhedra

• Minsky & Papert criticism of perceptrons

• No one succeeded in developing a MLP

learning algorithm

NetLogo Simulation of

Perceptron Learning

Run Perceptron-Geometry.nlogo

3/11/15

23

3/11/15

24

4

Part 4A: Neural Network Learning

3/11/15

Credit Assignment Problem

Hyperpolyhedral Classes

3/11/15

25

...

...

...

...

...

input

layer

...

...

How do we adjust the weights of the hidden layers?

Desired

output

output

layer

hidden

layers

3/11/15

26

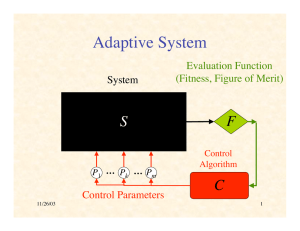

Adaptive System

NetLogo Demonstration of

Back-Propagation Learning

System

Evaluation Function

(Fitness, Figure of Merit)

S

F

Run Artificial Neural Net.nlogo

P1 … Pk … Pm

Control

Algorithm

Control Parameters

3/11/15

27

C

3/11/15

28

Gradient Ascent

on Fitness Surface

Gradient

∂F

measures how F is altered by variation of Pk

∂Pk

∇F

$ ∂F

'

& ∂P1 )

& ! )

&

)

∇F = & ∂F ∂P )

k

& ! )

&

)

&∂F

)

% ∂Pm (

€

+

gradient ascent

–

∇F points in direction of maximum local increase in F

3/11/15

€

29

3/11/15

30

€

5

Part 4A: Neural Network Learning

3/11/15

Gradient Ascent

by Discrete Steps

Gradient Ascent is Local

But Not Shortest

∇F

+

+

–

3/11/15

–

31

3/11/15

Gradient Ascent Process

General Ascent in Fitness

P˙ = η∇F (P)

Note that any adaptive process P( t ) will increase

Change in fitness :

m ∂F d P

m

dF

k

F˙ =

=∑

= ∑ (∇F ) k P˙k

k=1

k=1

∂Pk d t

€d t

˙

˙

F = ∇F ⋅ P

F˙ = ∇F ⋅ η∇F = η ∇F

fitness provided :

0 < F˙ = ∇F ⋅ P˙ = ∇F P˙ cosϕ

where ϕ is angle between ∇F and P˙

Hence we need cos ϕ > 0

≥0

€

€

€

2

32

or ϕ < 90 !

Therefore gradient ascent increases fitness

(until reaches 0 gradient)

3/11/15

33

3/11/15

34

€

General Ascent

on Fitness Surface

Fitness as Minimum Error

Suppose for Q different inputs we have target outputs t1,…,t Q

Suppose for parameters P the corresponding actual outputs

+

∇F

€

are y1,…,y Q

Suppose D(t,y ) ∈ [0,∞) measures difference between

–

€

target & actual outputs

Let E q = D(t q ,y q ) be error on qth sample

€

3/11/15

35

€

Q

Q

Let F (P) = −∑ E q (P) = −∑ D[t q ,y q (P)]

q=1

3/11/15

q=1

36

€

6

Part 4A: Neural Network Learning

3/11/15

Jacobian Matrix

Gradient of Fitness

#∂y1q

&

∂y1q

%

∂P1 !

∂Pm (

%

Define Jacobian matrix J =

"

#

" (

q

%∂y q

(

∂

y

n

n

%

∂P1 !

∂Pm ('

$

%

(

∇F = ∇'' −∑ E q ** = −∑ ∇E q

& q

)

q

q

∂D(t q ,y q ) ∂y qj

∂E q

∂

=

D(t q ,y q ) = ∑

∂Pk ∂Pk

∂y qj

∂Pk

j

€

Note J q ∈ ℜ n×m and ∇D(t q ,y q ) ∈ ℜ n×1

d D(t q ,y q ) ∂y q

⋅

d yq

∂Pk

q

q

q

= ∇ D t ,y ⋅ ∂y

€

=

€

€

yq

3/11/15

(

)

k

€

T

37

€

2

2

i

i

i

∂D(t − y ) ∂

∂ (t − y )

=

∑ (ti − y i )2 = ∑ i∂y i

∂y j

∂y j i

j

i

€

€

3/11/15

38

€

Derivative of Squared Euclidean

Distance

Suppose D(t,y ) = t − y = ∑ ( t − y )

=

q

q

∂y q ∂D(t ,y )

∂E q

=∑ j

,

q

∂Pk

∂y j

j ∂Pk

∴∇E q = ( J q ) ∇D(t q ,y q )

∂Pk

€

€

Since (∇E q ) =

d( t j − y j )

dyj

Gradient of Error on qth Input

d D(t ,y ) ∂y

∂E

=

⋅

q

q

∂Pk

dy

q

q

q

∂Pk

∂y q

= 2( y q − t q ) ⋅

∂Pk

2

= 2∑ ( y qj − t qj )

2

j

= −2( t j − y j )

∂y qj

∂Pk

T

d D(t,y )

= 2( y − t )

dy

€

3/11/15

∇E q = 2( J q ) ( y q − t q )

∴

€

39

3/11/15

40

€

€

Recap

P˙ = η∑ ( J ) (t

q T

q

q

Multilayer Notation

− yq )

To know how to decrease the differences between

actual & desired outputs,

€

we need to know elements of Jacobian,

xq

∂y qj

∂Pk ,

which says how jth output varies with kth parameter

(given the qth input)

W1

s1

W2

s2

WL–2 WL–1

sL–1

yq

sL

The Jacobian depends on the specific form of the system,

in this case, a feedforward neural network

€

3/11/15

41

3/11/15

42

7

Part 4A: Neural Network Learning

3/11/15

Typical Neuron

Notation

•

•

•

•

•

•

•

L layers of neurons labeled 1, …, L

Nl neurons in layer l

sl = vector of outputs from neurons in layer l

input layer s1 = xq (the input pattern)

output layer sL = yq (the actual output)

Wl = weights between layers l and l+1

Problem: find out how outputs yiq vary with

weights Wjkl (l = 1, …, L–1)

3/11/15

s1l–1

sjl–1

Wi1l–1

Wijl–1

WiN

hil

Σ

sil

σ

l–1

sNl–1

43

3/11/15

44

Error Back-Propagation

Delta Values

Convenient to break derivatives by chain rule :

We will compute

∂E q

starting with last layer (l = L −1)

∂W ijl

∂E q

∂E q ∂hil

=

∂W ijl−1 ∂hil ∂W ijl−1

and working back to earlier layers (l = L − 2,…,1)

Let δil =

€

So

3/11/15

45

€

δiL =

hiL

σ

tiq

siL = yiq

=

WiNL–1

sNL–1

3/11/15

46

Output-Layer Derivatives (1)

s1L–1

Wi1L–1

WijL–1

Σ

∂E q

∂hil

= δil

l−1

∂W ij

∂W ijl−1

3/11/15

Output-Layer Neuron

sjL–1

∂E q

∂hil

Eq

2

∂E q

∂

=

∑ (skL − tkq )

∂hiL ∂hiL k

d( siL − t iq )

dh

L

i

2

= 2( siL − t iq )

d siL

d hiL

= 2( siL − t iq )σ &( hiL )

47

3/11/15

48

€

8

Part 4A: Neural Network Learning

3/11/15

Hidden-Layer Neuron

Output-Layer Derivatives (2)

∂hiL

∂

=

∑W ikL−1skL−1 = sL−1

j

∂W ijL−1 ∂W ijL−1 k

∂E q

∴

= δiL sL−1

j

∂W ijL−1

W1il

Wi1l–1

Wijl–1

sjl–1

€

s1l

s1l–1

WiN

Σ

3/11/15

49

sil

σ

Wkil

skl+1

Eq

l–1

WNil

sNl–1

where δiL = 2( siL − t iq )σ &( hiL )

hil

s1l+1

sNl+1

sNl

3/11/15

50

€

Hidden-Layer Derivatives (1)

Recall

Hidden-Layer Derivatives (2)

∂E q

∂hil

= δil

l−1

∂W ij

∂W ijl−1

l−1 l−1

dW s

∂hil

∂

=

∑W ikl−1skl−1 = dWij l−1j = sl−1j

∂W ijl−1 ∂W ijl−1 k

ij

∂E q

∂E q ∂h l +1

∂h l +1

δ = l = ∑ l +1 k l = ∑δkl +1 k l

∂h i

∂

h

∂

h

∂h i

k

i

k

k

l

i

€

l l

d σ ( hil )

∂hkl +1 ∂ ∑m W km sm ∂W kil sil

=

=

= W kil

= W kilσ %( hil )

l

l

l

∂h i

∂h i

∂h i

d hil

€

∴

€

where δil = σ &( hil )∑δkl +1W kil

∴ δil = ∑δkl +1W kilσ $( hil ) = σ $( hil )∑δkl +1W kil

€

k

∂E q

= δil sl−1

j

∂W ijl−1

k

k

3/11/15

51

3/11/15

52

€

€

Summary of Back-Propagation

Algorithm

Derivative of Sigmoid

Suppose s = σ ( h ) =

1

(logistic sigmoid)

1+ exp (−α h )

−1

Output layer : δiL = 2αsiL (1− siL )( siL − t iq )

−2

∂E q

= δiL sL−1

j

∂W ijL−1

Dh s = Dh [1+ exp(−αh )] = −[1+ exp(−αh )] Dh (1+ e−αh )

= −(1+ e−αh )

−2

(−αe ) = α

−αh

e

−αh

−αh 2

(1+ e )

Hidden layers : δil = αsil (1− sil )∑δkl +1W kil

$ 1+ e−αh

1

e−αh

1 '

=α

= αs&

−

)

−αh

1+ e−αh 1+ e−αh

1+ e−αh (

% 1+ e

k

€

∂E q

= δil sl−1

j

∂W ijl−1

= αs(1− s)

3/11/15

€

53

3/11/15

54

€

9

Part 4A: Neural Network Learning

3/11/15

Hidden-Layer Computation

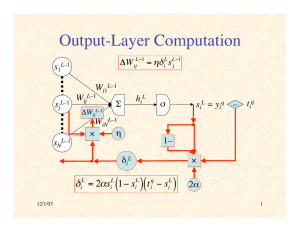

Output-Layer Computation

ΔW

s1L–1

L−1

ij

L L−1

i j

= ηδ s

ΔW ijl−1 = ηδil sl−1

j

s1l–1

W1il

L–1

sjL–1

Wi1

WijL–1

€

Σ

hiL

σ

∆WijL–1

WiNL–1

η

×

sNL–1

siL

–

1–

sNl–1

L

i

)( t

q

i

L

i

−s

)

hil

Σ

sil

σ

Wkil

1–

×

δil = αsil (1− sil )∑δkl +1W kil

3/11/15

55

€

k

3/11/15

WNil

Eq

skl+1

δkl+1

×

δil

2α

δ1l+1

l–1

WiNl–1

×

η

×

δ = 2αs (1− s

L

i

Wi1

W l–1

sjl–1 € ij

∆W l–1

tiq

ij

δiL

L

i

=

yiq

s1l+1

sNl+1

Σ

δNl+1

α

56

€

Training Procedures

Summation of Error Surfaces

• Batch Learning

– on each epoch (pass through all the training pairs),

– weight changes for all patterns accumulated

– weight matrices updated at end of epoch

– accurate computation of gradient

E

E1

E2

• Online Learning

– weight are updated after back-prop of each training pair

– usually randomize order for each epoch

– approximation of gradient

• Doesn’t make much difference

3/11/15

57

3/11/15

58

Gradient Computation

in Batch Learning

Gradient Computation

in Online Learning

E

E

E1

E1

E2

3/11/15

E2

59

3/11/15

60

10

Part 4A: Neural Network Learning

3/11/15

Testing Generalization

Problem of Rote Learning

error

error on

test data

Available

Data

Training

Data

Domain

error on

training

data

Test

Data

epoch

stop training here

3/11/15

61

3/11/15

A Few Random Tips

Improving Generalization

Training

Data

Available

Data

62

• Too few neurons and the ANN may not be able to

decrease the error enough

• Too many neurons can lead to rote learning

• Preprocess data to:

– standardize

– eliminate irrelevant information

– capture invariances

– keep relevant information

Domain

Test Data

Validation Data

• If stuck in local min., restart with different random

weights

3/11/15

63

3/11/15

64

Beyond Back-Propagation

• Adaptive Learning Rate

• Adaptive Architecture

Run Example BP Learning

– Add/delete hidden neurons

– Add/delete hidden layers

• Radial Basis Function Networks

• Recurrent BP

• Etc., etc., etc.…

3/11/15

65

3/11/15

66

11

Part 4A: Neural Network Learning

3/11/15

Deep Belief Networks

Restricted Boltzmann Machine

• Goal: hidden units

become model of input

domain

• Should capture

statistics of input

• Evaluate by testing its

ability to reproduce

input statistics

• Change weights to

decrease difference

• Inspired by hierarchical representations in

mammalian sensory systems

• Use “deep” (multilayer) feed-forward nets

• Layers self-organize to represent input at

progressively more abstract, task-relevant levels

• Supervised training (e.g., BP) can be used to tune

network performance.

• Each layer is a Restricted Boltzmann Machine

3/11/15

67

3/11/15

Unsupervised RBM Learning

• Stochastic binary units

• Assume bias units

x0 = y0 = 1

• Set yi with probability

"

%

σ $$∑Wij x j ''

#

&

j

• Set xj/ with probability

"

%

σ$ W y '

#

∑

i

ij i

&

'

j

• After several cycles of

sampling, update

weights based on

statistics:

(

ΔWij = η yi x j − yi#x#j

3/11/15

69

)

• Present inputs and do RBM learning with

first hidden layer to develop model

• When converged, do RBM learning

between first and second hidden layers to

develop higher-level model

• Continue until all weight layers trained

• May further train with BP or other

supervised learning algorithms

3/11/15

70

Can ANNs Exceed the “Turing Limit”?

What is the Power of

Artificial Neural Networks?

• There are many results, which depend sensitively on

assumptions; for example:

• Finite NNs with real-valued weights have super-Turing

power (Siegelmann & Sontag ‘94)

• Recurrent nets with Gaussian noise have sub-Turing power

(Maass & Sontag ‘99)

• Finite recurrent nets with real weights can recognize all

languages, and thus are super-Turing (Siegelmann ‘99)

• Stochastic nets with rational weights have super-Turing

power (but only P/POLY, BPP/log*) (Siegelmann ‘99)

• But computing classes of functions is not a very relevant

way to evaluate the capabilities of neural computation

• With respect to Turing machines?

• As function approximators?

3/11/15

68

Training a DBN Network

• Set yi/ with probability

#

&

σ %%∑Wij x!j ((

$

(fig. from wikipedia)

71

3/11/15

72

12

Part 4A: Neural Network Learning

3/11/15

A Universal Approximation Theorem

Suppose f is a continuous function on [0,1]

Suppose σ is a nonconstant, bounded,

monotone increasing real function on ℜ.

€

One Hidden Layer is Sufficient

n

• Conclusion: One hidden layer is sufficient

to approximate any continuous function

arbitrarily closely

For any ε > 0, there is an m such that

∃a ∈ ℜ m , b ∈ ℜ n , W ∈ ℜ m×n such that if

€

$ n

'

F ( x1,…, x n ) = ∑ aiσ && ∑W ij x j + b j ))

i=1

% j=1

(

1

m

€

x1

[i.e., F (x) = a ⋅ σ (Wx + b)]

€then F ( x ) − f ( x ) < ε for all x ∈ [0,1]

3/11/15

€

xn

n

(see, e.g., Haykin, N.Nets 2/e, 208–9)

b1

73

3/11/15

Σσ

Σσ

a1

a2

Σ

am

Wmn

Σσ

74

€

The Golden Rule of Neural Nets

Neural Networks are the

second-best way

to do everything!

3/11/15

IVB

75

13