May 22, 1998.

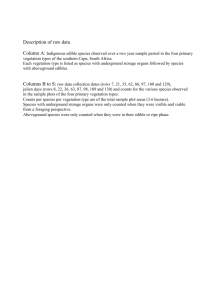
