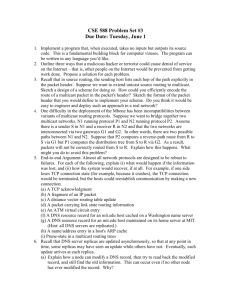

DE S NET

advertisement