Constrained Least Squares

Authors: G.H. Golub and C.F. Van Loan


UNIVERSITETET

I OSLO

Chapter 12 in Matrix Computations, 3rd Edition, 1996, pp.580-587

INSTITUTT FOR INFORMATIKK

CICN may05/1

Background

The least squares problem:

$$\min_x \|Ax - b\|_2$$

Sometimes, we want $x$ to be chosen from some proper subset $S \subset \mathbb{R}^n$.

Example: $S = \{x \in \mathbb{R}^n \ \text{s.t.}\ \|x\|_2 = 1\}$

Such problems can be solved using the QR factorization and the singular value decomposition (SVD).

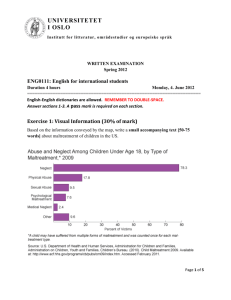

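Least Squares with a Quadratic Inequality Constraint (LSQI)

General problem:

$$\min_x \|Ax - b\|_2 \quad \text{s.t.} \quad \|Bx - d\|_2 \le \alpha$$

where:

$A \in \mathbb{R}^{m \times n}$ ($m \ge n$), $b \in \mathbb{R}^m$, $B \in \mathbb{R}^{p \times n}$, $d \in \mathbb{R}^p$, $\alpha \ge 0$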

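Assume the generalized SVD of the matrices $A$ and $B$ is given as:

$$U^T A X = D_A = \mathrm{diag}(\alpha_1, \ldots, \alpha_n), \quad U^T U = I_m$$

$$V^T B X = D_B = \mathrm{diag}(\beta_1, \ldots, \beta_q), \quad V^T V = I_p, \quad q = \min\{p, n\}$$

Assume also the following definitions:

$$\tilde{b} \equiv U^T b, \quad \tilde{d} \equiv V^T d, \quad y \equiv X^{-1} x$$

Then the problem becomes:

$$\min_y \|D_A y - \tilde{b}\|_2 \quad \text{s.t.} \quad \|D_B y - \tilde{d}\|_2 \le \alpha$$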

$$\min_y \|D_A y - \tilde{b}\|_2 \quad \text{s.t.} \quad \|D_B y - \tilde{d}\|_2 \le \alpha$$

Correctness: By inserting the definitions we get:

$$\|D_A y - \tilde{b}\|_2 = \|U^T A X X^{-1} x - U^T b\|_2 = \|U^T (Ax - b)\|_2$$

Multiplication with an orthogonal matrix does not affect the 2-norm, so this equals $\|Ax - b\|_2$. (The same result applies for the inequality constraint.)

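The objective function becomes:

$$\|D_A y - \tilde{b}\|_2^2 = \sum_{i=1}^{n} (\alpha_i y_i - \tilde{b}_i)^2 + \sum_{i=n+1}^{m} \tilde{b}_i^2 \qquad (12.1.4)$$

The constraint becomes:

$$\|D_B y - \tilde{d}\|_2^2 = \sum_{i=1}^{r} (\beta_i y_i - \tilde{d}_i)^2 + \sum_{i=r+1}^{p} \tilde{d}_i^2 \le \alpha^2 \qquad (12.1.5)$$

We have:

$$r = \mathrm{rank}(B), \qquad \beta_{r+1} = \beta_{r+2} = \cdots = \beta_q = 0$$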

We have a solution if and only if:

$$\sum_{i=r+1}^{p} \tilde{d}_i^2 \le \alpha^2$$

Otherwise, there is no way to satisfy the constraint, because this sum does not depend on $y$.

Special Case: $\sum_{i=r+1}^{p} \tilde{d}_i^2 = \alpha^2$

The first sum in (12.1.5) must equal zero, which means:

$$y_i = \frac{\tilde{d}_i}{\beta_i}, \quad i = 1, \ldots, r$$

The remainder of the variables can be chosen to minimize the first sum in (12.1.4):

$$y_i = \frac{\tilde{b}_i}{\alpha_i}, \quad i = r+1, \ldots, n$$

(If $\alpha_i = 0$ for some $i \in [r+1, n]$, this quotient is undefined. We then choose $y_i = 0$.)

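The General Case: $\sum_{i=r+1}^{p} \tilde{d}_i^2 < \alpha^2$

The minimizer of the objective (without regard to the constraint) is given by:

$$y_i = \begin{cases} \tilde{b}_i / \alpha_i & \alpha_i \ne 0 \\ \tilde{d}_i / \beta_i & \alpha_i = 0 \end{cases}$$

This may or may not be a feasible solution, depending on whether it is in the feasible set $S$.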

The Method of Lagrange Multipliers

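Define

$$h(\lambda, y) = \|D_A y - \tilde{b}\|_2^2 + \lambda \left( \|D_B y - \tilde{d}\|_2^2 - \alpha^2 \right)$$

Solving $\partial h / \partial y_i = 0$, $i = 1, \ldots, n$, yields:

$$\left( D_A^T D_A + \lambda D_B^T D_B \right) y = D_A^T \tilde{b} + \lambda D_B^T \tilde{d}$$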

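Solution using Lagrange multipliers:

$$y_i(\lambda) = \begin{cases} \dfrac{\alpha_i \tilde{b}_i + \lambda \beta_i \tilde{d}_i}{\alpha_i^2 + \lambda \beta_i^2} & i = 1, 2, \ldots, q \\[2ex] \dfrac{\tilde{b}_i}{\alpha_i} & i = q+1, \ldots, n \end{cases}$$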
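As a brief worked step (a sketch, assuming the diagonal forms of $D_A$ and $D_B$ from the generalized SVD), the Lagrange system decouples row by row, which is where the componentwise solution comes from. The limits at the end are an added sanity check, not part of the original slides.

```latex
% Row i of (D_A^T D_A + \lambda D_B^T D_B) y = D_A^T \tilde{b} + \lambda D_B^T \tilde{d}
(\alpha_i^2 + \lambda \beta_i^2)\, y_i = \alpha_i \tilde{b}_i + \lambda \beta_i \tilde{d}_i,
\qquad i = 1, \ldots, q

% For i > q there is no \beta_i term:
\alpha_i^2\, y_i = \alpha_i \tilde{b}_i, \qquad i = q+1, \ldots, n

% Limits, assuming \alpha_i \neq 0 and \beta_i \neq 0:
\lim_{\lambda \to 0} y_i(\lambda) = \tilde{b}_i / \alpha_i, \qquad
\lim_{\lambda \to \infty} y_i(\lambda) = \tilde{d}_i / \beta_i
```

The first limit is the unconstrained minimizer, and the second is the point where the corresponding term of the constraint sum vanishes.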

Determining the Lagrange parameter, λ

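Define:

$$\phi(\lambda) \equiv \|D_B y(\lambda) - \tilde{d}\|_2^2 = \sum_{i=1}^{r} \left( \alpha_i \frac{\beta_i \tilde{b}_i - \alpha_i \tilde{d}_i}{\alpha_i^2 + \lambda \beta_i^2} \right)^2 + \sum_{i=r+1}^{p} \tilde{d}_i^2$$

Solve $\phi(\lambda) = \alpha^2$. When the unconstrained minimizer is infeasible, $\phi(0) > \alpha^2$. The function is monotone decreasing for $\lambda > 0$ and tends to $\sum_{i=r+1}^{p} \tilde{d}_i^2 < \alpha^2$ as $\lambda \to \infty$, so there must be a unique, positive solution $\lambda^*$ with $\phi(\lambda^*) = \alpha^2$.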
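The root-finding step can be sketched in Python as follows. This is a minimal illustration, not the slides' method: it assumes square diagonal $D_A$ and $D_B$ with all $\alpha_i, \beta_i > 0$ (so $m = n = p = q = r$), and it uses plain bisection, which the source does not prescribe. The function names `y_of_lambda`, `phi` and `solve_lambda` and the sample data are hypothetical.

```python
import numpy as np

def y_of_lambda(lam, a, beta, b_t, d_t):
    """Componentwise y_i(lambda); assumes square diagonal D_A, D_B (q = n)."""
    return (a * b_t + lam * beta * d_t) / (a**2 + lam * beta**2)

def phi(lam, a, beta, b_t, d_t):
    """phi(lambda) = ||D_B y(lambda) - d_tilde||_2^2 under the same assumption."""
    y = y_of_lambda(lam, a, beta, b_t, d_t)
    return float(np.sum((beta * y - d_t) ** 2))

def solve_lambda(a, beta, b_t, d_t, radius, iters=200):
    """Bisection on phi(lambda) = radius^2; requires phi(0) > radius^2."""
    target = radius**2
    if phi(0.0, a, beta, b_t, d_t) <= target:
        raise ValueError("unconstrained minimizer is already feasible")
    lo, hi = 0.0, 1.0
    # Grow the bracket until phi(hi) <= target (phi is decreasing in lambda).
    while phi(hi, a, beta, b_t, d_t) > target:
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if phi(mid, a, beta, b_t, d_t) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Illustrative data, made up for this sketch.
a = np.array([2.0, 1.0, 0.5])      # alpha_1..alpha_n (generalized singular values of A)
beta = np.array([1.0, 1.0, 1.0])   # beta_1..beta_n
b_t = np.array([4.0, 3.0, 2.0])    # b tilde
d_t = np.zeros(3)                  # d tilde
lam_star = solve_lambda(a, beta, b_t, d_t, radius=1.0)
print(lam_star, phi(lam_star, a, beta, b_t, d_t))
```

Bisection is chosen here only because monotonicity makes it trivially safe; a Newton-type iteration on the same equation would typically converge faster.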

Algorithm: Spherical Constraint

The special case $B = I_n$, $d = 0$, $\alpha > 0$ can be interpreted as selecting $x$ from an $n$-dimensional ball of radius $\alpha$ (the sphere and its interior). It can be solved using the following algorithm:

• $[U, \Sigma, V] \leftarrow \mathrm{SVD}(A)$

• $b \leftarrow U^T b$

• $r \leftarrow \mathrm{rank}(A)$

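• if $\sum_{i=1}^{r} \left( \frac{b_i}{\sigma_i} \right)^2 > \alpha^2$:

  • $\lambda^* \leftarrow$ solve $\sum_{i=1}^{r} \left( \frac{\sigma_i b_i}{\sigma_i^2 + \lambda^*} \right)^2 = \alpha^2$

  • $x \leftarrow \sum_{i=1}^{r} \left( \frac{\sigma_i b_i}{\sigma_i^2 + \lambda^*} \right) v_i$

• else:

  • $x \leftarrow \sum_{i=1}^{r} \left( \frac{b_i}{\sigma_i} \right) v_i$

• end if

Computing the SVD is the most computationally intensive operation in the above algorithm.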
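The steps of the spherical-constraint algorithm can be sketched with NumPy as below. This is an illustration under assumptions: the function name `ls_sphere` is hypothetical, the numerical rank tolerance is a common convention rather than something the slides specify, and the secular equation is solved by bisection because the source does not name a solver.

```python
import numpy as np

def ls_sphere(A, b, alpha, iters=200):
    """Minimize ||Ax - b||_2 subject to ||x||_2 <= alpha (alpha > 0).

    Returns (x, lam), where lam is the Lagrange parameter (0 if inactive).
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    bt = U.T @ b
    # Numerical rank (the tolerance is an assumption of this sketch).
    tol = max(A.shape) * np.finfo(float).eps * s[0]
    r = int(np.sum(s > tol))
    s, bt, V = s[:r], bt[:r], Vt[:r].T

    def norm_sq(lam):
        # ||x(lam)||_2^2 = sum_i (sigma_i * b_i / (sigma_i^2 + lam))^2
        return float(np.sum((s * bt / (s**2 + lam)) ** 2))

    lam = 0.0
    if norm_sq(0.0) > alpha**2:
        # Constraint is active: bracket and bisect norm_sq(lam) = alpha^2.
        lo, hi = 0.0, 1.0
        while norm_sq(hi) > alpha**2:
            hi *= 2.0
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            if norm_sq(mid) > alpha**2:
                lo = mid
            else:
                hi = mid
        lam = 0.5 * (lo + hi)
    x = V @ (s * bt / (s**2 + lam))
    return x, lam

# Illustrative usage with random data.
rng = np.random.default_rng(0)
A = rng.standard_normal((8, 3))
b = rng.standard_normal(8)
x, lam = ls_sphere(A, b, alpha=0.1)
print(np.linalg.norm(x), lam)
```

When the unconstrained least squares solution already lies inside the ball, the function returns it unchanged with `lam = 0.0`, matching the `else` branch above.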

Spherical Constraint as Ridge Regression Problem

Using Lagrange multipliers to solve the spherical constraint problem results in:

$$\left( A^T A + \lambda I \right) x = A^T b$$

where:

$$\lambda > 0, \quad \|x\|_2 = \alpha$$

This is the normal equation system of the ridge regression problem:

$$\min_x \|Ax - b\|_2^2 + \lambda \|x\|_2^2$$

We need some procedure for selecting a suitable $\lambda$.
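To make the ridge connection concrete, the following sketch solves the same problem two ways for a fixed $\lambda$: through the normal equations, and as an ordinary least squares problem on a stacked matrix. The function names and the stacked formulation are illustrative additions, not taken from the slides.

```python
import numpy as np

def ridge_normal_eq(A, b, lam):
    """Solve (A^T A + lam I) x = A^T b directly."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ b)

def ridge_stacked(A, b, lam):
    """Same minimizer via ordinary LS on [A; sqrt(lam) I] x ~ [b; 0]."""
    n = A.shape[1]
    A_aug = np.vstack([A, np.sqrt(lam) * np.eye(n)])
    b_aug = np.concatenate([b, np.zeros(n)])
    return np.linalg.lstsq(A_aug, b_aug, rcond=None)[0]

# Illustrative usage with random data.
rng = np.random.default_rng(0)
A = rng.standard_normal((10, 4))
b = rng.standard_normal(10)
print(ridge_normal_eq(A, b, 0.7))
print(ridge_stacked(A, b, 0.7))
```

The stacked form avoids forming $A^T A$ explicitly, which is usually preferable numerically when $A$ is ill-conditioned.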

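Define the problem:

$$x_k(\lambda) = \arg\min_x \|D_k (Ax - b)\|_2^2 + \lambda \|x\|_2^2$$

where $D_k = I - e_k e_k^T$ is the matrix operator that zeroes out the $k$th row, i.e. removes the $k$th observation.

Select $\lambda$ to minimize the cross-validation weighted square error:

$$C(\lambda) = \frac{1}{m} \sum_{k=1}^{m} w_k \left( a_k^T x_k(\lambda) - b_k \right)^2$$

where $a_k^T$ is the $k$th row of $A$ and $w_k$ are nonnegative weights. This means choosing a $\lambda$ that does not make the final model rely too much on any one observation.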

Through some calculation, we find that:

$$C(\lambda) = \frac{1}{m} \sum_{k=1}^{m} w_k \left( \frac{r_k}{\partial r_k / \partial b_k} \right)^2$$

where $r_k$ is an element of the residual vector $r = b - A x(\lambda)$. The expression inside the parentheses can be interpreted as an inverse measure of the impact of the $k$th observation on the model.

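Using the SVD, the minimization problem is reduced to:

$$C(\lambda) = \frac{1}{m} \sum_{k=1}^{m} w_k \left( \frac{ b_k - \sum_{j=1}^{r} u_{kj} \tilde{b}_j \frac{\sigma_j^2}{\sigma_j^2 + \lambda} }{ 1 - \sum_{j=1}^{r} u_{kj}^2 \frac{\sigma_j^2}{\sigma_j^2 + \lambda} } \right)^2$$

where $\tilde{b} = U^T b$ as before.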
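The SVD form of $C(\lambda)$ can be checked against a brute-force leave-one-out computation. The sketch below is illustrative only: the names `cv_bruteforce` and `cv_svd` are hypothetical, $A$ is assumed to have full column rank so that $r = n$, and unit weights are used unless `w` is supplied.

```python
import numpy as np

def cv_bruteforce(A, b, lam, w=None):
    """C(lam) by explicitly refitting ridge regression without row k."""
    m, n = A.shape
    w = np.ones(m) if w is None else w
    total = 0.0
    for k in range(m):
        keep = np.arange(m) != k          # D_k zeroes out observation k
        Ak, bk = A[keep], b[keep]
        xk = np.linalg.solve(Ak.T @ Ak + lam * np.eye(n), Ak.T @ bk)
        total += w[k] * (A[k] @ xk - b[k]) ** 2
    return total / m

def cv_svd(A, b, lam, w=None):
    """C(lam) from a single SVD of A (assumes full column rank)."""
    m, _ = A.shape
    w = np.ones(m) if w is None else w
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    f = s**2 / (s**2 + lam)               # sigma_j^2 / (sigma_j^2 + lam)
    bt = U.T @ b                          # b tilde
    resid = b - U @ (f * bt)              # r_k = b_k - sum_j u_kj bt_j f_j
    denom = 1.0 - (U**2) @ f              # dr_k/db_k = 1 - sum_j u_kj^2 f_j
    return float(np.sum(w * (resid / denom) ** 2) / m)

# Illustrative usage: scan a few candidate values of lambda.
rng = np.random.default_rng(0)
A = rng.standard_normal((12, 3))
b = rng.standard_normal(12)
for lam in (0.01, 0.1, 1.0):
    print(lam, cv_svd(A, b, lam), cv_bruteforce(A, b, lam))
```

The SVD version needs one factorization for all values of $\lambda$, whereas the brute-force version solves $m$ systems per value, which is why the reduced formula is the practical one.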

Equality Constrained Least Squares

We consider a problem similar to LSQI, but with an equality constraint, i.e. a normal least squares problem:

$$\min_x \|Ax - b\|_2$$

with the constraint that:

$$Bx = d$$

We assume the following dimensions:

$A \in \mathbb{R}^{m \times n}$, $B \in \mathbb{R}^{p \times n}$, $b \in \mathbb{R}^m$, $d \in \mathbb{R}^p$, $\mathrm{rank}(B) = p$

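We start by calculating the QR factorization of $B^T$:

$$B^T = Q \begin{bmatrix} R \\ 0 \end{bmatrix}$$

with

$Q \in \mathbb{R}^{n \times n}$, $R \in \mathbb{R}^{p \times p}$, $0 \in \mathbb{R}^{(n-p) \times p}$

and then add the following definitions:

$$AQ = \begin{bmatrix} A_1 & A_2 \end{bmatrix}, \quad Q^T x = \begin{bmatrix} y \\ z \end{bmatrix}$$

where $A_1$ has $p$ columns, $A_2$ has $n-p$ columns, $y \in \mathbb{R}^p$ and $z \in \mathbb{R}^{n-p}$. This gives us:

$$Bx = \left( Q \begin{bmatrix} R \\ 0 \end{bmatrix} \right)^T x = \begin{bmatrix} R^T & 0 \end{bmatrix} Q^T x = \begin{bmatrix} R^T & 0 \end{bmatrix} \begin{bmatrix} y \\ z \end{bmatrix} = R^T y$$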

We also get (because $Q Q^T = I$):

$$Ax = (AQ)(Q^T x) = \begin{bmatrix} A_1 & A_2 \end{bmatrix} \begin{bmatrix} y \\ z \end{bmatrix} = A_1 y + A_2 z$$

So the problem becomes:

$$\min_{y, z} \|A_1 y + A_2 z - b\|_2$$

subject to:

$$R^T y = d$$

where $y$ is determined directly from the constraint, and then inserted into the LS problem:

$$\min_z \|A_2 z - (b - A_1 y)\|_2$$

giving us a vector $z$ which can be used to find the final answer:

$$x = Q \begin{bmatrix} y \\ z \end{bmatrix}$$
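The QR-based procedure can be sketched with NumPy as follows. The function name `lse_qr` is hypothetical, and the sketch assumes $\mathrm{rank}(B) = p$ as stated in the problem setup; it uses a general solver for $R^T y = d$ rather than a dedicated triangular solve.

```python
import numpy as np

def lse_qr(A, b, B, d):
    """Minimize ||Ax - b||_2 subject to Bx = d via QR of B^T (rank(B) = p)."""
    p, _ = B.shape
    # Full QR: B^T = Q [R; 0], with Q n-by-n and R p-by-p upper triangular.
    Q, R_full = np.linalg.qr(B.T, mode="complete")
    R = R_full[:p, :]
    AQ = A @ Q
    A1, A2 = AQ[:, :p], AQ[:, p:]
    # y is fixed by the constraint R^T y = d.
    y = np.linalg.solve(R.T, d)
    # z solves the reduced unconstrained LS problem.
    z = np.linalg.lstsq(A2, b - A1 @ y, rcond=None)[0]
    return Q @ np.concatenate([y, z])

# Illustrative usage with random data.
rng = np.random.default_rng(0)
A = rng.standard_normal((6, 4))
b = rng.standard_normal(6)
B = rng.standard_normal((2, 4))
d = rng.standard_normal(2)
x = lse_qr(A, b, B, d)
print(x, np.linalg.norm(B @ x - d))
```

Because $y$ is obtained from the constraint alone, the returned $x$ satisfies $Bx = d$ up to rounding error regardless of how well the reduced LS problem is conditioned.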

The Method of Weighting

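A method for approximating the solution of the LSE problem (minimize $\|Ax - b\|_2$ s.t. $Bx = d$) through a normal, unconstrained LS problem:

$$\min_x \left\| \begin{bmatrix} A \\ \lambda B \end{bmatrix} x - \begin{bmatrix} b \\ \lambda d \end{bmatrix} \right\|_2$$

for large values of $\lambda$.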

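The exact solution to the LSE problem:

$$x = \sum_{i=1}^{p} \frac{v_i^T d}{\beta_i} x_i + \sum_{i=p+1}^{n} \frac{u_i^T b}{\alpha_i} x_i$$

The approximation:

$$x(\lambda) = \sum_{i=1}^{p} \frac{\alpha_i u_i^T b + \lambda^2 \beta_i v_i^T d}{\alpha_i^2 + \lambda^2 \beta_i^2} x_i + \sum_{i=p+1}^{n} \frac{u_i^T b}{\alpha_i} x_i$$

The difference:

$$x(\lambda) - x = \sum_{i=1}^{p} \frac{\alpha_i \left( \beta_i u_i^T b - \alpha_i v_i^T d \right)}{\beta_i \left( \alpha_i^2 + \lambda^2 \beta_i^2 \right)} x_i$$

Here $u_i$, $v_i$ and $x_i$ denote the $i$th columns of $U$, $V$ and $X$ from the generalized SVD. It is apparent that as $\lambda$ grows larger, the approximation error is reduced, each term decaying like $1/\lambda^2$. This method is attractive because it only utilizes ordinary LS solving.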
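A small numerical experiment illustrates the method of weighting. In this sketch the function names are hypothetical, and the reference solution is computed from the KKT system of the LSE problem, which is a choice made here for comparison and not something the slides use.

```python
import numpy as np

def lse_weighting(A, b, B, d, lam):
    """Approximate the LSE solution by ordinary LS on [A; lam B] x ~ [b; lam d]."""
    A_aug = np.vstack([A, lam * B])
    b_aug = np.concatenate([b, lam * d])
    return np.linalg.lstsq(A_aug, b_aug, rcond=None)[0]

def lse_kkt(A, b, B, d):
    """Reference LSE solution from the KKT system (assumes full-rank A and B)."""
    n, p = A.shape[1], B.shape[0]
    K = np.block([[A.T @ A, B.T], [B, np.zeros((p, p))]])
    rhs = np.concatenate([A.T @ b, d])
    return np.linalg.solve(K, rhs)[:n]

# Illustrative usage: the error shrinks as the weight lam grows.
rng = np.random.default_rng(1)
A = rng.standard_normal((6, 4))
b = rng.standard_normal(6)
B = rng.standard_normal((2, 4))
d = rng.standard_normal(2)
x_ref = lse_kkt(A, b, B, d)
for lam in (1e1, 1e3, 1e5):
    err = np.linalg.norm(lse_weighting(A, b, B, d, lam) - x_ref)
    print(lam, err)
```

Very large weights make the stacked matrix badly scaled, so in practice $\lambda$ is a trade-off between approximation error and rounding error.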
