Document 11269360

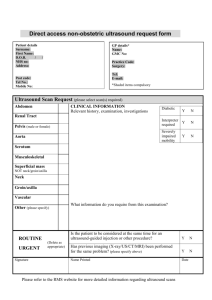
