This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike License. Your use of this

material constitutes acceptance of that license and the conditions of use of materials on this site.

Copyright 2006, The Johns Hopkins University and Jane Bertrand. All rights reserved. Use of these materials

permitted only in accordance with license rights granted. Materials provided “AS IS”; no representations or

warranties provided. User assumes all responsibility for use, and all liability related thereto, and must independently

review all materials for accuracy and efficacy. May contain materials owned by others. User is responsible for

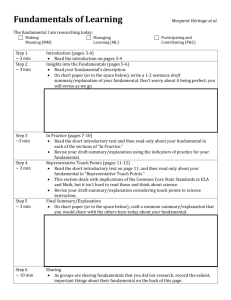

Fundamentals

of Program

Evaluation

obtaining

permissions

for use from

third parties as needed.

Fundamentals of Program Evaluation

Course 380.611

Monitoring Outputs and Outcomes

And Introduction to Study Design

Fundamentals of Program Evaluation

Topics to cover

RHIS as a tool to monitor outputs and

outcomes

Use of surveys to monitor outcomes

Break

Threats to validity

Intro to “impact”

Fundamentals of Program Evaluation

Purpose of MIS at local level

Management to track:

Inputs (finances, personnel)

Process (description of activities)

Outputs

# activities conducted

Measures of access (optional)

Results (# products distributed, service

utilization)

Fundamentals of Program Evaluation

Inputs: Finance, Personnel

Essential data for management

Less important for M&E

Covered in management course, not

Evaluation Course

Dissenting comment by Duff Gillespie

Fundamentals of Program Evaluation

Process

Records of training courses

Records on activities implemented

(type, purpose, # participants)

Evaluation of quality of services

Note: database for determining:

Was project implemented as planned

How well was it implemented

Fundamentals of Program Evaluation

Outputs – Two main types

Number of activities conducted

# persons trained

# pamphlets, posters produced/distributed

Results:

# products distributed

Volume of service utilization

Profile of clients

Note: all data are program - level

Fundamentals of Program Evaluation

Typical “results” from tracking

outputs (service utilization)

Fundamentals of Program Evaluation

Steps in establishing an MIS

for service statistics

Determine indicators to track

Design forms to collect the data

Assign responsibility for data collection

Establish a system of remitting the data

back to the central offices

Train staff in use of the forms

Ensure timely submission of data

Fundamentals of Program Evaluation

Fundamentals of Program Evaluation

Monitoring outcomes

Program-based measurement

Available through RHIS

Ex: % of TB patients enrolled who

complete treatment

Population-based measurement

Not available through RHIS

Ex: % contraceptive use over time

Fundamentals of Program Evaluation

Utility of data from monitoring

To track levels in a given service over

time

To compare regions/sites on

key indicators

To compare program performance to

specified standards

Fundamentals of Program Evaluation

Example of monitoring

outputs: # condoms sold

From Gardner, R., Blackburn, R.D., and Upadhyay, U.D. Closing the Condom Gap. Population Reports, Series H, No.

Fundamentals

Program

Evaluation

9. Baltimore,

Johnsof

Hopkins

University

School of Public Health, Population Information Program, April 1999.

n.

-M

A ar

pr ch

il 9

Ju -J u n 8

ly e

O -S e 9 8

ct p.

Ja .- D e 9 8

n.

-M c. 9

A a rc 8

pr h

il 9

Ju -J u n 9

ly e

- 9

O S ep 9

c

.

J a t.- D 9 9

n.

-M e c.9

A ar 9

pr ch

il 0

Ju -J u n 0

ly e

O -S e 0 0

ct p.

Ja .- D e 0 0

n.

-M c. 0

A ar 0

pr ch

il 0

Ju -J u n 1

ly e

O -S e 0 1

ct p.

Ja .- D e 0 1

n.

-M c. 0

A ar 1

pr ch

il 0

Ju -J u n 2

ly e

O -S e 0 2

c t. p .

-D 02

ec

.0

2

Ja

Number of condoms distributed,

Ghana 1998-2002

Launch of Stop AIDS

Love Life

Launch of Life Choices

8000000

7000000

6000000

5000000

4000000

3000000

2000000

1000000

0

of Program

Evaluation

Source:Fundamentals

Ghana’s Stop

AIDS Love

Life Program. Phase 1: Evaluation Report. February 2000 to June 2001

Use of male methods: comparisons

by country and across time

Fundamentals of Program Evaluation

Source: Drennan, M. Reproductive Health: New Perspectives on Men's Participation. Population Reports, Series

J, No. 46. Baltimore, Johns Hopkins School of Public Health, Population Information Program, October 1998.

Advantages of a health MIS

Routine:

Local:

Doesn’t require a special study

Existing staff collect the info

Provides info relevant to local program

Timely:

Data are available on a monthly or

quarterly basis

Fundamentals of Program Evaluation

Shortcomings of Health MIS

Data are often of poor quality

Data collection detracts from clinical

service delivery (I.e., takes time)

Data are often sent to the central level

without being used at local level

Staff at the local level may not feel

comfortable “analyzing” the data

Fundamentals of Program Evaluation

…As a result...

Service statistics often provide

unreliable data on health interventions

Inflated results

Staff disinterested in process

One-way flow of info (no local benefit)

Result: widespread use of DHS-type

surveys instead of routine service

statistics

Fundamentals of Program Evaluation

So what is “program

monitoring?”

Can include tracking activities:

# training events, # materials produced

Also tracks program-level results:

Levels of service delivery

(behavior-related) products distributed

May track outcomes:

Percent of target population performing

behavior

Fundamentals of Program Evaluation

Fundamentals of Program Evaluation

Measuring “reach” (using

survey data)

Example from Stop AIDS Love Life

Ghana

Social Marketing

Foundation

(GSMF)|USAID|Johns Hopkins University

Fundamentals

of Program

Evaluation

Exposure to the Stop AIDS Love Life

campaign components, Males, 2001

Spontaneous

Prompted

Overall

TV

Music video

Radio

Newspaper

Poster

Leaflet

Car sticker

Tee-shirt/cap

Mobile van

Community event

Community rally

0

Source: 2001 GRHCSS

N=1270

Fundamentals of Program Evaluation

20

40

60

80

% of male respondents exposed

100

Monitoring outcomes:

Initial outcomes

–

Intermediate outcomes

–

Useful if topic is new, difficult (e.g., FGM)

Focus of much evaluation

Long-term outcomes

–

Important but they change slowly; other

factors contribute

Fundamentals of Program Evaluation

Initial outcomes

Knowledge

Attitudes

Self-efficacy

Question: why do we care?

(Example: knowledge of HIV/AIDS in the

U.S. in mid-1980s)

Fundamentals of Program Evaluation

Intermediate outcomes

Health behavior, practice: examples

% employees who exercise 3

times/week

% 15-19 years olds who don’t smoke

% of infants fully immunized at 12

months

% pregnant women who deliver with

skilled birth attendant

Fundamentals of Program Evaluation

Long-term outcomes

Fertility

–

Morbidity

–

–

HIV prevalence

% diarrheal disease (past month)

Mortality

–

Total fertility rate

Infant mortality, maternal mortality

Quality of life

Fundamentals of Program Evaluation

Source of data (if populationbased)

Representative survey of the general

population (or relevant subsection)

Census (e.g., fertility, mortality rates)

National or regional surveys (e.g.,

DHS)

Fundamentals of Program Evaluation

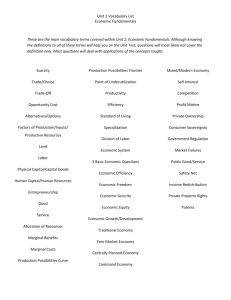

Differentiating Outputs versus

Outcomes

Output: measured at program level:

Activities conducted:

Service utilization:

# brochures printed

# client visits, # condoms sold, # HIV tests

performed

Outcomes: program or population level:

% of TB patients successfully treated

% of youth using condom at last sex

Fundamentals of Program Evaluation

OUTPUTS OR

OUTCOMES?

Fundamentals of Program Evaluation

Source: Gardner, R., Blackburn, R.D., and Upadhyay, U.D. Closing the Condom Gap. Population Reports, Series H, No.

9. Baltimore, Johns Hopkins University School of Public Health, Population Information Program, April 1999.

Value of monitoring outcomes

Demonstrates trends at

national/regional level

Useful for policy makers and public

health practitioners

Allows for comparisons: cross-national

and over time

Fundamentals of Program Evaluation

Example: tracking contraceptive

use over time: 4 countries

U s e o f A n y C o n t r a c e p t iv e M e t h o d

100

90

80

Cameroon

70

Kenya

% use M W R A

60

50

Senegal

40

30

Eg y p t

20

10

0

1970s

1980s

1990s

2000s

d e cad e

of Program

Evaluation

Source:Fundamentals

Ghana’s Stop

AIDS Love

Life Program. Phase 1: Evaluation Report. February 2000 to June 2001

Limitations of monitoring

outcomes

Program-based:

What happened to those outside program?

Population-based:

Does not measure causation

Is often misunderstood as demonstrating

cause and effect

Survey data collection is costly, infrequent

Fundamentals of Program Evaluation

Question

Can we use qualitative methods to

measure outcomes?

Fundamentals of Program Evaluation

Question

When is monitoring outcomes

“enough”? (or “appropriate”)

Fundamentals of Program Evaluation

Moving from monitoring

change to measuring impact

Fundamentals of Program Evaluation

Donors, program administrator,

providers, evaluators:

Want to know:

● Did this program make a difference?

● Did it have an impact?

● Did the program cause the

desired/observed change?

Fundamentals of Program Evaluation

To answer this question, need

to have:

Appropriate study design, and/or

Appropriate statistical analysis

(based on advanced multivariate

analysis)

Fundamentals of Program Evaluation

Review of two key

measurement concepts

Reliability: The measure

repeatedly gives the same results

Validity: We are measuring what

we think we are measuring

Fundamentals of Program Evaluation

Measurement of validity

Considers systematic error: a true effect

may be biased positively or negatively

How well measures represent underlying

concepts

Objective behavior measures more likely

valid

More difficult to assess than reliability

Fundamentals of Program Evaluation

Study design needs to control

for threats to validity

History

Maturation

Testing

Instrumentation

Regression

Selection

Attrition

Fundamentals of Program Evaluation

Threats to internal validity

History: discrete events external to

units

Maturation: events occurring within

subjects over time

Testing: pretest influences results

Fundamentals of Program Evaluation

Threats to internal validity,

continued

Instrumentation: changes in instrument

or raters influences results

Regression (to the mean): units chosen

on extreme scores will naturally regress

Selection: compositional differences in

treatment and comparison groups

Fundamentals of Program Evaluation

Threats to internal validity,

continued

Attrition: differential dropout of units

between treatment, control groups

Selection-maturation interaction:

selection differences cause different

maturation effects

Olympic athletes versus couch potatoes

Fundamentals of Program Evaluation

Threats to internal validity,

continued

Selection-history interaction:

selection differences cause different

exposures to historical events

Ex: effect of Magic Johnson’s HIV

announcement on blacks and whites

Fundamentals of Program Evaluation

Which study designs (next class)

control to threats to validity?

Experimental (randomized control trials)

Quasi-experimental

Controls some threats to validity

Non experimental

“gold standard” for assessing impact

Controls threat to validity

Do not control threats to validity

Observational with statistical controls:

Controls some threats to validity

Fundamentals of Program Evaluation