CASPR Research Report 2005-01

DETERMINING SYNTACTIC COMPLEXITY USING VERY SHALLOW PARSING

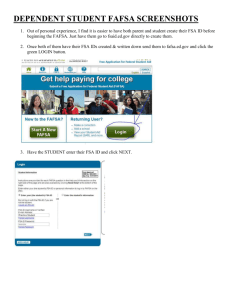
