Design and Implementation of a ... Cluster System Network Interface Unit

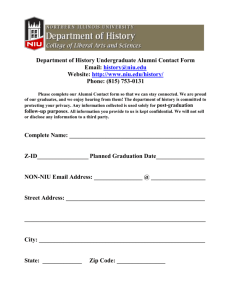
