TactiForm: A Touch Sensitive Actuated Shape Display as a Dynamic Interactive User Interface for Geospatial Visual Analytic Systems


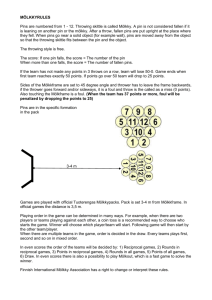

TactiForm: A Touch Sensitive Actuated Shape Display as a Dynamic Interactive User Interface for Geospatial Visual Analytic Systems

by

Clark D. Della Silva

B.S., Massachusetts Institute of Technology (2014)

Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2015

© Massachusetts Institute of Technology 2015. All rights reserved.

Author: Department of Electrical Engineering and Computer Science, May 21, 2015

Certified by: Hiroshi Ishii, Professor of Media Arts and Sciences, Thesis Supervisor

Accepted by: Prof. Albert R. Meyer, Chairman, Masters of Engineering Thesis Committee

TactiForm: A Touch Sensitive Actuated Shape Display as a Dynamic Interactive User Interface for Geospatial Visual Analytic Systems

by

Clark D. Della Silva

Submitted to the Department of Electrical Engineering and Computer Science on May 21, 2015, in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science

Abstract

Visual analytic systems that use real-time interactive interfaces enable effective problem solving and decision making by supporting intuitive data modelling, visualization, and analysis. In the oil and gas industry, these technologies are essential because they allow expert users to discover valuable insights and actionable information, even as the relevant information becomes increasingly diverse and complex.
However, better interactive interfaces are needed to allow any user to work effectively with multivariate information. In this thesis I present TactiForm, a dynamic tangible user interface for geospatial visual analytic systems. To develop an effective tangible interface for viewing, selecting, and manipulating multivariate data, I designed a dynamic actuated shape-changing display that incorporates a multi-point, multi-surface touch interface. I also developed a grammar of interaction techniques focused on multivariate geospatial data. I am in the process of constructing a 1x6 pin prototype to evaluate its performance as an interactive tool.

Thesis Supervisor: Hiroshi Ishii
Title: Professor of Media Arts and Sciences

Acknowledgments

First of all, I would like to express my heartfelt gratitude to my advisor, Prof. Hiroshi Ishii. It has been a tremendous privilege to work with him. I have always been inspired by his insight and intuition for tangible interfaces, and his counsel has allowed me to complete this thesis successfully. I also want to extend sincere thanks to Saudi Aramco, for without their interest and funding this project would not have been possible.

In addition, I would like to thank my colleagues in the Tangible Media Group, an amazing group of people who are energetic, sincere, and always willing to help. I have learned so many things from them and admire their talent, creativity, and intellectual curiosity. I also want to thank Mary Tran-Niskala, my group's administrator. Her assistance and support have been critical in the development of this thesis. Many thanks as well to Nick Rivera and Andrea Helton for their assistance in proofreading and editing my work.

I would also like to extend my gratitude to my friends for their continuing support and for making my time at MIT so enjoyable. In particular, I want to thank my good friends and roommates Joshua Slocum and Jonathan Slocum.
Last but most importantly, I would like to express my deepest gratitude to my parents and grandparents for their enduring love, support, and faith in me. Their care, encouragement, and understanding have helped me to achieve so much.

Contents

1 Introduction
1.1 Motivation
1.2 TactiForm System Description
1.3 Thesis Organization
2 Background
2.1 Tangible Displays
2.2 Shape Changing Displays
2.3 Gesture Based Interaction
2.4 Geospatial Visual Analytics
2.4.1 Reservoir Simulation
3 User Interaction Techniques
3.1 Interaction Technique Requirements
3.2 Touch Input Grammar
3.2.1 Focus
3.2.2 Selection
3.2.3 Manipulation
3.2.4 Global Operations
4 Design
4.1 Design Requirements
4.2 Mechanical Design
4.2.1 Backplate Structure
4.2.2 Pin Structure
4.2.3 Test Apparatus
4.3 Electrical Design and Assembly
4.3.1 Control Board
4.3.2 Capacitive Sensing Board
4.3.3 Motor Subsystem
4.3.4 Motor Controller Carrier Board
4.4 Software and Firmware
4.4.1 Control Board
4.4.2 Backend
5 Implementation
6 Conclusion
6.1 Future Work
6.1.1 Future Research Questions
6.1.2 System Analysis
6.1.3 6x6 model
6.1.4 User Studies
6.1.5 Application and GUI Development
A LED Display Implementation
A.1 Mechanical
A.2 Electrical
A.3 Firmware

List of Figures

1-1 The paradigm for processing data using visual analytics.
1-2 Example of a typical WIMP interface.
2-1 The difference between discrete and continuous pin surfaces on actuated shape displays.
2-2 Examples of existing 2.5D shape changing displays.
2-3 Direct manipulation (left) and gestural input (right) implemented on Relief.
2-4 A screenshot of climate models from "Coordinated Graph Visualization", a geospatial visual analytics tool.
3-1 Focusing Modes.
3-2 Single pin selection methods.
3-3 Line Selection.
3-4 Double tap pull up method (left) and Pinch pull up method (right).
3-5 Moving a single pin down.
3-6 Moving a selection of pins.
3-7 Translating a selection.
4-1 Mechanical drawing of TactiForm with major components labelled.
4-2 Rendering of a single pin with major components labelled.
4-3 Block diagram of overall electrical structure and how the system is connected.
4-4 Block diagram of the control board and its component elements.
4-5 Board layout and touch sensor positions for the side touch PCBs.
4-6 Block diagram showing how the touch PCBs inside of a pin are connected.
4-7 Block diagram of the major elements of the motor subsystem and their relationships.
4-8 The initialization sequence and main program flow for the control board.
4-9 The motor controller behavior is mapped in CANopen via a state machine. States can be controlled with the controlword and displayed with the statusword.
5-1 Comparison of the design rendering and the constructed 1x6 prototype.
5-2 Photo of the completed touch sensitive pin.
5-3 Photo of an assembled touch PCB.
5-4 Photo of the touch PCB wiring harness.
5-5 Photo of an assembled touch PCB.
5-6 Photo of the MCLM3002 motion controller mounted in the carrier board.
A-1 Examples of what a volumetric display developed on an actuated shape display could look like.
A-2 A 3D rendering of the plasmaForm LED PCB pin.
A-3 The three DMA channels working together to synthesize the WS2812b control waveform. [17]

Chapter 1 Introduction

TactiForm is a next-generation actuated shape display that uses a multi-point, multi-surface touch architecture to enhance a user's ability to create and manipulate 3D information. This system was motivated by the desire to create a viable interactive user interface to use as a tool for geospatial visual analytics. Specifically, TactiForm provides users with a natural and efficient interface for viewing, selecting, and manipulating 3D data sets.

1.1 Motivation

With the advent of big data, engineers and analysts have access to enormous amounts of information to use in decision-making processes. Unfortunately, there is so much raw data that information overload becomes a serious problem. It is very easy to get lost in data that may be irrelevant, or that is processed and presented in ways that lead to missed relationships or bad conclusions. Making effective decisions depends heavily on having the right information present at the right time. Often the information that would lead to a good decision is difficult to categorize, because the solutions are not well defined or understood. As a result, traditional computation-driven processes do not work reliably.

The field of visual analytics aims to create effective methods for understanding, deliberating, and decision making with very large and complex data sets. Figure 1-1 shows how visualization and modelling work together to generate knowledge.
The major goals of visual analytics include increasing cognitive resources, reducing necessary search, enhancing pattern recognition, supporting the perceptual inference of relationships, and providing a malleable medium for information display and manipulation.

[Figure 1-1 diagram. Labels: Data, Visualization, Models, Knowledge, User Interaction, Mapping, Transformation, Model Building, Model Visualization, Data Mining, Parameter Refinement, Feedback Loop]
Figure 1-1: The paradigm for processing data using visual analytics.

The interactivity of visual analytic tools is critical for an analyst to be able to derive insight and make decisions from massive, dynamic, and often ambiguous data. A tool needs to support seamless manipulation of information by the user in a way that does not distract from the data. As such, a user interface that minimizes the barrier between a human's cognitive model of a given task and a computer's understanding of that model is crucial. In addition, decisions are generally made collaboratively, so the ability to make unambiguous interactions with the data is important: a tool needs to support interaction methods that are clearly defined to observers.

An additional complication comes in the form of higher-dimensional data sets and the need to display and interact with them. While 2D displays are useful for displaying 3D data, most interaction tools do not provide an effective set of techniques for manipulating this data. The traditional interaction model for 2D is WIMP (Windows, Icons, Menus, Pointer), shown in Figure 1-2, which is not necessarily appropriate for 3D information.

Figure 1-2: Example of a typical WIMP interface.

Actuated shape displays are a strong candidate as a tool for visual analytics in that they provide a physical display for the information as well as a focal point for interaction in collaborative environments.
In addition, with carefully integrated interaction techniques, the affordances of the table can be used to connect an analyst seamlessly with their data, minimizing the difference between cognitive and computational models.

The purpose of this thesis is to develop a shape display architecture that better enables the visualization and manipulation of multi-dimensional information. To achieve this, the goals are as follows: to build a display that is faster, more accurate, and supports a higher maximum output force; to introduce multi-point, multi-surface touch sensing as an input modality; and to develop a modular architecture that can be scaled up to higher resolutions.

1.2 TactiForm System Description

TactiForm is an actuated shape display that creates a dynamic 2.5D surface and provides a multi-point, multi-surface touch interface. The 2.5D surface is actuated by a 2D array of linear actuators. Attached to the top of each actuator is a pin in the form of a long rectangular cuboid, and together these cuboids create the 2.5D surface. The exposed surfaces of each pin are covered by five touch-sensitive PCBs, and these touch-sensitive pins make up the multi-point, multi-surface touch interface.

Finally, TactiForm is connected via USB to a computer, where a server acts as an intermediary between a controlling application and the shape display. Shape change commands are sent from the application to TactiForm, while touch interactions and position updates are sent from TactiForm to the controlling application.

1.3 Thesis Organization

This thesis provides a complete view of TactiForm's design and implementation. The introductory chapter outlines the need for a physical dynamic 3D interface and summarizes the contributions of this thesis. Chapter 2 provides background on the development of shape-changing displays and 3D interaction techniques, as well as a description of the field of geospatial visual analytics and its challenges.
Chapter 3 describes the set of user interaction techniques developed for working with a 2.5D touch-sensitive display. Chapter 4 provides a complete system design of TactiForm, including its goals, design process, and component make-up. Chapter 5 describes the implementation of a 1x6 prototype of TactiForm. Finally, Chapter 6 summarizes this thesis's contributions and discusses future work. The Appendix documents a parallel research component to TactiForm, focusing on developing a high-resolution volumetric display using LED pins instead of touch-sensitive pins.

Chapter 2 Background

This chapter introduces the development of actuated shape displays and gives an overview of interaction techniques. As an example of a field in need of better data manipulation techniques, and as a motivation for the work in this thesis, I focus on geospatial visual analytics: the field is described, and its problems and challenges are evaluated. Finally, the application of geospatial visual analytics to reservoir engineering is explored.

2.1 Tangible Displays

Tangible user interfaces (TUIs) are a form of user interface that provides a physical mechanism for interactive control by computationally coupling physical objects to a digital framework. A classic example of this is the computer mouse: the movement of the mouse is coupled to the movement of the pointer on the screen. Advances in TUIs have sought to exploit the affordances of physical objects, from their functionality to their suggested uses and inherent constraints, but they are limited by their static nature. This leads to interfaces that are highly task-specific and cannot accurately represent the dynamic structure of information and how we interact with it.
2.2 Shape Changing Displays

Shape-changing user interfaces (SCUIs) attempt to overcome these limitations by creating dynamic physical interfaces that can change their size, shape, location, and orientation. These transformations bring the dynamism of graphical user interfaces (GUIs) to the physical world, allowing an interface to present new physical affordances and constraints. Various projects explore dynamic shape-changing interfaces, ranging from dynamic objects that augment existing displays to stand-alone dynamic displays. Augmenting existing computer displays has been explored in ShapeClip [10], which introduces a modular z-actuator that can be deployed in various configurations to add height-changing augmentation to static displays.

Other research into dynamic shape displays has focused on stand-alone systems that use arrays of linear actuators to form 2.5D surfaces. The term 2.5D follows a common definition used for digital elevation models: the surface elevation of a 2.5D display is a function of position rather than an independent variable, so there can be only one elevation value at any given location on the display. Further, 2.5D shape displays are made up of an array of actuated pins that form a surface, similar to pin screen toys [8]. These pins can either be exposed, creating discrete changes in elevation on the surface of the display, or occluded by stretching a malleable surface over the pins, creating the illusion of a continuous surface. Figure 2-1 demonstrates the difference.

Figure 2-1: The difference between discrete and continuous pin surfaces on actuated shape displays. (a) Relief system with uncovered aluminium pins. (b) Relief system with pins covered by a malleable surface.

Lumen [18] uses variable-height LED rods actuated by shape-memory alloys to control shape and graphics.
Feelex [11] uses top-down projection onto a flexible screen that is overlaid on an array of linear actuators; it focuses on the concept of a deformable interface that enables interaction using the palm of the hand. Relief [14] is a higher-resolution display designed to render and animate 3D shapes, as well as to provide individual addressing of the pins. In Relief [4], the authors explore different input modalities for a shape display, evaluating direct manipulation and gestural interaction. Inform [9] represents a systematic improvement on Relief, further increasing the resolution of the display in order to bring the representation of data closer to reality. These interfaces are dynamic, but information is almost always restricted to a particular domain, and interaction modes are limited by hardware capabilities. Leithinger et al. [15] explore the interaction limitations of existing displays and implement a gestural interface to supplement the physical display. A summary of the different kinds of shape displays is shown in Figure 2-2.

Figure 2-2: Examples of existing 2.5D shape changing displays. (a) Feelex (b) Lumen (c) Inform

2.3 Gesture Based Interaction

Gestures are a form of communication in which information is encoded in visible bodily actions. Through gesture recognition, body language can be used to interact with digital systems. Gesture-based interaction is currently a common tool for interacting with and manipulating 3D information. It offers an interface that keeps the locus of interaction on the display while allowing actions to be performed across the entire surface of the display. Relief [15] and Inform [9] are both examples of shape displays that use gesture-based interaction to interface the user with the information displayed on the table. A gesture-based interface was implemented in these instances because input methods for shape displays were undeveloped, and to reduce the hardware requirements of the system.
Figure 2-3 shows the difference between direct manipulation and gestural input as implemented on Relief. On Relief, direct manipulation served only as a limited example, because input was restricted to pushing down on the pins.

Figure 2-3: Direct manipulation (left) and gestural input (right) implemented on Relief.

G-stalt [22] and Microsoft HoloLens [16] both use gesture-based interaction as a means to interface with physical screens and augmented reality displays. Gestural interactions are particularly useful here, as the implementation does not require significant hardware additions and allows the displays to be mobile.

There are several problems with the use of gesture-based interfaces for 3D displays. First, there are no apparent affordances for the interface: it is not self-revealing, and any action must be learned beforehand. In addition, gestural interfaces experience segmentation issues. As the interface generally captures a continuous stream of hand motions, any commands must be segmented from the stream before they can be recognized. Free-space gestures also lack kinesthetic feedback and provide no frame of reference or scale for the user or observers, so users must rely on feedback from the system to confirm what they are selecting or commanding. Finally, free-space gestures are imprecise and difficult to repeat, as well as ambiguous to observers and collaborators. While gesture-based interaction methods offer many advantages, particularly for prototyping interaction methods, they are an imperfect solution to the challenge of interfacing humans and digital information.
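The segmentation problem described above can be illustrated with a minimal sketch. It assumes a stream of timestamped 3D hand positions and splits that stream into candidate gesture segments using a simple speed threshold. The `Sample` type, the function name, and both threshold values are hypothetical. They are not taken from Relief, Inform, G-stalt, or any gesture-recognition library, and the sketch only segments the stream without recognizing gestures.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical hand-tracking sample: time in seconds, position in metres."""
    t: float
    x: float
    y: float
    z: float


def segment_gestures(samples, speed_threshold=0.25, min_duration=0.15):
    """Split a continuous stream of hand samples into candidate gesture segments.

    A segment opens when hand speed rises to speed_threshold (m/s) or above and
    closes when it drops below it again. Segments shorter than min_duration
    seconds are discarded as jitter. (Thresholds are illustrative assumptions.)
    """
    segments = []
    current = []

    def close_segment():
        if current and current[-1].t - current[0].t >= min_duration:
            segments.append(list(current))
        current.clear()

    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        speed = math.dist((prev.x, prev.y, prev.z), (cur.x, cur.y, cur.z)) / dt
        if speed >= speed_threshold:
            if not current:
                current.append(prev)  # include the sample where motion began
            current.append(cur)
        else:
            close_segment()
    close_segment()  # flush a gesture still in progress at the end of the stream
    return segments
```

A fixed threshold like this shows why free-space input is fragile. A hand that pauses mid-gesture splits one command into two, and a hand that never comes to rest merges several commands into one.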
2.4 Geospatial Visual Analytics

Figure 2-4: A screenshot of climate models from "Coordinated Graph Visualization", a geospatial visual analytics tool.

Geospatial visual analytics is a field dedicated to recognizing and leveraging the unique characteristics of information about problems involving geographical space, the objects, events, phenomena, and processes that populate it, and the spatio-temporal relationships among them. The application of visual analytics to decision-making processes in geologic fields has been explored in depth [2, 19]. The variety and complexity of space-time related phenomena and problems create a need for tools that can handle multivariate, complex data structures involving temporal, spatial, and categorical hierarchies [3]. To solve these problems, analytic systems need to support reasoning, deliberation, and communication of information, as well as the ability to fully explore the problem and solution options.

Geospatial information presents numerous challenges that must be overcome in order to build an effective visual analytic system. These challenges fall into several categories: spatial information, temporal information, and spatio-temporal information.

Spatial information has several properties that make it difficult to analyze effectively. It is anisotropic and heterogeneous, meaning that spatial relationships differ according to direction and that processes differ from place to place. A good example of anisotropy in geology is seismic anisotropy, where the velocity of seismic waves depends on the direction in which they propagate through the medium [21].

Temporal data also introduces several complicating factors in geospatial analysis. It is both linear and cyclic, meaning that time always progresses forwards but various processes and phenomena occur periodically.
A classic example of this is the moon's orbit around the earth affecting the tides. This is further complicated by numerous embedded and overlapping cycles that all affect information in different ways: the tides are also affected by the relative position and distance of the sun, as well as by wind and weather patterns. In addition, many geologic phenomena are time-variant and not necessarily cyclic. A good example of this is a volcanic eruption, which increases the concentration of atmospheric dust and results in reduced global temperatures.

The integrated analysis of spatial and temporal data has its own challenges. The diversity of the information results in multiple multivariate data sets that are structured differently. In addition, measurable parameters in geologic systems are autocorrelated in space and time, meaning that, over some range of distances and times, measurements tend to be more similar to measurements taken nearby or close in time than to measurements taken farther away [7]. Collected data also often takes the form of continuous data streams that need to be evaluated in the context of a given time frame. Finally, information is available at multiple spatial and temporal scales.

2.4.1 Reservoir Simulation

A particular application of visual analytics is in the field of reservoir engineering, where simulation models and collected information are used in the decision-making process for the development of new oil fields and for ongoing reservoir management. As the scale of investment decisions is very large, it is critical that analysts have access to tools that enable them to process and understand the data efficiently [19]. The size and complexity of the available data, as well as the need for closely coupled human-machine analysis, make this an ideal application for visual analytics [20]. In addition, visual analytics systems are already being developed to help solve these problems [13, 12].
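The spatial autocorrelation property noted in Section 2.4 also applies to gridded reservoir properties such as a porosity map of a single layer. One common way to quantify it is global Moran's I. This is a standard statistic swapped in here for illustration and is not a method used in this thesis. The sketch below is minimal and assumes a regular 2D grid of measurements with binary rook (edge-sharing) neighbour weights. The function name is hypothetical.

```python
def morans_i(grid):
    """Global Moran's I of a 2D grid with binary rook-adjacency weights.

    Positive values mean neighbouring cells hold similar values (positive
    spatial autocorrelation), negative values mean neighbours tend to differ,
    and values near -1/(N-1) indicate no spatial pattern.
    """
    rows, cols = len(grid), len(grid[0])
    values = [v for row in grid for v in row]
    n = len(values)
    mean = sum(values) / n
    variance_sum = sum((v - mean) ** 2 for v in values)
    if variance_sum == 0:
        raise ValueError("Moran's I is undefined for a constant grid")

    cross_sum = 0.0   # sum of w_ij * (x_i - mean) * (x_j - mean)
    weight_sum = 0    # W: total number of ordered neighbour pairs
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    cross_sum += (grid[r][c] - mean) * (grid[rr][cc] - mean)
                    weight_sum += 1
    return (n / weight_sum) * (cross_sum / variance_sum)
```

On a 4x4 checkerboard of alternating values, this returns -1, because every neighbour differs. On a smooth gradient such as `grid[r][c] = r + c`, it returns a clearly positive value (2/3 for the 4x4 case). That positive case is the pattern the text describes for geologic measurements.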
For new oil fields, analysis will affect decisions including the number of wells to be constructed, the optimal arrangement of wells, and the expected production of oil, water, and gas. For existing fields, decisions include recommending appropriate reservoir depletion schemes to optimize oil recovery, as well as when to abandon a well [5, 1, 6]. These tend to be open-ended problems that have no easily computable solutions and instead rely on an analyst's understanding of the data.

As the data is geospatial in nature, it starts out as three-dimensional and often moves to higher dimensions with the introduction of time or other independent variables. It is therefore important to have effective 3D visualization and interaction systems. Analysts need to be able to work at multiple scales, from the size of a reservoir (e.g., Ghawar Field, measuring 280 km) down to the size of an individual modelling cell (which can be as small as 50 ft³). In addition, they need to be able to select and manipulate complex 3D shapes effectively and to designate cross sections or alternative views of the data.

Existing tools in the field include Schlumberger's Eclipse, an oil and gas reservoir simulator. While companies are exploring alternative methods of displaying and interacting with the 3D information generated by programs like Eclipse, the predominant method of display is still virtual renderings on screens. These programs also rely on 2D WIMP interaction techniques, which tend to be inefficient and clumsy.

Chapter 3 User Interaction Techniques

This chapter discusses the new input modality created by adding a multi-point, multi-surface touch architecture to a shape display and describes the set of interaction techniques developed to use this modality.
Because the focus of this thesis is the application of actuated shape displays to geospatial visual analytics, the interaction techniques are designed with this in mind. Specifically, the goal is to interface the user with multivariate spatio-temporal data. The authors of Relief state that a major limitation of shape displays is that touch input is limited to button-like behavior and that interactions become complex and ambiguous [15]. Instead of turning to different input modalities, such as gestural interaction, to fill this gap, TactiForm was designed to enhance and disambiguate the affordances provided by touch.

At the heart of interactive visual analysis is the use of coordinated views that display different aspects of the available information. The technique known as brushing and linking allows the exploration of data sets with multi-dimensional properties. Specifically, brushing refers to selecting and highlighting data in one view and having that data highlighted in other views. Linking refers to parameter changes made in one view being represented across the other views. To support the connection of the shape display with other displays, an interaction grammar needs to be established that allows for intuitive and efficient selection and manipulation of data on the display.

3.1 Interaction Technique Requirements

The current 3D geospatial simulation software allows for a variety of interactions that need to be supported in TactiForm. The goal is to keep the locus of interaction on the shape display while providing a method of interaction that is accurate, repeatable, and supports direct collaboration. The shape display will be collocated with higher-resolution digital displays, so the ability to accurately select and manipulate information on the shape display allows it to integrate efficiently with the digital displays.
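Brushing and linking between the shape display and the collocated digital displays can be sketched with a shared-state hub that every view registers with. This is a minimal illustration under stated assumptions and does not describe TactiForm's actual software. The class and method names, the use of integer record ids for model cells, and the notify-every-view update policy are all hypothetical.

```python
class CoordinatedViews:
    """Hypothetical hub that keeps several views of the same data in sync."""

    def __init__(self):
        self.selection = frozenset()
        self.params = {}
        self._views = []

    def register(self, view):
        self._views.append(view)

    def brush(self, ids):
        """Brushing: records selected in any one view are highlighted in every view."""
        self.selection = frozenset(ids)
        for view in self._views:
            view.highlight(self.selection)

    def link(self, key, value):
        """Linking: a parameter changed in one view is applied in every view."""
        self.params[key] = value
        for view in self._views:
            view.update_param(key, value)


class View:
    """A minimal view that records what it would render."""

    def __init__(self, name, hub):
        self.name = name
        self.hub = hub
        self.highlighted = frozenset()
        self.params = {}
        hub.register(self)

    # Called by the hub when shared state changes.
    def highlight(self, ids):
        self.highlighted = ids

    def update_param(self, key, value):
        self.params[key] = value

    # Called when the user interacts with this particular view.
    def user_selects(self, ids):
        self.hub.brush(ids)

    def user_sets(self, key, value):
        self.hub.link(key, value)
```

For example, a selection of cells `{3, 7}` made by touch on the shape display would immediately appear as a highlight on a screen-based reservoir viewer. A time step chosen on that screen would likewise be applied to the table. Routing every change through one hub keeps all views consistent, which supports the repeatability and collaboration requirements.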
Repeatability is important because decision making with visual analytics tools depends on the ability to view information reliably. Errors introduced by a system whose interactions are not exactly repeatable may change how an analyst views the data or cause information to be missed. Finally, direct collaboration is critical because decisions are rarely made by one person. When other users can see exactly which interactions are happening and reproduce them, communication is efficient and clear. A table that supports multi-point, multi-surface interaction with 3D information can fulfill these requirements.

A full set of techniques for interacting with 3D information is required. This includes the ability to select model vertices and define higher-dimensional shapes, as well as the ability to move and transform the selection. As touch is no longer limited to the tops of the pins, the problem of "reach" [15] is expanded to include the sides of the pins. To overcome this and provide access to occluded regions, a focus or isolate method is required. Due to the limited resolution of the display, only a subset of the total model can be displayed at a time, so global operations including zoom, translation, and rotation are essential. In addition, the ability to view section cuts is necessary, as it provides a window into higher-dimensional data. The entire shape display is to be used for geospatial information, so using portions of the display to generate dynamic UI components, as seen in [9], is suboptimal. An accessory touchscreen is therefore used to provide more complex functions, such as menu access, mode selection, and data entry.

3.2 Touch Input Grammar

A grammar of touch methods has been created to explore the functions required to interact with 3D information on a 2.5D shape display.
This set of methods is broken down into four main categories: focus, selection, manipulation, and global operations. The categories are described in further detail below.
3.2.1 Focus
Focusing is the process of isolating a pin or surface on the table so that the surfaces of the pin become accessible for interaction. This includes focusing for selection purposes and focusing for viewing section cuts. Focusing causes non-selected pins to drop flat, exposing the desired pins completely. Focusing is accomplished by double-tapping on a selected region. After the desired interaction is complete, another double tap returns the table to its previous state.
Figure 3-1: Focusing modes. (a) Focusing on a single pin; (b) focusing on a section cut.
3.2.2 Selection
Selection is the process of highlighting a subset of the pins, or a region of the pins, in order to perform operations on the highlighted region. This is the most important type of interaction for 3D information, and intuitive, efficient methods are critical to making the system useful.
Single Point
Single point selection supports two modes. In the first mode, a single touch selects the entire pin if the top is touched, or the side of the pin if the side is touched. In the second mode, a touch selects the unit cell at the z height of the touch.
Figure 3-2: Single pin selection methods (whole pin, face select, unit cell).
Lines
Line selection has two modes, straight and curved. Straight lines are selected by touching two points sequentially. If the tops of the pins are touched, the line is defined as flat, in the direction of the two points. If the sides of the pins are touched, the line is defined in 3D space. Curved lines are selected by tracing a continuous path along the surface of the pins.
Planes
Planes are selected by defining a line and then touching a third point to define the direction of the plane.
If the third point lies along the line, the plane is defined as perpendicular to the face that was touched. Otherwise, the three points determine an arbitrary plane.
Figure 3-3: Line selection.
Volumes
Volumes are selected by touching four or more points. Shapes can also be drawn on planes or pin surfaces, and individual points can be tapped to include or exclude them. This allows a selection to be built both constructively and destructively.
3.2.3 Manipulation
Once a selection has been made, a user needs to be able to perform actions and transformations on that selection. This includes the ability to move either the selection region or the selected data, as well as the ability to change the value of the selected data.
Move Single Pin Up
There are two possible methods for moving a pin up on the table. The first is a double tap, after which the pin rises until it senses contact again. The second is to pinch two parallel faces of the pin and pull up.
Figure 3-4: Double tap pull up method (left) and pinch pull up method (right).
Move Single Pin Down
Pins are moved down by pressing on the top of the pin and pushing down. While the user is in contact with the top sensor, the pin allows itself to be displaced. Once contact is removed, the pin sets its current position and holds it.
Figure 3-5: Moving a single pin down.
Multi-pin Movement
Pin movement can also be applied to selections or groups of pins. Once a selection is made, the group is moved by manipulating a single pin within it. There are two modes for multi-pin movement. In the first, a direct mode, all pins move by the same amount. In the second, a limited linked mode, the surrounding pins follow the moved pin by a limited proportion of its movement.
Figure 3-6: Moving a selection of pins.
Translation
Translation is the relative movement of a selected region within the display.
This is accomplished by pressing on the side of a selected region. A single tap moves the selected region one unit in the direction of the tap. A press and hold moves the selected region one unit at repeated intervals until the hold is released. Translation can occur in two modes. The first moves the selection region without moving the underlying data, and the second moves the selected data.
Figure 3-7: Translating a selection.
3.2.4 Global Operations
The display has a limited scale, and the data sets being worked with are large. Operations that support global navigation through the data set are therefore required. A digital map system may need to show an entire country or a single address, and in the same way the table needs to provide seamless navigation of the data sets.
Scaling
In scaling mode, the user can change the scale of the system in any direction by touching a reference pin and then a second pin to define the relative scale. Touching a third pin then defines the ratio to be scaled. For example, suppose the first two pins are adjacent and the third pin is five pins from the first. The display is then scaled 5:1 in the direction of the line between the first and third pins.
Rotation
Rotation changes the angle of the displayed data relative to the shape display. This action is supported with a two-finger twist on the accessory tablet.
Exploration
Exploration is movement through the map, which changes the subsection of the data set shown on the shape display. This action is supported by dragging the display window on the accessory tablet.
Chapter 4 Design
4.1 Design Requirements
To test the architectural design of the system and the interaction techniques, a 1x6 pin prototype was constructed. The prototype had to meet several general requirements, as well as those needed to enable the interaction techniques in Chapter 3.
First, the display needed pins that were touch-sensitive on all exposed sides. In addition, the system needed to be robust enough to handle the added forces of users touching the pins on all sides. Finally, it needed a modular design that could be scaled up. This section describes the mechanical, electrical, and software design of TactiForm.
4.2 Mechanical Design
4.2.1 Backplate Structure
The display is built around a card structure that holds six pins and all of the supporting mechanical and electrical components. This allows for a modular design in which larger displays can be constructed from 1x6 cards. The back plate (figure) is water-jet cut from 3mm aluminum. It provides a flat, stiff mounting surface and also serves as a heatsink for the motors and drive electronics. The motors are mounted directly to the plate to provide optimal thermal conductivity. The bushing that constrains the motion of the pins is also mounted to the card, together with the bottom stop and the limit switches, as indicated in Figure 4-1.
Figure 4-1: Mechanical drawing of TactiForm with major components labelled.
A bronze bushing provides a low-friction bearing surface for the carbon fiber shafts of the pins. In its current form, it is a solid bar of bronze with six holes cut in it to guide each of the six pins. Bushing manufacture has precise requirements, and square holes are difficult to machine, so wire EDM was selected as the optimal manufacturing technique. Below the pins, a stop plate is mounted on right-angle brackets to provide a stable resting position for the pins when the motors are unpowered. In addition, each pin has a limit switch installed on the stop plate. The switch serves as an interrupt if the motor exceeds its maximum travel distance, and as a sensor for zeroing the position of the motor at startup and calibration.
The drive electronics and control board are mounted on the back of the aluminum plate. This allows for proper cooling of the motor drivers and efficient wire routing. The wires from the pins are routed over the top of the card and down its back to keep them clear of the motors as the pins move up and down.
4.2.2 Pin Structure
The pins are the mechanical structures that form the display section of the device, including the linkages and the mounting to the motors. The main part of each pin consists of five PCBs press-fit into 3D printed components that serve as the top and bottom of the pin. This allows for a lightweight design that exposes the touch-sensitive pads of the PCBs. To keep weight as low as possible, a square pultruded carbon fiber shaft was selected as the linkage. This also gives a very rigid pin design, which prevents unnecessary movement and friction between pins. The shaft connects to the motor shaft and runs through the 3D printed components to the very top of the pin to provide support. In addition, a 3D printed part links the motor shaft to the carbon fiber rod. Figure 4-2 shows the major elements and structure of the pin.
Figure 4-2: Rendering of a single pin with major components labelled.
4.2.3 Test Apparatus
To facilitate testing, the 1x6 display assembly is mounted to an extruded aluminum frame. This frame runs along the back of the aluminum card and extends out the bottom with legs. This stiffens the frame and provides easy access to the control electronics. It also ensures sufficient air flow without requiring a forced-air cooling system.
4.3 Electrical Design and Assembly
This section describes the major electrical components of the system and how they are implemented. Figure 4-3 shows these elements and how they are connected. The actuated shape display contains three major elements: the control board, the pin subsystem, and the motor subsystem.
The control board is the main controller of the system and interfaces with the computer. The pin subsystem contains the capacitive touch boards that make up the top and sides of each pin. The motor subsystem contains the linear actuators that drive each pin, the motor controllers, the carrier board, and the limit switches.
Figure 4-3: Block diagram of the overall electrical structure and how the system is connected.
4.3.1 Control Board
The control board is the main hardware of the system, designed to interface with all of the subsystems and communicate with the computer. Figure 4-4 shows the major components of the system and what they connect to. The control board is based around the Freescale MK20DX256, an ARM Cortex-M4 microcontroller integrated into the Teensy 3.1 USB development board. This chip was selected for several reasons. It has a high clock speed of 72MHz and native support for a variety of USB communication modes. It has both I2C and CAN interfaces. It also has enough DMA channels to drive the WS2812b LEDs without significant CPU overhead. The board also contains components to interface with the capacitive touch pins, the CAN bus, and the thermal subsystem.
Figure 4-4: Block diagram of the control board and its component elements.
Because each of the six capacitive pins uses the same I2C addresses, an I2C multiplexor is necessary to communicate individually with each pin. To check whether a touch has occurred on a pin, the Teensy first drives the select bits that choose that pin's channel on the multiplexor, and then begins I2C communication.
The CAN bus is a multi-master serial bus that uses two wires in differential mode to connect nodes. Nodes are connected in parallel, and a 120Ω resistor is required at each end of the bus to maintain the differential impedance for noise immunity. In addition, the bus supports voltages between -3V and +32V. To connect the Teensy to the CAN bus, a TI SN65HVD230D CAN transceiver is connected to its CAN interface.
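The multiplexor channel selection described above can be sketched as follows. This is a minimal illustration in Python, not the Teensy firmware. It assumes a 16-channel multiplexor with four binary-weighted select lines S0 to S3. It also assumes a hypothetical wiring in which pin n of the card is connected to channel n. The names `select_bits` and `channel_for_pin` are illustrative.

```python
from typing import List

NUM_SELECT_LINES = 4  # assumption: 16-channel multiplexor with select lines S0..S3
NUM_PINS = 6          # pins per 1x6 card


def select_bits(channel: int) -> List[int]:
    """Return the logic levels [S0, S1, S2, S3] that route `channel` to the common line."""
    if not 0 <= channel < 2 ** NUM_SELECT_LINES:
        raise ValueError(f"channel {channel} out of range")
    # S0 carries the least significant bit of the channel number.
    return [(channel >> bit) & 1 for bit in range(NUM_SELECT_LINES)]


def channel_for_pin(pin: int) -> int:
    """Hypothetical wiring: pin n of the card is connected to multiplexor channel n."""
    if not 0 <= pin < NUM_PINS:
        raise ValueError(f"pin {pin} out of range")
    return pin
```

On hardware, each returned level would be written to a GPIO before the I2C transaction starts. For example, `select_bits(channel_for_pin(5))` yields the levels for channel 5.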
To maintain optimal performance of the system, a thermal management subsystem has been implemented. It consists of TMP36 temperature sensors and computer case fans. The sensors are mounted to the aluminum back plate, opposite the motor housing. The fans are mounted to the back plate to provide forced-air convection. A multiplexor driven by the same select bits as the I2C multiplexor allows the Teensy to read the temperature of each motor.
4.3.2 Capacitive Sensing Board
To enable touch sensing of the pin surfaces, the sides and top of each pin are made of PCBs with built-in capacitive sensing. The boards are dual-layer, with the capacitive pads on the exterior side and all components on the interior side, as shown in Figure 4-5. The exterior-facing side of the pin is shown in blue, and the interior in red. A Microchip CAP1188 provides eight capacitive sensing channels as well as an I2C interface. Each board also has a TI REG113 LDO regulator so that the boards can be powered from a variety of power sources. The five boards in each pin are daisy-chained together, and each is assigned a unique I2C address between 0x28 and 0x2C. Figure 4-6 shows how the five boards are connected. Because the CAP1188 input channels are sensitive, the components are placed so that they do not overlap any of the capacitive sensing pads. As implemented, the boards can operate as eight individual buttons, or as a slider that reports the touch position as a value between 0 and 255. They can also detect proximity as well as touch. External connections include power, I2C, a reset line, and an interrupt line. The cable harness is soldered directly to the board to ensure a solid, reliable connection as the pins move.
Figure 4-5: Board layout and touch sensor positions for the side touch PCBs.
Figure 4-6: Block diagram showing how the touch PCBs inside a pin are connected.
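Every pin uses the same five addresses, so a full sweep of one card must visit each (multiplexor channel, I2C address) pair once. The sketch below enumerates those pairs under two hypothetical assumptions. First, pin n is wired to multiplexor channel n. Second, the boards within a pin are numbered 0 to 4 in address order, starting at 0x28. `board_address` and `poll_targets` are illustrative names, not part of the thesis code.

```python
from typing import Iterator, Tuple

PINS_PER_CARD = 6     # one 1x6 card
BOARDS_PER_PIN = 5    # top board plus four side boards
BASE_ADDRESS = 0x28   # boards occupy 0x28 through 0x2C


def board_address(board: int) -> int:
    """I2C address of board `board` (0..4) within a pin."""
    if not 0 <= board < BOARDS_PER_PIN:
        raise ValueError(f"board {board} out of range")
    return BASE_ADDRESS + board


def poll_targets() -> Iterator[Tuple[int, int]]:
    """Yield (mux_channel, i2c_address) for every touch board on one card.

    Assumes pin n is wired to multiplexor channel n (hypothetical wiring).
    """
    for pin in range(PINS_PER_CARD):
        for board in range(BOARDS_PER_PIN):
            yield pin, board_address(board)
```

Iterating `poll_targets()` produces 30 pairs, one per CAP1188 on the card. The same five addresses repeat on every multiplexor channel, which is why the multiplexor is needed to tell the pins apart.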
4.3.3 Motor Subsystem
The motor subsystem is responsible for actuating the pins in the display. It consists of the motors, motor controllers, limit switches, and a carrier board. Figure 4-7 shows these components and how they are connected. The Faulhaber Quickshaft LM1247 linear actuator was selected to drive the pins. It is an electromechanical linear motor consisting of a 3-phase self-supporting coil, a 120mm forcer rod, and integrated Hall-effect sensors for position feedback. It was selected because it provides high dynamics with an excellent force-to-volume ratio. It also comes in a compact, robust, fully integrated package that simplifies installation and configuration. To drive this motor, the Faulhaber MCLM3002 Motion Controller was selected. It provides a fully integrated control solution that offloads motor control from the main system controller. In addition, its very small footprint allows it to be installed in low-clearance situations. The motor controller fits onto the motor controller carrier board, which connects it to power, the limit switches, the CAN bus, and the linear actuator. This carrier board is described in the next section.
Figure 4-7: Block diagram of the major elements of the motor subsystem and their relationships.
4.3.4 Motor Controller Carrier Board
To interface the MCLM3002 Motion Controller with the rest of the system, a carrier board was designed. The carrier board consists of a mounting point for the MCLM3002, a 2mm pitch rectangular connector for the motor cable, and several screw terminal connectors. These connectors interface with the CAN bus, power, and the limit switches. The board also integrates the termination resistor for the CAN bus and pull-down resistors for the limit switches. As a result, a damaged or misconfigured motor controller board can be swapped out easily without reconnecting the communication bus and limit switches.
4.4 Software and Firmware
4.4.1 Control Board
Figure 4-8 shows the process flow for the Teensy's firmware. The system initializes by resetting both the motor controllers and the touch boards, and then sending new configuration data. It then waits until a USB connection is established before running the motors through a homing routine to re-zero their positions. Once homing is complete, the system enters the main program loop. The loop begins by polling each of the touch PCBs to determine whether a touch has been detected. If a touch is detected, the system sends an update to the backend and then updates the motor positions if necessary. After the touch sensors have been polled, the system checks whether it has received a new position update from the backend and updates the motor positions if necessary. Finally, the system queries the temperature sensors to make sure the system is operating correctly. The following sections describe the motor control and capacitive sensing parts of the firmware.
Figure 4-8: The initialization sequence and main program flow for the control board.
Motor Control
Motor control is the part of the Teensy firmware responsible for controlling the Faulhaber LM1247 motors used in TactiForm. Specifically, it communicates with the Faulhaber MCLM3002 Motion Controllers. It configures each controller and sends position updates to the correct actuator when required.
The Faulhaber MCLM3002 integrated motion controller used in this project communicates over a Controller Area Network (CAN) bus using CANopen, as specified by CAN in Automation (CiA). Before the motor controllers are connected to the system, each must be individually configured with a unique CAN ID. After they are configured, they can be placed into the carrier board and connected to the system. Once the system is powered on, the motors are taken through a startup sequence to ensure they are in a known operating state.
All possible states and transitions of the system are shown in Figure 4-9. First, a "fault reset" command is sent to bring any motor in the fault state into a power-disabled state. Then a "shutdown" command places all motors into the "ready to switch on" state. A "switch on" command is then sent to switch on the power stage of the motor controller and set the drive into the "switched on" state. Finally, the "enable operation" command is sent, which places the drive in the "operation enabled" state, the drive's normal operating mode.
Once the drives are in a known state, a homing sequence is initiated to zero the motor positions. First, the mode of operation (0x6060) is set to homing mode (6). Then the homing limit switch (0x2310), homing method (0x6098), homing speed (0x6099), and homing acceleration (0x609A) are set to the desired values (see table). The "homing operation start" command is sent by setting bit 4 of the controlword to 1. The homing sequence then starts, and the motor moves toward the negative limit switch. While the switch is inactive, the drive moves toward it until a positive edge is detected. Once the limit switch is active, the drive moves upward out of the limit switch until a negative edge is detected. At this point the drive stops and sets the current position to the known value. It then signals that the reference run is complete by setting bits 10 (target reached) and 12 (homing attained) of the statusword to 1. Once the system has determined that all drives have completed their homing sequence, it places them into profile position mode by setting the mode of operation (0x6060) to 1. In this mode, the system is ready for normal operation.
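The startup and homing steps above can be summarized as an ordered list of object-dictionary writes. The sketch below is illustrative only and builds the list rather than sending CAN frames. For the named commands, it uses the standard CiA 402 controlword patterns: fault reset 0x0080, shutdown 0x0006, switch on 0x0007, and enable operation 0x000F. The homing parameters are caller-supplied placeholders, because the values from the thesis table are not reproduced here. For simplicity, 0x6099 is written as a single value and its subindices are ignored. All function names are hypothetical.

```python
from typing import List, Tuple

# Object dictionary indices named in the text.
CONTROLWORD = 0x6040
MODES_OF_OPERATION = 0x6060
HOMING_LIMIT_SWITCH = 0x2310   # Faulhaber-specific object
HOMING_METHOD = 0x6098
HOMING_SPEED = 0x6099          # simplified: subindices ignored
HOMING_ACCELERATION = 0x609A

# CiA 402 controlword commands for the device-control state machine.
CW_FAULT_RESET = 0x0080        # rising edge on bit 7: fault -> power disabled
CW_SHUTDOWN = 0x0006           # -> "ready to switch on"
CW_SWITCH_ON = 0x0007          # -> "switched on"
CW_ENABLE_OPERATION = 0x000F   # -> "operation enabled"
CW_HOMING_START = CW_ENABLE_OPERATION | (1 << 4)  # bit 4 starts homing

MODE_PROFILE_POSITION = 1
MODE_HOMING = 6

STATUS_TARGET_REACHED = 1 << 10
STATUS_HOMING_ATTAINED = 1 << 12

Write = Tuple[int, int]  # (object index, value)


def startup_and_homing(limit_switch: int, method: int, speed: int, accel: int) -> List[Write]:
    """Ordered writes that bring one drive to 'operation enabled' and start homing."""
    return [
        (CONTROLWORD, CW_FAULT_RESET),
        (CONTROLWORD, CW_SHUTDOWN),
        (CONTROLWORD, CW_SWITCH_ON),
        (CONTROLWORD, CW_ENABLE_OPERATION),
        (MODES_OF_OPERATION, MODE_HOMING),
        (HOMING_LIMIT_SWITCH, limit_switch),
        (HOMING_METHOD, method),
        (HOMING_SPEED, speed),
        (HOMING_ACCELERATION, accel),
        (CONTROLWORD, CW_HOMING_START),
    ]


def homing_complete(statusword: int) -> bool:
    """True once both 'target reached' (bit 10) and 'homing attained' (bit 12) are set."""
    mask = STATUS_TARGET_REACHED | STATUS_HOMING_ATTAINED
    return (statusword & mask) == mask


def after_homing() -> List[Write]:
    """Switch the drive into profile position mode for normal operation."""
    return [(MODES_OF_OPERATION, MODE_PROFILE_POSITION)]
```

In firmware, each tuple would become a write to the drive addressed by its CAN ID. `homing_complete` would be checked against each drive's statusword before `after_homing` is issued to all of them.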
When a motor is required to move, the position setpoint is set via the target position object (0x607A). The positioning process is then started by changing "new setpoint" (bit 4) in the controlword from 0 to 1. Once the target position has been reached, the drive sets "target reached" (bit 10) in its statusword to 1. In addition, the drive is configured to send an asynchronous PDO transmission triggered by this change in state. This ensures the system is notified when a drive has reached its target position, without having to query each motor periodically.
Figure 4-9: The motor controller behavior is mapped in CANopen via a state machine. States can be controlled with the controlword and displayed with the statusword.
Capacitive Sensing
Capacitive sensing is the part of the Teensy firmware responsible for controlling the touch PCBs that make up each of TactiForm's pins. Specifically, it configures each of the touch controllers and then repeatedly polls each controller to determine whether a touch has been detected. If a touch is detected, it also determines whether that touch should affect the position of the motors. It then sends the correct messages to the motor control process and the computer.
When the system is powered on, the CAP1188 reset pin is pulled low, initiating a reset of the CAP1188 registers. The Teensy then cycles through each of the five chips on each pin and rewrites the correct settings. This ensures that none of the devices end up in an unknown state. Because no LEDs are connected to the devices, address 0x72 is set to 0x00 to unlink any LEDs from the sensor pins. Address 0x74 is then set to 0x00, switching all eight LEDs to undriven. The sampling parameters of the chip are then set by writing 0x39 to address 0x24.
This register stores three values: the number of samples taken per measurement, the sample time per measurement, and the maximum cycle time for completing all samples. The value 0x39 sets the number of samples to 8, the sample time to 1.28ms, and the cycle time to 70ms. The chip completes all samples for a given channel before moving on to the next channel. Sampling eight sensors eight times at 1.28ms per sample requires 8 × 8 × 1.28ms = 81.92ms, which exceeds the 70ms cycle time. The chip therefore extends the cycle automatically until all samples are measured. The cycle time is not set any longer because the chip drops into a low-power state for whatever time remains in the cycle after all samples are completed.
To distinguish between a single touch and a press and hold, 0x07 is written to 0x23, which sets the minimum time for press and hold detection to 280ms. If a touch lasts 280ms or less, it is reported as a single touch, and an interrupt is generated on detection and on release. A touch longer than 280ms is reported as a press and hold event. In that case, an interrupt is generated on detection and on release, and is repeated at intervals in between.
Once the devices have been configured, the Teensy polls each capacitive sensing chip in sequence. For each pin, it sets the multiplexor select bits and then polls each address from 0x28 to 0x2C. Each poll checks the INT bit (bit 0) of the Main Control register (0x00), which indicates whether a touch has been detected and an interrupt has been generated. If the bit is 1, register 0x03 is read to get the status of each sensor. A bit set to 1 indicates that a touch is detected on the corresponding sensor. In practice, each poll is a single block read of registers 0x00 through 0x03, which returns the INT and touch-sensed bits as well as the status of each sensor.
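The register values above can be cross-checked by decoding them. The sketch below assumes the following CAP1188 field layouts, which should be verified against the datasheet before use:

- Register 0x24: averaging in bits 6:4, sample time in bits 3:2, and cycle time in bits 1:0.
- Register 0x23: a press-and-hold field in bits 3:0, in steps of 35ms starting at 35ms.

The function names are hypothetical. Under these assumptions, the sketch reproduces the figures in the text. 0x39 decodes to 8 samples, 1.28ms, and 70ms, and the effective cycle stretches to 81.92ms. 0x07 gives 280ms.

```python
from typing import Dict, List

# Assumed field encodings (check against the CAP1188 datasheet).
AVG_SAMPLES = [1, 2, 4, 8, 16, 32, 64, 128]   # register 0x24, bits 6:4
SAMPLE_TIME_MS = [0.32, 0.64, 1.28, 2.56]     # register 0x24, bits 3:2
CYCLE_TIME_MS = [35, 70, 105, 140]            # register 0x24, bits 1:0


def decode_sampling(value: int) -> Dict[str, float]:
    """Split a value written to register 0x24 into its three sampling fields."""
    return {
        "samples": AVG_SAMPLES[(value >> 4) & 0x7],
        "sample_time_ms": SAMPLE_TIME_MS[(value >> 2) & 0x3],
        "cycle_time_ms": CYCLE_TIME_MS[value & 0x3],
    }


def press_and_hold_ms(value: int) -> int:
    """Press-and-hold threshold from bits 3:0 of register 0x23 (35ms per step)."""
    return 35 * ((value & 0xF) + 1)


def effective_cycle_ms(cfg: Dict[str, float], channels: int = 8) -> float:
    """The chip stretches the cycle when all samples do not fit in the nominal time."""
    needed = channels * cfg["samples"] * cfg["sample_time_ms"]
    return max(needed, cfg["cycle_time_ms"])


def decode_poll(block: bytes) -> List[int]:
    """Decode a 4-byte block read of registers 0x00..0x03.

    Returns the indices of touched sensors, or an empty list when the INT bit
    (bit 0 of register 0x00) is clear.
    """
    if len(block) != 4:
        raise ValueError("expected registers 0x00 through 0x03")
    if not block[0] & 0x01:
        return []
    status = block[3]  # Sensor Input Status: one bit per channel
    return [ch for ch in range(8) if status & (1 << ch)]
```

For example, `decode_poll(bytes([0x01, 0x00, 0x01, 0x05]))` reports touches on sensors 0 and 2.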
After each query, the Teensy clears the INT bit by writing to bit 0 of register 0x00. I2C runs at 400kHz, which is 50kBytes/second, ignoring start, stop, and acknowledge overhead. A full query takes 10 bytes of data. With five devices per pin and six pins, querying all 30 capacitive sensing ICs requires 300 bytes. At the maximum data rate, the system can therefore poll all the capacitive sensors at 50,000 B/s ÷ 300 B ≈ 167Hz, or approximately 6ms per cycle through all 30 ICs. A full measurement cycle takes about 82ms, so the sensors do not need to be polled as fast as theoretically possible. If every IC is polled only once every 70ms, the channel utilization is approximately 6ms / 70ms ≈ 9%.
4.4.2 Backend
The backend is the process that acts as a server and provides an interface between TactiForm and GUI applications. It has three responsibilities: establishing a connection to each 1x6 card in the display, determining the correct layout of pins in the overall system, and translating and sending messages between the application and the shape display. When the backend starts up, it waits for the Teensys to be enumerated as USB HID devices. It then returns an object that allows data to be read from and written to each Teensy. Once it has determined how many display cards are available, it opens a channel to each. Its primary purpose is to act as a translator between the display cards and the applications that use the display, providing a layer of abstraction.
To support as many applications as possible, interprocess communication between the backend and application interfaces is implemented with POSIX message queues. The process creates three message queues titled "touch data", "position data", and "system messages". When information arrives from the shape display, it is processed and written to the "touch data" queue, from which applications can retrieve it. The "position data" queue is monitored for position commands from the application interface, which are destined for the linear actuators.
When data is received, it is formatted according to the layout of the shape display and sent to the Teensy. The backend is written in Python. It uses the PyUSB library for USB access and the posix_ipc library to give Python access to POSIX message queues. The "system messages" queue carries configuration commands and status updates in both directions between the backend and the application.
Messages are passed from the display to the backend as strings. Each string contains a list of the touch sensors that are currently activated. Each touch sensor activation is encoded as three single-digit, zero-indexed integers, e.g. "106". The first integer identifies the pin in the 1x6 card where the touch occurred, the second identifies the PCB within that pin, and the third identifies the sensor that was activated. In the example above, the touch occurred on pin 1 (the second pin of the card), on PCB 0 of that pin, and on sensor 6 of that PCB.
Position messages are passed from the backend to the display as a 2D matrix. The backend receives position data as a 16-bit integer and converts it to the corresponding value in ticks. The backend also implements a general library for configuring and operating the display. Commands include "restart", "calibrate motors", and "calibrate touch sensors", as well as commands to change the PID parameters of the motor drivers. These commands are accessible through the command line.
Chapter 5 Implementation
To evaluate the above design, I began construction of a 1x6 prototype of TactiForm. The main mechanical elements of the prototype were completed, including the backplate, the bronze linear bushing, and the pin structures. Figure 5-1 shows the rendering of the design next to a photo of what was constructed. The machining tolerances on the backplate were found to be correct, as all the components mounted to the backplate securely and were aligned correctly.
In addition, the pultruded carbon fiber shafts fit appropriately in the bronze bushing, with approximately five thousandths of an inch of clearance on each side. All six capacitive sensing pins were also constructed. A completed pin is shown in Figure 5-2. The sides of the pin are made up of the touch-sensitive PCBs. Figures 5-5 and 5-4 show how these pins are constructed and wired together. The motor controller carrier board was also completed. Figure 5-6 shows the MCLM3002 motion controller mounted in the carrier board.
All six capacitive sensing pins were configured and tested with the Teensy. The spacing between the pins was found to be sufficient to prevent any detrimental crosstalk between the capacitive sensors on the pins. In addition, the CAN interface between the Teensy and the MCLM3002 motion controller was tested while the motion controller was mounted in the carrier board. It was confirmed that the Teensy can send information to and receive information from the motor controller, and can actively control the Quickshaft LM1247 linear actuator.
Figure 5-1: Comparison of the design rendering and the constructed 1x6 prototype. (a) Rendering; (b) construction.
The control board is currently in the prototype phase. It consists of the Teensy development board, three SparkFun 16-channel multiplexors, and a WaveShare SN65HVD230 CAN transceiver, all mounted to a breadboard. Two of the multiplexors are used for the I2C interface, and the third is used to connect the TMP36 temperature sensors. Once the functionality of the board has been verified, the design will be moved to a custom PCB to fit the footprint of the display.
In the process of constructing the 1x6 prototype, several discoveries were made that will influence future designs. Primarily, machining square holes for the bronze bushing is difficult without a significant taper.
The square shaft was chosen to fix the pins in a particular orientation, because the forcer rod of the motor is free to rotate. A better solution would be to use a circular shaft with an indexing slot machined down the side. This would allow traditional self-lubricating linear bearings to be used instead of a custom machined bushing. In addition, the movement of the pins creates significant challenges for the connector in the touch pins and for the wire routing to the control board. A locking connector is needed to keep the cable from coming loose as the pins move. A wire guide is needed to keep the cable from tangling in the system. There must also be sufficient slack to prevent strain on the connectors as the pins move.
Figure 5-2: Photo of the completed touch-sensitive pin.
Figure 5-3: Photo of an assembled touch PCB.
Finally, the closely spaced PCBs in the touch pins, together with the carbon fiber shaft running up the middle of each pin, leave very little clearance. It was very difficult to solder the wire harness in a way that allowed the pin to be assembled without damaging it. A better solution would be to replace the internal wire harness with a small PCB that solders directly to each of the four side touch PCBs and has a hole in the middle for the shaft. The wire harness could then be connected to this PCB instead.
Unfortunately, completion of the 1x6 prototype was significantly delayed by the unavailability of the Faulhaber Quickshaft LM1247 linear actuators. I had expected to take delivery of the motors in mid-April, but was informed that their construction would take until mid-June. The system was tested using the two motors that had been acquired previously.
Figure 5-4: Photo of the touch PCB wiring harness.
Figure 5-5: Photo of an assembled touch PCB.
Figure 5-6: Photo of the MCLM3002 motion controller mounted in the carrier board.
Chapter 6 Conclusion
Geospatial visual analytics requires tools that enable interactivity with complex multivariate data sets. In this thesis, I presented the design of TactiForm, an interactive actuated shape display that can be used as a tool for information manipulation in visual analytic systems. TactiForm implements a multi-point, multi-surface touch architecture that enables full interactivity with every pin of the shape display. A grammar of interactive touch techniques was created to enable efficient and intuitive manipulation of higher-dimensional information on a 2.5D display. Finally, a 1x6 prototype of TactiForm is being constructed to evaluate the system architecture and interaction techniques.
6.1 Future Work
The development of TactiForm opens several new research directions and raises many questions. This section describes several questions that are important for actuated shape displays, as well as several planned improvements and experiments.
6.1.1 Future Research Questions
Adding the input modality of touch to actuated shape displays opens up new possibilities for interaction techniques. For visual analytic systems, what percentage of interactions can occur directly on the table instead of through gestural interfaces or traditional WIMP interfaces? In addition, would integrating direct manipulation with gestural interfaces provide a better method of interaction than either method alone? This thesis explores interaction techniques for manipulating geospatial information, but can these techniques be applied to other fields and types of information? More broadly, is there a set of interaction techniques that provides a generic interface usable across a variety of applications?
Looking at the touch sensitivity of the display, how does the difference in resolution between the touch sensors on the display and the digital renderings on accessory screens affect the user's ability to accurately select and manipulate data? Also, how does interacting with a physical model of the information change how multiple users interact with the data and communicate with each other?

6.1.2 System Analysis

Once the 1x6 prototype is completed, its performance and reliability need to be evaluated both on their own and in comparison with other shape displays. The underlying actuation architecture should significantly increase the speed and accuracy with which the display can change shape. This can be verified by running tests that measure the system parameters of the linear actuators while they are installed in the display. In addition, the capacitive sensing system needs to be evaluated to determine its sensitivity and whether any drift is present while the display operates. The capacitive controllers implement dynamic re-thresholding; however, as the pins move up and down, the state of the sensors changes rapidly, and it remains to be tested how well the re-thresholding copes with this. Finally, the entire system should be put through a set of tests to determine whether any race conditions or fault states can occur during operation.

6.1.3 6x6 Model

After the 1x6 prototype has been used to evaluate the system architecture and test the basic interaction techniques, the system needs to be expanded in order to better understand how it can be used as a tool for visual analytics, and the full 3D interaction technique set needs to be evaluated. This requires a higher resolution display. A 6x6 pin display was judged to provide sufficient resolution to test the 3D interaction techniques while keeping a small form factor and a reasonable scope of work for one iterative improvement.
The current design anticipates that the 1x6 cards could be mounted into a carrier structure and stacked next to one another to create a higher resolution display. To accomplish this, the design needs to be changed to better integrate the control electronics. In its current form, the electronics are mounted to the back of the card; however, in a higher density system that space is where additional 1x6 cards would be mounted. An optimal configuration would mount the control electronics directly underneath the aluminium card, allowing the cards to be stacked next to each other.

6.1.4 User Studies

To test how the system aids in understanding and manipulating complex data sets and in making analytic decisions, the system needs to be evaluated by analysts who would be potential users of this technology. The aim is to see how integrating an interactive shape display with existing visual analytics tools affects users' workflow and decision-making processes. Informal and anecdotal data would be collected from users on how they felt working with the system, whether the interaction techniques were intuitive and effective, and whether they perceived a difference in effectiveness between using only existing tools and adding an interactive shape display. In addition, these studies would test the hypothesis that multi-point multi-surface touch manipulation of 3D information is advantageous compared to mid-air gestural input and traditional input methods.

6.1.5 Application and GUI Development

The current system was designed to interface with many possible graphical user interfaces. We aim to build several UIs that allow for testing the 1x6 prototype as well as simulating how interactions would happen on the 6x6 display.
Appendix A LED Display Implementation

Figure A-1: Examples of what a volumetric display developed on an actuated shape display could look like.

An additional portion of the work completed for this thesis was the development of an alternate display that replaced the touch sensing pins with transparent LED pins. The goal was to develop a high resolution volumetric display that took advantage of the capabilities of the new motors. Each pin consisted of a vertical stack of RGB LEDs that, when vibrated rapidly, would produce the effect of a higher vertical resolution. This relies on the afterimage created when an object oscillates rapidly in front of the eye. The effect requires each position to be refreshed at least 20 times per second (20 Hz), and because the pins pass through the same positions twice in one oscillation, the motors only need to oscillate at more than 10 Hz. The following sections describe the additions and modifications to TactiForm that enable this.

Figure A-2: A 3D rendering of the plasmaForm LED PCB pin.

A.1 Mechanical

The solid touch sensitive pins are replaced with a minimal pin that consists of a single thin PCB mounted on top of the carbon fiber shaft. This PCB is narrower than half the pin pitch, allowing better visibility through the dense grouping. The PCBs are two-sided, with eight WS2812B RGB LEDs spaced 2 cm apart. Each board requires a three-wire connection to the control board: power, ground, and data in.

A.2 Electrical

To drive the WS2812B LEDs, a 74HCT245 buffer chip and 100 Ω impedance-matching resistors are included on the control board. The buffer shifts the Teensy's 3.3 V digital outputs to 5 V and provides sufficient current handling. Two Ethernet jacks each carry three twisted pairs, which connect to the data lines of the six LED pins.

A.3 Firmware

The WS2812B is an RGB LED with an integrated driver that uses a single-wire, highly timing-dependent communication protocol.
It operates at 800 kHz (a period of 1.25 µs) and uses 8 bits per color, for 24 bits per LED. High and low bits are distinguished by the duty cycle of the data line: a high bit is represented by the line remaining high for 0.9 µs, while a low bit is represented by the line remaining high for 0.35 µs. This timing has a tolerance of ±150 ns. Driving these LEDs by counting CPU cycles requires significant CPU overhead, leaving little time for other operations. A better solution is to use Direct Memory Access (DMA) channels to trigger the high and low transitions. DMA control of the LEDs is implemented using three DMA transfers tied to two PWM channels. Both PWM channels are set to 800 kHz and synchronized, the first with a high time of 0.35 µs and the second with a high time of 0.9 µs. The first DMA transfer is triggered on the rising edge of the first PWM channel and writes all 8 output channels high. The second DMA transfer is triggered on the falling edge of the first PWM channel (0.35 µs into the period) and writes a frame of data to the output, which drives low every line whose bit should be low. The third DMA transfer is triggered on the falling edge of the second PWM channel (0.9 µs into the period) and writes all the lines low. Together these produce the waveform needed to drive the WS2812B LEDs, shown in Figure A-3.

Figure A-3: The three DMA channels working together to synthesize the WS2812B control waveform. [17]

The open source OctoWS2811 LED library is used because it implements this driving method, using the crossbar switch and dual-bus RAM on the Teensy to synthesize the waveforms with minimal CPU overhead. Because each LED requires 24 bits of information, updating one LED takes 24 × 1.25 µs = 30 µs. With 8 LEDs per pin, a full pin update takes 240 µs, which allows an update rate of just over 4.1 kHz. In practice, the LEDs update at 400 Hz, leaving ample time for computation and data transfer. LED information is passed to the Teensy over USB several frames ahead of when it is to be displayed.
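The three-transfer scheme can be checked with a small host-side simulation. The Python sketch below is not the OctoWS2811 source or the TactiForm firmware; it models an 8-bit output port at 50 ns resolution, assumes each pin's bytes are already in the WS2812B's wire order (GRB) and sent most significant bit first, and splits a frame of 6 pins × 8 LEDs × 3 bytes into one byte stream per pin. All function names are hypothetical. Each port byte carries one bit for each parallel output, which is why a single DMA write can update every pin at once. The latch (reset) gap the LEDs need between frames is ignored.

```python
# Host-side sketch (hypothetical names): models the three DMA writes that
# synthesize WS2812B bits on an 8-bit GPIO port. Not the OctoWS2811 source.

PERIOD_NS = 1250  # 800 kHz bit period
T0H_NS = 350      # PWM 1 falling edge: DMA #2 writes the data byte
T1H_NS = 900      # PWM 2 falling edge: DMA #3 writes all lines low
STEP_NS = 50      # simulation resolution


def split_frame(frame, pins=6, leds=8):
    """Split a pins*leds*3 byte frame into one byte string per pin.

    Assumes the bytes are already in wire order (GRB for the WS2812B).
    """
    per_pin = leds * 3
    if len(frame) != pins * per_pin:
        raise ValueError("unexpected frame length")
    return [frame[p * per_pin:(p + 1) * per_pin] for p in range(pins)]


def bit_planes(channels):
    """Transpose per-pin bytes into one port byte per bit slot, MSB first.

    Bit k of each returned byte is the current data bit of pin k.
    """
    planes = []
    for i in range(len(channels[0])):
        for bit in range(7, -1, -1):
            port = 0
            for k, data in enumerate(channels):
                if (data[i] >> bit) & 1:
                    port |= 1 << k
            planes.append(port)
    return planes


def synthesize(planes):
    """Return the port value at every STEP_NS tick, one bit slot per plane."""
    samples = []
    for data in planes:
        for t in range(0, PERIOD_NS, STEP_NS):
            if t < T0H_NS:
                samples.append(0xFF)   # DMA #1 at the PWM rising edge: all high
            elif t < T1H_NS:
                samples.append(data)   # DMA #2: lines carrying a 0 bit go low
            else:
                samples.append(0x00)   # DMA #3: all lines low
    return samples


def high_time_ns(samples, line, slot):
    """Measure how long one output line stays high during one bit slot."""
    ticks = PERIOD_NS // STEP_NS
    window = samples[slot * ticks:(slot + 1) * ticks]
    return STEP_NS * sum(1 for v in window if (v >> line) & 1)


# Refresh arithmetic from the text (latch gap ignored).
US_PER_LED = 24 * PERIOD_NS / 1000   # 30.0 us
US_PER_PIN = 8 * US_PER_LED          # 240.0 us
MAX_PIN_RATE_HZ = 1e6 / US_PER_PIN   # about 4167 Hz

if __name__ == "__main__":
    frame = bytearray(144)
    frame[0] = 0x80  # pin 0, first byte, MSB set -> first bit slot is a 1
    samples = synthesize(bit_planes(split_frame(bytes(frame))))
    print(high_time_ns(samples, line=0, slot=0))  # 900 (a 1 bit)
    print(high_time_ns(samples, line=1, slot=0))  # 350 (a 0 bit)
    print(round(MAX_PIN_RATE_HZ))                 # 4167
```

Running the sketch prints high times of exactly the nominal 900 ns for a 1 bit and 350 ns for a 0 bit, and about 4167 full 8-LED pin updates per second, in line with the update rate derived in the text.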
The LED data is passed as a 6 × 24 = 144-byte array (six pins, each with 8 LEDs × 3 color bytes) that is parsed by the Teensy.

Bibliography

[1] Tarek Ahmed et al. Reservoir engineering handbook. Gulf Professional Publishing, 2006.
[2] Gennady Andrienko, Natalia Andrienko, Piotr Jankowski, Daniel Keim, M-J Kraak, Alan MacEachren, and Stefan Wrobel. Geovisual analytics for spatial decision support: Setting the research agenda. International Journal of Geographical Information Science, 21(8):839–857, 2007.
[3] Gennady Andrienko, Natalia Andrienko, Daniel Keim, Alan M MacEachren, and Stefan Wrobel. Challenging problems of geospatial visual analytics. Journal of Visual Languages & Computing, 22(4):251–256, 2011.
[4] Matthew Blackshaw, Anthony DeVincenzi, David Lakatos, Daniel Leithinger, and Hiroshi Ishii. Recompose: direct and gestural interaction with an actuated surface. In CHI '11 Extended Abstracts on Human Factors in Computing Systems, pages 1237–1242. ACM, 2011.
[5] Benjamin Cole Craft, Murray Free Hawkins, and Ronald E Terry. Applied petroleum reservoir engineering, volume 9. Prentice-Hall, Englewood Cliffs, NJ, 1959.
[6] Laurie P Dake. The practice of reservoir engineering (revised edition). Elsevier, 2001.
[7] Evan J Englund. Spatial autocorrelation: implications for sampling and estimation. In ASA/EPA Conferences on Interpretation of Environmental Data, 1987.
[8] W. Fleming. Pin screen, August 27 1985. US Patent 4,536,980.
[9] Sean Follmer, Daniel Leithinger, Alex Olwal, Akimitsu Hogge, and Hiroshi Ishii. inFORM: dynamic physical affordances and constraints through shape and object actuation. In Proceedings of the 26th annual ACM symposium on User interface software and technology, pages 417–426. ACM, 2013.
[10] John Hardy, Christian Weichel, Faisal Taher, John Vidler, and Jason Alexander. Shapeclip: Towards rapid prototyping with shape-changing displays for designers. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI '15, pages 19–28, New York, NY, USA, 2015. ACM.
[11] Hiroo Iwata, Hiroaki Yano, Fumitaka Nakaizumi, and Ryo Kawamura. Project feelex: adding haptic surface to graphics. In Proceedings of the 28th annual conference on Computer graphics and interactive techniques, pages 469–476. ACM, 2001.
[12] Joshua Brian Kollat, Patrick M Reed, and RM Maxwell. Many-objective groundwater monitoring network design using bias-aware ensemble kalman filtering, evolutionary optimization, and visual analytics. Water Resources Research, 47(2), 2011.
[13] Sang Yun Lee, Kwang-Wu Lee, Taehyun Rhee, and Ulrich Neumann. Reservoir model information system: Remis. In VDA, page 72430, 2009.
[14] Daniel Leithinger and Hiroshi Ishii. Relief: a scalable actuated shape display. In Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction, pages 221–222. ACM, 2010.
[15] Daniel Leithinger, David Lakatos, Anthony DeVincenzi, Matthew Blackshaw, and Hiroshi Ishii. Direct and gestural interaction with relief: a 2.5D shape display. In Proceedings of the 24th annual ACM symposium on User interface software and technology, pages 541–548. ACM, 2011.
[16] Microsoft. Hololens: Augmented reality gestural interface, 2015.
[17] PJRC.com. OctoWS2811 LED library, 2015.
[18] Ivan Poupyrev, Tatsushi Nashida, Shigeaki Maruyama, Jun Rekimoto, and Yasufumi Yamaji. Lumen: interactive visual and shape display for calm computing. In ACM SIGGRAPH 2004 Emerging technologies, page 17. ACM, 2004.
[19] Anya C Savikhin. The application of visual analytics to financial decision-making and risk management: Notes from behavioural economics. In Financial Analysis and Risk Management, pages 99–114. Springer, 2013.
[20] Mario Costa Sousa, Emilio Vital Brazil, and Ehud Sharlin. Scalable and interactive visual computing in geosciences and reservoir engineering. Geological Society, London, Special Publications, 406(1):447–466, 2015.
[21] Leon Thomsen et al. Understanding seismic anisotropy in exploration and exploitation, volume 5. Society of Exploration Geophysicists, 2002.
[22] Jamie Zigelbaum, Alan Browning, Daniel Leithinger, Olivier Bau, and Hiroshi Ishii. G-stalt: a chirocentric, spatiotemporal, and telekinetic gestural interface. In Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction, pages 261–264. ACM, 2010.