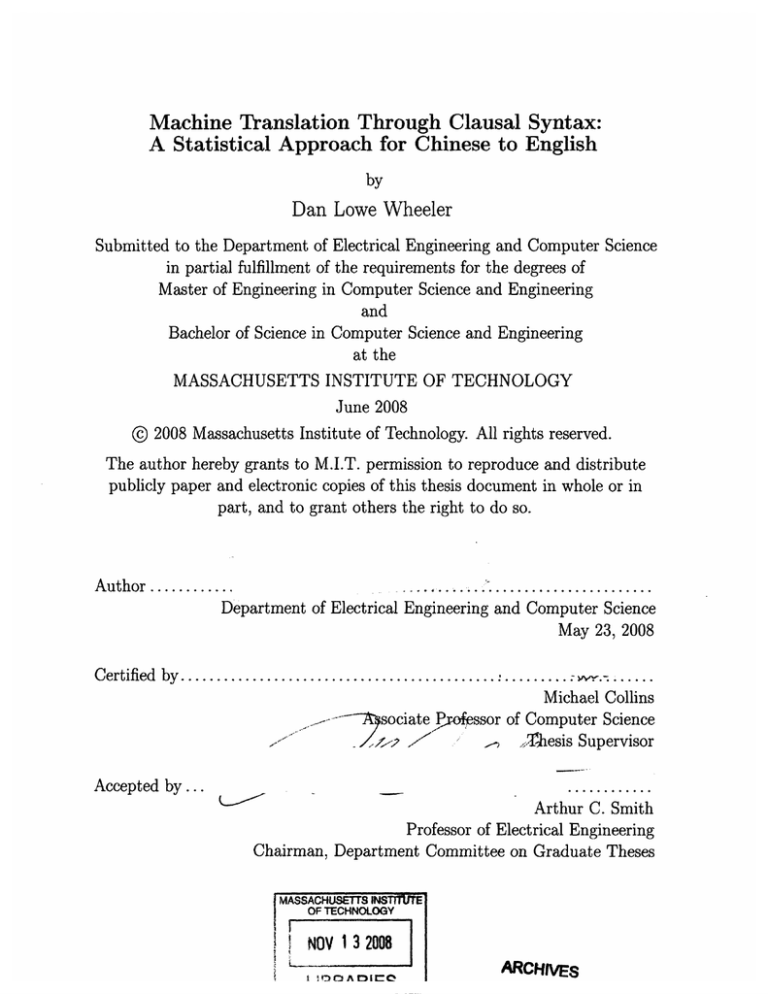

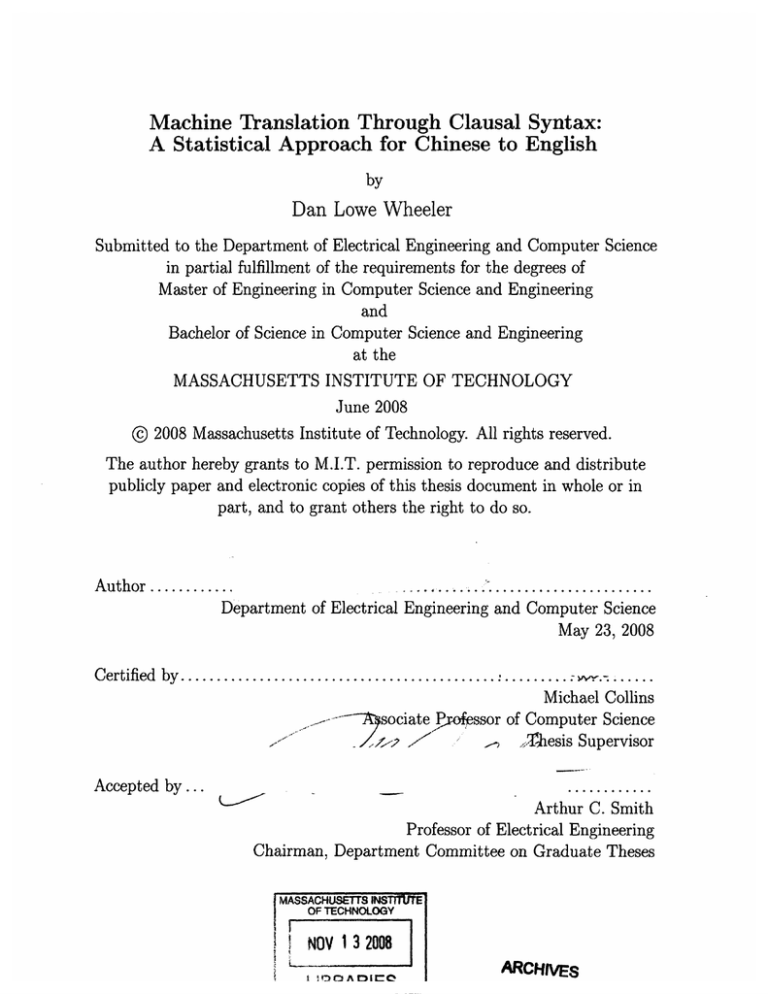

Machine Translation Through Clausal Syntax:

A Statistical Approach for Chinese to English

by

Dan Lowe Wheeler

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degrees of

Master of Engineering in Computer Science and Engineering

and

Bachelor of Science in Computer Science and Engineering

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2008

@ 2008 Massachusetts Institute of Technology. All rights reserved.

The author hereby grants to M.I.T. permission to reproduce and distribute

publicly paper and electronic copies of this thesis document in whole or in

part, and to grant others the right to do so.

Author ...............................

Department of Electrical Engineering and Computer Science

May 23, 2008

Certified by..............................

............

.-...

sociate P

-.....~/

- .

......

Michael Collins

ssor of Computer Science

:.Thesis Supervisor

Accepted by...............

Arthur C. Smith

Professor of Electrical Engineering

Chairman, Department Committee on Graduate Theses

MASSACHUSETTS INSTMTTE

OF TECHNOLOGY

NOV 1 3 2008

3...

RARIES

ARCHIVES

Machine Translation Through Clausal Syntax:

A Statistical Approach for Chinese to English

by

Dan Lowe Wheeler

Submitted to the Department of Electrical Engineering and Computer Science

on May 23, 2008, in partial fulfillment of the

requirements for the degrees of

Master of Engineering in Computer Science and Engineering

and

Bachelor of Science in Computer Science and Engineering

Abstract

Language pairs such as Chinese and English with largely differing word order have proved

to be one of the greatest challenges in statistical machine translation. One reason is that

such techniques usually work with sentences as flat strings of words, rather than explicitly

attempting to parse any sort of hierarchical structural representation. Because even simple

syntactic differences between languages can quickly lead to a universe of idiosyncratic surfacelevel word reordering rules, many believe the near future of machine translation will lie

heavily in syntactic modeling. The time to start may be now: advances in statistical parsing

over the last decade have already started opening the door.

Following the work of Cowan et al., I present a statistical tree-to-tree translation system

for Chinese to English that formulates the translation step as a prediction of English clause

structure from Chinese clause structure. Chinese sentences are segmented and parsed, split

into clauses, and independently translated into English clauses using a discriminative featurebased model. Clausal arguments, such as subject and object, are translated separately using

an off-the-shelf phrase-based translator. By explicitly modeling syntax at a clausal level, but

using a phrase-based (flat-sentence) method on local, reduced expressions, such as clausal

arguments, I aim to address the current weakness in long-distance word reordering while still

leveraging the excellent local translations that today's state of the art has to offer.

Thesis Supervisor: Michael Collins

Title: Associate Professor of Computer Science

Acknowledgements

Many thanks to Michael Collins, who introduced me to Natural Language Processing both

in the classroom and in the lab, and advised my thesis. I'd like to thank Brooke Cowan and

Chao Wang for their continual guidance, especially in the beginning. Thanks to Mom and

Dad. And a huge thanks to Hibiscus for hearing me out on those Saturdays I spent in lab.

Contents

1 Introduction

2 Previous Research in Machine Translation

2.1

13

. . . . . .. . . . . . . . 14

A Quick History of Machine Translation ......

2.2 Advances in Statistical Machine Translation . . . . . . . . .. . . . . . . . . 16

2.2.1

2.2.2

Direct Transfer Statistical Models ......

. . . . .. . . . . . . . . 17

2.2.1.1

Word-based Translation . . . . . . . . . . .. . . . . . . . . 18

2.2.1.2

Phrase-based Translation

. . . . . . . . . .. . . . . . . . . 19

. . . . . . . . . . . . . . 22

Syntax-Based Statistical Models .......

3 Conceptual Overview:

Tree-to-Tree Translation Using Aligned Extended Projections

3.1

Background: Aligned Extended Projections (AEPs)

3.2 Machine Translation:

........

Chinese-to-English-specific Challenges

4 System Implementation

4.1

End-to-End Overview of the System .........

4.1.1

Preprocessing . ................

4.1.2

Training Example Extraction

4.1.2.1

........

Clause splitting . ........

.

4.1.3

4.1.4

4.1.2.2

Clause alignment ..................

4.1.2.3

AEP extraction ...................

Training ............................

4.1.3.1

Formalism: A history-based discriminative model

4.1.3.2

Feature Design .

Translation

4.1.4.1

4.2

4.3

4.4

..................

...................

. . . . .. . . . . . . . 39

Gluing Clauses into a Sentence . . . . . . . . . . . . . . . . 40

Chinese Clause Splitting ................

. . . . . .. . . . . . . 40

4.2.1

Step 1: Main Verb Identification . . . . . . . . . . . . . . . . . . . . . 43

4.2.2

Step 2: Clause Marker Propagation Control

. . . . . . . . . . . . . 46

4.2.3

Design Rationale ................

. . . . . .. . . . . . . 50

Clause Alignment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

4.3.1

Method Used and Variations to Consider . . . . . . . . . . . . . . . . 53

4.3.2

Word Alignments: Guessing Conservatively

AEP Extraction

4.4.1

4.4.2

....................

. . . . . . . . . . . . . 54

.. . . . . . .. . . . . 57

Identifying the Pieces of an English Clause . . . . . . . . . . . . . . . 59

4.4.1.1

Main and Modal Verb Identification

4.4.1.2

Spine Extraction and Subject/Object Detection . .....

4.4.1.3

Adjunct Identification ........

. . . . . . . . . . . . . 61

62

.. . . . . . .. . . . . 63

Connecting Chinese and English Clause Structi re ...........

5 Experiments

.

64

67

5.1

AEP Prediction Accuracy

5.2

System Output Walk-Through ............

5.3

Further Work ........................

. . . . . . . . . . . . . . . . .

. . .

68

70

75

5.3.1

The Need for a Modifier Insertion Strategy . . . .

75

5.3.2

The Need for a Clause Gluing Strategy ......

76

5.3.3

Chinese Segmentation and Parsing

77

. . . . . . . .

6 Contributions

78

Chapter 1

Introduction

State-of-the-art statistical machine translation performs poorly on languages with widely

differing word order. While any translation scheme will struggle harder the less its two

languages have in common, one crucial reason for this performance drop-off is the current

near-complete lack of syntactic analysis: viewing input sentences as flat strings of words,

current systems tend to do an excellent job translating content correctly, but for a hard

language pair, particularly over longer sentences, the grammatical relations between content

words and phrases rarely come out right. Two Chinese-to-English translations produced by

the Moses phrase-based translator of Koehn et al. 12] serve to illustrate:

REFERENCE 1: The Chinese market has huge potential and is an important part

in Shell's global strategy.

TRANSLATION 1: The Shell, China's huge market potential, is an important part

of its global strategy.

REFERENCE 2: In response to the western nations' threat of imposing sanctions

against Zimbabwe, Motlanthe told the press: "who should rule Zimbabwe is Zimbabwians' own business and should not be decided by the western nations."

TRANSLATION 2: In western countries threatened to impose sanctions against

the threat of Zimbabwe, Mozambique, Randt told the media: "Zimbabwe

should

exactly who is leading the Zimbabweanpeople their own affairs, and not by

western

countries' decision."

Notice how the content phrases of TRANSLATION 1, such

as huge potential, important part,

and global strategy, are for the most part dead on. The relations between

them are severely

mangled, however: Shell now appears, to be equated to China's huge market

potential, and

it is unclear what its refers to. Aside from translating Motlanthe as Mozambique,

Randt,1

the content in TRANSLATION 2 is similarly quite good. Incorrect

grammatical relations,

however, similarly ruin the sentence: the opening preposition seems to

suggest that the

event took place in western countries instead of Zimbabwe, and the quote

itself is far from

grammatically competent.

One reason for such low grammaticality is that it is difficult to learn rules for

reordering a

sentence's words and phrases without any knowledge of its high-level structure.

The following

is an example of how, when viewed as flat strings, word order between Chinese

and English

can quickly become complicated:

English Word Order:

Professor Ezra Vogal is one of America's most famous experts in Chinese

affairs

Chinese Word Order: Vogal Ezra Professor isAmerica most famous DE China

affair expert ZHI one

Even simple syntactic differences between languages can, on the surface,

lead to incomprehensible differences in word order. One example is the head-first/head-last

discrepancy

between Japanese and English: Japanese parse trees are similar to those of

English, except

with each node's child order recursively flipped. This discrepancy alone (and

there are of

course many more) leads to an ocean of idiosyncratic word reordering rules,

and yet structurally speaking it can be described quite simply. A similar discrepancy

between Chinese

and English is illustrated in the example sentence pairs below:

1This particular mistranslation of Motlanthe likely stems from a mistake

made during Chinese word

segmentation, by accidentally splitting Motlanthe into multiple words.

[[the person] [WHO bought the house]]

Chinese Word Order: [[bought house DE ] [ person ]]

English Word Order:

A modifier clause goes after

the thing it modifies in English,

before inChinese

Word order quickly diverges as modifier clauses are added

[[the person] [WHO bought [[the house] [THAT everybody wanted]]]]

Chinese Word Order: [[ bought [[ everybody wanted DE ] house I DE ] [ person ]]

English Word Order:

In English, a relative such as who bought the house follows after the noun phrase it

modifies: the person who bought the house. In Chinese, on the other hand, a clause comes

before the noun phrase, forming bought house DE person (where DE is the Chinese equivalent

of who in this context.) When the object of the clausal modifier is itself a complex noun

phrase with its own modifier clauses, the word orderings between languages quickly become

divergent. For a translation system to be able to model this difference between Chinese and

English, clearly some form of representation is needed that can identify the (often nested)

relative clauses and the noun phrases that they modify.

To summarize, machine translation must go beyond flat-sentence-based representation if

it is to succeed in translating languages with significant syntactic differences. The good news

is that steady advances in parsing over the last decade have made syntactically motivated

translation more viable. This thesis applies the discriminative tree-to-tree translation framework of Cowan et al [1] to the Chinese-to-English language pair, with the goal of improving

performance over current methods through explicitly modeling the clausal syntax of both

languages.

Chapter 2 reviews previous research in machine translation and orients the reader within

its current landscape. Chapter 3 introduces and motivates the core concept of clausal translation using Aligned Extended Projects, and discusses inherent Chinese-to-English-specific

challenges. Chapter 4, the meat of the thesis, explains how the system actually works. Chapter 5 probes system performance through experiments and discusses essential further work.

The final chapter summarizes my contributions.

Chapter 2

Previous Research in Machine

Translation

Machine Translation - the automatic translation of one natural language into another through

the use of a computer - is a particularly difficult problem in Artificial Intelligence that has

captivated researchers for nearly 6 decades. Its difficulty and holy grail-like end goal has

only made it a more fascinating and addictive research area. At the same time, however,

the fact that learned humans can do translation effectively is a proof by example that "Fully

automatic high quality translation" is an achievable dream. Moreover, despite its flaws, MT

has already proved itself over the years as a useful technology, and one that has initiated

several successful commercial ventures including SYSTRAN and Google Translate.

This chapter aims to briefly outline the history of MT, to orient the reader within the

current state of the art, and to contrast the work of this thesis against other contemporary

approaches. My goal is not to give a comprehensive summary of the current literature;

rather, I have selected a few bodies of work that illustrate the field as a whole.

2.1

A Quick History of Machine Translation

Initial enthusiasm for machine translation began shortly after the great success of World

War II code breaking efforts. Warren Weaver, the director of the Natural Sciences Division

of the Rockefeller Foundation, released the first detailed proposals for machine translation

in his March, 1949 memorandum

15].

These proposals were heavily based on information

theory and cryptography, modeling a foreign language as a coded version of English. His

proposals were a stimulus for research centers around the globe.

The Georgetown-IBM experiment, performed on January 7th, 1954, was the first widely

publicized demonstration of machine translation. The experiment involved the fully automated translation of 60 Russian sentences into English. Specializing in the domain of organic

chemistry, the system employed a mere 6 grammatical rules and a vocabulary of only 250

words. It was nevertheless widely perceived as a great success - the authors went so far as

to claim that machine translation would be a solved problem in a couple years [6], believing

that the complexity of natural language wasn't significantly greater than the codes that had

been fully deciphered during WWII.

Within those 3 to 5 years researchers began to understand just how complex the problem

of machine translation indeed is. Their early systems mostly used painfully hand-enumerated

bilingual dictionaries and grammatical rules for modeling word order differences between languages, a technique that was quickly recognized as fragile and restrictive. New developments

in linguistics at the time, largely Chomsky's transformational grammar and the budding field

of generative linguistics, served as a theoretical foundation for more expressive translation

systems.

Fueled heavily by the Cold War, research in machine translation continued full steam

throughout the early 1960s. It is worth mentioning that imperfect translation systems proved

to be valuable tools even at this early stage. As an example, both the USSR and the United

States used MT technology to rapidly translate scientific and technical documents, to get a

rough gist of their contents and determine whether they were of sufficient security interest -

whether they should therefore be passed to a human translator for further study. The poor

quality of the translations, in this context, was made up for by the stamina with which a

computer mainframe could perform.

In 1966, after a decade of high enthusiasm, heavy governmental expenditure, and results

that were far less than satisfying, research in machine translation, in the United States at

least, almost entirely came to a halt. The Automatic Language Processing Advisory Committee (ALPAC), a body of seven scientists commissioned by the US government, published

an influential and pessimistic report that triggered a major reduction in funding for fully

automated machine translation. It recommended instead that more focus be placed on foundational problems in computational linguistics, as well as tools for aiding human translators

such as automatically generated dictionaries [20]. While such foundational research did give

better theoretical background for modern MT, 1966-1978 was a difficult period for the field.

It is important to note that part of the aftermath of this loss of funding was the establishment of several commercial ventures by former academics. Several successful MT systems

resulted that are still in use today, including SYSTRAN 1 and Logos.

A source of frustration in building MT systems at the time lay in the endless amount

of effort highly trained linguists and computer scientists had to spend explicitly modeling

a pair of languages and the differences between them. Most of the systems of the time

can safely be called rule-based for this reason, in that they consisted of a large body of

hard-coded rules for how to analyze, represent, and translate one language into another.

SYSTRAN and Logos fit squarely into this paradigm. MT Research steadily accelerated in

the 1980s, budding into several new approaches by the end of the decade. Statisticalmachine

translationis one such area that has the great advantage of not requiring an enumeration of

rules and other linguistic knowledge. Rather, SMT approaches start with a large corpus of

text, either plain or linguistically annotated, and learn how to translate on their own through

statistical models and machine learning algorithms. Interest in SMT grew as the availability

iThe core translation technology used in Babel Fish and formerly Google Translate.

of inexpensive computational power, in step with the advent of personal computers, began

to soar.

While relatively nascent, statistical machine translation is now the dominant subfield

in MT research. Google Translate is an excellent commercial SMT success story, and was

consistently ranked at or near the top of the last NIST Machine Translation Evaluations in

2006. The next section reviews SMT up to the present.

2.2

Advances in Statistical Machine Translation

Machine translation models, statistical or otherwise, are traditionally pictured in terms of

the machine translation pyramid diagrammed in figure 2.1. Machine translation is conceptualized into three stages: analysis, transfer and generation. Analysis interprets an input

text to produce the representation that the translation system reasons about. For example, a

system might perform a syntactic analysis that produces a parse tree from a foreign sentence.

On top of that, it might go further to analyze the semantics of the sentence, forming a deeper

representation such as first-order logic or lambda calculus. The final stage, generation, works

in reverse of analysis, extracting an English text from the transferred representation. As an

MT system's internal representation - a parse tree, for example - often consists of strictly

more information than the input text that it is constructed from, this generalization step is

typically easier than the analysis. It is for deeper analysis, where the internal representation

moves increasingly further away from the original foreign input, that generation becomes a

substantial challenge.

Typically, as more analysis is performed, the transfer stage becomes less and less prominent. Hence the pyramid. At the top of the pyramid, where foreign texts are converted to

a language-independent interlingua representation, no transfer step is needed at all. Similarly, systems at the base of the pyramid perform no analysis and instead comprise one large

transfer step, reasoning with input texts as flat strings of words.

Interlinqua

ation

Ar

Foreign Words

English Words

Figure 2.1: Machine Translation Pyramid. Machine Translation is viewed in three stages: analysis, transfer

and generation. Analysis first interprets a foreign input text to form the representation that the translation

system reasons over. Transfer then converts this representation into an English representation. As more

analysis is performed, transfer becomes less prominent. At the top of the pyramid, where texts are converted

to a language-independent interlingua representation, no transfer stage is needed at all. Systems at the base

of the pyramid conversely consist of one large transfer step, performing no explicit syntactic or semantic

analysis. The final stage, generation, extracts an English sentence from the transferred representation.

Statistical machine translation is still crawling at the bottom of the pyramid. Direct

transfer architectures to date, excepting a few syntax-based competitors, have significantly

outperformed all other approaches that involve some sort of syntactic or semantic analysis.

Climbing up one rung of the MT triangle, several new syntax-based translation models are

beginning to show potential. The next sections review direct transfer and syntax-based

translation models, and contrast the approach of this thesis against other works.

2.2.1

Direct Transfer Statistical Models

Direct transfer models work with foreign text nearly the way that it is input: as a flat string

of word tokens. Their representation is therefore minimal. Word-based and phrase-based

direct transfer approaches are reviewed in this section.

2.2.1.1

Word-based Translation

In the early 1990s, IBM developed a series of statistical models that, despite a near complete

lack of linguistic theory, pushed forward the state of the art and inspired future systems.

While the models, now named IBM Models 1 through 5, did not always outperform wellestablished rule-based systems such as SYSTRAN, part of the attractiveness of the IBM

models was the relative ease with which they could be trained. Contrasted with the years

upon years that linguists and computer scientists spent encoding the translation rules behind SYSTRAN and other systems, the designers of the IBM models could simply hand a

computer a corpus of parallel sentence translations and let it crunch out the numbers quietly

on its own, simply by counting things - for example, the co-occurances of English words

with foreign words.

The IBM models, and several SMT systems to follow, all learn parameters for estimating

p(elf), the probability of an English sentence e given a foreign sentence f. Applying Bayes'

rule, this is equivalent to maximizing p(e) -p(fle), where p(e) is a language model - a

probability distribution that allocates high probability mass to fluent English sentences,

independent of the corresponding foreign input - and an inverted translation model p(fle).

The IBM models estimate p(f le). A range of techniques for building language models, such

as smoothed n-gram models, are combined with an IBM model to form p(elf). Translation

then becomes a decoding problem in this view:

e = argmaxep(elf) = argmaxep(e) -p(f e)

Translation decodes a foreign sentence into an English sentence, by finding the English

sentence 6 that maximizes p(elf). The IBM models are commonly called word-based systems

because they make predictions for how each word moves and translates, independent of other

words.

IBM models 1 through 5 break p(fle) into an increasingly complex product of several

distributions, with each distribution measuring a different aspect of the variation between

one language and another. The following diagram, adapted from [24], is a canonical example

that demonstrates a minor simplification of IBM Model 4 translating a sample English

sentence into Spanish. The model works in four steps: fertility prediction, null insertion,

word-by-word translation, and lastly, distortion.

Mary did not slap the green witch

_ _/

(3Fertility

n(31slap)

Mary not slap slap slap the green witch

Tp-null

Null Insertion

Mary not slap slap slap NULL the green witch

Translation

t(verde I green)

Maria no daba una botefada a

!X

verde bruja

Distortion

verde

Maria no daba una botefada a ia bruja

Fertility allows one English word to become many foreign words, to be deleted, or to

remain as a single foreign word. In this case, "did" is deleted (fertility 0), and "slap" grew

into 3 words (fertility 3). Null insertion allows words to be inserted into the foreign sentence

that weren't originally present in the English. Later down the road, the NULL word in this

example becomes the the Spanish function word "a". Next, each English word, including

NULLs, is independently translated into a Spanish word. Finally, distortion parameters

allow these words to be shuffled around, to model the differences in word order between

Spanish and English. Probability distributions for each of these steps are learned during a

training phase, by counting various events in a parallel corpus. An Expectation Maximization

algorithm 123] is typically employed to do the counting. During training, the decoder searches

for the English sentence e that maximizes the combined product of these four groups of

learned distributions.

2.2.1.2

Phrase-based Translation

A weakness of word-based systems that early phrase-based systems - the next generation

of direct transfer SMT - improved greatly upon is the loss of local context that is inherent

in translating each word independently. The IBM models can't leverage the fact that very

commonly between one language and another, the translation of a given word will depend

heavily on the words nearby. Consider an idiom for example: it is much more natural to

translate an idiom like kick the bucket as a whole, than it is to consider all the combinations

of word-by-word translations and pick the most probable. Similarly, collocations such as

with regards to are often best translated as a whole. In translating from English to Chinese,

for example, it is very common to translate with regards to as the single word )T.

Phrase-based systems improve upon word-based systems in exactly this area by modeling many-to-many word translations - building a phrase table, rather than a word-to-word

dictionary, that counts co-occurances of foreign and English phrases among other events.

Phrase-based systems learn a probability distribution p(fle) similar to the IBM models, and

can be seen as consisting of three steps: dividing the sentence into contiguous phrases, reordering the sentence at the phrasal level, and lastly, translating each phrase independently

to form the output foreign sentence. Combined with a language model p(e), the model can

then be used to estimate p(elf) via Bayes' rule.

She told me with regards to the new agenda to proceed on Thursday

Input Sentence

IShe told me with regards tolthe new agendallto proceed on Thursdayl Identify Phrases

Reorder Phrases

the new agenda with regards to She told me to proceed on Thursday

I

I

Ithe new agenda with regards tollShe told mellto proceed on Thursday

Translate Each Phrase

Independently

Output Sentence

(italics indicate foreign translations)

Note that the phrases that a phrase-based system learns to recognize are only of a statistical significance; the phrases are not necessarily linguistically motivated or otherwise

theoretically grounded. Similar to the IBM models, translation consists of a decoding step

where the optimal phrase boundaries, reorderings, and phrase translations are searched for

over all possibilities. Due to the combinatorial explosion of possibilities in the search, designing algorithms for how to build phrase tables during training, and how to search over

them during online translation, is no easy challenge. A large body of literature exists on

these subjects, including [7, 2]. Moses is a popular open source phrase-based decoder used

in this thesis.

Like word-based systems, a great advantage of phrase-based systems is the relative simplicity of the models and corresponding ease with which they can be constructed. To train

a phrase-based decoder like Moses, the MT practitioner merely needs to hand the software

a parallel corpus of translations and leave it cranking for several hours or days. Simplicity

and ease of training aside, phrase-based systems have now become a mainstream technique

in machine translation, contending well with SYSTRAN and other culminations of several

decades of research in rule-based systems. Google Translate, for example, perhaps the leader

in commercial machine translation, has quietly switched from a SYSTRAN backend to an

in-house phrase-based system. By October 2007 all supported language pairs were switched

to Google's own software [13].

Phrase-based systems are nevertheless quite far from the accuracy and fluency of human

translations. Perhaps the most accessible shortcoming of the models is their inability to learn

syntactic differences between languages: they have no abstract sense of nouns, verbs, clauses,

prepositional phrases, and so on. Rather, the knowledge that a phrase-based system (and

other direct-transfer systems) gleans from a corpus is highly repetitive - highly lexicalized in that it must learn the same patterns for distinct but similar word combinations. Consider

the following similar Spanish-to-English translations, taken from Cowan's PhD dissertation:

SPANISH:

la cosa roja

SPANISH:

la manzana verde

GLOSS:

the house red

GLOSS:

the apple green

ENGLISH:

the red house

ENGLISH:

the green apple

Both cases involve a Determiner+Noun+Adjective -> Determiner+Adjective+Noun translation reordering, and yet a phrase-based system would need to learn separate rules for each

word group. One can imagine the combinatorial explosion of lexicalized rules, all of them

related to this simple syntactic difference between Spanish and English. A phrase-based

system therefore cannot effectively generalize this pattern to use it on a new, previously

unencountered Determiner+Noun+Adjective combination.

The Spanish-English example illustrates one small difference between noun phrases of

each language. Differences at higher syntactic levels, ones that result in substantial longdistance word reorderings, also cannot at all be modeled using phrase-based systems. The

head-first/head-last discrepancy between English and Japanese, and the difference in the

attachment of relative clauses in English and Chinese are both excellent examples of syntactic phenomena that phrase-based systems cannot effectively capture. Wh-movement, the

phenomena in English responsible for the movement of question words like which and what

to the beginning of a sentence, is another classic example.

Researchers in the last few years have made progress augmenting phrase-based systems

to incorporate some syntactic knowledge. Liang et al. 121] made improvements by moving

part-of-speech information into a phrase-based decoder's distortion model. Koehn and Hoang

122] have generalized phrase-based models into factor-based models that behave much the

same way but can incorporate additional information like morphology, phrase bracketing and

lemmas.

2.2.2

Syntax-Based Statistical Models

Syntax-based statistical machine translation is growing increasingly prominent in the field.

This section describes two rough categories in which such systems tend to differ - choice of

representation and the languages modeled - and orients my system within those categories.

I and many others incorporate context-free phrase structure into our syntactic representations. Several other representations, however, are currently being explored. In the

last few years, tree-to-tree transducers 115] have surpassed phrase-based systems in Chineseto-English translation, as judged through both human evaluation and statistical metrics.

Chiang's [16] hierarchical phrase-based representation, at the other end of the spectrum,

effectively generalizes broad syntactic knowledge from an unannotated corpus, from within

a much looser formalism.

The languages that are syntactically modeled - source, target or both - form another

dimension over which syntax-based SMT systems differ. My approach and other tree-to-tree

translation systems, such as Nesson and Shieber's approach [25], model both the syntax of

source and target languages. Xia and McCord [18] focus on source-side syntax, automatically

learning how to restructure the child order of the nodes of a source parse tree to make it

look more like a target parse tree. Yamada and Knight [17], on the other hand, present a

system that probabilistically predicts a target parse tree from an input source string.

So far I've described my system in broad terms. More specifically, my system focuses

on modelling clausal syntax, the syntax of verbs, through Aligned Extended Projections.

Chapter 3 introduces and motivates this model in detail.

Chapter 3

Conceptual Overview:

Tree-to-Tree Translation Using Aligned

Extended Projections

Brooke Cowan, Ivona Kuierovd and Michael Collins designed and implemented a tree-totree translation framework for German to English in 2006 [1], with similar performance

to.phrase-based systems 12].

This thesis centers around applying their approach to the

Chinese/English language pair, and exploring new directions for their relatively new model.

This chapter introduces and motivates that model at a conceptual level. Section 3.2 explains

why "porting" Cowan's approach to Chinese-to-English is exciting and substantial, rather

than merely a matter of implementation.

At the highest level, translation proceeds sentence-by-sentence by splitting a Chinese

parse tree into clauses, predicting an English syntactic structure called an Aligned Extended

Projection (AEP) for each Chinese clause independently, and, finally, linking the AEPs to

obtain the English sentence. Verbal arguments, including subjects, objects, and adjunctive

modifiers (such as prepositional phrases), are translated separately, currently using a phrasebased system [2]. The hope is to achieve better grammaticality through syntactic modelling

independently

High-level: Split Chinese sentence into clause structures, predict English clause structures

PREDICT

NP

NP

V

N

VP

NP

V

N

C(Paskwlauw

CP-bedinu

haog %aid

P,,Ap~oru

- l"e

NP

a', ,: :,)

f hirn

SP

i, .j

PREDICT

VP

NP

U

N

VP

VP

VP

NP

I

PN

VP

V

NP

V

VP

be

ihj(

NP

a

NP

v

P

'P

PP

.. I(

NP

V

DFT' N

maim

PP

y I

NP

(lio

kE

VP

Ti

t PREDIC

:P

'ow

Ri

the trtaa

PREDICT,,.

VP

PP

PP

V

IA

]L:

E h

)Nk91C~ il~

rtt(PVP$JY~

hew'~

Pt

ttttatpe

PP

+ii o'+ km hrlwmn

into separate

Figure 3.1: Translation scheme at a high level. A Chinese sentence is first parsed and split

then predicted for

clauses. An English clause structure, called an Aligned Extended Projection (AEP), is

to obtain the

each Chinese clause subtree independently. The final step (not shown) is to link the AEPs

the

illustrates

3.2

figure

final English sentence. Note that this is a simplification of the AEP repesentation;

AEP prediction step in detail.

direct

at a high level, while still maintaining high-quality content translation by applying

and

transfer methods to reduced expressions. Figure 3.1 shows a simplified view of the split

prediction stages on a Chinese-to-English example.

3.1

Background: Aligned Extended Projections (AEPs)

Aligned Extended Projections build on the concept of Extended Projections in Lexicalized

Tree Adjoining Grammar (LTAG) as described in [3], through the addition of alignment

information based on work in synchronous LTAG [4]. Roughly speaking, an extended proa

jection applies to a content word in a parse tree (such as a noun or verb), and consists of

syntactic tree fragment projected around the content word, which encapsulates the syntax

that the word carries along with it. This projection includes the word's associated function

words, such as complementizers, determiners, and prepositions. The figure below shows the

EPs of three content words - said, wait, and verdict - for the example The judge said that

we should wait for a verdict. Notice how the EPs of the two verbs, said and wait, include

argument slots for attaching subject and object. Importantly, an extended projection around

a main verb encapsulates that verb's argument structure: how the subject and object attach

to the clausal subtree, and which function words are associated with the verb.

S

NP

SBAR

VP

SBAR

V

C

that

said

S

NP

VP

V

I

PP

should

V

PP

NP

P

I

for

VP

wait

D

N

I

I

a

verdict

An Aligned Extended Projection (AEP) is an EP in the target language (English) together

with alignment information that relates that EP to the corresponding parse tree in the source

language (Chinese). For the remainder of this proposal I will speak of clausal verb AEPs

only: in this case the extra alignment information includes where the subject and object

should attach, and where adjunctive modifiers should be inserted.

Anatomy of an Aligned Extended Projection

A good way to understand an AEP is to investigate the way my system predicts one from

a Chinese clause. The AEP prediction step - the core of the translation system - is diagrammed thoroughly in figure 3.2.

(The linguistic terminology used in this overview is

also reviewed toward the right of the figure.) The input is a Chinese clause structure: an

SDEC

IN

PredictEnglishCause Backbone

that

(DE)

NP- ubj

PP VP

_Terminology

S

CP

NP-Subj

VP

V

Input:Chinese clause structurewith

modfers [1] and [2] Identfied

Englishclause backbone structure

(called the verb'sExtended Projection).

Argument essentil clausal arguments,

such as subject and object,

thata givenclauseneedsto

be grammaticallycomplete:

Thebiyate a sandMwhh

Adjunct:somethnglike a prepositionalphrase

that adds o a clause,andyet if it were

removed,the clausewill soilbe grammatical:

Theboy fromNew rt a sandwichat noon

Modifier:

arguments

andadjuncts

om New obrkatea sandwkh

The

Predict English argument

modifiers

Step 3:

Graft/Deleteremanng Chinese modifiers

IN

that

NP-A

VP

-that

V

Output:Englishclause structure withfull alignment information

(a fullAligned ExtendedProjectlon:

havebeengrafted onto various positions, ordeleted.

remainingChinesemodifiers

position.

[2] was graftedto the post-verb

in this case. Chinese modifiers

IN

IN

NP-Subj

VP

v

witharguments aligned.

Englishclause structure

(Inthis case, the subject position s aligned to

Chinesemodifier11].)

Extended

Projection

(EP):

the syntactic fragment a contentwordprojects

und el. A verb'sEP reveals the structur o

theclause:where the subjectandobjectattach,

and whichfunctionwords(suchas that andto) am

usedto relatethe arguments.

(AEP):

AlignedExtendedProjection

an extended projectionwith exta infrmaon that

foreignstructure.This

aligns It to a correspondingo

thesis modelsthe EnglishAEPsof clausalverbs,so the

of whichChesmse

alignmnt Infrnnatonconsists

subject and

modller re inserted Intothe English

object positions andin which positionsthe remaining

modifiers are grated," Ifanywhere.

Chinesemodifier

Notethat In this representton, any

maybe Inserted Into Englishsubl orobject position.

As examples, a Chinesepreposionalphrase

(an adlunct) canbecome the Englishsubject,

and a Chinese subjectcanbe graftedonto its English

counterpartas an adjunct.

Figure 3.2: AEP Prediction broken down into three steps. The input is a Chinese extended projection.

The first step is to predict an English extended projection from the Chinese. The next two prediction steps

align that English clause to the Chinese clause: step 2 predicts where the English subject and object should

be aligned, and step 3 finally predicts the alignment of the remaining Chinese modifiers.

extended projection around a Chinese verb, indicating where modifiers such as subject and

object attach. The first step is to predict the corresponding English verb and its extended

projection. In the figure above, that extended projection indicates that the English clause

starts with the function word that, shows where the subject attaches, and also indicates that

the English clause has no object. The second and third steps predict how the English clause

structure is aligned to the Chinese input. Step 2 looks at the English clause's subject and

object attachment slots if present, and figures out which Chinese modifiers should be aligned

to them. Step three determines the alignment of any remaining Chinese modifiers.

A more technical specification of the AEP representation that I use in this thesis is given

in table 3.1. Specifically, an AEP can be thought of as a basic record datatype that consists of

several fields: an English verb, a syntactic spine projected around that verb that indicates its

clausal structure, and several pieces of alignment information - subject, object, and modifier

attachment - that link that English verb's clause structure to a parallel Chinese structure.

An additional AEP field indicates which wh function words (e.g. in which, where, etc.) are

AEP field

Description

STEM

SPINE

WH

MODALS

SUBJECT

Clause's main verb, stemmed.

Syntactic tree fragment capturing clausal argument structure.

A wh-phrase string, such as in which, if present in the clause.

List of modal verbs, such as must and can, if present.

- The Chinese modifier that becomes the English subject, or

- NULL if English clause has no subject, or

- A fixed English string (e.g. there) if English subject doesn't correspond to a

Chinese modifier.

OBJECT

MOD[1...n]

Analagous to SUBJECT

Alignment positions for the n Chinese modifier phrases that have not already been

assigned to SUBJECT or OBJECT.

Table 3.1: The AEP representation used in this thesis is a simple record datatype. During the AEP

prediction step - the core of the translation model - each field is predicted in sequence.

present in the English clause.'

My system predicts each of these fields in order: first the main verb, followed by its

English syntactic spine, and so on. The order matters because the prediction of a given

field depends in part on predictions made for previous fields: syntactic spine prediction (the

SPINE field), for example, is highly dependent on the main verb (STEM field) that the spine

is projected around.

Cowan's tree-to-tree framework [1] provides techniques for (1) extracting <source clause,

target AEP> training pairs from a parallel treebank, and (2) training a discriminative

feature-based model that predicts target AEPs from source clauses. My system uses modifications of these techniques, explained in detail in Chapter 4.

1

This representation is a simplification of that used in [1]. For example, Cowan's AEP representation

also included fields for the English clause's voice (active or passive) and verb inflection.

3.2

Machine Translation:

Chinese-to-English-specific Challenges

The easy side of this project is that Cowan's framework for German-to-English translation,

along with preexisting word segmenters, part-of-speech taggers, and parsers, is ready to be

leveraged. One might think that applying a translation system to a new language pair is a

relatively straight-forward task - grungy perhaps but not intellectually exciting. This section

illustrates why that is simply not the case, highlighting several of the unique challenges that

the Chinese-to-English language pair brings forward.

Linguistic modelling challenges: Novel feature set needed for Chinese-to-English

The features developed for Cowan's German-to-English translation framework heavily exploited dependencies specific to the German and English languages. One contribution of this

thesis is the development a new feature set tailored to the Chinese/English language pair.

To give one example, because the Chinese languages do not have an inflectional morphology,

new features must be developed to predict the inflected forms of translated English verbs.

Written Chinese uses the aspect marker zai ZHCAR, for example, to express the progressive

aspect, rather than the -ing verb suffix used in English. I developed features that are sensitized to this closed class of Chinese aspect words, to try to help predict English inflectional

forms.

Error propagation from word segmentation

In written form, Chinese words contain no spaces between them. While words do indeed

exist in the Chinese languages, word boundaries aren't present on paper. One theory is that

a character-based written system, as opposed to a phonetic system such as written English,

makes it easier for readers to recognize the different words in a sentence, so word separation

becomes unimportant. Either way, the extra processing step of segmenting (aka tokenizing)

Chinese sentences into words creates a new layer of ambiguity and an early source of error that

can easily derail later stages. As an example of one class of segmentation-related problems,

most segmenters, when confused, tend to break up a chunk of characters into many singlecharacter words.

Long transliterated names are particularly vulnerable to segmentation

into several nonsensical nouns and verbs.

The transliteration of "Carlos Gutierrez," for

example, contains seven characters selected for their sound. On their own, however, four of

the characters, as examples, mean "to block," "iron," "thunder," and "ancient." Translation

would fail hilariously if the segmenter were to break this name into these separate singlecharacter words.

A clause-by-clause translation system such as presented in this thesis

would fail particularly badly - each verb (such as "to block") created from the nonsensical

segmentation would likely be interpreted as its own clause, complete with subject and object.

The Chinese/English corpus used in this thesis was processed with a special segmenter

that attempts to lessen the impact of exactly this problem - when the segmenter doesn't

know how to divide up a string of characters, it tends to leave them strung together rather

than splitting them up into single character words.

Fragility with respect to parsing errors

The biggest danger in any translation scheme that incorporates syntactic analysis is that

the analysis can potentially do more harm than good through the mistakes it introduces.

Because Chinese-to-English translation performs rather poorly in methods that forgo syntactic analysis, my hunch in building this system was that the trade-off would be worth it.

At the same time, however, Chinese sentence parses are generally worse than those of German and English: word segmentation errors alone are often enough to result in nonsensical

parses. Additionally, properties of the Chinese language family, including topicalization and

subject/object dropping, also partially explain the relative decrease in parse quality from

a parser [10] designed for English. The challenge is to be especially cautious of erroneous

parses. My system is conservative in this respect, through safety checks made during the

extraction of training data. Essentially, if any safety checks fail, my system considers a given

sentence a misparse and moves on to the next sentence, without extracting any training

data. The details are explained in 4.3. Training aside, parsing mistakes made during actual

translation nevertheless greatly impact performance.

To give an example of the sorts of parse errors that can cripple my translation system,

consider Chinese names. Because most names are rare proper nouns, it is frequently the

case that a given Chinese name will not have been previously encountered in the training

data. Add the fact that the characters that compose Chinese names frequently have other

meanings, and one can understand why it is relatively easy for the parser to make an error

by not recognizing a name as a proper noun - or even a noun at all. Consider the name Lin

Jianguo ZHCAR, for example. The surname, Lin ZHCAR, 2 means forest. The given name,

Jianguo ZHCAR, roughly means to build the country. Given a misparse, my system could

easily dedicate an entire clause to this name, with disastrous results. Segmentation errors

only make these sorts of problems worse.

Lack of inflectional morphology

The Chinese language family contains no verb conjugations, and no tense, case, gender or

number agreement. Tense, for example, is established implicitly, and can only be determined

for a given sentence without other context if it contains a temporal modifier phrase such as

"today" or "last Saturday." One major extension to Cowan's model for Chinese-to-English

that may be worth pursuing in the future would be to do extra bookkeeping to keep track

of tense information from earlier clauses, as a means of filling in missing information in later

clauses. Clause predictions would then no longer be strictly independent of each other. For

now, I have no means of combating this problem.

2

Contrary to English, in Chinese languages the surname comes first, followed by the given name.

Subject/Object dropping

Chinese is a pro-drop language, meaning once established in previous sentences, the subject

and object (sometimes both) may be dropped from a sentence entirely. This makes sentenceby-sentence translation particularly ill-suited to Chinese. Similar to the implicit tense issue,

I'm currently thinking about ways to use subject and object information from previous

sentences to fill in missing information - it is a hard problem, and one not explored in this

thesis.

Rich idiomatic usage

Chinese formal writing is full of condensed 4-character idioms called Chengyu. These often

contain a subject and verb and yet should be translated into English as a single word. One

idiom, for example, translates directly as draw-snake-add-legs, and yet in most sentences

should be translated as the single word unnecessary or redundant. The Chinese language

contains thousands of such examples. Phrase-based systems tend to do a good job on these

types of condensed idioms, because they can bag up the entire idiom and translate it in

one piece. Syntactic analysis may get in the way in this respect: it is challenging to handle

idioms as effectively as phrase-based systems.

Chapter 4

System Implementation

A working Chinese-to-English clausal translation system is a major contribution of this thesis.

Chapter 4 presents this system and descries its parts in detail. Section 4.1 sketches the full

system at a high elevation, outlining the system's three stages: training data extraction,

training, and translation. Training data extraction - deriving <Chinese clause input, English

AEP output> training examples from a parallel corpus of Chinese and English sentences is the area that required the greatest amount of research. Sections 4.2-4.4 describe the three

steps that I developed: clause splitting, clause alignment and AEP extraction.

4.1

End-to-End Overview of the System

The system consists of three separate stages: extraction of training examples, training of

the model, and translation. Figure 4.1 gives an overview of the extraction and translation

stages. All sentences are first segmented, tagged, and parsed. These steps are reviewed

under 4.1.1. Training example extraction, reviewed in detail in sections 4.2-4.4, is sketched

briefly in 4.1.2. Given appropriate training data, the underlying AEP prediction model is a

linear history-based model trained with the averaged perceptron algorithm [12] and decoded

Output:

EnglishSentence

Output: TrainingPairs

t

T

r

IT

t

r

T

a

I

T

I

I

Chines

InentP

ntence

Input:

Parallel English Sentence

Training Data Extraction

Input:

Chinese

Sentence

Translation

Figure 4.1: Control flow during training example extraction (left) and translation (right).

Note that training happens between these two stages. Extraction consists first of monolingual analysis

over each language: word segmentation (Chinese only), part-of-speech tagging, parsing and clause splitting.

Chinese/English clause subtrees are next paired together according to word alignments, or thrown away if

judged inconsistent. An English AEP data structure is then generated from the clause pair, resulting finally

in a training example: a Chinese clause subtree paired with an English AEP. Translation starts with the

same preprocessing steps, by converting a Chinese sentence into several clause subtrees. An AEP is then

predicted from each clause independently according to the trained model. The final translation step is to

link the AEPs together and flatten them, yielding an English sentence as output.

using beam search.' Section 4.1.3 describes the training procedure. Section 4.1.4 describes

how the trained model is used to perform translations.

4.1.1

Preprocessing

Written Chinese, like many East Asian languages, does not delimit the words within a

sentence. It is therefore necessary to segment Chinese sentences into words before other

processing can take place. Chinese word segmentation is a difficult, frequently ambiguous

'Combining the perceptron algorithm with beam-search is similar to a technique described by Collins

and Roark [9].

task, and a prerequisite of all other steps.

Next, parsing takes a segmented sentence and derives a context free parse tree. I'm

currently using a Chinese-tailored variant of the Collins parser [10] trained on the Penn

Chinese Treebank [14]. Part-of-speech tagging is first performed using a maximum entropy

statistical tagger.

4.1.2

Training Example Extraction

After preprocessing, the training corpus consists of parallel Chinese/English sentence parses.

The next step is to process the corpus to extract the desired <Chinese clause subtree, English

AEP> training example input/output pairs. This is performed deterministically, through

several carefully tuned, hard-coded heuristics. These heuristics are the product of both direct

observation of the data, and the annotation standards of the Penn Chinese and English

treebanks. The aim is not to be correct 100% of the time, but to capture the bulk of the

accurate training data available with minimal rules.

Each training data extraction step is diagrammed in the left portion of figure 4.1. Clause

splitting, clause alignment, and AEP extraction are major contributions of this thesis, and

focused on in detail in subsequent chapters.

4.1.2.1

Clause splitting

Because my system produces translation at the clausal level, parse trees first need to be

broken into separate clause subtrees. I use a small set of rules to do this on the Chinese side,

in two steps. Minimal verb phrases are first marked as clauses. These clause markers then

propagate up through their ancestors according to a series of constraints. One constraint,

for example, allows an IP to pass its clause marker to a CP parent, in effect including a

complementizer word within the clause. Clause markers propagate until no more propagations are allowed. I have implemented this technique and found it works well. I use Brooke

Cowan's clause splitter on English parse trees. Clause splitting is reviewed in section 4.2.

4.1.2.2

Clause alignment

This step serves two purposes: (1) align the clauses in a sentence pair and (2) filter out potentially bogus training data. Clause alignments are made based on symmetric word alignments

from the GIZA++ implementation of the IBM models [7], by computing word alignments

for Chinese/English and English/Chinese and taking their intersection. Any sentence pair

that contains an unequal number of clauses is discarded from the start. Furthermore, if one

Chinese clause contains words aligned to multiple English clauses, or vice versa, all involved

clauses are discarded. This filtering is intentionally conservative (high precision, low recall),

to increase the quality of the training examples in the face of occasionally inaccurate parse

trees. Clause alignment is reviewed in detail in section 4.3.

4.1.2.3

AEP extraction

In the training stage, a machine learning algorithm will inspect a corpus of <Chinese clause,

English AEP> example pairs to attempt to learn how to predict an English AEP given a

Chinese clause. This final step of training data extraction refines a raw <Chinese clause,

English clause> translation pair into the format that the learning algorithm requires: a

<Chinese clause, English AEP> input/output example pair. AEP extraction is reviewed in

detail in section 4.4.

4.1.3

Training

This section reviews the underlying representation used to predict English AEP structures

from Chinese clauses: a history-based, discriminative model. Such a model offers two major

advantages. The greatest strength in a feature-based approach is the flexibility with which

qualitatively wide-ranging features can be integrated. As examples, features used in this

thesis include structural information about the source clause and target AEP under consideration, properties of the function words present (such as modal verbs, complementizers

and wh words), and lexical features of the subject and object. Secondly, feature sets can

be developed with relative ease for new language pairs: while features in the original model

exploited German/English dependencies, I built a custom set for Chinese/English.

4.1.3.1

Formalism: A history-based discriminative model

Following work in history-based models, I formalize AEPs in terms of decisions, such that

the prediction model can be trained using the averaged perceptron algorithm [12]. The

formalism presented closely follows that presented by Cowan et al. [1] in section 5.1.

Assume a previously extracted training set of n examples, (xi, yi), for i = 1... n. Each xi

is a clause subtree, with y being the corresponding English AEP. Each AEP yi is represented

as a sequence of decisions (dl,... , dN), where N, a fixed constant, is the number of fields

in the AEP data structure: d, corresponds to the STEM field, d2 corresponds to SPINE,

and so on, ending with dN as the last MODIFIER field.

Each d3 , j = 1... N, is a member of Dj, the set of all possible values for that decision. I

create a function ADVANCE(xi, (dl, d2 ,... , d(j_))) that returns a subset of Dj corresponding to the allowable decisions dj given xi and previous history (dl, d2 , ... , dj_). A decision sequence (dl,..., dN) is well-formed for a given x if and only if dj E ADVANCE(x, (dl,..., dj_l))

for all j = 1... N. The generator function GEN(X) is then the set of all well-formed decision

sequences for x.

The model is defined by constructing a function O(x, (dl,..., dj- 1 ), dj) that maps a decision dj in context x, (dl,..., djl) to a feature vector in Rk, where k is the constant number

of features in the model. For any decision sequence y = (dl,... , dn), partial or complete,

the score is defined as:

SCORE(x, y) = Q((x, y) a

where (I(x,y) is the sum of the feature vectors corresponding to each decision (dl,..., dm),

namely, I(x, y) = Ej'=i (x, (d, ... dj-1),dj).

The parameters of the model, !

REk,

weight the features by their discriminative ability.

a is precisely what is learned during training. The perceptron algorithm is a convenient

1

2

3

4

5

6

7

8

9

10

11

12

main verb

any verb in the clause

all verbs, in sequence

clausal spine

full clause tree

complementizer words

label of the clause root

each word in the subject

each word in the object

function words in the spine

adverbs

parent and siblings of clause root

1

2

3

4

5

6

7

does the spine have a subject?

does the spine have an object?

does the spine have any wh words?

the labels of any complementizer nonterminal

the labels of any wh nonterminals in the spine

the nonterminal labels SQ or SBARQ

the nonterminal label of the root of the spine

Table 4.1: The aspects of a Chinese clause (left) and of an English AEP (right) that are incorporated into

features. Additional features can easily be added in the future.

choice because it converges quickly, generally only requiring a few iterations over the training

examples [9],[12]. I specifically use the averaged perceptron algorithm as presented in [12]

to learn the weights.

4.1.3.2

Feature Design

Training and translation both use a feature function 4(x, y) that maps a Chinese clause

x and English AEP y into a vector of features. This section describes that function. A

strength of discriminative models is the ease with which qualitatively wide-ranging features

can be combined; theoretically, a feature could be a function of any aspect of a Chinese

clause x, any aspect of an English AEP y, or any sort of connection between the two. Table

4.1 enumerates the complete set of Chinese clause aspects and English AEP aspects used to

construct features in the current model. The mapping from aspect to feature is for the most

part direct. For example, one aspect of a Chinese clause is its main verb - for every Chinese

main verb encountered in the training data, I created an indicator feature ¢i(x) that is True

if a clause x has that main verb, or False otherwise. The Chinese features are based on the

German features used in [1], while the English features are identical.

It is worth noting that a relatively simple set of features stretches a far distance. For

example, the function words in a Chinese clause's spine include prepositions, verbal aspect

words (such as the perfective aspect Tor the continuous aspect V), locatives, conjunctions,

and more. Chinese conjunctions often connect directly to the conjunctions of an English

translation, while at the same time, Chinese verbal aspect words and locatives often influence

the English main verb, including its tense.

Also note that the feature vector created for each <Chinese clause, English AEP> pair is

enormous: for example, an indicator feature is created for every single word in the corpus that

ever appeared within a subject or object. The weight vector a effectively filters uninformative

features, keeping only those that have predictive power.

4.1.4

Translation

The translation phase is outlined in the right half of Figure 4.1, and works as follows: An

input Chinese sentence is first segmented, parsed, and split into clause subtrees. An AEP,

representing high-level English clausal structure, is predicted for each Chinese clause independently using the trained model. Clausal modifiers - subject, object, and adjuncts - are

translated separately, using the Moses phrase-based translator [21. The rationale is to obtain

improved translation quality through syntactic analysis at a high level, while still utilizing

the typically good content translation phrase-based systems have to offer. Additionally, by

applying simpler methods to reduced expressions, my approach becomes less vulnerable to

parsing errors: high-level verbal structure must be accurate, but the system isn't sensitive

to low-level parsing details such as the inner structure of the subject and object.

During AEP prediction, the model is decoded using beam search. An AEP is built one

decision at a time, in the order (dl,..., dN), and at any given point a beam of the top M

decisions is maintained. The ADVANCE function is used to increment the beam one step

further. Partial decision sequences are ranked using the SCORE metric.

4.1.4.1

Gluing Clauses into a Sentence

A significant challenge of this approach is: after each clause of a Chinese sentence has been

translated into a full English clauses, modifiers and all, how should the clauses be combined

together to form the full sentence? This is a substantial problem that was not focused on in

this thesis. Instead, I put in a placeholder implementation, ready to be replaced by a more

sophisticated method.

The implemenentation glues English clauses together depth-first. That is, for a Chinese

sentence, consider the depth-first order of its clauses - the first clause in the sequence is the

one at the root of the tree. The gluer simply concatenates, in depth-first order, each clause

translation. Such an approach is slightly better for Chinese than the left-to-right gluing use

in [1]. For example, a Chinese clause very commonly has a lower relative clause that attaches

to its object:

IP

NP

VP

subject

V

NP

verb

CP

NP

relative clause

object

Depth-first order reorders these clauses correctly, by translating the higher clause and

lower clause independently and concatenating them: [subject verb object [relative clause]].

Section 5.3.1 talks more about the cases it can't handle and suggests improvements.

4.2

Chinese Clause Splitting

In the training extraction and translation stages of my system, a clause "splitting" step is

performed that takes a parse tree of a sentence and identifies all the subtrees that correspond

to clauses. For example, the green circles in Figure 4.2 indicate the roots of the clause

subtrees for the English sentence The one that organized the meeting did encourage other

members to meet the new speaker, but everyone was busy. Labeling the clauses depth-first,

the 1st clause is the one did encourage other members, the second is that organized the

meeting, the third is to meet the new speaker, and the fourth is but everyone was busy. The

clauses attach as follows:

[1: the one [2: that organized the meeting] did encourage other members [3: to

meet the new speaker] [4: but everyone was busy]].

I developed an algorithm for splitting Chinese sentences into clauses: given a sentence parse

as input, the clause markers (green circles in the diagram) are returned as output. The

program was designed by looking at hundreds of randomly drawn English and Chinese parse

trees from the corpus, to figure out several heuristic rules that would identify the Chinese

clauses most of the time. While I did no formal evaluation of clause splitting performance

(because I have no evaluation data to begin with), from observation I can say that it was

infrequently the case that my program would assign bad clause markings to a good parse

tree. Note that it is not necessary to give good output for 100% of the data; the aim here

is to be correct the majority of the time, by capturing the common cases with a few simple

heuristics.

A clause splitter for English has already been developed by Cowan et al. [1]. I use it

untouched to split my English parse trees. A contribution of this thesis is a novel technique

for splitting Chinese clauses. While part of the design is Chinese-specific, I believe the

approach taken is an effective way to split clauses of any language, including English.

My system splits clauses through the use of two stages of rules. First, the main verbs

in the parse are identified. Clause markers are assigned to each of these verbs. Second, the

clause markers propagate up the tree, the aim being for a given marker to stop once it has

reached its clause root. Figure 4.2 diagrams each of these stages. The red dashed rectangles

around the main verbs in the sentence are the result of stage 1. The thick red branches in

the parse tree illustrate the journey that these clause markers undergo, before reaching their

final destination at the root of their respective clauses. Stage 2 rules control this propagation

F-11nii~

rl--

UmA-~ Driin

Note

3

Lv]

encourage

CC

but

NP

)JP

other

members

NP

usy

the

meeting

Step 1: Identify clausal verbs:

organized, encourage, meet, was

new

speaker

Step 2: Propagate upwards to clause root:

s, S, SG, CCP

Note 1: The verb 'did' functions here as a modal verb, not a clausal verb.

Verbs such as these make clausal verb identification (Step 1) non-trivial.

clause marker will never pass through a noun phrase, because

A

2:

Note

noun phrases never constitute clauses. Propagation therefore ends.

Several other rules of this type come into play to control propagation.

Note 3: For each of the two branches indicated, propagation is prohibited:

a child cannot pass upwards to a parent that has already had a clause

marker pass through it.

Figure 4.2: Overview of the clause splitting program. While the program was designed for and tailored

to Chinese, an English example was chosen, for clarity, to illustrate. A first set of rules identify the main

(clausal) verbs in the sentence, as indicated by the red dotted rectangles. Clause markers are assigned to

each of these verbs, which then propagate up the tree, eventually stopping at the clause root. Notes 1-3

describe a few of the interesting parts of this program.

and eventual settling. The notes in the figure point to a few of the interesting parts of the

program, described in detail in the remaining sections.

4.2.1

Step 1: Main Verb Identification

This section reviews the first step towards clause splitting: clausal verb identification. The

goal is to identify each of the clausal verbs in a sentence. Each clausal verb corresponds

to its own clause subtree - stage 2 identifies that subtree. In figure 4.2, the clausal verbs

are organized, encourage, meet, and was. Stage 1 is non-trivial because of the existence of

non-clausal verbs, such as modal verbs in English and Chinese (did in figure 4.2), Chinese

stative verbs, Chinese verbs that behave like adverbs, and more.

Figure 4.2 actually shows a slight simplification of how stage 1 works. The actual program does something equivalent and slightly more complicated: it identifies the clausal verb

phrases (VP nodes in the parse tree) that are above the clausal verbs. The reason, in short,

is that the rules are easier to express that way. For example, consider the English modal

verb did and the clausal verb encourage. The words themselves, inside the parse tree, look

the same - they have the same part-of-speech tag VV. The VP nodes directly above them,

however, look quite different.

VP

VV

VP

did

VV

NP

SG

encourage

The VP above did has another VP - the VP above encourage - as one of its immediate

children. In fact, this is the general pattern used to discern modal verbs from other verbs: a

modal verb has another VP as one of its children. This is a standard for modal verbs used

in both the English and Chinese Penn Treebanks. The VP above encourage, on the other

hand, does not fit this pattern. In this example, the VP above encourage would be marked

as a clausal-VP, and the VP above did would not.

Algorithm 1 Clausal Verb Identification

input: the nodes of a Chinese parse tree

procedure isClausalVerbPhrase? (node)

if node is not a VP then

return false /* only targeting verb phrases */

if one of node's children is a VP then

return false /* VP corresponds to a modal verb */

for each word spanned by node, that is not spanned by a descendant VP of node do

if word is a verb then

if word is a VV, VC or VE then

return true /* any non-stative verb treated as a clausal verb */

else

/* need to handle stative verbs (VAs) specially */

if word has ancestry CP--IP--I1 or more VPs]-VA then

/* short CP pattern */

return false /* word acts more like an adjective, less like a clausal verb */

else if word has ancestry DVP--VP--VA then

/* adverbial pattern */

return false /* word acts as an adverb, not a clausal verb */

else

return true /* stative verbs are considered clausal by default */

return false /* default: didn't find any reason to consider node a clause root. */

The problem of stage 1 is therefore restated as follows: find, for each clausal verb, the

VP ancestor that it corresponds to. Such VPs are here named clausal VPs. Sensitivity to

modal verbs are one detail of the program. The full procedure used in this thesis is outlined

in algorithm 1.

The algorithm begins with two simple tests. First of all, to be a clausal VP, a parse tree

node must of course be a VP. Secondly, the node must not have a VP as one of its children

- such a node corresponds to a modal verb. The meat of the algorithm considers each word

that is both spanned2 by this node and additionally not spanned by a VP descendant of

this node. The reason for disregarding the words spanned by a descendant VP is that if any

of those words are clausal verbs, then the descendant VP is what should be recognized as

a clausal VP, rather than the current node under consideration that is higher up the tree.

2

The words in the span of a node are simply those that are covered by its subtree.

Considering each word individually, the algorithm looks for a reason to mark the current

node as a clausal VP - it looks for words that behave syntactically like clausal verbs. First

of all, if a given word is the copula verb (VC) to-be, the existential verb (VE) to-have, or a

basic verb (VV), the algorithm ends and the current node is marked as a clausal VP. The

remaining class of verbs, stative verbs (VA), are treated more specially. Chinese stative verbs,

also called static verbs, are like adjectives but can also serve as the main verb in a sentence.