Using Graphone Models in Automatic Speech Recognition

by

Stanley Xinlei Wang

S.B. Massachusetts Institute of Technology (2008)

Submitted to the Department of Electrical Engineering and Computer

Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2009

© Massachusetts Institute of Technology 2009. All rights reserved.

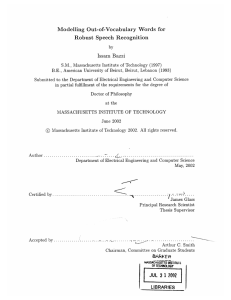

Author ..

...........

.............

Department of Electrical Engineering and Computer Science

A

May 22, 2009

Certified by

(

James R. Glass

Principal Research Scientist

, Thesis Supervisor

A

Certified by..............

(I. Lee Hetherington

~-

~~ ---WA~.

Research Scientist

S- Co-Supervisor

Accepted by...

At

C...........

Arthur C. Smith

Chairman, Department Committee on Graduate Theses

ARCHIVES

Using Graphone Models in Automatic Speech Recognition

by

Stanley Xinlei Wang

Submitted to the Department of Electrical Engineering and Computer Science

on May 22, 2009, in partial fulfillment of the

requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

Abstract

This research explores applications of joint letter-phoneme subwords, known as graphones, in several domains to enable detection and recognition of previously unknown

words. For these experiments, graphone models are integrated into the SUMMIT

speech recognition framework. First, graphones are applied to automatically generate

pronunciations of restaurant names for a speech recognizer. Word recognition evaluations show that graphones are effective for generating pronunciations for these words.

Next, a graphone hybrid recognizer is built and tested for searching song lyrics by

voice, as well as transcribing spoken lectures in an open-vocabulary scenario. These

experiments demonstrate significant improvement over traditional word-only speech

recognizers. Modifications to the flat hybrid model such as reducing the graphone set

size are also considered. Finally, a hierarchical hybrid model is built and compared

with the flat hybrid model on the lecture transcription task.

Thesis Supervisor: James R. Glass

Title: Principal Research Scientist

Thesis Co-Supervisor: I. Lee Hetherington

Title: Research Scientist

Acknowledgments

I am grateful to my advisor Jim Glass for his guidance and support. His patience

and encouragement have helped me significantly in my past two years at MIT. I would

also like to thank my co-advisor, Lee Hetherington, for his guidance and for helping

me with building speech recognition components using the MIT FST Toolkit. I am

especially appreciative of being able to come by Jim and Lee's offices almost anytime

when I have questions, and get them answered very quickly.

Many thanks to Ghinwa Choueiter for teaching me when I first joined the group,

and for helping me with the word recognition and lyrics experiments. I would like to

thank Paul Hsu for discussions on NLP topics and effective algorithm implementations, Hung-An Chang for help with the lecture transcription experiment, Stephanie

Seneff for discussions on subword modeling, and Scott Cyphers for assistance on getting my scripts running. Furthermore, I would like to thank Mark Adler and Jamey

Hicks at Nokia Research Cambridge for giving me the opportunity to perform speech

research during a summer internship, as well as Imre Kiss, Joseph Polifroni, Jenine

Turner, and Tao Wu for their help during my internship.

I am thankful to my friends at MIT for keeping me motivated. Finally, I would

like to thank my parents for their continued encouragement and support.

This research was sponsored by Nokia as part of a joint MIT-Nokia research

collaboration.

Contents

1 Introduction
    1.1 Motivation
    1.2 Summary of Results
    1.3 Outline

2 Background
    2.1 Working with OOVs
        2.1.1 Vocabulary Selection and Augmentation
        2.1.2 Confidence Scoring
        2.1.3 Filler Models
        2.1.4 Multi-stage Combined Strategies
    2.2 Graphone Models
        2.2.1 Graphone Letter-to-Sound Conversion
        2.2.2 Graphone Flat Hybrid Recognition
    2.3 SUMMIT Speech Recognition Framework

3 Restaurant and Street Name Recognition
    3.1 Restaurant Data Set
    3.2 Methodology
    3.3 Results and Discussion
        3.3.1 Graphone Parameter Selection
        3.3.2 Adding Alternative Pronunciations
        3.3.3 Generalizability of Graphone Models
    3.4 Summary

4 Lyrics Hybrid Recognition
    4.1 Lyrics Data Set
    4.2 Methodology
    4.3 Results and Discussion
    4.4 Controlling the Number of Graphones
    4.5 Summary

5 Spoken Lecture Transcription
    5.1 Lecture Data Set
    5.2 Methodology
    5.3 Results and Discussion
    5.4 Controlling the Number of Graphones
    5.5 Hybrid Recognition with Full Vocabulary
    5.6 Hierarchical Alternative for Hybrid Model
    5.7 Summary

6 Summary and Future Directions
    6.1 Future Directions

A Data Tables: Restaurant and Street Name Recognition

B Data Tables: Lyrics Hybrid Recognition

C Data Tables: Spoken Lecture Transcription

List of Figures

1-1 Vocabulary sizes of various corpora as more training data is added (from [25]).

1-2 New-word rate as the vocabulary size is increased for various corpora (from [25]).

2-1 Generative probabilistic process for graphones as described by Deligne et al. According to an independent and identically-distributed (iid) distribution, a memoryless source emits units g_k that correspond to A_k and B_k, each containing a symbol sequence of variable length.

2-2 Directed acyclic graph representing possible graphone segmentations of the word-pronunciation pair (far, f aa r). Black solid arrows represent graphones of up to one letter/phoneme. Green dashed arrows (only a subset shown) represent graphones with two letters or two phonemes. Traversing the graph from upper-left corner to lower-right corner generates a possible graphone segmentation of this word.

3-1 Word recognition performance using automatically generated pronunciations produced by a graphone L2S converter trained with various L and N values.

3-2 Word recognition performance using top 2 pronunciations generated using various L and N values.

3-3 Word recognition performance with various numbers of pronunciations generated using the graphone (L = 1, N = 6) and spellneme L2S converters.

3-4 Word recognition performance for seen words when the top 1 pronunciation is used.

3-5 Word recognition performance for unseen words when the top 1 pronunciation is used.

3-6 Word recognition performance for seen words when the top 2 pronunciations are used.

3-7 Word recognition performance for unseen words when the top 2 pronunciations are used.

4-1 Recognition word error rate (WER) for recognizers with 50-99% training vocabulary coverage. Word & OOV is replacing each output graphone sequence by an OOV tag. Word & Graphone is concatenating consecutive graphones in the output to spell OOV words.

4-2 Recognition sentence error rate (SER) for recognizers with 50-99% training vocabulary coverage. Word & OOV is replacing each output graphone sequence by an OOV tag. Word & Graphone is concatenating consecutive graphones in the output to spell OOV words.

4-3 Recognition letter error rate (LER) for recognizers with 50-99% training vocabulary coverage. Word & Graphone is concatenating consecutive graphones in the output to spell OOV words.

5-1 Recognition word error rate (WER) for 88-99% training vocabulary coverages. Word & OOV is replacing each output graphone sequence by an OOV tag. Word & Graphone is concatenating consecutive output graphones to spell OOV words.

5-2 Recognition sentence error rate (SER) for 88-99% training vocabulary coverages. Word & OOV is replacing each output graphone sequence by an OOV tag. Word & Graphone is concatenating consecutive output graphones to spell OOV words.

5-3 Recognition letter error rate (LER) for 88-99% training vocabulary coverages. Word & Graphone is concatenating output graphone sequences to spell OOV words.

5-4 Test set perplexity of language models trained on a replicated training corpus.

5-5 Hierarchical OOV model framework (from [2]). W_OOV represents the OOV token.

List of Tables

2.1 Examples of graphone segmentations of English words. These maximum-likelihood segmentations are provided by a 5-gram model that allows a maximum of 4 letters/phonemes per graphone.

3.1 Examples of the L2S converter's top 3 hypotheses using the graphone language model.

4.1 Vocabulary size of hybrid recognizers, and their vocabulary coverage levels on the training and test sets.

4.2 Example recognizer output at the 92% vocabulary coverage level. Square brackets denote concatenated graphones (only letter part shown). Vertical bars are graphone boundaries.

4.3 Number of graphones for L = 4 unigram model after trimming using the specified Kneser-Ney discount value.

4.4 Comparison of recognition performance for the full graphone hybrid model and trimmed graphone models (4k and 2k graphone set size). We also show results for the word-only recognizer. All of these recognizers have 80% vocabulary coverage.

5.1 Vocabulary sizes for the hybrid recognizer, and corresponding vocabulary coverage levels on the training and test sets.

5.2 Example recognizer output for the hybrid and word-only recognizers at 96% training corpus coverage, along with reference transcriptions. Square brackets indicate concatenation of graphones (only letter part shown). Vertical bars denote graphone boundaries.

5.3 Examples of recognition output for 100% coverage word-only recognizer and a 98% coverage hybrid recognizer, along with the corresponding reference transcriptions. Square brackets denote concatenated graphones. Vertical bars show graphone boundaries. Note that this table differs from Table 5.2 in that the hybrid and word-only recognizers have different coverage levels.

5.4 Comparison of recognition performance for the full graphone hybrid model and trimmed graphone models (4k and 2k graphone set size). Results are also shown for the word-only recognizer. All of these recognizers have 96% vocabulary coverage on the training corpus.

5.5 Performance of hybrid recognizers with full vocabulary compared to the full-coverage baseline word-only recognizers. Word-only 1x and 10x are built with one and ten copies of the training corpus, respectively. The OOV column is for replacing graphone sequences by OOV tags. The Gr column is for concatenating graphones together to form words.

5.6 Comparison of hybrid approaches for the lecture transcription task at 98% vocabulary coverage.

5.7 Examples of recognition output from hierarchical, flat, and word-only models. All recognizers have a 98% training corpus coverage. Square brackets denote concatenated graphones (only letter parts shown). Vertical bars show boundaries between graphones.

A.1 Word recognition performance using automatically-generated pronunciations for restaurant and street names. Graphone L2S converters with various L and N values are used to generate pronunciations. Results are shown in WER (%). This data is used to generate Figures 3-1, 3-2, 3-4, 3-5, 3-6, and 3-7.

A.2 Word recognition performance with various numbers of pronunciations. The graphone converter is trained with L = 1 and N = 6. The spellneme data is from [13]. Results are shown in WER (%). This data corresponds to Figure 3-3.

B.1 Performance of the flat hybrid recognizer on music lyrics. C_train and C_test are vocabulary coverages (%) on the training and test sets, respectively. |V| is the vocabulary size. Word & OOV is replacing each graphone sequence in the recognizer's output by an OOV tag. Word & Graphone is concatenating graphones to spell OOV words. All error rates are in percentages. This data corresponds to Figures 4-1, 4-2, and 4-3.

C.1 Performance of the flat hybrid recognizer on transcribing spoken lectures. C_train and C_test are vocabulary coverages (%) on training and test sets, respectively. |V| is the vocabulary size. Word & OOV is replacing each graphone sequence in the recognizer's output by an OOV tag. Word & Graphone is concatenating graphones to spell OOV words. All error rates are in percentages. This data corresponds to Figures 5-1, 5-2, and 5-3.

Chapter 1

Introduction

1.1 Motivation

Current speech recognition platforms are limited by their knowledge of words and how

they are pronounced. Almost all speech recognizers have a pronunciation dictionary

that has a finite vocabulary. If the recognizer encounters a word that is not in the

speech recognizer's vocabulary, then the recognizer cannot produce a transcription of

the word. This limitation is detrimental in many speech recognition applications. For

example, if the user wants to find the name of an obscure restaurant while driving

home from work, the user could use a spoken dialogue interface in the car, but the

dialogue interface may have no idea how the restaurant name should be pronounced,

resulting in the inability to understand the user's request and the failure to retrieve

information about the restaurant. Another example is transcribing video to enable

conventional text-based search. Uncommon words in these videos are often not in

the speech recognizer's vocabulary. In addition, because a recognition system needs

to find boundaries between words in the input audio stream, a misrecognized word

can easily cause the surrounding words, or even the entire sentence, to be completely

misrecognized. This often results in user frustration and reduced user confidence

in speech dialogue systems in general. Therefore, in order to build speech dialogue

systems that are applicable in practical user scenarios, we must develop techniques

to mitigate the effects of out-of-vocabulary (OOV) words.

There are several methods to combat the OOV problem. One possibility is to

dynamically generate pronunciations of OOV words prior to recognition. For a search-by-voice application, pronunciations can be automatically generated for new words

as they are imported into a database. These newly generated pronunciations can

then be added to the speech recognizer's vocabulary such that they are no longer

out-of-vocabulary.

However, we may not be able to perform all large vocabulary

search-by-voice tasks by increasing the vocabulary. If we increase the vocabulary by

large factors, we may be hindered by sparsity in the available language model training

data, which could lead to more confusion and degraded recognition performance.

Another argument concerns the usability of these search-by-voice interfaces. When

a user makes a voice query, if there are truly no matching results, it's better to be

able to tell the user that the query produces no results, rather than automatically

constraining the audio query to search terms that do produce results, but are not

even close to what the user intended. In the latter case, the user may be convinced

that the lack of results is because of misrecognition.

Simply increasing the vocabulary of a recognizer also cannot solve the OOV problem in real-time transcription tasks, such as converting streaming broadcast news to

text. In this case, we have no way of knowing what the speaker will say, so we have

to be able to automatically hypothesize spellings of new words during recognition.

Traditional speech recognizers cannot do this because their recognition frameworks

are constrained by their known vocabulary, and are only able to output words in the

known vocabulary. One may wonder if we could just use a large lexicon as the speech

recognizer's vocabulary, and not worry about recognizing the rare esoteric terms in

speech. However, in most applications of real-time audio transcription, this approach

has a crucial weakness: OOV words are likely to be content words that are highly

important for the topic discussed in the audio. In a broadcast news scenario, since

newly-coined words frequently appear in headlines, a recognizer with finite vocabulary

is likely to miss key words in these news reports.

[Figure 1-1: Vocabulary sizes of various corpora as more training data is added (from [25]). Horizontal axis: Number of Training Words.]

Vocabulary size growth in training corpora has been characterized in the past

[25]. Figure 1-1 shows the growth of vocabulary size as a function of the number of words in

several training corpora. These measurements are calculated by first randomizing

each corpus and then computing the vocabulary size while scanning the corpus from

beginning to end. This process is repeated several times, and the results are averaged

to yield the final measurements. ATIS, F-ATIS, and VOYAGER contain data from users talking

with a spoken language system. WSJ, NYT, and BREF are from newspaper text.

CITRON and SWITCHBOARD are from human-to-human speech. In all of these

corpora, we can see that even at very large training corpus sizes, the vocabulary size

continues to increase rapidly without leveling off. This demonstrates that new words

continue to appear even as the training data gets very large. The same study also

examines the rate at which new words appear for the same set of corpora, as shown

in Figure 1-2. This data shows that as we increase vocabulary coverage, we still

get a substantial number of new words even at very large vocabulary sizes.
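The measurement procedure described above (shuffle the corpus, scan it while tracking the number of distinct words, repeat, and average) can be sketched as follows. This is a minimal illustration and not the code used in [25]: the function name `vocabulary_growth`, the token-level shuffling, the number of trials, and the checkpoint scheme are all assumptions made for the example.

```python
import random
from typing import List, Sequence


def vocabulary_growth(tokens: Sequence[str], checkpoints: Sequence[int],
                      num_trials: int = 5, seed: int = 0) -> List[float]:
    """Average vocabulary size after reading the first n tokens.

    `checkpoints` is assumed to be sorted in increasing order, with every
    value between 1 and len(tokens). Shuffling at the token level is an
    assumption; the source only says the corpus is randomized.
    """
    rng = random.Random(seed)
    totals = [0.0] * len(checkpoints)
    for _ in range(num_trials):
        shuffled = list(tokens)
        rng.shuffle(shuffled)
        seen = set()
        next_checkpoint = 0
        for position, word in enumerate(shuffled, start=1):
            seen.add(word)
            # Record the vocabulary size each time a checkpoint is reached.
            while (next_checkpoint < len(checkpoints)
                   and checkpoints[next_checkpoint] == position):
                totals[next_checkpoint] += len(seen)
                next_checkpoint += 1
    return [total / num_trials for total in totals]


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the dog sat on the log".split()
    print(vocabulary_growth(corpus, checkpoints=[1, 5, 10, len(corpus)]))
```

Plotting the returned averages against the checkpoints on log-log axes would give a curve of the kind shown in Figure 1-1 for a single corpus.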

[Figure 1-2: New-word rate as the vocabulary size is increased for various corpora (from [25]). Horizontal axis: Vocabulary Size.]

One method for mitigating the effect of OOVs is to use sub-lexical units to model

OOV words. These units represent pieces of words, rather than whole words. One

of the most successful types of these units is the graphone, which is a joint

sequence of letters and phonemes [6]. These units are automatically learned from

a pronunciation dictionary, so they have potential for applications in any language

as long as the written form and pronunciations can be represented as two streams

of symbols. These units are useful for both automatically generating the pronunciation

of words in a dictionary as well as constructing a recognizer that can automatically

hypothesize spellings of OOV words. Because of the urgent need for effective OOV

models and the success of graphone models so far, we focus the work in this thesis on exploring applications of graphone models in the existing speech recognition

framework at MIT.

1.2 Summary of Results

This section contains an overview of the experiments presented in this thesis, along

with important results.

* Generating pronunciations for restaurant and street names. A graphone-based

letter-to-sound converter is built and used to automatically generate pronunciations for restaurant and street names.

Word recognition is then used to

evaluate the quality of these pronunciations. These experiments show that using a 6-gram, with graphone sizes of 0-1 letters/phonemes, produces the best

results. The generalizability of these results is demonstrated by dividing

the test set into seen and unseen words. We also explore using multiple L2S

pronunciations for each word, and conclude that using 10 pronunciations per

word produces the best performance without increasing confusion within the

recognizer. Graphone results are also shown to compare favorably with those

of linguistically-motivated subword models.

* Music lyrics hybrid recognition. A flat hybrid recognizer is built using graphones, and tested on transcribing spoken lyrics in a closed-vocabulary scenario.

The hybrid recognizer significantly outperforms the word-only recognizer at all

vocabulary coverage levels. We find that a hybrid recognizer with >92% vocabulary coverage on the training set actually yields lower recognition error rates

than a word-only recognizer with 100% coverage on the training set. The results

also demonstrate that graphones are useful for preventing misrecognition of the

neighbors of OOV words, as well as hypothesizing the spelling of OOV words.

In addition, we experiment with reducing the graphone set significantly, and

show that the hybrid recognition performance degrades slightly, but is still significantly better than the word-only recognizer at the same vocabulary coverage

level.

* Transcribing spoken lectures. Spoken lectures are transcribed using a flat hybrid

recognizer at several vocabulary levels. This task is open vocabulary because we

cannot know all the words the speaker will say during the lecture. The hybrid

recognizer's performance is significantly better than the word-only recognizer

at 88-96% vocabulary coverage on the training set, and slightly better than

the word-only recognizer at 98-99% coverage levels. We show that the hybrid

model's performance remains about the same when the graphone set size is

reduced from 19k to 4k. A hybrid recognizer with 100% vocabulary coverage

is also built and shown to perform about the same as a word-only recognizer

at 100%, even though the graphones can add more confusion to the recognizer.

Finally a hierarchical hybrid model is built and compared with the flat hybrid

model. Results show that at 98% training set coverage, these two hybrid models

perform about the same, and both outperform the word-only recognizer.

1.3 Outline

In the rest of this thesis, we first give an overview of previous works that examine the

OOV problem. We then discuss the background technologies used in this thesis, namely

graphone models and the SUMMIT speech recognition framework. In subsequent

chapters, we explore applications of graphone models for speech recognition in areas

described in Section 1.2. Finally, we discuss future work that builds upon the research

presented in this thesis.

Chapter 2

Background

Since OOV words are inevitable in large vocabulary speech recognition tasks, we must

find ways to cope with them in order to build practical speech applications. In this

chapter, we first review past techniques for mitigating the effect of out-of-vocabulary

(OOV) words on speech recognition performance. Next, we introduce graphone models for both automatic pronunciation generation and hybrid speech recognition. Finally, we give an overview of the SUMMIT speech recognition framework used in this

thesis.

2.1 Working with OOVs

Several techniques have been developed to mitigate the effect of OOV words on speech

recognition performance. The most straightforward approach is to increase the vocabulary of the recognizer, but this cannot completely eliminate OOVs in open-vocabulary tasks such as audio transcription. Other approaches focus on adding probabilistic machinery to the speech recognizer to detect OOVs in utterances, and possibly

even hypothesize pronunciations and spellings associated with the OOV word. These

techniques include confidence scoring, filler models, or a combination of both. Multistage recognition can also be used to improve large vocabulary recognition by using

different recognition strategies in each stage.

2.1.1 Vocabulary Selection and Augmentation

One way to combat OOVs in speech recognition is to select the best set of words

to be in-vocabulary for the target domain. If the system has a pretty good idea of

what the user might say, the system can be trained with a set of words that give a

small OOV rate. One example is a system that provides weather information, which

can be trained with a set of geography-related words based on frequency of usage

[43]. Martins et al. combine morphological and syntactic information to optimize

the trade-off between the predicted vocabulary coverage and the number of added

words [30]. Morphological analysis is used to label words with part-of-speech tags,

which are then used to optimize vocabulary selection. Another recent study explores

using the internet as a source for determining which words to include in the speech

recognizer's vocabulary [32]. This technique uses a two-pass strategy for vocabulary

selection, where the first pass produces search engine requests, and the second pass

augments the speech recognizer's lexicon with words from returned online documents.
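As a simple illustration of frequency-based vocabulary selection, the sketch below picks the most frequent words of a training corpus until a target coverage is reached, and reports the resulting coverage (one minus the OOV rate on that corpus). It is a generic example rather than the method of [43], [30], or [32]; the function name `select_vocabulary` and the stopping rule are assumptions made for the example.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def select_vocabulary(tokens: Iterable[str],
                      target_coverage: float) -> Tuple[List[str], float]:
    """Pick the most frequent words until the target token coverage is met.

    Returns the selected vocabulary and the fraction of training tokens it
    covers. The OOV rate on the training corpus is 1 minus that fraction.
    """
    counts = Counter(tokens)
    total = sum(counts.values())
    vocabulary: List[str] = []
    covered = 0
    for word, count in counts.most_common():
        if total and covered / total >= target_coverage:
            break
        vocabulary.append(word)
        covered += count
    coverage = covered / total if total else 0.0
    return vocabulary, coverage


if __name__ == "__main__":
    corpus = "a a a a b b b c c d".split()
    vocab, coverage = select_vocabulary(corpus, target_coverage=0.7)
    print(vocab, coverage)
```

Coverage measured this way on the training corpus will generally be higher than coverage on unseen test data, which is why both values are worth tracking.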

We can also increase the vocabulary by automatically generating pronunciations

for unknown words. This can be performed by encoding linguistic knowledge, or automatically learning letter-sound associations. The older techniques include purely

rule-based approaches that use context-dependent replacement rules [18]. Subsequent

techniques have been more data-driven, such as using finite-state transducers

[38], or decision trees to capture the mapping between graphemes and phonemes

[15]. Another technique uses hidden Markov models (HMMs), which formulate a generative model for finding the most likely phoneme sequence for generating the observed

grapheme sequence [37]. There are discriminative techniques as well, such as perceptron HMM trained in combination with the margin infused relaxed algorithm (MIRA)

[27]. Finally, there has also been a series of successful techniques based on sub-lexical

models, which are the most relevant to this thesis.

Probabilistic sub-lexical models capture probabilities over chunks of letters commonly referred to as subwords, or sub-lexical units. These units are often correlated

to syllables that commonly occur in a language. Some of these models automatically

learn the sub-lexical units, while others have a predefined set of them. However, they

all use a probabilistic framework to capture the dependencies between the subwords

that make up words in the training set. The advantage of probabilistic approaches is

that these methods can generally handle the problem of aligning the grapheme and

phoneme sequence much better than many non-probabilistic approaches.

One technique is to use context-free grammar (CFG) parse trees with a superimposed N-gram model to parse words into lexical subwords [31, 34, 35]. First, the

pronunciations of words in the lexicon are converted to sequences of subword pronunciations through simple rewrite rules. Next, a rule-based CFG is applied to convert

the words in the lexicon to subwords, using the subword pronunciation sequences

as a constraint. This process converts the lexicon into lexical subword units containing both letter and sound information, which are also called spellnemes. Failing

parses are either manually corrected, or made possible by the addition of new rules.

This correction process is repeated iteratively until the entire lexicon is successfully

converted into subword units. Finally, N-gram probabilities are learned from lexical

subword sequences such that this statistical model can be used to segment unknown

words into lexical subwords.

Another method is maximum-entropy N-gram modeling of sub-lexical units, in

the form of either a conditional model, or a joint model [11]. The conditional model

captures probabilities of phonemes conditioned on previous phonemes and letters, over

a sliding window. Probability distributions are estimated using maximum entropy

with a Gaussian prior. For the joint model, a maximum entropy N-gram model is

calculated on training data of words segmented into chunks consisting of either a

single letter, a single phoneme, or a single letter-phoneme pair.

Joint grapheme-and-phoneme sequences have been used to model letter-to-sound relationships. These models, known as graphone models, are automatically

learned from a pronunciation dictionary through EM training with language modeling.

They are described in detail in Section 2.2.1.

It is still an open question whether having a huge vocabulary recognizer is better or

worse than an open-vocabulary recognizer that can recognize arbitrary words. One

recent study focused on Italian, and shows that augmenting a speech recognizer's

lexicon with automatically-generated pronunciations of OOV words performs slightly

better than OOV modeling in an open-vocabulary recognizer [20]. However, in many

applications, such as transcribing audio files, there is no way to eliminate all OOV

words because we do not know what the speaker will say. In these cases, we can use

methods to detect and hypothesize spellings of OOV words.

2.1.2 Confidence Scoring

Confidence scoring allows us to get a sense of how likely a hypothesized word or word

sequence is an OOV word. Several criteria can be used to generate the confidence score

for a region in the recognition hypothesis. They include word posterior probability,

acoustic stability, and hypothesis density [41, 28]. Word posterior probability can

be computed on the output lattice or the output N-best list of a speech recognizer.

Word lattice posterior probabilities are probabilities associated with edges in the

word lattice, and can be computed by the well-known forward-backward algorithm.

Word N-best list posteriors are different from lattice posteriors in that the N-best

list does not have a time associated with each word in the hypothesis. This could

be an advantage because the probabilities for the same speech recognizer output are

essentially collapsed together. Acoustic stability is a measure of how consistently a given

word occurs in the same position across the hypotheses in the N-best list. Hypothesis density

is a measure of how many hypotheses have similar likelihoods at a given point in time.

The higher the hypothesis density, the more likely the error. A comparison of these

measures shows that using word posteriors on word lattices yields the best results [41].

Multiple confidence measures can also be combined to construct confidence vectors,

which are then passed through a linear classifier to determine if the corresponding

word is OOV [24].
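The two N-best measures described above can be illustrated with a minimal Python sketch. It assumes the N-best list is given as (word list, log score) pairs, normalizes the scores with a softmax, and compares words by raw position index rather than by a proper alignment. The function names are hypothetical and are not taken from the cited studies.

```python
import math
from collections import defaultdict


def nbest_word_posteriors(nbest):
    """Return word -> posterior mass, given a list of (words, log_score) pairs."""
    # Turn the hypothesis log scores into normalized probabilities.
    max_log = max(score for _, score in nbest)
    weights = [math.exp(score - max_log) for _, score in nbest]
    total = sum(weights)
    posteriors = defaultdict(float)
    for (words, _), weight in zip(nbest, weights):
        # No time information is used: a word collects the mass of every
        # hypothesis that contains it, wherever it occurs.
        for word in set(words):
            posteriors[word] += weight / total
    return dict(posteriors)


def acoustic_stability(nbest):
    """Fraction of hypotheses that agree with the top hypothesis at each position."""
    top_words = nbest[0][0]
    stability = []
    for position, word in enumerate(top_words):
        matches = sum(
            1 for words, _ in nbest
            if position < len(words) and words[position] == word
        )
        stability.append(matches / len(nbest))
    return stability
```

A word with low posterior mass or low stability would be a candidate OOV region under this simplified view. A real system would threshold or classify these values rather than inspect them directly.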

More recent studies have explored comparing information from several different

sources to detect OOVs. Lin et al. jointly align word and phone lattices to find OOV

words [29]. Another study compares confidence measures from strongly-constrained

and weakly-constrained speech recognizers [10]. The strongly-constrained recognizer

is a regular speech recognizer, while the weakly-constrained data comes from a bigram

phoneme recognizer and a neural network phone posterior estimator. Word-to-phone

transductions have also been combined with phone lattices to find OOVs [42]. In

addition to comparing the outputs of weakly and fully constrained phoneme

recognizers, this study uses a transducer to decode a frame-based recognizer's top

phoneme output into words, and then compares this output with the word output of

a frame-based speech recognizer.

2.1.3

Filler Models

Filler models vary in degrees of sophistication, depending on the application. Some

filler models are based on phoneme sequences, and can hypothesize an OOV's pronunciation. Other models are based on joint letter-sound subwords, which not only can

hypothesize pronunciation, but also can hypothesize spelling of OOV words. Filler

models can be incorporated into a traditional speech recognition network through two

main approaches: hierarchical hybrid and flat hybrid. In the hierarchical approach,

an OOV tag is added to the speech recognizer's vocabulary. At a given point in

decoding an utterance, the recognizer has a choice of decoding the next portion of

the utterance as a word, or the OOV tag. This OOV tag is modeled by a separate

OOV model that has probabilistic information of phones, syllables, or both. In these

recognizers, an extra cost can be added for entering an OOV model because we would

prefer to recognize words if the words are in-vocabulary. In the flat-hybrid approach,

OOV words in the recognizer's training corpus are turned into subwords or phonemes,

which are treated just like regular words in the rest of the recognizer training process.

Bazzi and Glass use phones and syllables to model OOV words through a hierarchical approach [3, 4]. The phoneme-level OOV model is an N-gram model computed

on a training corpus of phoneme sequences. Later work in this area uses automatically-learned variable-length phonetic units for the OOV model [5]. In this study, mutual

information is used as a criterion for merging phonemes into bigger sequences. The

authors show that using variable-length units for the OOV model leads to improved

recognition performance over using only individual phonemes. They also show that if

individual phonemes were used, it's better to train the OOV model on phoneme sequences in a large-vocabulary dictionary rather than on phoneme sequences of words

in a recognizer's LM training corpus. This reduces both domain-dependent bias of the

OOV model, as well as bias toward phonemes of frequent words in the LM training

corpus.

Modeling OOV words with phonetic units can be effective for OOV detection,

but does not allow us to hypothesize the spelling of an OOV word. To do so, our

OOV model must incorporate spelling information into subword units. For example,

spellnemes can do this by encoding both phonetic and spelling information in each

spellneme [35]. In a previous study, Choueiter incorporates spellnemes into a recognizer in a flat-hybrid configuration to model OOV words [12], and demonstrates that

using spellnemes in flat hybrid recognition decreases word error rate and sentence

error rate for music lyrics spoken by a user with the intention to search for a song.

Some OOV words are spelled correctly through spellneme modelling.

Graphones have also been used in flat hybrid recognition [8, 19]. Graphones also

contain both spelling and pronunciation information, and are trained in an unsupervised fashion. The details of graphone flat hybrid recognition are described in Section

2.2.2.

2.1.4

Multi-stage Combined Strategies

Efforts have been made to combine confidence scoring and filler model approaches in a

multi-stage framework. In the first stage, an utterance is first recognized into subword

or phoneme lattices. In the second stage, these lattices are used to generate confidence

scores for words or time frames. One technique is to use a phoneme OOV model in the

first stage to detect OOV words, and then use confidence scores on remaining words

to detect words with confidence [23].

Since filler models can introduce confusion

with in-vocabulary words, it would be helpful to have good methods to prevent in-vocabulary words from being detected as OOV words. As an alternative to having a

cost for diving into the OOV model, confusion network and vector space model post

processing can be used to detect OOVs while accounting for confusion between in-

vocabulary terms and OOV words [33]. Multi-stage frameworks can also have more

than two stages. Chung et al. present a three-stage solution, where the first stage

generates phonetic networks, the second stage produces a word network, and the third

stage parses the word network using a natural language processor [14].

2.2

Graphone Models

In this section, we describe the training process for graphones, and their applications in speech recognition. We first discuss letter-to-sound (L2S) conversion because

graphones were originally developed for the L2S task. L2S conversion is also an important part of vocabulary augmentation. We then discuss how graphone models can be

incorporated directly into speech recognizers to construct hybrid speech recognition

systems.

2.2.1

Graphone Letter-to-Sound Conversion

Graphone models capture the relationship between a word and its pronunciation

by representing them as a sequence of graphone units. Each graphone unit has a

grapheme sequence part and a phoneme sequence part. Maximum-likelihood learning

on a pronunciation dictionary is used to automatically learn graphone units as well as

transitions between graphones. In various publications, graphones have been referred

to as joint-multigrams, graphonemes, and grapheme-to-phoneme correspondences.

The theoretical foundation for graphone models is presented by Deligne et al., who describe a statistical learning framework for aligning two sequences of symbols [16, 17]. The underlying assumption is that there is a memoryless source that emits the sequence of units $G = g_1, g_2, g_3, \ldots, g_K$, where each $g_k$ contains two parallel parts: $A_k$ and $B_k$ (see Figure 2-1). $A_k$ and $B_k$ are each variable-length symbol sequences themselves.

[Figure: a source emitting the units $g_1, g_2, g_3, \ldots, g_k$.]

Figure 2-1: Generative probabilistic process for graphones as described by Deligne et al. According to an independent and identically-distributed (iid) distribution, a memoryless source emits units $g_k$ that correspond to $A_k$ and $B_k$, each containing a symbol sequence of variable length.

The symbol sequences within the $A_k$'s can be concatenated to form a sequence R. We can do the same for the sequences within the $B_k$'s to form the sequence S. For the machine learning task, we observe the sequences R and S, and we want to estimate the underlying unit sequence G. In the L2S conversion context, R is the sequence of letters and S is the sequence of phonemes. The term graphone refers to $g_k$ in this formulation. Table 2.1 shows some examples of graphone sequences for English words. Building an L2S converter is a three-step process. The first step is to develop a graphone model that best represents the data. The second step is to segment the training data into graphones. The third step is to build an N-gram language model on the segmented training dictionary. This language model can then be used to perform L2S conversion on new words.

Word            Graphone Sequence
alphanumeric    (alph/ae l f) (anum/ax n uw m) (eric/eh r ax kd)
colonel         (colo/k er) (n/n) (el/ax l)
dictionary      (dic/d ih kd) (tion/sh ax n) (ary/eh r iy)
semiconductor   (semi/s eh m iy) (con/k ax n) (duct/d ah kd t) (or/er)
xylophone       (xylo/z ay l ax) (phon/f ow n) (e/)

Table 2.1: Examples of graphone segmentations of English words. These maximum-likelihood segmentations are provided by a 5-gram model that allows a maximum of 4 letters/phonemes per graphone.

For the first step, the graphone model is learned through maximum-likelihood training. Expectation maximization (EM) is performed on the training dictionary by running the forward-backward algorithm on directed acyclic graphs (DAGs) representing letter and phoneme sequences. Each DAG corresponds to one word-pronunciation pair. It can be visualized as a 2-dimensional grid: one dimension represents the traversal of letters in the word, and the other dimension represents the traversal of phonemes in the pronunciation (see Figure 2-2). Each arc in the DAG corresponds to a graphone, and is labeled with the graphone's probability. During training, we must specify the minimum and maximum number of letters and phones allowed for each graphone, which limits the length of arcs in the DAG. Any path through this DAG represents a possible graphone segmentation of this word-pronunciation pair. The forward algorithm runs from the upper-left corner toward the lower-right corner, while the backward algorithm runs the opposite way. These forward and backward scores are used to calculate the posterior for each arc. Posteriors corresponding to the same graphone are accumulated over the DAGs of all dictionary entries, and then normalized to generate the updated probability for that graphone. This process is repeated until the likelihood of the training data stops increasing.

[Figure: grid-shaped DAG for the pair (far, f aa r), with letters along one axis and phonemes along the other.]

Figure 2-2: Directed acyclic graph representing possible graphone segmentations of the word-pronunciation pair (far, f aa r). Black solid arrows represent graphones of up to one letter/phoneme. Green dashed arrows (only a subset shown) represent graphones with two letters or two phonemes. Traversing the graph from the upper-left corner to the lower-right corner generates a possible graphone segmentation of this word.

Mathematically, the EM training process can be formulated as follows. The likelihood of a dictionary, $A$, is the product of the likelihoods of the $W$ word-pronunciation pairs in the training dictionary. For a word-pronunciation pair, let the letter sequence be $r_1, r_2, \ldots, r_M$, and the phoneme sequence be $s_1, s_2, \ldots, s_N$. We also denote a stream of graphones as $g_1, g_2, \ldots, g_K$. The likelihood of this word-pronunciation pair is the sum of the likelihoods of all possible segmentations. The likelihood of a possible segmentation, $z$, is $p(z) = \prod_{k=1}^{K} p(g_k)$. The goal of EM training is to find a graphone model that maximizes the likelihood of the training dictionary, which is:

$$p(A) = \prod_{w=1}^{W} \Big( \sum_{z \in \{z\}_w} p(z) \Big) \tag{2.1}$$

where $\{z\}_w$ is the set of all segmentations of the $w$-th word in the dictionary.

The forward-backward algorithm is formulated as follows. Let $t_{m,n}$ represent the node at location $(m, n)$ in the word-pronunciation pair's DAG, where $t_{0,0}$ is the upper-left node in the DAG, and $t_{M,N}$ is the lower-right node (see Figure 2-2). Let $\alpha(t_{m,n})$ be the forward score and $\beta(t_{m,n})$ be the backward score corresponding to node $t_{m,n}$. The forward score for $t_{m,n}$ represents the likelihood of the letters and phonemes between $t_{0,0}$ and $t_{m,n}$. The backward score for $t_{m,n}$ represents the likelihood of the letters and phonemes between $t_{m,n}$ and $t_{M,N}$. For boundary conditions, we use $\alpha(t_{0,0}) = 1$ and $\beta(t_{M,N}) = 1$. Let $X_{m,n}$ denote an arc that leads into node $t_{m,n}$, and let $x_{m,n}$ denote the starting node of this arc. Let $Y_{m,n}$ denote an arc that leads out of node $t_{m,n}$, and let $y_{m,n}$ denote the ending node of this arc. In addition, $\{X_{m,n}\}$ denotes the set of all arcs that enter node $t_{m,n}$, and $\{Y_{m,n}\}$ is the set of all arcs that leave this node. As we move through the graph, the forward and backward scores can be computed using:

$$\alpha(t_{m,n}) = \sum_{\{X_{m,n}\}} \alpha(x_{m,n})\, p(X_{m,n}) \tag{2.2}$$

$$\beta(t_{m,n}) = \sum_{\{Y_{m,n}\}} \beta(y_{m,n})\, p(Y_{m,n}) \tag{2.3}$$

After the forward and backward scores are calculated, we can re-estimate the posterior probability for each graphone $g_q$ used in the word-pronunciation pair $w$:

$$pos_w(g_q) = \frac{\sum_{X_{m,n} \in \{gr(X_{m,n}) = g_q\}} \alpha(x_{m,n})\, p(X_{m,n})\, \beta(t_{m,n})}{\alpha(t_{M,N})} \tag{2.4}$$

where $gr(X_{m,n})$ is the graphone corresponding to the arc $X_{m,n}$.

The posterior probabilities for all graphones are accumulated through all word-pronunciation pairs in the training dictionary, and normalized such that graphone probabilities sum to one. In the following equation for updating the graphone probabilities, $Q$ denotes the graphone set size.

$$p(g_q) = \frac{\sum_{w=1}^{W} pos_w(g_q)}{\sum_{w=1}^{W} \sum_{q'=1}^{Q} pos_w(g_{q'})} \tag{2.5}$$

After updating probabilities for all graphones, we check if the training dictionary's

likelihood has stopped increasing, and repeat another EM iteration if needed. The

training dictionary's likelihood is calculated by taking the product of a(tM,N)'s for all

words. The number of graphones can be controlled in a number of ways, the simplest

being trimming with a probability threshold. Also, in a practical implementation,

the probabilities would be stored in the log domain to reduce the effect of machine

precision.
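The EM procedure of Equations 2.1 through 2.5 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: a graphone is represented as a (letter string, phoneme tuple) pair, one size limit `max_len` applies to both sides, the initial model is uniform, and raw probabilities are used instead of the log domain for readability. All function names are hypothetical.

```python
from collections import defaultdict


def arcs_into(m, n, letters, phones, max_len):
    """Yield (start_node, graphone) for every arc X_{m,n} entering node t_{m,n}."""
    for i in range(max(0, m - max_len), m + 1):
        for j in range(max(0, n - max_len), n + 1):
            if (i, j) != (m, n):  # a graphone cannot be empty on both sides
                yield (i, j), (letters[i:m], tuple(phones[j:n]))


def forward_backward(letters, phones, prob, max_len):
    """Return the forward (alpha) and backward (beta) scores of one DAG."""
    M, N = len(letters), len(phones)
    # Row-major order is a topological order of the grid-shaped DAG.
    nodes = [(m, n) for m in range(M + 1) for n in range(N + 1)]
    alpha, beta = defaultdict(float), defaultdict(float)
    alpha[(0, 0)] = 1.0
    beta[(M, N)] = 1.0
    for node in nodes[1:]:  # Equation 2.2
        alpha[node] = sum(alpha[start] * prob.get(g, 0.0)
                          for start, g in arcs_into(*node, letters, phones, max_len))
    for node in reversed(nodes):  # Equation 2.3, pushed backwards along each arc
        for start, g in arcs_into(*node, letters, phones, max_len):
            beta[start] += prob.get(g, 0.0) * beta[node]
    return alpha, beta


def initial_model(dictionary, max_len):
    """Uniform probabilities over every graphone allowed by the size limit."""
    graphones = set()
    for letters, phones in dictionary:
        for m in range(len(letters) + 1):
            for n in range(len(phones) + 1):
                for _, g in arcs_into(m, n, letters, phones, max_len):
                    graphones.add(g)
    return {g: 1.0 / len(graphones) for g in graphones}


def em_iteration(dictionary, prob, max_len):
    """One EM pass. Returns (updated model, dictionary likelihood under prob)."""
    evidence = defaultdict(float)
    likelihood = 1.0
    for letters, phones in dictionary:
        alpha, beta = forward_backward(letters, phones, prob, max_len)
        total = alpha[(len(letters), len(phones))]  # alpha(t_{M,N})
        likelihood *= total                         # Equation 2.1
        if total == 0.0:
            continue  # this entry cannot be segmented with the current model
        for m in range(len(letters) + 1):
            for n in range(len(phones) + 1):
                for start, g in arcs_into(m, n, letters, phones, max_len):
                    # Equation 2.4: posterior of the arc, summed per graphone.
                    evidence[g] += alpha[start] * prob.get(g, 0.0) * beta[(m, n)] / total
    norm = sum(evidence.values())
    if norm == 0.0:
        raise ValueError("no dictionary entry can be segmented")
    return {g: e / norm for g, e in evidence.items()}, likelihood  # Equation 2.5
```

Calling `em_iteration` repeatedly on the output of `initial_model`, and stopping once the returned likelihood no longer increases, mirrors the convergence check described above.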

For the second step of the graphone training process, the Viterbi algorithm is applied to the DAGs to segment the entire training dictionary into sequences of graphones. Running this algorithm on a word-pronunciation pair involves calculating the Viterbi score for each node in its DAG and setting back-pointers, which can then be used to perform a backward trace to identify the graphones corresponding to the best segmentation. The Viterbi scores, $\gamma^*(t_{m,n})$, are updated while moving from the upper-left to the lower-right corner of the DAG. This score represents the probability of the best graphone segmentation up to node $t_{m,n}$. It can be calculated using the following equation:

$$\gamma^*(t_{m,n}) = \max_{\{X_{m,n}\}} p(X_{m,n})\, \gamma^*(x_{m,n}) \tag{2.6}$$

The back-pointer for node $t_{m,n}$ is set to point to the previous node that corresponds to the maximum value in Equation 2.6.
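The segmentation step can be sketched in Python as shown below. The sketch assumes a unigram graphone model stored as a dictionary from (letter string, phoneme tuple) pairs to probabilities, a single size limit `max_len` for both sides of a graphone, and a starting score of 1 at the upper-left node. The name `viterbi_segment` is hypothetical.

```python
def viterbi_segment(letters, phones, prob, max_len):
    """Return (best graphone segmentation, its probability) for one entry."""
    M, N = len(letters), len(phones)
    best = {(0, 0): 1.0}  # Viterbi score of each node
    back = {}             # back-pointer: node -> (previous node, graphone)
    for m in range(M + 1):
        for n in range(N + 1):
            if (m, n) == (0, 0):
                continue
            best_score, best_arc = 0.0, None
            # Equation 2.6: maximize over all arcs entering node (m, n).
            for i in range(max(0, m - max_len), m + 1):
                for j in range(max(0, n - max_len), n + 1):
                    if (i, j) == (m, n):
                        continue  # an empty graphone is not allowed
                    graphone = (letters[i:m], tuple(phones[j:n]))
                    score = prob.get(graphone, 0.0) * best[(i, j)]
                    if score > best_score:
                        best_score, best_arc = score, ((i, j), graphone)
            best[(m, n)] = best_score
            back[(m, n)] = best_arc
    if best[(M, N)] == 0.0:
        return None, 0.0  # no segmentation is possible with this model
    # Backward trace from the lower-right node to the upper-left node.
    segmentation, node = [], (M, N)
    while node != (0, 0):
        node, graphone = back[node]
        segmentation.append(graphone)
    segmentation.reverse()
    return segmentation, best[(M, N)]
```

Applying this function to every dictionary entry yields the segmented dictionary on which the N-gram language model of the third step is trained.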

For the third step, standard N-gram modeling is used to compute statistics over the

segmented training dictionary, which includes smoothing and backing-off to shorter

N-grams. These statistics can then be combined with standard search algorithms

(e.g. Viterbi search, beam search, Dijkstra search) to decode an unknown word into

its most-likely pronunciation.
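As a simplified illustration of this decoding step, the following Python sketch performs a Viterbi search over letter positions. It makes several assumptions that are not part of the method described above: the model is a unigram (N = 1) rather than a smoothed higher-order N-gram, every graphone covers at least one letter, and the search returns the phonemes of the single best graphone sequence instead of summing over all segmentations that share a pronunciation. The name `l2s_decode` is hypothetical.

```python
def l2s_decode(word, prob):
    """Return the phonemes of the most likely graphone sequence, or None."""
    # best[m] = (score, back-pointer) for the first m letters of the word,
    # where the back-pointer is (previous position, phonemes of the last graphone).
    best = [(0.0, None)] * (len(word) + 1)
    best[0] = (1.0, None)
    for m in range(1, len(word) + 1):
        for (chunk, phones), p in prob.items():
            i = m - len(chunk)
            # The graphone must have letters and match the word ending at m.
            if chunk and i >= 0 and word[i:m] == chunk:
                score = best[i][0] * p
                if score > best[m][0]:
                    best[m] = (score, (i, phones))
    if best[-1][0] == 0.0:
        return None  # the word cannot be covered by the known graphones
    pronunciation, m = [], len(word)
    while m > 0:
        m, phones = best[m][1]
        pronunciation[:0] = phones  # prepend this graphone's phonemes
    return pronunciation
```

With a higher-order model, each search state would also have to carry the graphone history, which is why general search algorithms over a composed network are used in practice.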

In 2002, Bisani and Ney improve upon Deligne's formulation by adding an effective

trimming method during training for controlling the number of graphones [6]. This is

important because only a subset of the graphones that satisfy the letter/phoneme size

constraints are actually valid chunks for modeling the training data. The intuition

behind this is closely related to choosing morphemes that best represent the training

data. Because only some of all possible chunks resemble valid morphemes or syllables,

trimming can help us remove spurious graphones from our set. This study explores

various constraints on the number of letters or phonemes allowed in a graphone, and

finds that allowing 1-2 letters and 1-2 phones per graphone, combined with a

trigram language model, gives the best performance in terms of phoneme error rate

(PER). This trimming method, called evidence trimming, trims graphones based on

how much they are used in segmenting word-pronunciation pairs in the dictionary,

and is slightly different from trimming graphone probabilities. For a given iteration of

EM, the evidence for a graphone refers to its accumulated posterior probability over

all word-pronunciation DAGs. The graphone set is trimmed by applying a threshold

for the minimum amount of evidence needed for a graphone to remain in the model.

The probability mass of the trimmed graphones is then redistributed over the remaining

graphones. Note that this is actually the opposite of the intuition from a language

modeling point of view, where we use smoothing to assign probabilities to unseen

data.
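Evidence trimming can be illustrated with a short Python sketch. It assumes that the evidence values are the accumulated posteriors from one EM iteration, and that the mass of the trimmed graphones is redistributed simply by renormalizing over the survivors. The renormalization rule and the name `trim_by_evidence` are assumptions made for illustration and may differ from the procedure in [6].

```python
def trim_by_evidence(evidence, threshold):
    """Drop graphones whose evidence is below threshold, then renormalize."""
    kept = {g: e for g, e in evidence.items() if e >= threshold}
    total = sum(kept.values())
    if total == 0.0:
        raise ValueError("threshold removes every graphone")
    # Renormalizing hands the trimmed mass to the surviving graphones
    # in proportion to their own evidence.
    return {g: e / total for g, e in kept.items()}
```

For example, evidence values of 5.0, 3.0, and 0.5 with a threshold of 1.0 leave two graphones with probabilities 0.625 and 0.375.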

Both Bisani and Ney and Deligne et al. consider Viterbi training rather than EM training as a way to speed up the training process. During Viterbi training, only posterior

probabilities for graphones along the best path in each DAG are accumulated. This

could be a good approximation of considering all paths. However, both studies find

that Viterbi training is highly sensitive to the initialization of graphone probabilities.

One good initialization method is to initialize graphone probabilities proportional to

each graphone's occurrence in all possible segmentations of the training dictionary.

In subsequent studies, Bisani and Ney investigate the use of graphones in large vo-

cabulary continuous speech recognition (LVCSR) [7]. Several studies by other authors

also apply graphones to tasks such as dictionary verification [40]. Because graphone

models are trained probabilistically, they are good for spotting errors in manually-annotated dictionaries. For an entry that is likely to be erroneous, the probability

associated with its graphone segmentation is very low compared to others. Using this

information, we can automatically detect the mislabeled entries in the dictionary.

In a later study, Bisani and Ney revisit the L2S task with a revised

training procedure that integrates EM training with language modeling (LM) [9],

and produce results that are better than or comparable to other well-known L2S

methods. EM training is first used to determine the set of graphone unigrams, in

almost the same fashion as before. Then, the next-highest N-gram model (i.e. bigram)

is initialized with the current model, and trained to convergence using EM. This

process is repeated for successively-higher N-grams until the desired N-gram length

is achieved. This differs from their previous study [6] in that EM is now performed

for each N-gram length, rather than estimating the final language model directly on

a dictionary segmented by unigram graphones. In the higher-order N-gram models,

each node in the dictionary entry's DAG represents a position along the letter-sound

sequence as well as the history for the N-gram that ends at this position. This means

that unlike the older model described by Figure 2-1, the underlying probabilistic

model is no longer a memoryless emitter. Instead, graphone emission probabilities

are now conditioned on the previous N - 1 emissions. Therefore, a node in the

DAG is only connected to previous nodes that satisfy the N-gram history constraint.

Each graphone N-gram is associated with an evidence value that is accumulated

through all DAGs during each iteration of EM. Kneser-Ney smoothing is applied to

graphone N-gram evidence values at the end of each EM iteration. Because these

counts are fractional, we can't use discount parameter selection methods that assume

integer counts. Powell's method is used to tune smoothing discount parameters by

optimizing on a held-out set. When a graphone N-gram's fractional count falls below

the discount parameter value, this N-gram is effectively trimmed. Another difference

between this study and their previous study is that the new EM convergence criterion

is the likelihood of a held-out set rather than the training set, which could increase

the generalizability of the L2S converter. This study concludes that training a long

N-gram (i.e. 8 or 9-gram) along with tiny graphones (i.e. 0-1 letters/phonemes)

produces the best L2S results. This finding is similar to the results produced by

Chen with joint maximum entropy models [11], which is expected because the two

methods have similar statistical learning processes. The results of this study are also

comparable to the discriminative training results reported in [27].

2.2.2

Graphone Flat Hybrid Recognition

In speech recognition, graphones can be an effective method to mitigate the OOV

problem in open vocabulary speech recognition. Ideally, OOV words could be recognized into a sequence of graphones that can be concatenated to form the correct

spellings. There may be cases where an in-vocabulary word is recognized as a series

of graphones, but as long as the graphone sequence still generates the correct spelling,

the speech recognizer's output is still accurate. One way to move closer to this goal

is to use a flat hybrid model for the speech recognizer's language model. To build

this model, OOV words in the recognizer's training corpus are replaced by their graphone representations. Finding the graphone representation of a word can be done

through the same process as L2S conversion, except we stop just before converting

the most-likely graphone sequence to a phoneme sequence. From now on, we will

refer to this process as graphonization. The only other part of the recognizer that

needs to be modified is the lexicon, which needs to be augmented with a list of graphones and their pronunciations. This model is flat in the sense that graphones and

words are treated as equals in the language model; the model is a hybrid because it

contains statistics of N-gram transitions between graphones and words. Bisani and

Ney have applied graphones in flat hybrid recognition for the Wall Street Journal

dictation task [8]. Their graphonization process uses an N-gram size of 3, and their

speech recognizer's language model also has an N-gram size of 3. They find that at

lower vocabulary coverage (88.8% and 97.4%), the word and letter error rates are

significantly reduced by using this flat hybrid model. At high vocabulary coverage

(99.5%), this model does not degrade the recognition performance even though the

added graphones could increase confusability with words. They also note tradeoffs

regarding the graphone size. Larger graphones are easier to recognize correctly, but

have worse L2S performance, leading to less accurate graphonization of OOV words

in the training corpus. Small graphone sizes (i.e. 1-2 letters/phonemes) can also

lead to more insertions in recognition output. In their experiments, they find that

the trade-off point is a graphone size of 4 letters/phonemes for 88.8% and 97.4%

coverage, and a graphone size of 3 for 99.5%.
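The corpus preparation for a flat hybrid language model can be sketched in Python as follows. The sketch assumes that a graphonization function is available (here a toy lookup built from the xylophone entry in Table 2.1) and that each graphone is written as one language model token of the form letters/phonemes. The token format and all function names are hypothetical.

```python
def graphone_token(letters, phonemes):
    """Encode one graphone as a single LM token (hypothetical format)."""
    return f"{letters}/{'_'.join(phonemes)}"


def graphonize_corpus(sentences, vocabulary, graphonize):
    """Replace every OOV word in the LM training text by its graphone tokens."""
    hybrid = []
    for sentence in sentences:
        tokens = []
        for word in sentence.split():
            if word in vocabulary:
                tokens.append(word)  # in-vocabulary words are kept as-is
            else:
                tokens.extend(graphone_token(l, p) for l, p in graphonize(word))
        hybrid.append(tokens)
    return hybrid


def spelling(graphone_tokens):
    """Concatenate the letter parts of recognized graphones into a spelling."""
    return "".join(token.split("/", 1)[0] for token in graphone_tokens)


# Toy graphonizer backed by a fixed segmentation (from Table 2.1).
segmentations = {
    "xylophone": [("xylo", ("z", "ay", "l", "ax")),
                  ("phon", ("f", "ow", "n")),
                  ("e", ())],
}
corpus = graphonize_corpus(["play the xylophone"], {"play", "the"},
                           segmentations.__getitem__)
```

An N-gram model trained on the resulting token sequences treats words and graphones as equals, and `spelling` shows how a recognized graphone sequence maps back to a hypothesized spelling.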

Recently, Bisani and Ney's flat hybrid recognition has been combined with other

methods. Vertanen combines graphone hybrid recognition with confusion networks

and demonstrates improvement with the addition of the confusion network component [39]. This study also notes a technique for mitigating the tradeoff where bigger

graphones are not as good in graphonization performance as smaller graphones. Instead of graphonizing the language model's OOV words directly, they first use a tiny

graphone, large N-gram, L2S unit to generate pronunciations for OOV words, and

then graphonize OOV words using bigger graphones with the L2S pronunciations as

an additional constraint. Akbacak et al. use graphones to model OOV words in a

spoken term detection task [1]. The recent applications of the graphone flat hybrid

model show that this model is a state-of-the-art technique for speech recognition in

domains where OOV words frequently occur.

2.3

SUMMIT Speech Recognition Framework

The SUMMIT Speech Recognizer is a landmark-based speech recognizer that uses

a probabilistic framework to decode utterances into transcriptions [21]. The speech

recognizer is implemented as a weighted finite-state transducer (FST), which consists

of four stages: C ∘ P ∘ L ∘ G. C and P encode phone label context and phoneme

sequences. L is the mapping from phonemes to words, and G is the grammar or

language model (LM). The acoustic model is based on diagonal Gaussian mixtures,

and is represented by features derived from 14 Mel-Frequency Cepstral Coefficients

(MFCCs), averaged over 8 temporal regions. The mapping from phonemes to words

is constructed directly from a pronunciation lexicon. The language model is an N-gram model trained on a large corpus of text, which is usually in the same domain

as where the recognizer is used.

Decoding during recognition is performed through a two-pass search process. The

first pass is a forward beam search where traversed nodes are labeled with their Viterbi

scores. The second pass is a backward A* search where the Viterbi scores are used as

the heuristic for the remaining distance to the destination. A wider search beam can

produce more accurate results, but takes longer to run. To minimize computational

complexity, the forward pass usually uses a lower-order N-gram than the backward

pass. The backward A* search produces the recognizer's N-best hypotheses, along

with their associated probability scores.

Chapter 3

Restaurant and Street Name

Recognition

This chapter focuses on applying graphones to letter-to-sound (L2S) conversion of

restaurant and street names. Since the end goal of L2S in a speech recognition system

is to be able to correctly recognize the corresponding words, we evaluate the quality

of the automatically-generated pronunciations through word recognition experiments.

The objectives of these experiments are to find the optimal training parameters for the

graphone model, determine how using multiple pronunciations affects performance,

and verify that the graphone model generalizes well to truly unseen proper names.

3.1

Restaurant Data Set

The data set used in this experiment was originally collected for research reported in

[13], in which subjects were asked to read 1,992 names in isolation. These names are

selected from restaurant and street names in Massachusetts. They are representative

of a data set that could be used for a voice-controlled restaurant guide application.

These names are especially interesting because a significant portion of them are not

in a typical pronunciation dictionary, so in order for the word recognizer to recognize

these names, we must be able to automatically determine their pronunciations. In a

typical system, as new place names are imported into the system through an update

process, an L2S converter can be run to automatically generate pronunciations for

those words.

3.2

Methodology

The first step involves building graphone language models using the expectation maximization (EM) training process described in [9]. An established ~150k-word lexicon

used by SUMMIT is used for the training process. Different graphone models with

maximum graphone size (L) of 1-4 letters/phonemes and graphone N-gram size (N)

of 1-8 are explored. L is the upper bound on the maximum number of letters as

well as the maximum number of phonemes in a graphone. There is no limit for the

minimum number of letters or phonemes, except that we do not allow graphones to

have neither letters nor phonemes (i.e. null). Note that it is important to allow 0-phoneme graphones because some letters can be silent. Also, for large-L models, the

number of N-grams may no longer change after a certain N, and we do not continue

building higher-order models beyond this point. These models are saved to files in

ARPA language model format, which are then used to build L2S converters using the

MIT Finite-State Transducer (FST) Toolkit [26].

The L2S converter is the composition of three FSTs: letter to graphones, graphone

language model, and graphones to phonemes. The letter-to-graphone FST maps a letter sequence to all possible graphones that fit the letter sequence constraint. The graphone language model component is a direct FST translation of the ARPA language

model file. The graphone-to-phoneme FST concatenates the phoneme components of

the graphones. For a given word, a Viterbi forward search and A* backward search

within the L2S FST is used to generate an N-best list for possible pronunciations.

This L2S converter is then used to generate pronunciations for all 1,992 restaurant

and street names.

For evaluation, a word recognizer is built using the automatically generated pronunciations. A standard set of telephone-quality acoustic models is used [43]. Utterance data collected from users are then used to determine the word error rate (WER)

of this recognizer. These results are also compared to the same experiments using

spellnemes.

3.3

Results and Discussion

3.3.1

Graphone Parameter Selection

Graphone parameters have a big impact on the performance of the system. Bigger

graphones with larger L values can capture more information individually, but each

word contains fewer graphones of this size. Smaller graphones, on the other hand,

capture less information individually, but because each word contains more graphones,

we can more effectively use established language modeling techniques to make the

model generalizable. Bigger graphones also lead to a much larger set of graphones

compared to smaller graphones.

Graphone models with different L and N parameters were used to generate word

pronunciations. The raw data for graphs in this section are located in Appendix A.

Figure 3-1 shows the recognition word error rate (WER) for different combinations

of L and N values. For lower N values, high-L models perform the best because

low-L graphones capture little information individually. For high N values, low-L models perform better, most likely because the language modeling framework is

more powerful and generalizable than the EM framework that identifies the graphone

set. One explanation for this could be that unlike the Kneser-Ney discounting used

with N-grams, there is no discounting that discounts larger graphones for smaller

graphones. Numerical precision may also play a role. For large L, each graphone

unigram has a very small probability, making it likely that some valuable graphones

could be discarded when their evidence falls below numerical precision. For a sense

of comparison, graphone set sizes for the unigram models are: 0.38k, 3.7k, 20k, 54k,

for L = 1, 2, 3, 4 respectively. Numerical precision could also play a role for higher-order N-grams, but because the training process trains each N-gram model

to convergence before ramping up to the next-higher N-gram, we have much more

model stability as we increase N.

[Figure: word error rate plotted against graphone N-gram size (1-8), with one curve each for L = 1, 2, 3, and 4.]

Figure 3-1: Word recognition performance using automatically generated pronunciations produced by a graphone L2S converter trained with various L and N values.

These results show that the best L-N combination is L = 1, and N = 6, which

has a WER of 35.2%. For L = 1, as we increase N past 6, the WER actually starts

to increase slightly. This could be due to over-training as we increase the model order

too much. For L = 2, WER does not decrease beyond a 5-gram. This trade-off point

is around a trigram for L = 3, and bigram for L = 4.

Comparing graphone results with spellneme results from [13] shows that the best

graphone models outperform spellneme models. With spellnemes, the WER is 37.1%

while the best graphone model yields a WER of 35.2%. This could be because for

graphones, EM training with smoothing is run for both unigrams and higher-order N-grams. For spellnemes, however, although N-gram statistics are computed

on a subword-segmented dictionary, there is no additional learning process for readjusting the higher-order N-gram models.

Word        First Hypothesis       Second Hypothesis      Third Hypothesis
albertos    aa l b eh r t ow z     ae l b er t ow z       ae l b er t ow s
creperie    k r ey pd r iy         k r ax p r iy          k r ax p er iy
giacomos    jh ax k ow m ow z      jh ax k ow m ow s      jh ax k ow m ax s
isabellas   ih z ax b eh l ax z    ax s aa b eh l ax z    ih z ax b eh l ax s
renees      r ax n ey z            r ax iy z              r ax n iy z
sukiyummi   s uw k iy uw m iy      s uw k iy y uw m iy    s uw k iy ax m iy
szechuan    s eh ch w aa n         s eh ch uw aa n        s eh sh w aa n
tandoori    t ae n d ao r iy       t ae n d ao r ay       t ae n d ao r ax
valverde    v aa l v eh r df ey    v aa l v eh r df iy    v ae l v eh r df ey

Table 3.1: Examples of the L2S converter's top 3 hypotheses using the graphone language model.

3.3.2 Adding Alternative Pronunciations

The pronunciation dictionary can have multiple pronunciations for each word. In fact, a manually annotated dictionary will definitely have multiple pronunciations for some words, because words can be pronounced in different ways. For an automatically generated dictionary, we could just use the top hypothesis from the L2S converter as the pronunciation. However, the next few hypotheses in the recognizer's output N-best list (ranked by decreasing probability) could be valuable as well, especially if a word truly does have multiple pronunciations. Interestingly, for restaurant and street names, multiple pronunciations have an added benefit: people may pronounce names incorrectly because they are guessing based on prior language knowledge. For example, some people may pronounce a French restaurant name close to its French pronunciation, while others may read the name as if it were an English word. If the L2S converter suggests some of these commonly guessed pronunciations as alternatives, then we are more likely to recognize place names correctly, even if the speaker does not pronounce them correctly in the first place. This all works under the assumption that the L2S converter's top hypotheses are the ones that make the most linguistic sense based on the training dictionary. For the graphone-based L2S, this is usually the case when the training dictionary is large.

Table 3.1 shows some examples of graphone L2S conversion using L = 1 and N = 6.

[Plot not reproduced: x-axis is Graphone N-gram Size (1 to 8); one curve each for L = 1, 2, 3, and 4.]

Figure 3-2: Word-recognition performance using top 2 pronunciations generated using various L and N values.

For most of the words, the first hypothesis is an excellent pronunciation. Sometimes the quality of the pronunciation degrades rapidly, such as for renees, but for most words the first few hypotheses are all reasonable approximations of the true pronunciation. Some of these alternatives capture substitutions between phonemes that are very close to each other, such as s and z, or ax and ae. They can also capture differences in speaking rate. For creperie, the first two pronunciations are more accurate for someone saying the word at conversational speed, especially if they know French, whereas the third pronunciation is a more enunciated form.
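The idea of taking the first few L2S hypotheses as alternative dictionary entries can be sketched as follows. This is a minimal illustration, not the thesis's implementation: the function name `build_lexicon`, the dictionary-of-lists data layout, and the removal of duplicate hypotheses are all assumptions. The example hypotheses are the creperie and tandoori rows of Table 3.1.

```python
def build_lexicon(nbest, k):
    """Keep the k highest-ranked distinct pronunciations for each word.

    nbest maps each word to its L2S hypotheses, ordered best-first.
    Dropping duplicates is an assumption made for this sketch.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    lexicon = {}
    for word, hypotheses in nbest.items():
        kept = []
        for pron in hypotheses:
            if pron not in kept:
                kept.append(pron)
            if len(kept) == k:
                break
        lexicon[word] = kept
    return lexicon


# Top 3 hypotheses for two words, copied from Table 3.1.
NBEST = {
    "creperie": ["k r ey pd r iy", "k r ax p r iy", "k r ax p er iy"],
    "tandoori": ["t ae n d ao r iy", "t ae n d ao r ay", "t ae n d ao r ax"],
}

top2 = build_lexicon(NBEST, 2)  # two pronunciations per word
```

With `k = 1` this reduces to the single-pronunciation dictionary; larger `k` adds lower-ranked hypotheses in order.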

First, we explore various values of L and N for generating the top 2 pronunciations for each word. The WER graph, shown in Figure 3-2, has a shape similar to that of the graph for the top pronunciation only, and the results are slightly better than with the top 1 pronunciation. The best L-N combination is still L = 1 and N = 6, with a WER of 31.6%. Since the same L-N combination gives the best results for both the top 1 and top 2 cases, we use this combination for experimenting with even longer lists of alternative pronunciations.

To study the effect of adding more alternative pronunciations, the number of pronunciations per word is varied from 1 to 50 for L = 1 and N = 6. Multiple pronunciations for each word are weighted equally in the recognizer's FST framework. We expect that as we increase the number of pronunciations per word, the word-recognition performance first gets better because we are adding more useful variation for each word, and then gets worse because too many pronunciations introduce too much confusion into the recognition framework and dilute the probabilities of the correct pronunciations. These results, along with a comparison to spellnemes, are shown in Figure 3-3. We see the most recognition improvement when the number of pronunciations is increased from 1 to 3. The trade-off point is at about 10 pronunciations per word, after which the WER starts increasing with added pronunciations. These results indicate that graphones have the largest advantage over spellnemes when only the top 1 hypothesis is used. Spellnemes do better with a high number of included pronunciations, possibly because their lexically derived nature allows the pronunciation quality to degrade more gradually as we move down the N-best hypothesis list. In addition, because spellnemes are more linguistically constrained, they may be better at generating alternative pronunciations when applying phonological rules leads to multiple correct pronunciations that are distant from each other.
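The dilution effect mentioned above can be illustrated with a small sketch. It assumes that "weighted equally" means each of a word's K pronunciations receives probability 1/K, and that the weight is stored as a negative log probability, as is common in weighted FSTs. Neither detail is stated in this section, and the function name is hypothetical.

```python
import math


def uniform_pronunciation_costs(pronunciations):
    """Pair each pronunciation with the cost -log(1/K) = log(K).

    Assumes equal weighting is normalized over the K pronunciations of a
    word; this normalization is an assumption of the sketch.
    """
    k = len(pronunciations)
    if k == 0:
        raise ValueError("at least one pronunciation is required")
    cost = math.log(k)  # equals -log(1/k); 0.0 when k == 1
    return [(pron, cost) for pron in pronunciations]
```

Under this assumption a single pronunciation carries no penalty, 10 pronunciations each carry a cost of about 2.30, and 50 pronunciations each carry a cost of about 3.91, so the correct pronunciation is penalized more as the list grows.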

3.3.3 Generalizability of Graphone Models

Finally, we explore the generalizability of graphone L2S for this task. The restaurant and street name data set includes some common English names that are likely to occur in the training dictionary. The set of truly unseen names provides a good test for the generalizability of the model. Out of the 1,992 names, 626 names have an exact match in the dictionary used to train the graphone model, and the remaining 1,366 are considered to be unseen. In order to generate good pronunciations for unseen words, the L2S converter must use the probabilities learned from the dictionary to guess the pronunciation of the unseen words. Also, this task is more challenging than evaluating L2S performance by dividing a dictionary randomly into train and test sets, because the unseen words for this task are names of places. Some examples of words in the unseen set are: galaxia, isabelles, knowfat, verdones, and republique. Many of these words are foreign words, concatenations of multiple words, or purposely misspelled words.

[Plot not reproduced: x-axis is Number of Pronunciations per Word; curves for the graphone and spellneme L2S converters.]

Figure 3-3: Word recognition performance with various number of pronunciations generated using the graphone (L = 1, N = 6) and spellneme L2S converters.
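The seen/unseen partition described above (an exact match against the graphone training dictionary) can be sketched as below. The function name and the tiny training vocabulary are hypothetical and serve only as illustration; the thesis reports 626 seen and 1,366 unseen names out of 1,992.

```python
def split_seen_unseen(names, training_words):
    """Partition names by exact string match against the training dictionary.

    Returns (seen, unseen), each preserving the input order of names.
    """
    vocab = set(training_words)
    seen = [name for name in names if name in vocab]
    unseen = [name for name in names if name not in vocab]
    return seen, unseen


# Hypothetical training vocabulary, for illustration only.
training_words = ["main", "isabella", "republic"]
names = ["main", "galaxia", "isabelles", "knowfat", "republique"]

seen, unseen = split_seen_unseen(names, training_words)
```

Because the match is exact, a near-variant such as isabelles counts as unseen even when a closely related word is in the dictionary.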

When only the top pronunciation is used, WER results separated by seen and unseen words are shown in Figures 3-4 and 3-5. For unseen words, we still get very good performance, which is sometimes even slightly better than for seen words. One reason for this favorable performance is that the EM training process has integrated smoothing. For unseen words, we also see a large dip at L = 1 and N = 6, which indicates possible overtraining once the N-gram length goes past 6. This dip is not present for the seen words.

When the top 2 pronunciations are used, WER results for seen and unseen words are shown in Figures 3-6 and 3-7. Interestingly, both plots have dips in their WER curves that indicate overtraining for very high-order N-grams. One explanation for this is that many seen words in the training dictionary only have one pronunciation. While the top L2S pronunciation may match the actual seen pronunciation exactly, the second L2S pronunciation is only a hypothesized guess, since it is not available in the training data. Finally, we have also shown good generalizability to unseen words for the top 2 pronunciations, since the curves are similar to the ones for seen words.

[Plots for Figures 3-4 through 3-7 not reproduced: each has x-axis Graphone N-gram Size (1 to 8) and one curve each for L = 1, 2, 3, and 4.]

Figure 3-4: Word recognition performance for seen words when the top 1 pronunciation is used.

Figure 3-5: Word recognition performance for unseen words when the top 1 pronunciation is used.

Figure 3-6: Word recognition performance for seen words when the top 2 pronunciations are used.

Figure 3-7: Word recognition performance for unseen words when the top 2 pronunciations are used.

3.4 Summary

In this chapter, we built a system that recognizes restaurant and street names, and demonstrated that graphone models are excellent for L2S conversion of these words. We further increased the word recognition performance of the system by incorporating more than one pronunciation for each word, using N-best hypotheses from the L2S converter. Finally, we showed that graphones generalize well to unseen words because their performance does not deteriorate for words not in the graphone training dictionary.


Chapter 4

Lyrics Hybrid Recognition