Syllables and the M Language:

Improving Unknown Word Guessing

by

M. Brian Jacokes

Submitted to the Department of Electrical Engineering and Computer

Science in partial fulfillment of the requirements for the degree of

Master of Science in Electrical Engineering and Computer Science at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

May 2008

© Massachusetts Institute of Technology 2008. All rights reserved.

Author

.... ................

Department of Electrical Engineering and Computer Science

May 23, 2008

Certified by.

...............

David L. Brock

Principal Research Scientist, Lab for Manufacturing and Productivity

Thesis Supervisor

Accepted by ..........

Arthur C. Smith

Professor of Electrical Engineering

Chairman, Department Committee on Graduate Students

Syllables and the M Language:

Improving Unknown Word Guessing

by

M. Brian Jacokes

Submitted to the Department of Electrical Engineering and Computer Science on May 23, 2008, in partial fulfillment of the requirements for the degree of

Master of Science in Electrical Engineering and Computer Science

Abstract

Despite the huge amount of computer data that exists today, the task of sharing information between organizations is still tackled largely on a case-by-case basis. The M Language is a data language that improves data sharing and interoperability by building a platform on top of XML and a semantic dictionary. Because the M Language is specifically designed for real-world data applications, it gives rise to several unique problems in natural language processing. I approach the problem of understanding unknown words by devising a novel heuristic for word decomposition called "probabilistic chunking," which achieves a 70% success rate in word syllabification and has potential applications in automatically decomposing words into morphemes. I also create algorithms which use probabilistic chunking to syllabify unknown words and thereby guess their parts of speech and semantic relations. This work contributes valuable methods to the areas of natural language processing and automatic data processing.

Thesis Supervisor: David L. Brock

Title: Principal Research Scientist, Lab for Manufacturing and Productivity

Acknowledgments

I would like to thank many people for their support during the completion of this thesis.

I would like to thank my advisor, Dave Brock, for taking me on as an M.Eng. candidate and guiding me with several insights that became my thesis.

I am also thankful to the other members of the MIT Data Center (Ed Schuster, Ken Lee, Robby Bryant, Peden Nichols, and Chris Paskov) for their help during our weekly meetings and their comments on my ongoing research.

Finally, I would like to thank my parents, Paul and Pat, and my girlfriend Darlene for supporting me during my time at MIT.

Contents

1 Introduction

1.1 Overview of Research ...........................

1.2 Thesis Outline ...............................

2 Data Languages

2.1 WordNet ...................

2.2 XML ....................................

2.3 The M Language .............................

...............

2.3.1 M Dictionary ...........................

2.3.2 M Files ..............................

2.3.3 The M Platform .

......................

2.4 M and Existing Technologies .......................

2.4.1 Differences between M and Wordnet

2.4.2 Differences between M and RDF .................

..............

3 Natural Language Processing

3.1 NLP Problems ..............................

3.1.1 Word Sense Disambiguation ...................

3.1.2 Unknown Word Guessing .....................

3.2 Adaptations of NLP to Data Languages ................

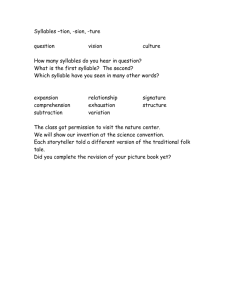

4 Automatic Syllabification

4.1 Syllabification ...............................

7

4.1.1 Role in Unknown Word Guessing . ...............

4.1.2 Current Techniques ...................

4.2 Probabilistic Chunking .. . ............. ..

4.2.1 Algorithm Overview ................

4.2.2 Methods .......

4.2.3 Results and Discussion ...................

...........

4.2.4 Extension beyond Syllables ........... ........

38

.. . .

38

........ 39

......

...

40

42

42

43

5 Unknown Word Guessing

5.1 Part-of-Speech Tagging ...................

5.1.1 Algorithm Overview ...................

5.1.2 Methods ...................

5.1.3 Results and Discussion ................

...........

.......

....

45

45

45

46

... . . 47

5.2 Similar Word Guessing ...........

5.2.1 Problem Overview ...........

5.2.2 Algorithm Overview ......

5.2.3 Methods ....... ..

5.2.4 Results and Discussion ...................

. .

.

. . .

..... .

.

...

.......

.

........

..... ..

49

... .

...

..

49

49

51

51

6 Conclusion

6.1 Contributions ...................

6.2 Future Directions ...................

A M Dictionary Storage Formats

A.1 words.txt ...................

A.2 syllabledict.txt ...................

A.3 relations.txt ...................

B Source Code

B.1 mlanguage.h ...................

B.2 mlanguage.cc ...................

B.3 syllables.cc. ...................

...........

...........

..........

..........

...........

.........

............

........

53

53

.

54

57

.. 57

57

.. 58

59

.. 59

.. 61

..

64

Chapter 1

Introduction

Over the past 20 years, developments in technology have given incredible importance to the role of data in modern society. Corporations and other organizations around the world are now accumulating huge amounts of data every day, and the Internet provides a cheap and easy method for data transmission. The business of gathering, organizing, and analyzing this data is of paramount importance in fields ranging from government intelligence to agriculture.

The task of integrating data from multiple sources is complicated by the lack of a universal data language. Although the Extensible Markup Language (XML) is widely used to store data, there is no simple way to convert between XML files with different schemas. Groups of organizations that wish to share data amongst themselves often work around this problem by creating domain-specific schemas, but this only serves to further isolate information. XML also lacks semantic associations, so that it is often unclear what sense, or definition, of a word is intended in a document. These shortcomings lead to difficulties in sharing data between organizations and an inability to reliably search for information in data files.

Using a semantic data language is a promising approach to storing data which can easily be converted from one format to another, a concept called interoperable data. A semantic data language does not necessarily impose a structure on data, so long as the meaning of each part of the data is clear.

The most powerful application of a semantic data language is the ability to develop algorithms which make no restrictions on the format of their input data. A user could analyze patient records from a hospital with no knowledge of the format in which medical records are kept, or could search for used cars from multiple dealers and find listings for "cars," "automobiles," and "Toyotas." This is what we envision as the future of interoperable data.

Not surprisingly, developing the framework around a semantic data language requires research in the diverse fields of computer systems, user interfaces, and machine learning. In this thesis, I focus on the machine learning problems whose solutions may greatly enhance the interoperability of disparate data.

1.1 Overview of Research

In this thesis, I investigate ways for a machine to automatically convert non-semantic data into a semantic data language. This task involves well-known natural language processing problems, namely the problems of word sense disambiguation and unknown word guessing. Both of these problems are variants on the more general problem of determining the meaning of a word which is encountered in a document.

I hypothesize that syllables are a useful structural unit for analyzing the meaning of words. To this end, I devise an algorithm called probabilistic chunking which is able to automatically break words into syllables, or more generally into any "chunks" of meaning for which training data is available. I train this algorithm on syllable data gathered from the Merriam-Webster dictionary and obtain a 70% success rate in syllabifying unknown words.

I then use this algorithm to make progress on the unknown word guessing problem. This is done by using the probabilistic chunking algorithm, along with a semantic dictionary, to determine the implications of certain syllables on the part of speech and meaning of words in which they are found. I use this to produce algorithms for part-of-speech tagging and for similar word guessing. The part-of-speech tagging algorithm tags unknown words with a success rate ranging from 89% to 97%, depending on how many different definitions the unknown word has. These results are comparable with those of the more complicated techniques which are currently available. My similar word guessing algorithm produced mixed results, but provides clues as to future directions of research. Because I am the first to formulate the similar word guessing problem, there is no prior research against which to compare my results.

1.2 Thesis Outline

The material in this thesis is organized as follows:

Chapter 2 discusses relevant topics concerning data languages. It introduces WordNet, a semantic dictionary which is widely used for natural language research. It also introduces the M Language, a semantic data language recently developed by the MIT Data Center. The M Language is compared and contrasted with two existing technologies: WordNet and RDF.

Chapter 3 presents background information on word sense disambiguation and unknown word guessing, two natural language problems whose solutions could help to automate the conversion of XML data into M. Current approaches to these problems are examined, and their applicability in the context of data files is discussed.

Chapter 4 introduces my key hypothesis regarding the role of syllables in guessing unknown words, and discusses methods for computers to automatically break words into syllables. It describes the algorithm and test results for probabilistic chunking, a novel method for automatically breaking words into smaller chunks of meaning. The application of this algorithm to morpheme breakdown is described.

Chapter 5 details the methods developed for applying syllables to unknown word guessing. It demonstrates a part-of-speech tagger with success rates comparable to those of current techniques, and also explains the prototype for a new unknown word guessing task called similar word guessing.

Chapter 6 concludes by discussing the contributions made in this thesis and suggesting future directions of work.

Chapter 2

Data Languages

In this chapter, I introduce WordNet, a semantic dictionary which has formed the basis for a large body of research into natural language parsing. A knowledge of WordNet is important in understanding background research on unknown word guessing. I also explain the M Language, a semantic data language developed by the MIT Data Center.

The M Language forms a platform which aims to satisfy the growing need for interoperable data. Several other technologies have been created to address this issue, so I will discuss these technologies and explain the positive attributes that M provides.

2.1 WordNet

WordNet is a semantic dictionary that was introduced by Miller [8] in 1990. As in a normal dictionary, each word in the dictionary has one or more entries, each corresponding to a different sense of the word. However, instead of storing only the definition and part of speech for each sense of a word, WordNet also stores a set of semantic relations. These semantic relations provide a representation for the English language which is relatively easy for a computer to understand and process.

There are six types of semantic relations outlined in the original version of WordNet, although several more complicated relations have since been added. These relations are as follows:

* A synonymy indicates that the two words have the same meaning (e.g. "rise" is related to "ascend" by synonymy).

* An antonymy indicates that the two words have opposite meanings (e.g. "rise" is related to "fall" by antonymy).

* A hyponymy indicates that the first word is a member of a class of objects denoted by the second word (e.g. "maple" is related to "tree" by hyponymy).

* A hypernymy is the inverse of a hyponymy (e.g. "tree" is related to "maple" by hypernymy).

* A meronymy indicates that the first word is a part or component of the object described by the second word (e.g. "blossom" is related to "flower" by meronymy).

* A holonymy is the inverse of a meronymy (e.g. "flower" is related to "blossom" by holonymy).

On an intuitive level, these relations are the ones which come to mind most readily when trying to explain an unknown word to another person, so it is natural that they also provide a good basis for representing knowledge about word meanings to a computer.
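The six relations and their inverse pairings can be sketched as a small set of triples. The following Python fragment is only an illustration under assumed names (`INVERSE`, `add_relation`, and `related` are hypothetical); it does not reflect how WordNet itself stores its data.

```python
# Toy store of WordNet-style relations as (first, relation, second) triples.
# All names here are illustrative; this is not WordNet's actual format.

# Each relation is paired with its inverse; synonymy and antonymy are symmetric.
INVERSE = {
    "synonymy": "synonymy",
    "antonymy": "antonymy",
    "hyponymy": "hypernymy",
    "hypernymy": "hyponymy",
    "meronymy": "holonymy",
    "holonymy": "meronymy",
}


def add_relation(store, first, relation, second):
    """Record a relation together with its inverse."""
    store.add((first, relation, second))
    store.add((second, INVERSE[relation], first))


def related(store, word, relation):
    """Return, sorted, every word that `word` is related to by `relation`."""
    return sorted(b for (a, r, b) in store if a == word and r == relation)


relations = set()
add_relation(relations, "rise", "synonymy", "ascend")
add_relation(relations, "rise", "antonymy", "fall")
add_relation(relations, "maple", "hyponymy", "tree")
add_relation(relations, "blossom", "meronymy", "flower")

print(related(relations, "tree", "hypernymy"))   # ['maple']
print(related(relations, "flower", "holonymy"))  # ['blossom']
```

Storing the inverse alongside each relation means that a lookup never needs to know in which direction a lexicographer originally entered the pair.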

The largest task in creating WordNet is to define word senses and specify the relations between them. As described in the implementation details of WordNet in [11], this task is performed by human lexicographers, ensuring that changes to the dictionary are controlled and that entries are accurate. However, this approach limits development speed and leaves out slang and proper nouns, which becomes an issue when focus shifts from the academic analysis of texts to the processing of real-world data. Proper nouns, such as the names of industry-specific technologies, are created and changed at a pace that no team of lexicographers can match.

Despite a few limitations, the WordNet dictionary has many advantages over traditional dictionaries in computational situations. Semantic relations are a convenient way of representing words because they are far easier for a machine to understand than freeform text definitions. They are also powerful in their ability to represent the nuances of word senses, and in many cases the relations offered by WordNet give even more information than a corresponding dictionary or thesaurus entry would.

For these reasons, WordNet has been the platform of choice for many researchers interested in natural language processing.

2.2 XML

Before discussing M, it is convenient to first review XML, which forms a basis for the M language. XML was created in 1996 through the World Wide Web Consortium (W3C) primarily as a way to share data over the Internet [1], and has become ubiquitous in commerce and industry as a standard for data storage.

XML represents data in a tree format by using tags to signify the traversal of the tree. Each tag appears between two angle brackets ("<" and ">"), has a name, and can optionally have attributes or enclosed data. The region between a start-tag, of the form "<tagname>", and an end-tag, of the form "</tagname>", is called an element, and represents a subtree of the XML data tree. Figure 2-1 shows a sample XML document.

The only requirement of an XML file is that it must be well-formed, meaning that it must follow a simple set of syntactical constraints which ensure that the file consists of nested tags, attributes and data. Much of XML's power comes from the freedom of representation that its well-formedness rules allow. As long as data can be represented in a tree structure with some auxiliary information at each node, it can also be represented by an XML document.

    <record>
        <patient sex="male" age="21">
            <name>John Doe</name>
            <condition>Heart disease</condition>
        </patient>
        <doctor>
            <name>Jane Smith</name>
            <education>Harvard Medical School</education>
        </doctor>
    </record>

Figure 2-1: A sample hospital record in XML format. The "record" element is the root of the XML tree, and its children are the "patient" and "doctor" elements. The "patient" element has two attributes, "sex" and "age."

However, it is difficult for a computer to understand such data, because the semantic and structural flexibility of XML allows the same piece of knowledge to be represented in many different ways. In a hospital patient record like the one shown in Figure 2-1, the gender of the patient could appear as an attribute in the "patient" tag or as its own tag in the "patient" element's subtree. The gender could also be referred to by either the name "gender" or "sex." This makes it all but impossible for XML data to be understood without some a priori knowledge of the format that it will be stored in.

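The ambiguity described above can be made concrete with Python's standard `xml.etree.ElementTree` module. The two encodings and the function `naive_sex_lookup` are hypothetical illustrations: both documents are well-formed and carry the same fact, but code written against one layout silently fails on the other.

```python
import xml.etree.ElementTree as ET

# Two well-formed encodings of the same fact; both layouts are illustrative.
AS_ATTRIBUTE = (
    '<record><patient sex="male" age="21">'
    "<name>John Doe</name></patient></record>"
)
AS_ELEMENT = (
    "<record><patient><gender>male</gender>"
    "<name>John Doe</name></patient></record>"
)


def naive_sex_lookup(xml_text):
    """Look for the patient's sex only where Figure 2-1 happens to store it."""
    patient = ET.fromstring(xml_text).find("patient")
    return patient.get("sex")  # None when the attribute is absent


print(naive_sex_lookup(AS_ATTRIBUTE))  # male
print(naive_sex_lookup(AS_ELEMENT))    # None
```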
The most common solution is to use XML Schema, a language also created by W3C. A schema defines a template for an XML document by specifying the names, data types, and layout constraints of all elements of the data tree. For example, a schema for XML documents of the form shown in Figure 2-1 would specify, among other things, that the "record" tag has a child tag called "patient" with an integer "age" attribute. This is done in an XSD file, which is both complicated and visually confusing for humans, as can be seen in Figure 2-2.

XML has become a de facto standard for data storage since its introduction. However, despite its potential to serve as a universal data language, it has instead suffered from the reliance on schemas to interpret data. Because each schema essentially defines an entirely new data format, it is difficult for organizations which use different schemas to exchange data unless conversion tools are created on a case-by-case basis.

<?xml version="1.0" encoding="Windows-1252"?>

<xs:schema attributeFormDefault="unqualified" elementFormDefault="qualified" xmlns:xs="http://www.w3.org/2001/XMLSchema">

<xs:element name="record">

<xs:complexType>

<xs:sequence>

<xs:element name="patient">

<xs:complexType>

<xs:sequence>

<xs:element name="name" type="xs:string" />

<xs:element name="condition" type="xs:string" />

</xs:sequence>

<xs:attribute name="sex" type="xs:string" use="required" />

<xs:attribute name="age" type="xs:unsignedByte" use="required" />

</xs:complexType>

</xs:element>

<xs:element name="doctor">

<xs:complexType>

<xs:sequence>

<xs:element name="name" type="xs:string" />

<xs:element name="education" type="xs:string" />

</xs:sequence>

</xs:complexType>

</xs:element>

</xs:sequence>

</xs:complexType>

</xs:element>

</xs:schema>

Figure 2-2: An XML Schema for the patient record format shown in Figure 2-1. The length and complicated syntax of XSD files make them difficult to maintain.

Thus, despite the widespread use of XML for data storage and information exchange, it has failed to allow the free-flow exchange of data between industries.

2.3 The M Language

The M Language is a semantic data language that was first formulated by Brock and Schuster [2] in 2006. It is designed to retain the desirable structural elements of XML while introducing the semantic associations provided by WordNet.

The combination of these features forms the basis for a platform which intends to improve data interoperability.

2.3.1 M Dictionary

The M Dictionary is modeled after WordNet in many respects; in fact, the original version of the M Dictionary was populated using WordNet content. The M Dictionary has two primary purposes: to resolve word sense ambiguity in data files and to define semantic associations between word senses. The combination of these two functions is a powerful approach to creating interoperable data. By precisely defining the words which appear in data, the M Dictionary alleviates the ambiguity problem that is normally faced when sharing data between organizations. Then, after data has been encoded by specifying word senses instead of just words, semantic associations allow computer applications to more efficiently search through data and convert between arbitrary data formats.

Because of its intended use in real-world applications, the M Dictionary departs from the WordNet implementation in several ways:

* Instead of having controlled releases with changes made only by lexicographers, the M Dictionary is available online at http://mlanguage.mit.edu and is continually updated by authorized users in a wiki format.

* The M Dictionary adds a "proper noun" part of speech and relations for acronyms, abbreviations, and slang.

* Users of the M Dictionary can create their own relations, such as the "borderson" relation (connecting words such as "Massachusetts" and "Connecticut") which was defined by Data Center partner Raytheon Corp.

* In addition to storing definitions and semantic relations, the M Dictionary also allows data formats to be specified for each concept in the dictionary, allowing automatic type checking of data.

* It is sometimes useful for one side of a relation to be a combination of single words from the dictionary, such as when "China" is defined as a "type-of" the concept "Asian countries", so the M Dictionary allows phrases composed of two or more word senses to take part in relations.

* WordNet requires the synonym relation to be transitive (if a is a synonym of b and b is a synonym of c, then a and c must also be synonyms), but the M Dictionary removes this restriction to allow greater flexibility in relations and to make the dictionary editing process easier for users.

These changes promote rapid development of the dictionary and ensure that the M Language can deal with words commonly used in data files.

The M Dictionary currently uses eight relations, many of which are adapted from WordNet, although different terminology is used for ease of understanding:

* The synonym relation is the same as the synonymy relation of WordNet.

* The antonym relation is the same as the antonymy relation of WordNet.

* The type-of relation is the same as the hyponymy relation of WordNet, and implicitly stores the inverse hypernymy relation (e.g. "maple" is related to "wood" by type-of).

* The part-of relation is the same as the meronymy relation of WordNet, and implicitly stores the inverse holonymy relation.

* The attribute-of relation indicates that the first word is an attribute which the second word can have.

* The abbreviation-for relation indicates that the first word is an abbreviation for the second word (e.g. "mi" is related to "mile" by abbreviation-for).

* The acronym-for relation indicates that the first word is an acronym for the second word (e.g. "GUID" is related to the compound word "globally-unique-identifier" by acronym-for).

* The slang-for relation indicates that the first word is a slang term with the same meaning as the second word (e.g. "klick" is related to "kilometer" by slang-for).

The M Dictionary allows data files to reference specific word senses by the simple method of indexing every possible sense of a word. The string of letters "patient," for instance, has four senses according to the M Dictionary. The senses are indexed from "patient.1," meaning "to endure without protest or complaint," to "patient.4," which refers to a hospital patient. As far as the M Dictionary is concerned, these senses represent entirely different words: there is no inherent connection between "patient.1" and "patient.4" unless a set of relations links them in the M Dictionary. Constructing a data file using these indexed M words resolves the ambiguity currently present in most data.
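As a minimal sketch of this indexing scheme, the following Python fragment splits an M word into its base word and sense index. The exact grammar of M words is not specified here, so the regular expression `M_WORD` and the helper `parse_m_word` are assumptions made for illustration.

```python
import re

# Assumed shape of an M word: a base word, a period, and a positive sense index.
M_WORD = re.compile(r"^(?P<word>[A-Za-z][A-Za-z_-]*)\.(?P<sense>[1-9][0-9]*)$")


def parse_m_word(token):
    """Split an M word such as "patient.4" into ("patient", 4); None if invalid."""
    match = M_WORD.match(token)
    if match is None:
        return None
    return match.group("word"), int(match.group("sense"))


print(parse_m_word("patient.4"))  # ('patient', 4)
print(parse_m_word("patient"))    # None, because no sense index is given
```

Because the pair is compared as a whole, "patient.1" and "patient.4" are treated as unrelated keys, which mirrors the statement that the two senses are entirely different words.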

2.3.2 M Files

<record>

<patient sex="male" age="21">

<name>John Doe</name>

<condition>Heart disease</condition>

</patient>

<doctor>

<name>Jane Smith</name>

<education>Harvard Medical School</education>

</doctor>

</record>

(a)

<record.6>

<patient.4 sex.2="male" age.1="21">

<name.1>John Doe</name.1>

<condition.6>Heart disease</condition.6>

</patient.4>

<doctor.2>

<name.1>Jane Smith</name.1>

<education.2>Harvard Medical School</education.2>

</doctor.2>

</record.6>

(b)

Figure 2-3: A sample patient record is shown in both (a) XML and (b) M. The M file reduces the ambiguity present in the original XML file through the use of M words. For instance, the "record.6" differentiates the file from files about Olympic records, the "patient.4" specifies that we are referring to a hospital patient and not a virtuous characteristic, and the "age.1" indicates that we are referring to a numerical age and not an era of history.

The predominant goal of the M Language is to serve as a neutral intermediary between disparate data standards using the concept of an M file. At the most basic level, the M Language has three requirements of an M file: the file must adhere to the well-formedness rules of XML, all tag and attribute names must be valid M words, and if a tag name has an associated format in the M Dictionary then the tag's corresponding element must contain data values which conform to that format.

As a point of comparison, Figure 2-3 shows an XML file with its corresponding translation into an M file. Although the XML record is easily understood by a human, it is difficult for a computer to infer the word meanings from their context. When the M file is cross-referenced with the M Dictionary, however, most of the XML file's ambiguities are eliminated.
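The first two requirements of an M file lend themselves to a mechanical check. The sketch below is one possible reading, not the M platform's own validator: `check_m_file` is a hypothetical function, the `dictionary` argument is a plain set standing in for the M Dictionary, and the third requirement (format checking) is left out.

```python
import re
import xml.etree.ElementTree as ET

# Assumed shape of an M word: base word, period, positive sense index.
M_WORD = re.compile(r"^[A-Za-z][A-Za-z_-]*\.[1-9][0-9]*$")


def check_m_file(text, dictionary):
    """Return a list of problems; an empty list means both checks passed.

    `dictionary` is a hypothetical set of known M words standing in for
    the M Dictionary. Format checking (the third requirement) is omitted.
    """
    try:
        root = ET.fromstring(text)  # requirement 1: well-formed XML
    except ET.ParseError as err:
        return [f"not well-formed: {err}"]
    problems = []
    for element in root.iter():
        for name in [element.tag, *element.attrib]:
            # requirement 2: every tag and attribute name is a valid M word
            if not M_WORD.match(name) or name not in dictionary:
                problems.append(f"not an M word: {name}")
    return problems


KNOWN = {"record.6", "patient.4", "sex.2", "age.1", "name.1", "condition.6"}
M_FILE = (
    '<record.6><patient.4 sex.2="male" age.1="21">'
    "<name.1>John Doe</name.1>"
    "<condition.6>Heart disease</condition.6>"
    "</patient.4></record.6>"
)
PLAIN_XML = '<record><patient sex="male"/></record>'

print(check_m_file(M_FILE, KNOWN))     # []
print(check_m_file(PLAIN_XML, KNOWN))  # three "not an M word" problems
```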

2.3.3 The M Platform

In addition to defining a data format which reduces ambiguity, the M Language project is also focused on creating an entire platform for data translation and analysis.

This framework is designed to enhance the foundation built by the M Language.

The Translator Factory

As described in the discussion of XML, individual organizations often use the structure of an XML Schema to store all files containing a given type of data. Facilitating the translation from these schemas into M files is a key step in helping organizations to adopt the M Language.

A certain hospital, for example, might always store patient records in the format shown in Figure 2-3(a), but at the same time might want to share its data with other hospitals which use different formats. The solution is to build an M adapter which automatically converts the hospital's patient records into an M file such as the one shown in Figure 2-3(b). Because M words are semantically unambiguous, it is much easier to automatically translate between M files than to do so between XML files.

Thus, although the hospital can continue to use its original data schema, it will also be able to easily share its data with other hospitals which have M translators of their own.

The Translator Factory, currently in advanced stages of development, provides a user interface for specifying such an adapter. For a given XML Schema, the user is able to specify intended word senses for all tag and attribute names, and can optionally specify a structural transformation between the input XML Schema and the output M file. After an adapter has been defined, conversion from the given XML Schema into the M Language is entirely automatic.

There are two automation problems which are of interest in the translation process. The first problem is for a computer to automatically determine which word senses are intended for all tag and attribute names in an XML Schema. This touches on two natural language processing problems known as word sense disambiguation and unknown word guessing, both of which are discussed in Chapter 3. The second problem is for a computer to automatically detect the structural transformation needed to go from an input XML Schema to an output in the M Language. This problem is outside the scope of the research done in this thesis.
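Once word senses have been chosen, the renaming half of an adapter is straightforward. The following Python sketch assumes a hand-written mapping (`ADAPTER`, with sense indices copied from Figure 2-3(b)) and a hypothetical helper `to_m`; it performs no structural transformation and is not the Translator Factory's actual implementation.

```python
import xml.etree.ElementTree as ET

# Hypothetical adapter: intended word sense for each tag and attribute name
# in the Figure 2-3(a) format. Sense indices are copied from Figure 2-3(b).
ADAPTER = {
    "record": "record.6", "patient": "patient.4", "sex": "sex.2",
    "age": "age.1", "name": "name.1", "condition": "condition.6",
    "doctor": "doctor.2", "education": "education.2",
}


def to_m(element, adapter):
    """Copy an XML tree, renaming tags and attributes to M words.

    Raises KeyError for any name the adapter does not cover.
    """
    out = ET.Element(
        adapter[element.tag],
        {adapter[key]: value for key, value in element.attrib.items()},
    )
    out.text = element.text
    out.tail = element.tail
    for child in element:
        out.append(to_m(child, adapter))
    return out


xml_record = ET.fromstring(
    '<record><patient sex="male" age="21"><name>John Doe</name>'
    "<condition>Heart disease</condition></patient>"
    "<doctor><name>Jane Smith</name>"
    "<education>Harvard Medical School</education></doctor></record>"
)
m_record = to_m(xml_record, ADAPTER)
print(ET.tostring(m_record, encoding="unicode"))
```

The hard part, choosing the entries of `ADAPTER` automatically, is exactly the first automation problem described above.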

Web Machines

Another component of the M platform is the concept of Web Machines. Web Machines are algorithms whose inputs and outputs take the form of M files, and they are made available online as web pages and as web services.

One advantage of having Web Machines operate on M files is that the validity of inputs can be checked automatically. For instance, the M Dictionary has three entries in the format field of the word "color.1": RGB color, 24-bit RGB color, and hexadecimal. These entries specify that RGB colors consist of a three-element array of floating-point numbers between 0.0 and 1.0, that 24-bit RGB colors consist of a three-element array of integers between 0 and 255, and that hexadecimal colors are represented as strings. When an input file has a "color.1" element with enclosed data, that data can then be validated by checking that it matches one of the formats in the M Dictionary. Similar checks can ensure that temperatures, prices, and GPS coordinates are of the correct format in input data.
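A format check of this kind might look like the following Python sketch. The registry `FORMATS` and the three predicate functions are hypothetical, and the six-digit form assumed for hexadecimal strings goes beyond what is stated above, which says only that hexadecimal colors are strings.

```python
import re


def is_rgb(value):
    """Three floating-point numbers, each between 0.0 and 1.0."""
    return (isinstance(value, (list, tuple)) and len(value) == 3
            and all(isinstance(c, float) and 0.0 <= c <= 1.0 for c in value))


def is_rgb24(value):
    """Three integers, each between 0 and 255 (booleans are rejected)."""
    return (isinstance(value, (list, tuple)) and len(value) == 3
            and all(isinstance(c, int) and not isinstance(c, bool)
                    and 0 <= c <= 255 for c in value))


def is_hex(value):
    """A string of six hex digits with an optional '#' (an assumed form)."""
    return (isinstance(value, str)
            and re.fullmatch(r"#?[0-9A-Fa-f]{6}", value) is not None)


# Hypothetical stand-in for the format field of the M Dictionary.
FORMATS = {"color.1": [is_rgb, is_rgb24, is_hex]}


def validate(m_word, value):
    """True if the value matches any registered format for the M word.

    Words with no registered format are unconstrained, so they pass.
    """
    checks = FORMATS.get(m_word)
    if not checks:
        return True
    return any(check(value) for check in checks)


print(validate("color.1", [1.0, 0.5, 0.0]))  # True
print(validate("color.1", [256, 0, 0]))      # False
```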

Web Machines are also especially adept at extending the range of possible input formats for an algorithm. Consider, for example, an algorithm that searches through a data file full of classified advertisements and finds information about car listings. The simplest implementation when the input file is in XML would be to search for tags with a given name, such as "car," and return the data enclosed by those tags. However, listings that advertise "vehicles," "automobiles," or any of several other related words would not be found by this algorithm. An MQL interface to the M Dictionary, built into the M platform, enables Web Machines to access word relations, making it very easy for Web Machines to search input M files for "cars," "automobiles," and "Toyotas," all at the same time.

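The relation-aware search described above can be sketched in Python as follows. This example does not use MQL, whose syntax is not covered here; instead, two small dictionaries (`TYPE_OF` and `SYNONYM`) stand in for the M Dictionary, and all sense indices and listing data are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Toy stand-in for the M Dictionary; the sense indices are invented.
TYPE_OF = {"Toyota.1": "car.1", "car.1": "vehicle.1"}  # word -> broader word
SYNONYM = {"automobile.1": "car.1"}


def matches(tag, target):
    """True if `tag` is `target`, a synonym of it, or a narrower type of it."""
    seen = set()
    while tag is not None and tag not in seen:
        seen.add(tag)
        tag = SYNONYM.get(tag, tag)  # normalize synonyms first
        if tag == target:
            return True
        tag = TYPE_OF.get(tag)       # then climb one type-of step
    return False


def search(root, target):
    """Return the text of every element whose tag matches the target word."""
    return [el.text for el in root.iter() if matches(el.tag, target)]


ADS = ET.fromstring(
    "<advertisement.1>"
    "<car.1>1999 sedan</car.1>"
    "<automobile.1>2004 coupe</automobile.1>"
    "<Toyota.1>2006 Camry</Toyota.1>"
    "<boat.1>canoe</boat.1>"
    "</advertisement.1>"
)

print(search(ADS, "car.1"))  # ['1999 sedan', '2004 coupe', '2006 Camry']
```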
The greatest potential of Web Machines is in their composability for increasingly complex functions. On the M Language website, simple machines exist to convert stock tickers into company names, to convert company names into RSS News Feeds, and to convert RSS News Feeds into a list of headlines. A simple composition of these three machines allows a user to see headlines associated with a given stock ticker. Input validation ensures that the composition process avoids producing inconsistent inputs, while the ability of machines to accept a wide range of formats greatly increases the scope of composed machines.
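The composition of the three machines can be pictured as ordinary function composition. In the Python sketch below, the machines are local stub functions with made-up data (the real machines are web services that exchange M files), and `compose` is a hypothetical helper.

```python
from functools import reduce


def compose(*machines):
    """Chain machines left to right: the output of each feeds the next."""
    return lambda value: reduce(lambda acc, machine: machine(acc), machines, value)


# Stub machines with made-up data; the real ones are web services.
def ticker_to_company(ticker):
    return {"XYZ": "Example Corp"}[ticker]


def company_to_feed(company):
    return [
        {"title": f"{company} opens new plant", "link": "item-1"},
        {"title": f"{company} reports earnings", "link": "item-2"},
    ]


def feed_to_headlines(feed):
    return [item["title"] for item in feed]


headlines_for_ticker = compose(ticker_to_company, company_to_feed, feed_to_headlines)
print(headlines_for_ticker("XYZ"))
```

In the M platform, input validation between stages would play the role that matching argument and return types play in this sketch.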

2.4 M and Existing Technologies

It should be evident from the above discussion that the M Language provides substantially different functionality than technologies currently used in industry and academia. It is still useful to highlight the most prominent of these differences, so that the unique problems associated with the M Language can be fully understood.

2.4.1 Differences between M and WordNet

The M Dictionary and WordNet are both examples of semantic networks, a form of knowledge representation in which concepts in a set are selectively linked with each other using a set of semantic relations. One significant way in which the M Language differs from WordNet, and indeed from any semantic network, is in the addition of the M file and its platform of data technologies. Semantic networks alone are unable to represent data or perform data analysis, while the M Language is able to handle both of these functions.

A more specific difference between the M Language and WordNet, which is necessitated by the fact that the M Language is built to handle real-world data, is the way in which words and relations are added to their respective dictionaries. While WordNet relies on lexicographers to act as a trusted source of dictionary modification, the M Dictionary can be edited in a wiki format by any registered user. Wiki editing encourages rapid development of the M Dictionary because words can be added as needed when users store data as M files. With widespread adoption of the M Language, the expectation is that the M Dictionary will quickly acquire definitions for everything from corporations to car models to politicians, along with relations which place these terms in the context of the M Dictionary. While defining such terms is perhaps not as important for most applications of WordNet, it is vital for the ability of the M Language to represent real-world data.

These differences in functionality and implementation create several challenges not associated with WordNet. One clear need is for consistency in the definitions of M words as the dictionary is edited, since modifying or deleting an M word such as "patient.1" will affect the meaning of every M file which references that M word. The wiki process also carries a risk of compromised dictionary quality, whether it is in the form of poor definitions which need improvement or in the form of a word having multiple senses which are not very semantically distant from each other.

The M Language website currently provides a user interface to facilitate the processes of adding new word senses, editing and merging existing word senses, and deleting deprecated word senses. Several machine learning techniques have the potential to greatly reduce human error and even automate the process of changing the dictionary. For example, if two M words have identical or very similar semantic relations in the M Dictionary, then it is likely that they should be either merged or connected with a synonym relation. In addition, if analysis of a large corpus of data shows that tags named by one M word often have attributes named by another M word, then it is likely that those two M words should be linked with the attribute-of relation.
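The first suggestion above can be sketched as a set-overlap test. This is a minimal illustration, assuming that each M word's relations are available as a set of (relation, target) pairs; the Jaccard measure, the threshold, and the sample words are choices made for this example and are not taken from the M Dictionary.

```python
from itertools import combinations

def jaccard(a: set, b: set) -> float:
    """Overlap of two relation sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def suggest_merges(relations: dict, threshold: float = 0.8) -> list:
    """Return pairs of words whose relation sets are nearly identical."""
    suggestions = []
    for w1, w2 in combinations(sorted(relations), 2):
        score = jaccard(relations[w1], relations[w2])
        if score >= threshold:
            suggestions.append((w1, w2, score))
    return suggestions

# Hypothetical relation sets keyed by M word.
RELATIONS = {
    "car.1": {("type-of", "vehicle.1"), ("has-attribute", "engine.1")},
    "automobile.1": {("type-of", "vehicle.1"), ("has-attribute", "engine.1")},
    "dog.1": {("type-of", "animal.2")},
}

candidates = suggest_merges(RELATIONS)
```

Each suggested pair would still need human review to decide between merging the two words and linking them with a synonym relation.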

Since the M Language deals with real-world data in which new concepts are constantly being introduced, one interesting problem is to automatically determine information about a word which does not appear in the M Dictionary. Solving this task would allow the wiki interface to automatically suggest a part of speech, as well as potential semantic relations, when a user adds an unknown word to the M Dictionary. A solution would also have the potential of automating the definition process in the future. This problem is one which will be more fully discussed in Chapter 5.

2.4.2 Differences between M and RDF

The Resource Description Framework (RDF) is a technology created by the W3C which provides a framework for defining semantic relations in data. The central concept of RDF is that of a triple, which consists of a subject, a predicate, and an object.

Triples, which serve a similar function in RDF as relations do in the M Dictionary, can use the subject "dog," the predicate "is a type of," and the object "animal" to express the same concept that is expressed by the type-of relation between "dog.1" and "animal.2" in the M Dictionary. Any of the components of a triple can refer to other resources in the form of a URI, so that the subject of a triple, for instance, can contain a URI which points to a complete RDF description of the subject. This allows concepts to be built on top of each other in RDF.

RDF has the same deficiency as WordNet in the sense that it does not have a platform built around its knowledge representation. Thus, there is no infrastructure for translating legacy data into RDF or for performing data analysis on RDF data.

The syntax used to express RDF relations in XML is difficult for humans to read, so this lack of infrastructure makes the widespread adoption of RDF difficult.

Also, although RDF triples can be used to describe the same types of semantic relations that are found in the M Dictionary, RDF lacks a centralized source of knowledge like the one provided by the M Dictionary, and in this respect has the same semantic deficiencies as XML. To build concepts on top of each other in RDF requires URIs of external resources, so a computer might have to load several external documents to fully understand the relations expressed in RDF data. The other issue with a lack of a centralized dictionary is that it is possible for a single concept to be described by arbitrarily many URIs. For instance, while RDF provides the ability to create a description of a hospital patient, an RDF file which refers to hospital patients may do so using any URI which points to such a description. The M Language, on the other hand, requires that the M word "patient.4" is the only term which can be used to refer to a hospital patient. The M Language is therefore significantly different from RDF because concepts and relations defined in the M Dictionary are both easily accessible and shared across all M files.

Chapter 3

Natural Language Processing

In this chapter, I discuss natural language processing (NLP) problems and their applications to the M Language. Central among these problems are the well-studied problems of word sense disambiguation and unknown word guessing. I explain these problems and the research which has been done on them.

Much of the research done on language processing focuses on analyzing natural language, whose format differs dramatically from the sorts of data that need to be analyzed for M Language applications. These differences give rise to several unique variants on NLP problems. I will explain these variants and why they require a fundamentally different approach than those covered by current research.

3.1 NLP Problems

The goal of NLP research is to allow computers to understand natural language: freeform text of the sort which might be written in a book or transcribed from human conversation. A human-level understanding of language is well beyond the reach of current research, so NLP has become an umbrella term which refers to any of several subproblems of natural language understanding, from parse tree creation to information retrieval. NLP research draws on clues from both artificial intelligence and linguistics in its attempt to solve these problems.

NLP has important applications to the M Language in the areas of data translation and dictionary management. Automating the translation of legacy data into the M Language requires converting each tag and attribute name into an M word. If a tag or attribute name has more than one sense in the M Dictionary, one must determine which sense is intended in the given context, a task known as word sense disambiguation. An even more difficult problem is to guess information about a tag or attribute name which doesn't even appear in the M Dictionary, a problem known as unknown word guessing. Other goals of the M Language project, such as automatic inference of word relations, would also benefit from improved natural language processing.

It is worth noting again that NLP research is specifically designed to deal with natural language, and not with XML or other common data formats. This significantly reduces the number of NLP techniques which are applicable in processing XML, because many NLP techniques guess information about a word by its context in a sentence. Thus, although NLP problems such as word sense disambiguation and unknown word guessing are relevant to the M Language, most of the current research on these problems cannot be used for data processing. In the next section, I will discuss potential ways to apply NLP techniques to data files, which I will hereafter use as an umbrella term for any format which can be used to store data. In practical terms this refers to XML, Excel, CSV, and any other file format where information is not stored in complete sentences.

3.1.1 Word Sense Disambiguation

Many words in the English language are associated with more than one possible word sense, a situation known as polysemy. This leads to the problem of word sense disambiguation, in which a machine must attempt to determine which sense of a polysemous word is intended, given an occurrence of that word in a document.

As we saw in Chapter 2, the M Language resolves the potential ambiguity of polysemous words by using M words, for which the intended word sense is clearly defined. In fact, if the M Language were universally used for data storage, then the problem of word sense disambiguation would be solved. Unfortunately this is not the case, and most data files are filled almost entirely with polysemous words whose intended meaning is unclear.

The problem of word sense disambiguation, as it applies to machine understanding, was proposed nearly 60 years ago. I direct the reader to Ide's valuable summary in [5] for a historical perspective on approaches to this problem. There are two common themes which can be drawn from all current research on word sense disambiguation.

The first such theme is the use of word context. It almost goes without saying that disambiguation algorithms must rely on the context of a word to have any hope of success, for the only other possibility is to resort to always guessing the most common sense of a word. In natural language processing, word context usually refers to a window of N words on either side of a word occurrence. The second common theme is that of word collocations, or words which appear near each other in text. Seeing certain word collocations gives strong clues as to the intended meaning of both words.

Finding and interpreting these clues is currently the most promising approach to word sense disambiguation. Current algorithms draw information from word collocations using either relational information from a semantic network such as WordNet or the M Dictionary, or a large corpus of text with a small amount of supervised training.

To illustrate current methods of word sense disambiguation, I will summarize two successful approaches to this problem which have the potential to be adapted for use with data files. The first method uses a large corpus of text and a small training set to learn relations between word senses, while the second method determines relations between word senses using a similarity metric defined over a semantic network such as WordNet.

Large Corpus Learning

Using a corpus of raw text to uncover relations between word senses is a daunting task, because the text itself has ambiguous words. If a corpus is to be the predominant source of relational information, so that the algorithm does not have any external dictionary or semantic network with which to work, then a small amount of training is necessary, if only to inform the algorithm about the possible senses of a word.

In [12], Yarowsky presents a method for word sense disambiguation which fits the above description. He focuses specifically on the task of disambiguating between two possible senses of a certain word, given a large corpus of text which contains the word in both possible senses. Yarowsky's observation is that of "one sense per collocation," meaning that seeing certain word collocations in text generally suggests the correct word sense for one of the words. The example given by Yarowsky is that of the word "plant," which can refer either to vegetation or to an industrial complex. In this instance, observing a "plant"-"life" collocation usually suggests the former definition, while a "plant"-"manufacturing" collocation usually suggests the latter definition. These original collocations are called the seeds for the algorithm.

After choosing these seeds, the algorithm can guess the intended sense of "plant" with a fairly high success rate if the words "life" or "manufacturing" occur nearby.

This still leaves a large set of sentences in which neither of these collocations occurs.

Yarowsky's solution is to use his current set of informative collocations to guess the intended sense for as many occurrences of "plant" as he can, and then to pass these guesses to a supervised algorithm which finds more informative collocations.

For instance, the word "animal" occurs frequently among sentences with a "plant"-"life" collocation, but rarely occurs among sentences with a "plant"-"manufacturing" collocation; thus it can be inferred that a "plant"-"animal" collocation is indicative of the first sense of "plant." This collocation can be used to guess the intended sense for more occurrences of the word "plant," which can in turn be used to find even more informative collocations.

Iteratively performing this process serves to bootstrap two seeds into a large set of collocations which can accurately predict the intended sense of a word occurrence.
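The bootstrapping loop can be sketched as follows. This is a heavily simplified illustration and not Yarowsky's actual procedure: the supervised step is replaced here by a plain purity count, each context is reduced to a set of nearby words, and the contexts, sense labels, and `min_count` threshold are all invented for the example.

```python
from collections import Counter, defaultdict

def label(context, collocations):
    """Return the sense voted for by known collocations, or None if none or tied."""
    votes = Counter(collocations[w] for w in context if w in collocations)
    ranked = votes.most_common(2)
    if not ranked or (len(ranked) == 2 and ranked[0][1] == ranked[1][1]):
        return None
    return ranked[0][0]

def bootstrap(contexts, seeds, min_count=2, max_rounds=10):
    """Grow the seed collocations by repeatedly labeling contexts."""
    collocations = dict(seeds)
    for _ in range(max_rounds):
        counts = defaultdict(Counter)  # word -> sense -> labeled contexts seen in
        for context in contexts:
            sense = label(context, collocations)
            if sense is not None:
                for word in context:
                    counts[word][sense] += 1
        new = {}
        for word, by_sense in counts.items():
            # Keep a word only if it co-occurs with exactly one sense, often enough.
            if word not in collocations and len(by_sense) == 1:
                sense, n = next(iter(by_sense.items()))
                if n >= min_count:
                    new[word] = sense
        if not new:
            break
        collocations.update(new)
    return collocations

# Words found near occurrences of "plant" (made-up data).
CONTEXTS = [
    {"life", "animal"}, {"life", "animal", "species"},
    {"manufacturing", "equipment"}, {"manufacturing", "equipment", "worker"},
    {"animal", "species"}, {"equipment", "worker"},
]
SEEDS = {"life": "vegetation", "manufacturing": "factory"}

learned = bootstrap(CONTEXTS, SEEDS)
```

In this toy run, "animal" and "equipment" are learned in the first round, which in turn lets the last two contexts be labeled so that "species" and "worker" are learned in the second.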

Yarowsky provides results which consistently place the success rate of the algorithm between 92% and 98% with well-chosen seeds. This method has the advantage of requiring very little framework, needing only a corpus of text and two seeds to perform disambiguation for a given word. However, its success has only been demonstrated for disambiguating between two word senses, which is significantly easier than when there are three or more potential word senses. Also, the onus of finding two high-quality seed collocations for every word that is to be disambiguated arguably takes more effort, over the long run, than developing a semantic network such as the M Dictionary.

Semantic Relatedness

The large corpus learning method uses a complicated iterative method to determine words which are related to each other, but if a semantic network is available to our algorithm, this relatedness information can be determined even more easily. Semantic relatedness is a general term given to any measurement of word sense similarity which is calculated from a semantic network. For instance, a simple (but unreliable) method of determining semantic relatedness would be to assign two word senses a score of 1 if they are synonyms and 0 if they are not. Once one has a measure of semantic relatedness, disambiguating a word occurrence can be performed by guessing the word sense which has the greatest semantic relatedness to other nearby words.
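A minimal sketch of this procedure, using the simple synonym score described above, might look as follows. The sense identifiers, the synonym table, and the choice to sum the best score per neighboring word are assumptions made for illustration; any other relatedness measure could be passed in place of `synonym_relatedness`.

```python
def synonym_relatedness(a, b, synonyms):
    """Score 1 if the two senses are listed as synonyms, else 0."""
    return int(b in synonyms.get(a, set()) or a in synonyms.get(b, set()))

def disambiguate(word, context, senses, relatedness):
    """Pick the sense of `word` with the greatest total relatedness to the context."""
    def score(candidate):
        total = 0
        for neighbor in context:
            neighbor_senses = senses.get(neighbor, [])
            if neighbor_senses:
                # Credit the candidate with its best match among the neighbor's senses.
                total += max(relatedness(candidate, s) for s in neighbor_senses)
        return total
    return max(senses[word], key=score)

# Hypothetical toy network.
SENSES = {
    "plant": ["plant.1", "plant.2"],
    "factory": ["factory.1"],
    "works": ["works.1"],
}
SYNONYMS = {"plant.1": {"flora.1"}, "plant.2": {"factory.1", "works.1"}}

best = disambiguate(
    "plant", ["factory", "works"], SENSES,
    lambda a, b: synonym_relatedness(a, b, SYNONYMS),
)
```

With this data the second sense of "plant" is selected, because it is the only sense related to either neighboring word.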

A good summary of ways in which to measure semantic relatedness in WordNet is given by Patwardhan et al. in [9]. These metrics use, among other techniques, the following procedures to determine semantic relatedness:

* Build a tree over all word senses by using "type-of" relations as connections between parent nodes and child nodes, and then determine the relatedness of two concepts by measuring the information content (specifically, the negative log frequency of occurrence in language) of their lowest common ancestor (LCA). Concepts whose LCA has a high information content are considered to have a high semantic relatedness [10].

* Build a tree as above, but use a hybrid approach which combines the information content of the lowest common ancestor of the two concepts with the information content of the concepts themselves. If the information content of the individual concepts is much greater than the information content of their LCA, then the concepts are considered to have a high semantic relatedness [6].

* Treat the semantic network as a graph with upward relations (such as type-of), downward relations (such as has-attribute), and horizontal relations (such as synonym), and then find the shortest path between two concepts. If this path is short and doesn't "change directions" often, then the concepts are considered to have a high semantic relatedness [4].
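The first procedure in the list above can be illustrated on a toy tree. The taxonomy and occurrence counts below are invented, and the sketch assumes that the frequency of a concept includes the occurrences of all of its descendants; it is not drawn from WordNet or from the cited implementations.

```python
import math

# Hypothetical "type-of" tree (child -> parent) and per-concept occurrence counts.
PARENT = {"dog": "animal", "cat": "animal", "animal": "entity",
          "car": "vehicle", "vehicle": "entity", "entity": None}
COUNT = {"dog": 10, "cat": 10, "animal": 5, "car": 20, "vehicle": 5, "entity": 0}

def ancestors(concept):
    """Return the path from a concept up to the root, concept first."""
    path = []
    while concept is not None:
        path.append(concept)
        concept = PARENT[concept]
    return path

def subtree_count(concept):
    """Occurrences of a concept plus those of all its descendants."""
    children = [c for c, p in PARENT.items() if p == concept]
    return COUNT[concept] + sum(subtree_count(c) for c in children)

TOTAL = subtree_count("entity")

def information_content(concept):
    """Negative log frequency of the concept."""
    return -math.log(subtree_count(concept) / TOTAL)

def lowest_common_ancestor(a, b):
    seen = set(ancestors(a))
    for node in ancestors(b):
        if node in seen:
            return node
    raise ValueError("concepts share no ancestor")

def lca_relatedness(a, b):
    """Relatedness as the information content of the lowest common ancestor."""
    return information_content(lowest_common_ancestor(a, b))
```

Here "dog" and "cat" meet at the fairly specific concept "animal", while "dog" and "car" meet only at the root, whose information content is zero, so the first pair is judged more closely related.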

An understanding of these methods isn't essential to understanding how semantic relatedness attempts to solve word sense disambiguation. What is important, however, is that these metrics provide a mechanism for determining similar words which is more automated and in-depth than the learning process that is formulated by Yarowsky.

Whether these metrics improve the results obtained by Yarowsky is arguable.

Patwardhan gives test results for each of several metrics on a set of 28 different words, whose number of possible senses ranges from two to 21. These results show a success rate of 46% to 89% for words with two possible senses, which fails to reach the mark which Yarowsky shows with his results. On the other hand, semantic relatedness correctly tags up to 25% of the occurrences for words with 21 possible senses, a success rate which is significantly better than random chance and which is likely better than an adapted version of Yarowsky's method would give. There is also potential for combinations of these semantic relatedness measures to yield improved disambiguation performance.

3.1.2 Unknown Word Guessing

Even if a computer is provided with an extensive dictionary, it will inevitably encounter words which are not part of its vocabulary. Whether an unknown word is a proper noun, slang, or simply not included in the given dictionary, it is important for a computer to be able to make an educated guess as to the part-of-speech (POS) and meaning of the word.

The task of truly guessing the meaning of an unknown word is significantly harder for a computer than the problem of word sense disambiguation. There are several levels on which one might want a computer to understand the meaning of an unknown word, and none of these levels is easy to achieve. The ability to compute a natural language definition of an unknown word is well beyond the reach of today's research, and even guessing synonyms of an unknown word is extremely difficult. To truly understand the difficulty of the problem, note that word sense disambiguation has a small set of possible answers and is easily performed by humans, while the range of synonyms for an unknown word is enormous (essentially as large as the dictionary itself), and guessing the meaning of an unfamiliar word is very difficult for humans, even if they have a good knowledge of etymology.

Because of the difficulty of this task, and because of the difficulty in formulating the problem so as to be able to achieve quantifiable results, research into unknown word guessing almost exclusively deals with the far easier problem of POS-tagging. This problem attempts to predict the POS-tag of a word which is encountered in text. Although I will attempt to tackle a more ambitious form of unknown word guessing in Chapter 5, the remainder of this section will be devoted to POS-tagging.

As with word sense disambiguation, there is an approach to POS-tagging which uses the context of a word to guess its POS-tag. However, instead of using contextual clues like word collocations, which have the potential to be adapted into algorithms that run on data input, context-based POS-taggers analyze the sentence structure surrounding a word to guess its POS-tag. While these techniques are interesting in their own right, they apply more to language parsing than data analysis, and so I will avoid discussing them in more depth.

The other widely-used method for POS-tagging takes advantage of the fact that word prefixes and suffixes often express information about the POS-tag of a word.

One example taught in grade school is the fact that the suffix "-ly" has a strong correlation to adverbs in English, but there are several less obvious etymological clues which suggest that a word might be a noun, verb, or adjective.
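A suffix clue of this kind can be sketched as a small lookup table. The suffixes, POS-tags, and reliability figures below are invented for illustration and are not measured values.

```python
# Hypothetical suffix clues: suffix -> (POS-tag, assumed reliability).
SUFFIX_CLUES = {
    "ly": ("adverb", 0.90),
    "ness": ("noun", 0.95),
    "ous": ("adjective", 0.90),
    "ize": ("verb", 0.85),
}

def guess_pos(word, clues=SUFFIX_CLUES):
    """Return the POS-tag of the most reliable matching suffix, or None."""
    matches = [
        clues[suffix] for suffix in clues
        if word.endswith(suffix) and len(word) > len(suffix)
    ]
    if not matches:
        return None
    return max(matches, key=lambda clue: clue[1])[0]
```

For example, `guess_pos("quickly")` yields "adverb" and `guess_pos("table")` yields no guess. The same table would wrongly tag "family" as an adverb, which is why such clues are only correlations and not fixed rules.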

In [7], Mikheev uses the prefix/suffix approach to automatically deduce rules for POS-tagging. His algorithm looks for rules which are roughly of the form "adding the prefix $p$ or suffix $s$ changes words with the POS-tag $P_1$ into words with the POS-tag $P_2$." For instance, the observation above about the suffix "-ly" might be written as "adding the suffix -ly changes adjectives into adverbs." Mikheev presents a process for generating, merging, and evaluating these rules so that only the most successful rules remain. The POS-tag of an unknown word can then be guessed by using the result of an applicable rule with the highest success rate. When tested on a raw corpus of text, this method achieves a success rate of about 87% on unknown words.

3.2 Adaptations of NLP to Data Languages

The successful adoption of the M Language will require tools to facilitate and automate translation of legacy data, so the NLP problems of word sense disambiguation and unknown word guessing are essential components to the future of the M Language project. Successfully integrating the techniques from this chapter into M Language applications, however, requires changing the context and solutions for the corresponding NLP problems.

Although techniques for word sense disambiguation are specifically formulated in terms of natural language, it is not at all difficult to extend these techniques into the realm of data. All that is required is an altered definition of the context of a word occurrence, which can be defined as some function of nearby tag and attribute names in the XML tree structure of the file. Precisely defining the context of a word occurrence in a data file is an interesting problem, but because the arrangement of words is the only thing which separates disambiguation of natural language from disambiguation of data files, it is unlikely that studying word sense disambiguation in the context of data files will present interesting language processing problems.
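One possible definition of such a context is sketched below using Python's standard `xml.etree.ElementTree` module. The choice of neighbors (the element's own attributes, its children, its parent, the parent's attributes, and its siblings) and the sample document are assumptions made for this example; other definitions are equally plausible.

```python
import xml.etree.ElementTree as ET

def context_of(root, target):
    """Collect tag and attribute names that surround `target` in the tree."""
    # ElementTree keeps no parent pointers, so build a child -> parent map.
    parent_of = {child: parent for parent in root.iter() for child in parent}
    names = set(target.attrib)                    # the element's own attribute names
    names.update(child.tag for child in target)   # names of its children
    parent = parent_of.get(target)
    if parent is not None:
        names.add(parent.tag)
        names.update(parent.attrib)
        names.update(sib.tag for sib in parent if sib is not target)
    return names

# Made-up data file.
DOC = (
    '<hospital><patient id="7"><name>Ann</name><ward>3</ward></patient>'
    "<doctor/></hospital>"
)
root = ET.fromstring(DOC)
context = context_of(root, root.find("patient"))
```

The resulting set of names could then play the role that a window of neighboring words plays in natural language text.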

Unknown word guessing, on the other hand, has several attributes which make it an attractive subject of study in the context of data files. First, the research done on unknown word guessing is in an extremely primitive state when compared with the research done on word sense disambiguation, and research is primarily done on the low-level problem of POS-tagging. Additionally, because data files lack a sentence structure, many techniques used for POS-tagging of unknown words will not work in the context of data files. The frequency with which proper nouns and slang words are used in real-world data is also much higher than in most natural language, so there is a great need for even simplistic mechanisms to guess meanings of unknown words.

For these reasons, I avoid the well-understood problem of word sense disambiguation, as it does not open up interesting new problems when placed in the context of data processing. Instead, I make a concerted effort in this thesis to study ways in which unknown word guessing can be effectively performed on data files.

Chapter 4

Automatic Syllabification

In this chapter, I discuss a problem called syllabification, which seeks to break words into their component syllables. My hypothesis is that breaking an unknown word into syllables is useful for deducing information about the word. I present a novel method called probabilistic chunking which performs automatic syllabification of unknown words, and I compare it with current methods for syllabification. I also explain ways in which probabilistic chunking can be used to break words into other components of meaning such as morphemes.

4.1 Syllabification

A syllable is the smallest unit of sound into which spoken words can be broken.

Although syllables are primarily an auditory concept, they are often extended to the realm of written language. In some languages, such as Japanese, this correspondence is very intuitive because each character corresponds to a single syllable. In English, however, a word's syllables are comprised of one or more letters from the word. The syllabification of a word is often denoted by placing hyphens between the component syllables, so that the word "syllable" is syllabified as "syl-la-ble."

The process of syllabification is not the subject of much research, and syllables are generally studied in the context of spoken language and not written language. A few techniques have been developed for computers to perform automatic syllabification of written language, but these largely have the goal of speech synthesis in mind. The use of syllables in helping machines to understand language has not been thoroughly investigated.

4.1.1 Role in Unknown Word Guessing

My hypothesis is that syllabification has the potential to be a powerful tool in unknown word guessing. For intuition, one needs only to look at how humans attempt to derive the meanings of unknown words. Young children who encounter unknown words while reading usually attempt to sound them out, explicitly breaking them into individual syllables. As they grow older, children begin to learn prefix and suffix rules, such as the use of "un-" to negate an adjective or "-like" to denote similarity to a preceding noun. These prefixes and suffixes almost always take the form of a spoken syllable. Even high school students studying for the SATs memorize word roots, which provide more subtle hints about the meaning of a word.

As I discussed in Chapter 3, it has already been shown that computers can perform POS-tagging by using prefixes and suffixes of words. What I propose is the more ambitious goal of extending the problem of unknown word guessing to the general task of making sense of an unknown word, instead of limiting it to the simple task of POS-tagging. Moreover, since the interior of words must also be useful in determining word meanings (for they are all that separate words such as "unfaithful" and "unfearful"), I believe that the approach of using only prefixes and suffixes must be carefully avoided. The breakdown of unknown words into smaller units of meaning is necessary for determining their meanings, and syllables offer a straightforward method for obtaining such a breakdown.

4.1.2 Current Techniques

For a summary of current techniques in syllabification, we turn our attention to work on speech synthesis, as it is the only major field of research which deals with automatic syllabification of written language. There are two predominant methods used in speech synthesis for breaking words into syllables. The first is a dictionary-based approach, which simply attempts to exhaustively store the syllable breakdown for every possible word. Clearly this approach is not applicable for the purposes of unknown word guessing, because we are seeking to syllabify words which do not appear in the dictionary. The other approach to syllabification is a rule-based approach, which relies on observations about the placement of consonants and vowels to guess where syllable breaks occur. For instance, a word which contains four letters in sequence vowel-consonant-consonant-vowel usually has a syllable break between those two consonants.
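The vowel-consonant-consonant-vowel rule can be sketched in a few lines. This example applies only that single rule, assumes lowercase input, and treats "y" as a consonant for simplicity; a real rule-based syllabifier would need many more rules and exceptions.

```python
VOWELS = set("aeiou")  # simplification: "y" is treated as a consonant

def split_vccv(word):
    """Break a word between the two consonants of every V-C-C-V pattern."""
    pieces, start = [], 0
    for i in range(1, len(word) - 2):
        v1, c1, c2, v2 = word[i - 1], word[i], word[i + 1], word[i + 2]
        if v1 in VOWELS and c1 not in VOWELS and c2 not in VOWELS and v2 in VOWELS:
            pieces.append(word[start:i + 1])  # cut after the first consonant
            start = i + 1
    pieces.append(word[start:])
    return pieces
```

The rule alone splits "rabbit" into "rab-bit" but leaves "pollutant" as "pol-lutant", which shows why additional rules are required in practice.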

Neither of these approaches appears promising in terms of a potential use in unknown word guessing. The first approach will not work on unknown words, and the second approach uses seemingly arbitrary rules to determine the syllable boundaries in a word. As is the case with rule-based approaches, there are also exceptions to syllabification rules which force the second approach to make a tradeoff between performance and simplicity. It is desirable to seek a more elegant solution to the syllabification problem before turning our attention back to unknown word guessing.

4.2 Probabilistic Chunking

If we again appeal to intuition and examine how humans pronounce a word like "uncanny," we will likely find that the syllable "un" is produced without any conscious analysis of rules associated with consonants and vowels. Instead, English speakers know that the string "un," when it appears in a word, almost always represents a syllable, while the strings "u" and "unc" almost never represent syllables. A native English speaker who has never heard any of the words derived from "awe" spoken aloud would in all likelihood break the word "awesome" into the syllables "a-we-some," for he would have no reason to anticipate the existence of an entirely new syllable. The ability of humans to continually learn new syllables and change their syllabification process based on previous experience suggests that rule-based approaches are not satisfactory explanations for how humans break written words into syllables.

I have developed a probabilistic heuristic which is able to reliably break words into smaller units of meaning. Although this method primarily applies to the process of automatic syllabification, it can also be trained to recognize any well-defined units of meaning when provided with the proper training set. The motivation for this process is the increasing evidence that humans use probabilistic information to understand and process language. Several models which successfully use such a probabilistic approach are described by Chater and Manning in [3].

4.2.1 Algorithm Overview

I will define a chunk to be a substring of a word which carries some sort of meaning.

This applies directly to the problem of syllabification if we define syllables to be our chunks, but the generality of this definition allows the algorithm to be used for more than just syllabification. The algorithm requires a set of training data which consists of several words and their corresponding breakdown into chunks.

The central idea of probabilistic chunking is to examine, for every possible chunk $c$, the conditional probability that $c$ is a chunk in a word $s$ given that $c$ is a substring of $s$. In the context of our training data, we can define

$$P_{chunk}(c) = \frac{\text{\# of times that } c \text{ appears as a chunk in the training data}}{\text{\# of times that } c \text{ appears as a substring in the training data}} \qquad (4.1)$$

to be the conditional probability of $c$ being a chunk when it appears in a word $s$.
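The ratio above can be computed with two counters. In this sketch the training data is assumed to be a list of chunkings, each given as a list of chunks, and every occurrence of a substring is counted; the three training words are made up for illustration.

```python
from collections import Counter

def chunk_probabilities(training):
    """Estimate P_chunk(c) for every string c that was a chunk at least once."""
    times_seen, times_chunked = Counter(), Counter()
    for chunks in training:
        word = "".join(chunks)
        # Count every occurrence of every substring of the word.
        for i in range(len(word)):
            for j in range(i + 1, len(word) + 1):
                times_seen[word[i:j]] += 1
        # Count the designated chunks.
        for chunk in chunks:
            times_chunked[chunk] += 1
    return {c: times_chunked[c] / times_seen[c] for c in times_chunked}

# Toy training set: "un" is a chunk in two words and a mere substring of "tune".
TRAINING = [["un", "do"], ["un", "tie"], ["tune"]]
p_chunk = chunk_probabilities(TRAINING)
```

Strings that never occur as a chunk are simply absent from the result, which corresponds to a probability of zero.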

Now consider the possible breakdown of a word $s$ into chunks $c_1, \ldots, c_k$, where each $c_i$ is a string of one or more letters and $c_1 \cdots c_k = s$. I assign a heuristic score to this breakdown of $s$ according to the equation

$$H_{chunk}(c_1, c_2, \ldots, c_k) = P_{chunk}(c_1) \cdots P_{chunk}(c_k). \qquad (4.2)$$

Although $H$ might appear to be a conditional probability function, this score does not actually reflect the probability of the given chunk breakdown, because the events are dependent on one another: if $c_1$ is indeed a chunk in $s$, it greatly increases the chance that $c_2$ is also a chunk in $s$, and so on. Regardless of the fact that it cannot be interpreted as an actual probability, this score attempts to predict how likely it is that $s$ is broken into the given chunks $c_1, c_2, \ldots, c_k$.

Algorithm 1 The probabilistic chunking algorithm which is used for syllabification. The function substr(s, i, j) is used to express the substring of a word s which starts at character i and has length j, and the function append(s, C) appends the string s to the sequence of strings defined by C.

    {Inputs a training set t[1], ..., t[n] and target word w.}
    for i = 1 to n do
        for each substring s of t[i] do
            timesSeen[s] ← timesSeen[s] + 1
        end for
        for each designated chunk c of t[i] do
            timesChunked[c] ← timesChunked[c] + 1
        end for
    end for
    bestScore[0] ← 1
    bestChunking[0] ← ()
    for i = 1 to length(w) do
        bestScore[i] ← 0
        bestChunking[i] ← ()
        for j = 0 to i - 1 do
            x ← substr(w, j + 1, i - j)
            if timesSeen[x] > 0 and bestScore[j] * timesChunked[x]/timesSeen[x] > bestScore[i] then
                bestScore[i] ← bestScore[j] * timesChunked[x]/timesSeen[x]
                bestChunking[i] ← append(x, bestChunking[j])
            end if
        end for
    end for
    {Returns bestChunking[length(w)] as the optimal chunking}

Given the definition of the heuristic score H, the process of automatically chunking a word is very simple. The algorithm simply determines the chunk breakdown which maximizes H and returns that as its guess for the correct chunking. This optimization can be performed quickly with dynamic programming, with a running time that is quadratic in the word length. Simplified pseudocode for the algorithm is shown in Algorithm 1, while the complete source code can be found in Appendix B.
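The dynamic program can also be written as a short runnable sketch. This is not the source code from Appendix B; it assumes the chunk probabilities have already been estimated and are passed in as a dictionary, and the probability values below are invented to illustrate the "uncanny" example.

```python
def best_chunking(word, p_chunk):
    """Return the chunking of `word` that maximizes the product of chunk probabilities."""
    n = len(word)
    best_score = [0.0] * (n + 1)          # best_score[i]: best score for word[:i]
    best_split = [[] for _ in range(n + 1)]
    best_score[0] = 1.0                   # the empty prefix has the empty chunking
    for i in range(1, n + 1):
        for j in range(i):
            piece = word[j:i]
            score = best_score[j] * p_chunk.get(piece, 0.0)
            if score > best_score[i]:
                best_score[i] = score
                best_split[i] = best_split[j] + [piece]
    return best_split[n], best_score[n]

# Hypothetical chunk probabilities.
P_CHUNK = {"un": 0.9, "can": 0.6, "ny": 0.8, "canny": 0.1,
           "unc": 0.05, "an": 0.3, "u": 0.01, "n": 0.01}

chunks, score = best_chunking("uncanny", P_CHUNK)
```

With these values "un-can-ny" scores 0.9 × 0.6 × 0.8 = 0.432 and beats alternatives such as "un-canny" (0.09) and "unc-an-ny" (0.012). A word that cannot be covered by known chunks receives an empty chunking with score 0.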

4.2.2 Methods

The probabilistic chunking algorithm was tested using syllables as chunking units. All words in the M Dictionary which were not proper nouns or acronyms were looked up on the Merriam-Webster website at http://www.m-w.com, and the syllable breakdown was recorded for all words for which the website returned a result. Out of 149,124 word roots in the M Dictionary, there were 63,702 which were not proper nouns or acronyms, and 49,247 of those words returned a result in Merriam-Webster.

The algorithm was tested by removing a test set of 1000 random words from the data set, training the algorithm by computing chunk probabilities on the remaining data, and then guessing the syllable breakdown of each word in the test set. Mistakes in syllabification were also qualitatively examined to determine ways in which the algorithm most often failed.
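The hold-out procedure can be sketched as follows. The function names, the fixed random seed, and the whole-word baseline used to exercise the harness are assumptions made for this example; in the actual experiment the probabilistic chunking algorithm takes the place of the baseline, and a guess is assumed here to count as correct only when it matches the reference syllabification exactly.

```python
import random

def holdout_accuracy(data, train, predict, test_size=1000, seed=0):
    """Hold out `test_size` random words, train on the rest, and score exact matches."""
    rng = random.Random(seed)
    shuffled = list(data)
    rng.shuffle(shuffled)
    test, training = shuffled[:test_size], shuffled[test_size:]
    model = train(training)
    correct = sum(predict(model, "".join(gold)) == gold for gold in test)
    return correct / len(test)

# Placeholder baseline: memorize training words, otherwise guess a single chunk.
def memorize(training):
    return {"".join(chunks): chunks for chunks in training}

def lookup(model, word):
    return model.get(word, [word])

# Made-up reference syllabifications.
DATA = [["cat"], ["dog"], ["sun"], ["ta", "ble"], ["win", "dow"], ["pa", "per"]]
accuracy = holdout_accuracy(DATA, memorize, lookup, test_size=6)
```

With all six toy words held out, the baseline is correct only on the three one-syllable words.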

4.2.3 Results and Discussion

The probabilistic chunking algorithm consistently achieved a success rate of 70%, plus or minus a 1% margin of error. This success rate is computed over a random sample of all words for which Merriam-Webster syllabifications were retrieved.

It is difficult to compare these results to existing methods, since there is no set of syllabification data which is commonly used for testing. In addition, the 70% observed success rate does not fully capture the true efficacy of the probabilistic chunking algorithm, for two reasons. First, because my testing samples all words with equal probability, real-world results might differ, since common words would be weighted more heavily in the success rate. Second, there is no assurance that the syllabifications provided by Merriam-Webster are correct, nor is there only one correct syllabification for many words, so some syllabifications produced by the algorithm are marked incorrect when they actually appear to the human eye to be correct.

The lack of concrete testing data leads to a necessity for qualitative analysis of the testing results. This analysis is very useful in understanding how the probabilistic chunking algorithm usually fails. A random assortment of some of the purported failures of the algorithm is shown in Table 4.1. With two of these tests, on "pollutant" and "desalination," the probabilistic chunking method comes up with a syllabification which subjectively appears more correct than the one which is stored in Merriam-Webster. The other three failures are near misses of the correct syllabification, and still provide useful information about how the individual words are broken down. In light of several such observations, I believe that the probabilistic chunking method is much more successful than its 70% success rate suggests.

Word           Probabilistic Chunking   Merriam-Webster
starved        starv-ed                 starved
pollutant      pol-lu-tant              pol-lut-ant
desalination   de-sal-i-na-tion         de-sa-li-na-tion
apparatus      ap-para-tus              ap-pa-ra-tus
libration      lib-ra-tion              li-bra-tion

Table 4.1: Sample syllabification results, produced by probabilistic chunking, which do not agree with the "correct" syllabification produced by Merriam-Webster. The Merriam-Webster syllabification does not always appear preferable to that of probabilistic chunking.

4.2.4 Extension beyond Syllables

The main reason for using the general concept of chunks, instead of restricting the algorithm to syllables, is that there is another unit of meaning inside words which has greater potential for unknown word guessing than syllables. This unit, called the morpheme, is defined in linguistics as the smallest meaningful unit of a language.

Consider an attempt by a computer to understand the word "Microsoft." This word is broken into syllables as "Mi-cro-soft," but a human would probably deduce meaning from the word using the units "micro" and "soft," which represent the morpheme breakdown of "Microsoft." In general, certain syllables such as "mi" do not provide very much information in language analysis, because they can appear in unrelated words like "mitigate" and "micron."

The predominant difficulty associated with morpheme analysis is a complete lack of publicly available training data. However, the promising results of probabilistic chunking suggest that it could easily train on a set of morpheme breakdowns and thereby utilize the component morphemes in a word to perform unknown word guessing.

Chapter 5

Unknown Word Guessing

In this chapter, I discuss how the syllabification of an unknown word can be used to guess information about its meaning. I first tackle the problem of POS-tagging to evaluate my syllabification approach within the context of current research. I also formulate a new problem which I call similar word guessing and explain how syllabification can be used to predict words which are similar to an unknown word.

5.1 Part-of-Speech Tagging

My approach of using syllables to deduce information about unknown words suggests a straightforward method for POS-tagging. The intuition is that some syllables have a high correlation with a certain part of speech, and that seeing such syllables in a word should therefore suggest to us the part of speech of a word. By scoring each possible (word, POS-tag) pair using a probabilistic heuristic, an intelligent guess as to the correct POS-tag can be formed by simply using the POS-tag which gives us the highest score for a given unknown word.

5.1.1 Algorithm Overview

The POS-tagging algorithm requires as input a training set containing words and their corresponding syllabifications, as well as a training set containing words and their corresponding POS-tags. These sets need not cover the same sets of words. The first training set is used to train the probabilistic chunking algorithm from Chapter 4 to automatically syllabify words. The chunking algorithm is then used on the second training set, producing a large set of words along with their corresponding syllabifications and POS-tags.

For every syllable s and POS-tag p, I then compute the conditional probability of a word having the POS-tag p given that its syllabification contains the syllable s at the location $\ell$ in the word (either the beginning, middle, or end). This produces a probability equation for each (syllable, POS-tag, position) triple, which is similar to the equation used in probabilistic chunking:

$$P_{p,\ell}(s) = \frac{\text{\# of times that syllable } s \text{ appears at location } \ell \text{ in a word with POS-tag } p}{\text{\# of times that syllable } s \text{ appears at location } \ell \text{ in a word}} \tag{5.1}$$

Note that while the corresponding location-independent heuristic is also a viable option in POS-tagging, in practice the information about where syllables occur in a word provides a significant improvement.
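As a rough illustration of how the location-dependent probabilities of Equation 5.1 could be tabulated, consider the sketch below. It is not the Appendix B implementation. Two choices are assumptions of the sketch: a one-syllable word is treated as having its only syllable at the beginning, and a word with several POS-tags contributes once to the numerator for each of its tags. The example words and their syllabifications are invented.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple


def positions(syllables: List[str]) -> List[Tuple[str, str]]:
    """Pair each syllable with 'beginning', 'middle', or 'end'."""
    last = len(syllables) - 1
    out = []
    for idx, s in enumerate(syllables):
        if idx == 0:
            loc = "beginning"  # assumption: also used for one-syllable words
        elif idx == last:
            loc = "end"
        else:
            loc = "middle"
        out.append((s, loc))
    return out


def train_pos_table(
    examples: Iterable[Tuple[List[str], Set[str]]]
) -> Tuple[Counter, Counter]:
    """Count (syllable, location, tag) and (syllable, location) occurrences."""
    with_tag: Counter = Counter()
    total: Counter = Counter()
    for syllables, tags in examples:
        for s, loc in positions(syllables):
            total[(s, loc)] += 1
            for p in tags:
                with_tag[(s, loc, p)] += 1
    return with_tag, total


def prob(with_tag: Counter, total: Counter, p: str, loc: str, s: str) -> float:
    """P_{p,loc}(s) as in Equation 5.1; 0.0 if s was never seen at loc."""
    denom = total[(s, loc)]
    return with_tag[(s, loc, p)] / denom if denom else 0.0


# Invented toy examples: (syllabification, set of POS-tags).
examples = [
    (["quick", "ly"], {"adverb"}),
    (["slow", "ly"], {"adverb"}),
    (["fam", "i", "ly"], {"noun"}),
]
with_tag, total = train_pos_table(examples)
print(prob(with_tag, total, "adverb", "end", "ly"))
```

In this toy set, "ly" ends three words, two of them adverbs, so the adverb probability at the end position is 2/3, while the same syllable at the beginning position has no evidence and yields 0.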

If the algorithm is presented with an unknown word w whose POS-tag it must guess, the first step is to run the probabilistic chunking algorithm to break the unknown word into syllables $s_1, \ldots, s_k$. A heuristic score is then computed for every possible POS-tag p in the dictionary according to the equation

$$H_{POS}(p, s_1, \ldots, s_k) = P_{p,\text{beginning}}(s_1)\, P_{p,\text{middle}}(s_2) \cdots P_{p,\text{middle}}(s_{k-1})\, P_{p,\text{end}}(s_k) \tag{5.2}$$

and the POS-tag which offers the highest heuristic score is chosen as the guess.

The complete source code for my POS-tagging algorithm can be found in Appendix B.
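The scoring step of Equation 5.2 can be sketched as a product over syllables followed by an argmax over tags. The code below is an illustrative sketch rather than the Appendix B source: the probability table is a plain dictionary with invented values, and a (tag, location, syllable) combination missing from the table is assumed to have probability 0.

```python
from typing import Dict, List, Sequence, Tuple

Key = Tuple[str, str, str]  # (POS-tag, location, syllable)


def h_pos(table: Dict[Key, float], p: str, syllables: List[str]) -> float:
    """Heuristic score of tag p: product of per-syllable probabilities."""
    score = 1.0
    last = len(syllables) - 1
    for idx, s in enumerate(syllables):
        if idx == 0:
            loc = "beginning"
        elif idx == last:
            loc = "end"
        else:
            loc = "middle"
        # assumption: combinations absent from the table score 0
        score *= table.get((p, loc, s), 0.0)
    return score


def guess_pos(table: Dict[Key, float], tags: Sequence[str],
              syllables: List[str]) -> str:
    """Return the tag with the highest heuristic score."""
    return max(tags, key=lambda p: h_pos(table, p, syllables))


# Hypothetical probabilities, invented for illustration only.
table = {
    ("adverb", "beginning", "glad"): 0.20,
    ("adjective", "beginning", "glad"): 0.70,
    ("adverb", "end", "ly"): 0.85,
    ("adjective", "end", "ly"): 0.08,
}
tags = ["noun", "adjective", "verb", "adverb"]
print(guess_pos(table, tags, ["glad", "ly"]))
```

Here the adverb score is 0.20 × 0.85 = 0.17 and the adjective score is 0.70 × 0.08 = 0.056, so the strong end-of-word evidence from "ly" outweighs the first syllable. Because the score is a bare product, a single zero factor eliminates a tag entirely.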

5.1.2 Methods

The input set of words and syllables which was used to train the syllabification portion of the algorithm consisted of the same training set used with the probabilistic chunking algorithm in Chapter 4. The input set of words and POS-tags which was used to train the algorithm consisted of all words in the M Dictionary whose POS-tag was either noun, adjective, verb, or adverb.

Although testing on a large corpus of text is the usual method for evaluating POS-tagging algorithms, this testing approach is neither necessary nor applicable in my situation. Since my POS-tagging algorithm does not use context, it will make the same guess as to an unknown word's POS-tag regardless of its context in a sentence. Also, the fact that my algorithm is designed to run on data files means that its success rate should not be weighted more heavily to words which appear commonly in text, which would be the case if training was performed on a corpus of raw text.

To give the broadest possible measure of the algorithm's success, it was tested on every word in the M Dictionary whose POS-tag was either noun, adjective, verb, or adverb. The results were broken down based on whether a word had more than one possible POS-tag or not. If a word has only one POS-tag, then the algorithm succeeds for that word if it correctly guesses this POS-tag. If a word has multiple senses which span more than one POS-tag, however, I define success as the algorithm's guess matching any one of the possible POS-tags for that word.
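The success criterion described above could be tallied as in the following sketch. The function name, the bucket labels, and the toy dictionary are hypothetical; the only rule taken from the text is that a guess counts as correct when it is among the word's possible POS-tags, with results reported separately for single-tag and multi-tag words.

```python
from typing import Callable, Dict, Set, Tuple


def evaluate(gold: Dict[str, Set[str]],
             guess: Callable[[str], str]) -> Dict[str, Tuple[int, int]]:
    """Return (hits, total) for single-tag and multi-tag words separately."""
    counts = {"single": [0, 0], "multiple": [0, 0]}
    for word, tags in gold.items():
        bucket = "single" if len(tags) == 1 else "multiple"
        counts[bucket][1] += 1
        if guess(word) in tags:  # any one of the word's tags is a success
            counts[bucket][0] += 1
    return {name: (hits, total) for name, (hits, total) in counts.items()}


# Invented toy dictionary and a trivial guesser that always says "noun".
gold = {
    "table": {"noun", "verb"},
    "quickly": {"adverb"},
    "house": {"noun", "verb"},
    "tree": {"noun"},
}
report = evaluate(gold, lambda word: "noun")
print(report)
```

Keeping the two buckets apart matters because the multi-tag criterion is more lenient: a word with two acceptable tags is easier to get right than a word with one.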

5.1.3 Results and Discussion

Out of 51,236 words whose senses all share the same POS-tag, the algorithm successfully guessed the POS-tag for 45,123 of them, yielding a success rate of 89.3%. Out of 6,185 words whose senses cover more than one POS-tag, the algorithm successfully guessed one of these POS-tags for 5,995 words, giving a success rate of 97.0%.

I used further analysis to generate a set of syllables which are strongly connected to a certain POS-tag. The data set used for POS-tag training consisted of 56% nouns, 22% adjectives, 18% verbs, and 4% adverbs. Therefore the words in which an "average" syllable occurs should closely follow this same distribution. If the words in which a syllable occurs deviate significantly from this distribution, then that syllable is important in signaling the POS-tag for an unknown word. Such a deviation can be detected by calculating the Pearson chi-square coefficient over the POS-tag distributions for each syllable.

Syllable   P_noun   P_adjective   P_verb   P_adverb   Chi-square coefficient
ly         0.054    0.083         0.012    0.851      61132
ably       0.000    0.000         0.000    0.157      3688
cal        0.165    0.505         0.044    0.286      2732
ize        0.004    0.000         0.996    0.000      2560
ic         0.179    0.804         0.012    0.005      2355

Table 5.1: The five syllables whose chi-square coefficient indicates that they are the most valuable in determining a word's POS-tag. The correlation of the syllable "ly" with adverbs is well-known by most English speakers, but observations such as the correlation of "ize" with verbs can easily be overlooked until my algorithm points them out.
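One way to compute such a deviation is the standard Pearson chi-square statistic, comparing the POS-tag counts of the words containing a syllable against the counts expected under the overall 56/22/18/4 distribution. The sketch below makes that assumption explicit; the exact formulation used to produce Table 5.1 (for instance, whether counts are location-specific) is not stated here, and the example counts are invented.

```python
from typing import Dict

# Overall POS-tag shares of the training data, as reported in the text.
BASELINE = {"noun": 0.56, "adjective": 0.22, "verb": 0.18, "adverb": 0.04}


def chi_square(observed: Dict[str, int],
               baseline: Dict[str, float] = BASELINE) -> float:
    """Pearson chi-square of observed tag counts against baseline shares."""
    n = sum(observed.values())
    total = 0.0
    for tag, share in baseline.items():
        expected = n * share  # count expected if the syllable were "average"
        total += (observed.get(tag, 0) - expected) ** 2 / expected
    return total


# Hypothetical counts for two syllables, each occurring in 100 words.
typical = {"noun": 56, "adjective": 22, "verb": 18, "adverb": 4}
skewed = {"noun": 5, "adjective": 8, "verb": 1, "adverb": 86}
print(round(chi_square(typical), 3), round(chi_square(skewed), 3))
```

A syllable whose words mirror the baseline scores near zero, while the adverb-heavy example scores about 1752, dominated by the adverb term (86 − 4)² / 4 = 1681. Because the statistic grows with the number of words, frequent syllables such as "ly" can reach very large coefficients.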

Table 5.1 shows the five most important syllables detected by the algorithm, in decreasing order of significance, along with their distribution of POS-tags. Note that these syllables are not specifically an output of the algorithm, but are rather highlighted examples of the internal representations of the algorithm. These syllables are demonstrative of what English speakers already know: that words containing "ly" and "ably" are most often adverbs, that words containing "cal" and "ic" are most often adjectives, and that "ize" is almost always found in verbs. The fact that these syllables are most commonly found at the ends of words strengthens observations in prior research that prefixes and suffixes are the most important parts of a word in terms of determining its POS-tag.

While it is tested on different data than the POS-tagger formulated by Mikheev, my algorithm shows promising results in the area of POS-tagging. Unlike Mikheev's algorithm, my POS-tagger does not require complicated rules and iterative processes for discovering and merging rules. The POS-tag of a word is instead guessed using a simple and elegant heuristic which uses powerful probabilistic principles to predict POS-tags. My algorithm also has the valuable side effect of finding syllables whose presence in a word is a strong indicator of a certain POS-tag, a tool which could prove valuable for future NLP problems which arise in the M Language and elsewhere.

5.2 Similar Word Guessing

I propose the problem of similar word guessing as a new standard to be sought after in the area of unknown word guessing. In similar word guessing, a computer is given an unknown word and must predict which other words it should be related to in a semantic network.

5.2.1 Problem Overview

While this problem is far more difficult than POS-tagging, it is necessary for automatic knowledge acquisition and also has many applications in the M Language. I envision even a moderately successful similar word guessing technique as a way for the M Language to assist and automate the translation of data into the M Language. For instance, a machine which could successfully perform similar word guessing would see the word "Microsoft" and predict that it is related to computers and microelectronics, or would see the word "Google" and predict that it has something to do with looking for information. Providing such suggestions to users facilitates the word creation process, even if incorrect relations are sometimes found.

The more distant goal in similar word guessing is for the machine to determine the exact relations for these unknown words, so that "Microsoft" is recognized as a "type-of" computer company and "Google" is "slang-for" searching. If such a level of functionality were achieved, then it would have far-reaching effects in machine intelligence, but reliably performing such a classification would probably require a machine to have a huge amount of textual data at its disposal, from encyclopedias to novels to websites. However, I begin the investigation of this problem using only the M Dictionary, in hopes that the use of a semantic network sheds light on promising approaches to the similar word guessing problem.

5.2.2 Algorithm Overview

I devised a simplistic algorithm as a proof-of-concept of my syllable-based approach on the problem of similar word guessing. The algorithm requires as input a training