Hidden Markov Models, Bayesian Networks Stat 430



Outline

• Definition of HMM

• Set-up of 3 main problems

• Three Main algorithms:

• Forward/Backward

• Viterbi

• Baum-Welch

Hidden Markov Models (HMM)

• Idea

each state of a Markov chain emits a letter from a fixed alphabet

distribution of letters is time independent, but depends on state

• Situation

usually we have a string of emitted symbols, but don’t know the Markov chain (it’s hidden)

Application Areas

• Pattern recognition

• Search algorithms

• Sequence alignments

• Time series analysis

Setup

Markov State Diagram

with transition probabilities A

emission probabilities B

Emitted Sequence Y

Usually, we only observe Y (several instances of it)

Example

• Suppose we have five amino acid sequences

CAEFTPAVH

CKETTPADH

CAETPDDH

CAEFDDH

CDAEFPDDH

• find best possible alignment of all sequences

(allowing insertions and deletions)

Run of an HMM

• 2-step process:

initial -> emission -> transition -> emission -> transition -> ...

q_1 -> O_1 -> q_2 -> O_2 -> q_3 -> ...

• Sequence of visited states Q = q_1 q_2 q_3 ...

• Sequence of emitted symbols O = O_1 O_2 O_3 ...

• usually we can observe O, but don’t know Q
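The emission/transition loop can be sketched as a small sampler. This is only an illustration, not part of the original slides: the function name `sample_hmm` is hypothetical, the transition and emission numbers are the ones from the two-state example in this deck, and the uniform initial distribution is an assumption because the slides do not give one.

```python
import random

def sample_hmm(pi, P, B, alphabet, T, rng=None):
    """Run an HMM for T steps and return (visited states, emitted symbols)."""
    rng = rng or random.Random()
    n = len(pi)
    states, symbols = [], []
    # initial step: draw q_1 from the initial distribution
    q = rng.choices(range(n), weights=pi)[0]
    for _ in range(T):
        # emission: the current state emits one symbol
        o = rng.choices(alphabet, weights=B[q])[0]
        states.append(q)
        symbols.append(o)
        # transition: move to the next state
        q = rng.choices(range(n), weights=P[q])[0]
    return states, symbols

pi = [0.5, 0.5]                    # ASSUMPTION: uniform start, not in the slides
P = [[0.9, 0.1], [0.8, 0.2]]       # transition probabilities (S1, S2)
B = [[0.5, 0.5], [0.25, 0.75]]     # emission probabilities for (a, b)

Q, O = sample_hmm(pi, P, B, ["a", "b"], T=10, rng=random.Random(0))
print("hidden  Q:", Q)             # state indices: 0 = S1, 1 = S2
print("visible O:", "".join(O))
```

In practice only `O` would be available; `Q` is printed here just to show what stays hidden.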

Example

• Markov Chain with two states, S1 and S2, and transition matrix P

• Emission alphabet {a, b}

• In S1 emission probabilities for a and b are 0.5 and 0.5

• In S2 emission probabilities for a and b are 0.25 and 0.75

• Observed sequence is bbb

[Figure: state diagram for S1 and S2 with the transition probabilities tabulated below]

S1 S2

S1 0.9 0.1

S2 0.8 0.2

transitions

Example

a b

S1 0.5 0.5

S2 0.25 0.75

emissions

• Observed sequence is bbb

• What is the most likely sequence Q that

emitted bbb?

argmax_Q P(Q|O)

• What is the probability to observe O?

P(O) = ∑_Q P(O|Q) P(Q)
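For a sequence as short as bbb, both questions can be answered by listing all 2^3 state sequences. The sketch below does that. It assumes a uniform initial distribution over S1 and S2, which the slides do not specify, so the numbers change if a different start is used. Since P(O) does not depend on Q, maximizing P(Q|O) is the same as maximizing the joint P(O, Q).

```python
from itertools import product

pi = [0.5, 0.5]                    # ASSUMPTION: uniform start, not in the slides
P = [[0.9, 0.1], [0.8, 0.2]]       # transitions between S1 (index 0) and S2 (index 1)
B = [{"a": 0.5, "b": 0.5},         # emissions in S1
     {"a": 0.25, "b": 0.75}]       # emissions in S2
O = "bbb"

def joint(Q, O):
    """P(O, Q) = P(O|Q) P(Q) for one state sequence Q."""
    p = pi[Q[0]] * B[Q[0]][O[0]]
    for t in range(1, len(O)):
        p *= P[Q[t - 1]][Q[t]] * B[Q[t]][O[t]]
    return p

paths = list(product(range(2), repeat=len(O)))   # all 8 state sequences
p_O = sum(joint(Q, O) for Q in paths)            # P(O) = sum_Q P(O|Q) P(Q)
best = max(paths, key=lambda Q: joint(Q, O))     # argmax_Q P(Q|O)

print("P(O) =", p_O)
print("most likely Q:", ["S%d" % (q + 1) for q in best])
```

Under the assumed uniform start this gives P(O) ≈ 0.179 and the path S2 S1 S1. The enumeration grows as N^T, which is why it does not scale beyond toy examples.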

Definition

A hidden Markov model (HMM) consists of

• set of states S_1, S_2, ..., S_N

• the transition matrix P with

p_ij = P(q_{t+1} = S_j | q_t = S_i)

• an alphabet of M unique, observed symbols

A = {a_1, ..., a_M}

• emission probabilities

b_i(a) = P(state S_i emits a)

• initial distribution π_i = P(q_1 = S_i)

Three Main Problems

• Find P(O)

computational problem: the naive solution sums over all N^T state sequences and is intractable

forward-backward algorithm

• Find the sequence of states that most likely produced observed output O:

argmax_Q P(Q|O)

Viterbi algorithm

• for fixed topology find P, B and π that maximize probability to observe O

Baum-Welch algorithm

Forward/Backward

• given all parameters (P, B, π) find P(O)

• naive approach is computationally too intensive

• Use the help of forward variables α and backward variables β

• α(t,i) = P(o_1 o_2 … o_t, q_t = S_i)

• then P(O) = ∑_i α(T,i)

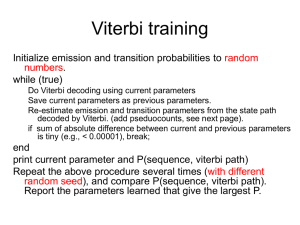
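A minimal sketch of both recursions on the two-state example with observed sequence bbb. The uniform initial distribution is an assumption (the slides do not state one), and β is taken in its usual form β(t,i) = P(o_{t+1} … o_T | q_t = S_i), which the slides mention but do not define. No scaling is applied, so this version is only suitable for short sequences.

```python
pi = [0.5, 0.5]                    # ASSUMPTION: uniform start, not in the slides
P = [[0.9, 0.1], [0.8, 0.2]]       # transition matrix
B = [{"a": 0.5, "b": 0.5},         # emissions in S1
     {"a": 0.25, "b": 0.75}]       # emissions in S2

def forward(O, pi, P, B):
    """alpha[t][i] = P(o_1 ... o_t, q_t = S_i), with t counted from 0."""
    n = len(pi)
    alpha = [[pi[i] * B[i][O[0]] for i in range(n)]]
    for t in range(1, len(O)):
        prev = alpha[-1]
        alpha.append([
            sum(prev[j] * P[j][i] for j in range(n)) * B[i][O[t]]
            for i in range(n)
        ])
    return alpha

def backward(O, P, B):
    """beta[t][i] = P(o_{t+1} ... o_T | q_t = S_i), with t counted from 0."""
    n = len(P)
    beta = [[1.0] * n]
    for t in range(len(O) - 2, -1, -1):
        nxt = beta[0]
        beta.insert(0, [
            sum(P[i][j] * B[j][O[t + 1]] * nxt[j] for j in range(n))
            for i in range(n)
        ])
    return beta

O = "bbb"
alpha = forward(O, pi, P, B)
beta = backward(O, P, B)
p_O = sum(alpha[-1])                                   # P(O) = sum_i alpha(T, i)
p_O_check = sum(pi[i] * B[i][O[0]] * beta[0][i] for i in range(len(pi)))
print(p_O, p_O_check)                                  # the two should agree
```

Each step costs on the order of N^2 operations, so the whole pass is roughly N^2 T instead of the N^T of full enumeration.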

Viterbi

• compute argmax_Q P(Q | O)

• two-step algorithm:

• maximize probability first,

• then recover structure Q
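The two steps can be sketched as follows: a forward pass that keeps, for every state, the probability of the best path ending there plus a back-pointer, and a backward pass that follows the pointers to recover Q. The example reuses the two-state chain with observed sequence bbb. The uniform initial distribution is an assumption, and the names `delta` and `back` are illustrative choices, not notation from the slides.

```python
pi = [0.5, 0.5]                    # ASSUMPTION: uniform start, not in the slides
P = [[0.9, 0.1], [0.8, 0.2]]       # transition matrix
B = [{"a": 0.5, "b": 0.5},         # emissions in S1
     {"a": 0.25, "b": 0.75}]       # emissions in S2

def viterbi(O, pi, P, B):
    """Return (most likely state path, joint probability of that path and O)."""
    n = len(pi)
    # step 1: maximize probability, remembering the best predecessor
    delta = [pi[i] * B[i][O[0]] for i in range(n)]
    back = []
    for t in range(1, len(O)):
        ptr, new = [], []
        for i in range(n):
            j = max(range(n), key=lambda k: delta[k] * P[k][i])
            ptr.append(j)
            new.append(delta[j] * P[j][i] * B[i][O[t]])
        back.append(ptr)
        delta = new
    # step 2: recover Q by following the back-pointers from the best end state
    q = max(range(n), key=lambda i: delta[i])
    best_p = delta[q]
    path = [q]
    for ptr in reversed(back):
        q = ptr[q]
        path.append(q)
    path.reverse()
    return path, best_p

path, best_p = viterbi("bbb", pi, P, B)
print(["S%d" % (q + 1) for q in path], best_p)
```

With the assumed uniform start the recovered path is S2 S1 S1. For long sequences the products underflow, so a practical version would work with log probabilities.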

Baum-Welch

CAEFTPAVH, CKETTPADH, CAETPDDH, CAEFDDH, CDAEFPDDH
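Baum-Welch re-estimates P, B and π from observed sequences by alternating between computing expected state and transition counts under the current parameters and renormalizing those counts. The sketch below is a toy illustration under several assumptions: it uses a two-state model over {a, b} rather than the amino acid sequences above, the training strings in `seqs` are made up, the starting values are arbitrary, and no scaling is applied, so it only suits short sequences. It also assumes every sequence has length at least 2 and every state keeps positive probability.

```python
def forward(O, pi, P, B):
    n = len(pi)
    a = [[pi[i] * B[i][O[0]] for i in range(n)]]
    for t in range(1, len(O)):
        prev = a[-1]
        a.append([sum(prev[j] * P[j][i] for j in range(n)) * B[i][O[t]]
                  for i in range(n)])
    return a

def backward(O, P, B):
    n = len(P)
    b = [[1.0] * n]
    for t in range(len(O) - 2, -1, -1):
        nxt = b[0]
        b.insert(0, [sum(P[i][j] * B[j][O[t + 1]] * nxt[j] for j in range(n))
                     for i in range(n)])
    return b

def likelihood(seqs, pi, P, B):
    p = 1.0
    for O in seqs:
        p *= sum(forward(O, pi, P, B)[-1])
    return p

def baum_welch_step(seqs, pi, P, B, alphabet):
    """One re-estimation step: expected counts, then renormalize."""
    n = len(pi)
    start = [0.0] * n
    trans = [[0.0] * n for _ in range(n)]
    emit = [{s: 0.0 for s in alphabet} for _ in range(n)]
    for O in seqs:
        a, b = forward(O, pi, P, B), backward(O, P, B)
        p_O = sum(a[-1])
        for t in range(len(O)):
            for i in range(n):
                gamma = a[t][i] * b[t][i] / p_O          # P(q_t = S_i | O)
                emit[i][O[t]] += gamma
                if t == 0:
                    start[i] += gamma
                if t + 1 < len(O):
                    for j in range(n):
                        # P(q_t = S_i, q_{t+1} = S_j | O)
                        trans[i][j] += (a[t][i] * P[i][j] * B[j][O[t + 1]]
                                        * b[t + 1][j] / p_O)
    new_pi = [x / len(seqs) for x in start]
    new_P = [[x / sum(row) for x in row] for row in trans]
    new_B = [{s: c / sum(e.values()) for s, c in e.items()} for e in emit]
    return new_pi, new_P, new_B

seqs = ["bbb", "abb", "bab", "bba", "aab"]       # made-up toy data
pi = [0.5, 0.5]                                  # arbitrary starting values
P = [[0.9, 0.1], [0.8, 0.2]]
B = [{"a": 0.5, "b": 0.5}, {"a": 0.25, "b": 0.75}]

history = [likelihood(seqs, pi, P, B)]
for _ in range(10):
    pi, P, B = baum_welch_step(seqs, pi, P, B, "ab")
    history.append(likelihood(seqs, pi, P, B))
print(history[0], "->", history[-1])             # likelihood should not decrease
```

The procedure only finds a local maximum, so different starting values can lead to different estimates.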

HMM for Amino Acid/Gene Sequences

[Figure: HMM state diagram with match states m0 ... m4, insert states i0 ... i3, and delete states d1 ... d3]


Example

• CAEFDDH most likely produced by

m0 m1 m2 m3 m4 d5 d6 m7 m8 m9 m10

• CDAEFPDDH most likely produced by

m0 m1 i1 m2 m3 m4 d5 m6 m7 m8 m9 m10

• Yields alignment

C-AEF--DDH

CDAEFP-DDH

R packages

• HMM

• RHmm

• HiddenMarkov

• msm

• depmix, depmixS4

• flexmix

Bayesian Networks

• HMMs are a special case of Bayesian Nets

• A Bayesian Network is a directed acyclic graph, where

nodes represent variables

edges describe conditional relationships

Setup

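[Figure: directed acyclic graph on the nodes X1, X2, X3, X4, X5]

• A missing edge between two nodes encodes a conditional independence: given its parents, each node is independent of its non-descendants

• Edges imply parent/child relationship:

• X1 has children X2, X3, X5

• X5 has parents X1, X2

• P(X_1, ..., X_p) = ∏_i P(X_i | parents(X_i))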

Example

• Given that the grass is wet, what is the

probability that it is raining?
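A question of this kind can be answered by multiplying the conditional tables along the graph and summing out the unobserved nodes. The sketch below is purely illustrative: neither the network structure nor any probabilities appear in the slides, so both are assumptions. It uses a commonly seen three-node version (Rain -> Sprinkler, Rain -> Wet, Sprinkler -> Wet) with made-up numbers.

```python
from itertools import product

# ASSUMPTION: structure and all numbers below are hypothetical.
p_rain = {True: 0.2, False: 0.8}
p_sprinkler = {True: {True: 0.01, False: 0.99},     # P(sprinkler | rain)
               False: {True: 0.4, False: 0.6}}      # P(sprinkler | no rain)
p_wet = {(True, True): 0.99, (True, False): 0.9,    # P(wet | sprinkler, rain)
         (False, True): 0.8, (False, False): 0.0}   # keyed by (sprinkler, rain)

def joint(r, s, w):
    """P(R=r, S=s, W=w) as the product of P(node | parents)."""
    pw = p_wet[(s, r)]
    return p_rain[r] * p_sprinkler[r][s] * (pw if w else 1 - pw)

# P(rain | wet) = P(rain, wet) / P(wet), summing out the sprinkler
num = sum(joint(True, s, True) for s in (True, False))
den = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
p_rain_given_wet = num / den
print("P(rain | wet grass) =", p_rain_given_wet)
```

With these assumed tables the answer is about 0.36. Full enumeration is only feasible for small networks.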

R packages

• deal

• MASTINO