
advertisement

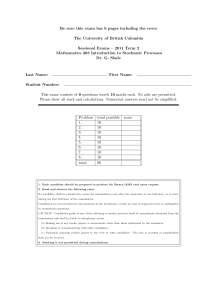

MA22S6 Assignment 8 - Markov processes

Mike Peardon (mjp@maths.tcd.ie)

March 23, 2012

Answer all three parts. Each part is worth seven marks. Hand the homework in by the end of the last tutorial (30th April).

Question 1

A machine is either “working” or “broken”. In one day, there is a 1% chance the working machine will break. When broken, the machine is under repair and there is a 10% chance the repair will complete in a day, allowing the machine to go back to the “working” state tomorrow. Write a Markov matrix describing these dynamics and find:

1. The probability the machine will be working in three days' time, given it is working today.
2. The probability the machine will be working in three days' time, given it is broken today.
3. The fraction of days the machine is working on average.

Ordering the states as (working, broken), so that each column holds the transition probabilities out of one state, the Markov matrix would be

$$M = \begin{pmatrix} 0.99 & 0.10 \\ 0.01 & 0.90 \end{pmatrix}$$

For parts 1 and 2,

$$M^3 = \begin{pmatrix} \frac{973179}{1000000} & \frac{268210}{1000000} \\[4pt] \frac{26821}{1000000} & \frac{731790}{1000000} \end{pmatrix}$$

So the probabilities for the states in three days' time can be read off from the top row of this matrix:

$$P(\psi(3) = \chi_W \mid \psi(0) = \chi_W) = \frac{973179}{1000000} \approx 97.3\%$$

and

$$P(\psi(3) = \chi_W \mid \psi(0) = \chi_B) = \frac{268210}{1000000} \approx 26.8\%$$

For part 3, the stationary state is found by using $w^T = (1 \;\; x)$ as a trial solution for $Mw = w$. The first row gives $0.99 + 0.10\,x = 1$, so $x = 0.1$, and normalising gives the equilibrium

$$\pi^T = \begin{pmatrix} \frac{10}{11} & \frac{1}{11} \end{pmatrix}$$

The machine is therefore working on a fraction $10/11 \approx 90.9\%$ of days on average.

Question 2

A system can be in any one of three states, A, B or C. When it is in state A it jumps to state B in one time-unit with probability 0.8. When it is in state B it jumps to state C with probability 0.5 and when in state C it jumps to state A with probability 0.2. Find the long-time average probabilities of the system being in any of the three states.

The Markov matrix is

$$M = \begin{pmatrix} \frac{1}{5} & 0 & \frac{1}{5} \\[4pt] \frac{4}{5} & \frac{1}{2} & 0 \\[4pt] 0 & \frac{1}{2} & \frac{4}{5} \end{pmatrix}$$

Using $w^T = (1 \;\; x \;\; y)$ as a guess for the solution to $Mw = w$ gives

$$\frac{1}{5} + \frac{1}{5}\,y = 1$$

$$\frac{4}{5} + \frac{1}{2}\,x = x$$

with solution $x = 8/5$, $y = 4$. The components of $w$ sum to $33/5$, which gives the fixed point as:

$$\pi^T = \begin{pmatrix} \frac{5}{33} & \frac{8}{33} & \frac{20}{33} \end{pmatrix}$$

Question 3

Consider two bowls containing six numbered balls between them.
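A die is rolled and the corresponding ball is moved from one bowl to the other. Explain why this can be considered as a Markov process. If the 7 states of the system are labelled by the number of balls in the first bowl ($k = 0 \dots 6$), write the Markov matrix for this system and show

$$\pi = \left( \frac{1}{64}, \frac{6}{64}, \frac{15}{64}, \frac{20}{64}, \frac{15}{64}, \frac{6}{64}, \frac{1}{64} \right)$$

is the equilibrium distribution.

This is a Markov process because the probability of making a transition from one state to another depends only on the two states, not on how the system came to be in a particular state.

The Markov matrix would be

$$M = \begin{pmatrix}
0 & \frac{1}{6} & 0 & 0 & 0 & 0 & 0 \\[2pt]
1 & 0 & \frac{2}{6} & 0 & 0 & 0 & 0 \\[2pt]
0 & \frac{5}{6} & 0 & \frac{3}{6} & 0 & 0 & 0 \\[2pt]
0 & 0 & \frac{4}{6} & 0 & \frac{4}{6} & 0 & 0 \\[2pt]
0 & 0 & 0 & \frac{3}{6} & 0 & \frac{5}{6} & 0 \\[2pt]
0 & 0 & 0 & 0 & \frac{2}{6} & 0 & 1 \\[2pt]
0 & 0 & 0 & 0 & 0 & \frac{1}{6} & 0
\end{pmatrix}$$

Now writing a trial eigenvector for the fixed point $Mw = w$ as $w^T = (1, x_1, x_2, x_3, x_4, x_5, x_6)$ gives the equations:

$$\begin{aligned}
\frac{1}{6}\,x_1 &= 1 \\
1 + \frac{2}{6}\,x_2 &= x_1 \\
\frac{5}{6}\,x_1 + \frac{3}{6}\,x_3 &= x_2 \\
\frac{4}{6}\,x_2 + \frac{4}{6}\,x_4 &= x_3 \\
\frac{3}{6}\,x_3 + \frac{5}{6}\,x_5 &= x_4 \\
\frac{2}{6}\,x_4 + x_6 &= x_5
\end{aligned}$$

which we can solve in turn to give the eigenvector: $x_1 = 6$, $x_2 = 15$, $x_3 = 20$, $x_4 = 15$, $x_5 = 6$, $x_6 = 1$. The last row of $M$, $\frac{1}{6}\,x_5 = x_6$, is satisfied by these values. The components of $w^T = (1, 6, 15, 20, 15, 6, 1)$ sum to 64, so normalising gives the equilibrium distribution $\pi$ stated in the question.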
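The answers to all three questions can be checked with exact rational arithmetic. What follows is a minimal sketch rather than part of the original solutions: the helper names (`mat_mul`, `mat_pow`, `mat_vec`, `ehrenfest_matrix`) are illustrative assumptions. The matrices follow the column convention used in the solutions, where the entry in row i, column j is the probability of moving from state j to state i.

```python
from fractions import Fraction as F


def mat_mul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(m, p):
    """Raise a square matrix to a non-negative integer power."""
    n = len(m)
    result = [[F(int(i == j)) for j in range(n)] for i in range(n)]
    for _ in range(p):
        result = mat_mul(result, m)
    return result


def mat_vec(m, v):
    """Apply a matrix to a column vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def ehrenfest_matrix(n):
    """Column-stochastic matrix for n balls shared between two bowls.

    State k is the number of balls in the first bowl. From state k the
    chain moves to k - 1 with probability k/n and to k + 1 otherwise.
    """
    m = [[F(0)] * (n + 1) for _ in range(n + 1)]
    for k in range(n + 1):
        if k > 0:
            m[k - 1][k] = F(k, n)
        if k < n:
            m[k + 1][k] = F(n - k, n)
    return m


# Question 1: states ordered (working, broken).
M1 = [[F(99, 100), F(10, 100)],
      [F(1, 100), F(90, 100)]]
M1_cubed = mat_pow(M1, 3)
pi1 = [F(10, 11), F(1, 11)]

# Question 2: states ordered (A, B, C).
M2 = [[F(1, 5), F(0), F(1, 5)],
      [F(4, 5), F(1, 2), F(0)],
      [F(0), F(1, 2), F(4, 5)]]
pi2 = [F(5, 33), F(8, 33), F(20, 33)]

# Question 3: six balls, states k = 0..6.
M3 = ehrenfest_matrix(6)
pi3 = [F(c, 64) for c in (1, 6, 15, 20, 15, 6, 1)]

print("P(working in 3 days | working today) =", float(M1_cubed[0][0]))
print("P(working in 3 days | broken today)  =", float(M1_cubed[0][1]))
for name, m, pi in (("Q1", M1, pi1), ("Q2", M2, pi2), ("Q3", M3, pi3)):
    print(name, "fixed point holds:", mat_vec(m, pi) == pi)
```

Using `Fraction` instead of floating point means the fixed-point test `M pi == pi` is an exact equality rather than a comparison within a tolerance.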