Outline Hidden Markov Models, Metropolis Hastings •

advertisement

Hidden Markov Models,

Metropolis Hastings

Stat 430

Outline

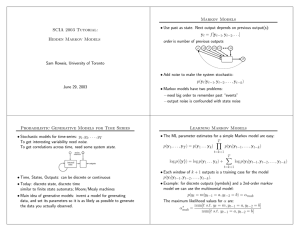

• Definition of HMM

• Set-up of 3 main problems

• Three Main algorithms:

• Forward/Backward

• Viterbi

• Baum-Welch

Definition

A hidden Markov model (HMM) consists of

• set of states S , S , ..., S

• the transition matrix P with

1

2

N

pij = P(qt+1 = Sj| qt=Si)

• an alphabet of M unique, observed symbols

A = {a1, ..., aM}

• emission probabilities

bi(a) = P(state Si emits a)

• initial distribution π = P(q

i

1

= Si )

Three Main Problems

•

Find P(O)

computational problem: naive solution is intractable

foward-backward algorithm

•

Find the sequence of states that most likely

produced observed output O:

argmaxQ = P(Q|O)

Viterbi algorithm

•

for fixed topology find P, B and π that maximize

probability to observe O

Baum-Welch algorithm

CAEFTPAVH, CKETTPADH, CAETPDDH, CAEFDDH, CDAEFPDDH

HMM for Amino Acid/Gene

Sequences

d1

d2

d3

i0

i1

i2

i3

m0

m1

m2

m3

CAEFTPAVH, CKETTPADH, CAETPDDH, CAEFDDH, CDAEFPDDH

Example

•

CAEFDDH most likely produced by

m0 m1 m2 m3 m4 d5 d6 m7 m8 m9 m10

•

CDAEFPDDH most likely produced by

m0 m1 i1 m2 m3 m4 d5 m6 m7 m8 m9 m10

•

Yields alignment

C -AEF - -DDH

CDAEFP -DDH

m4

R packages

• HMM

• RHmm

• HiddenMarkov

• msm

• depmix, depmixS4

• flexmix

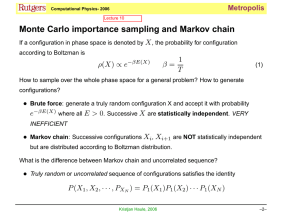

Metropolis Hastings

Algorithm

• Motivation:

we want to sample from distribution F

• Previously we did that with Acceptance/

Rejection sampling, with independent

samples.

• Now: Generate correlated random variables

instead of independent ones.

• Gives us improved efficiency

Extension to Markov

Chains

• Allow state space to be infinite (all of R):

• X , X , … ,X X is sequence of

0

1

t,

t+1, …

dependent variables, such that distribution

of Xt+1 only depends on value of Xt.

Markov Kernel K(Xt, Xt+1)

• e.g. :

random walk: Xt+1 = Xt+ ε, with ε ~ N(0,1)

Continuous Markov

Chains

• Small variation of random walk:

Xt+1 = rXt+ ε, with ε ~ N(0,1) with r < 1 and

X0 ~ N(0,1)

has large t distribution N(0, 1/(1-r2))

• Ergodic Markov Chains (irreducible, aperiodic

and positive recurrent) have a stationary

distribution, i.e.:

limT 1/T ∑t h(Xt) = E(h(X))

Metropolis Hastings

•

•

target density f

•

algorithm:

conditional density q

(easy to simulate, e.g. uniform)

•

given xt

•

•

generate yt ~ q(y|xt)

xt+1 = yt with probability α and

xt+1 = xt with probability 1 - α

where

α = min(1, f(yt)/f(xt) * q(xt|yt)/q(yt|xt))

Metropolis Hastings

• q is called proposal or instrumental

distribution

•

α is acceptance probability

Example: inverse χ2

• inverse χ

2 density:

f(x) = x(-k/2)exp(-a/(2x))

• for k = 5, a = 4:

0.14

0.12

f(x, k, a)

0.10

0.08

0.06

0.04

0.02

5

x

10

15

20

3500

3000

count

2500

Example

2000

1500

100

1000

500

0

40

60

met

80

100

40

60

20

20

met[1:1000]

80

0

0

• Top:

0

200

400

600

800

1000

Index

proposal distribution uniform U[0,100],

long stretches of same random number:

chain is poorly mixed

1200

1000

count

400

200

0

2

4

met

6

8

10

6

4

met[1:500]

8

10

0

2

Bottom: proposal

distribution is χ2

with df=1

600

0

•

800

0

100

200

300

Index

400

500

Metropolis Hastings

•

Very general algorithm:

simulate distribution f from any other distribution q

•

•

xt are not independent (because of the repetitions)

•

pick proposal distribution based on sd:

large sd -> large jumps, poor acceptance

too small sd -> small jumps, high autocorrelation

sample chain’s quality dependent on proposal

distribution

Gibbs Sampling

• Goal: target distribution

F(Y1,Y2, ...,Yk)

• Algorithm:

•

•

Given xt = (x1, x2, ..., xk)

X1t+1 ~ f(x1| x2t, ..., xkt)

X2t+1 ~ f(x2| x1t+1, x3t, ..., xkt)

...

Xkt+1 ~ f(xk| x1t+1, ..., xk-1t+1)