e-Business Threshold Management for Proactive Problem Determination Anca Sailer Soila Pertet

advertisement

e-Business Threshold Management for Proactive Problem Determination

Anca Sailer and Gautam Kar, IBM T. J . Watson Research Center

Soila Pertet and Priya Narasimhan, Carnegie Mellon University

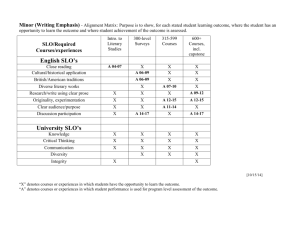

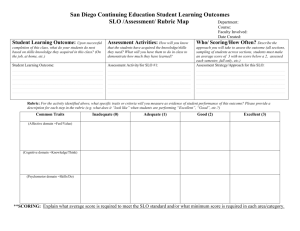

Motivation

Other (20%)

Hardware / OS

Network

Power and

Disaster

Proactive Fault-Tolerance (CMU)

Determine if we can exploit performance

monitoring to identify pre-fault symptoms and

initiate proactive (rather than reactive) actions

for faster fault-recovery

Traditionally, problem determination is

related to the state of components at the

system level (e.g., CPU, memory)

Application (40%)

Untested

Change

Management

Overloaded

Human Error (40%)

Forgot something

Lack of procedures

Backup

errors/Security

If problems manifest at the transaction

level, the management service has no

knowledge on the cause of the problem

Causes of site failure

Source: Gartner Group - 2001

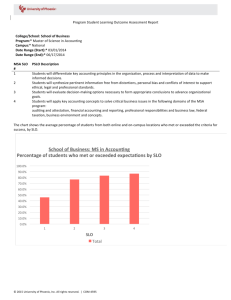

Pertet and Narasimhan developed a proactive fault-recovery

strategy for distributed middleware systems in the presence of

resource-exhaustion faults.

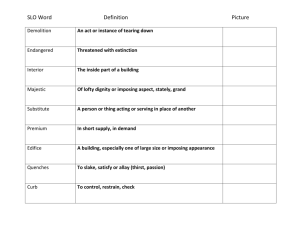

Autonomic Problem Determination (IBM)

Proactive fault-recovery architecture

HTTP1 WAS1

DB1

WAN

M

M

M

HTTP2 WAS2

WAN

DB2

M

1. Data

Collector

M

M

!

HTTP1

Local

Monitor

Agent

Storage

MQ

M

WAS2

DB2

2. Data Storage

Dependencies

T-SLO notification

b. Fault

notification

Middleware

Middleware

Replicator

Group Comm

Group Comm

M

M

Local SQL

c. Fault

root cause

t

1a

t

t

2a

3a

+t

t

3b

SQL START

Monitoring architecture for e- commerce system

t

2b

3. Migrate client connections away

from failing server replica on receipt

of proactive fault notification

Time

1b

Recovery Latencies

SQL END

Servlet START

SLO = Service Level Objective

Interface to Group

Communication

2. Detect resource exhaustion and

initiate proactive fault recovery

Point-to-point TCP

connection

Local HTTP

3. Data Analysis

Performance Models

System

State

1. Transparently modify behavior of

function calls associated with TCP

middleware communication

Host OS

Host OS

Local SERVLET

Problem

Determination

Recovery

Handler

Replicator

M

M

Management

Service

at

ed

Server

Storage

SLO Monitor

M

a. SLO Violation

Interface to CORBA

(modified system calls)

Proactive FT Manager

DB1

MQ

M

M

Host OS

Client

WAS1

HTTP2

Group Comm

Re

pl

ic

We build on work done by Sailer et al that pinpoints the root cause of

performance degradation in multi-tier distributed systems

Se

rv

er

Recovery

Manager

Servlet END

HTTP request

HTTP request

START

END

Determine time locally spent at component

Problem Determination Steps

1. Monitor transaction-related resource dependencies and

resource behavior performance models

Proactive fault-recovery significantly reduces recovery latencies in 2tier middleware application [IEEE DSN 2004]

2. Use monitored data to decompose transaction-SLOs

(T-SLOs) into component-SLOs (c-SLO)

Idea: Increase the benefit of proactive faulttolerance through topology awareness

3. Localize the root-cause of problem based on the degree to

which components violate their constructed c-SLO

A. Decompose Transaction-SLO into

Component-SLO

B. Detect c-SLO Violations and

Localize Root Cause

T1

Use knowledge of application dependencies to curb error

propagation in multi-tier distributed systems

Q1

New Collaborative Approach

S1

Q2

SLO VIOLATION

NO SLO VIOLATIONS

1. Read response time RT for component C

2. Lookup graph to create T, set {T1, T2} of

transactions that depend on C

M

TLocal (C ) = RT(C ) -

s

∑ ## Occurrence

Occurrences

k =1

k

c

× RTk

T2

S2

(i.e., if any transaction of T exceeds SLO)

Q3

1. If (TLocal > c-SLO) Then

bad_avg(C) = αbad_avg(C) + (1-α)TLocal

bad_N(C) = bad_N(C) + 1

2. If (bad_N(C) > β) Then

Compute severity(C) = bad_avg(C)/ c-SLO

P(C) = T Local ( C ) / RT End - to - end

3. Sorts nodes by severity value

c - SLO = T_SLO* avgP(C)

4. When problem is diagnosed

Reset bad_N(C) = bad_avg(C) = 0

How do we detect SLO violations based on metrics other

than response time? (e.g., resource usage, throughput violations)

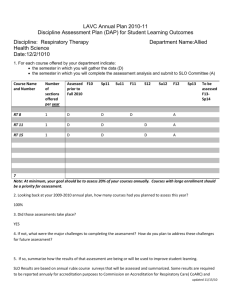

Experimental Observations

Precision: Non-Instrumented Code

100

80

Detection f aulty

servlets

60

%

Detection f aulty

tables

40

Detection mixed

problems

20

0

50

100

Research Question: What is the minimal level

of system monitoring required to proactively

diagnose performance problems in transactionoriented distributed applications?

How much monitoring and instrumentation is required to

effectively detect SLO violations of various kinds?

Should we monitor everything or get partial data and extrapolate values

through statistical inference or machine learning?

What granularity of monitoring is most appropriate to detect

SLO violations for different metrics?

150

load [# custom ers ]

Published in the Ninth IFIP/IEEE International Symposium on Integrated Network Management 2005

The c-SLO does not need to be recomputed if the system operates

within the linear part of the response time curve

Precision of problem determination in instrumented code≈100%

Does looking at more metrics provide higher accuracy in

problem determination, and potentially more focused

recovery?

Can system monitoring help us identify patterns of abnormal

behavior to enable proactive fault-tolerance?

Precision of non-instrumented code ≈ 50% -100%

IBM Academy Conference - April 26 - April 27, 2005 {IBM T. J. Watson, Research Center Yorktown Heights, NY}