Modeling episodic memory: beyond simple reinforcement learning
Zachary Varberg, Adam Johnson


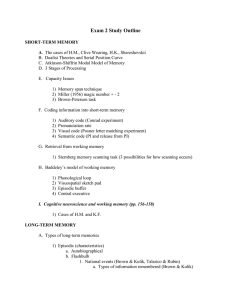

Project outline
Problem:
– Episodic memory allows humans and (possibly) animals to imagine both the past and the future. These cognitive processes are poorly understood, both in terms of how they work and of exactly what they contribute to behavior.
Method:
– Reinforcement learning (RL) provides a flexible and robust theoretical framework for studying interactions between memory processes and behavior.
– Can we adapt current RL models to understand episodic memory in humans and animals more concretely?

Reinforcement learning
Basics
– Markov decision processes (MDPs): states, actions, transitions, and rewards.
– Goal: learn a policy, a mapping from states to actions, that maximizes reward receipt (minimizes cost).

Reinforcement learning
Defining value as the total (discounted) reward expected from all future states,
$V(s_t) = \mathbb{E}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k}\right], \quad 0 \le \gamma \le 1,$
leads to a useful recursive form that can be used to discover an optimal policy:
$V(s) = \max_{a}\left[R(s,a) + \gamma \sum_{s'} T(s,a,s')\, V(s')\right].$
So, given the transition model $T$, the agent can make inferences about the future and learn more quickly.

Reinforcement learning
When the transition model $T$ is unknown, we can use model-free RL to learn $V$.
– Given an observed transition $(s_t, a_t, r_t, s_{t+1})$, we can assume that each transition is a sample from the transition model.
– An accurate estimate of the value function implies that, on average, $V(s_t) = r_t + \gamma V(s_{t+1})$.
– If the equality doesn't hold, we can revise the estimated value function at each time step by $V(s_t) \leftarrow V(s_t) + \alpha\left[r_t + \gamma V(s_{t+1}) - V(s_t)\right]$, where $\alpha$ is a learning rate.

A simple example
– 15x15 grid
– Random start
– Goal at (9,5)

Reinforcement learning
Method: Q-learning
– Step 1: Initialization
  – Q matrix (225x4: 225 grid states by 4 actions)
  – Start and goal states
  – Transitions
– Step 2: Action selection (various methods)
– Step 3: Take the action
– Step 4: Receive reward and update the Q value
– Repeat steps 2–4 until termination

Basic behavior
Starting at point (1,15), the shortest path to the goal is 18 steps.
[Figure: steps per trial]
Red is the path taken on the 10th trial (415 steps). Blue is the path taken on the 2500th trial (32 steps).
[Figure: reward per trial]

Episodic memory
A brief history
– Episodic memory is memory for episodes.
– Tulving (1984) defined EM as memory for What, Where, and When (WWW memory).
– This definition has since been revised, more stringently, to mental time travel, in which a subject can travel back to a previous event and re-experience it as though they were there.
– At the simplest level, episodic memory can be thought of as richly detailed sequence memory.

Computational desiderata
Current RL models of episodic memory
– Zilli and Hasselmo have constructed a variety of RL models that incorporate what they call episodic memory.
– These models are severely limited: they can solve only very simple tasks, and for many other tasks they are computationally intractable.

The fallibility of episodic memory
– Loftus and Palmer (1974) showed that memory is highly susceptible to suggestion.
– More recent results from Maguire and Schacter suggest that episodic memories are constructed to facilitate planning future actions.

A breaking point in the project
Summary
– We implemented a variety of RL models of episodic memory (e.g. supplemented MDPs and POMDPs).
– We were unsatisfied with how each of these existing RL models treated episodic memory.
An adequate model of episodic memory must:
– learn quickly
– flexibly generalize learning across a variety of situations
– somehow address the construction of future episodes

A new problem…
How do animals sort out which aspects of a task are important or valuable?
– What is the state (and what are the transition models)?
– Failing to find the appropriate state-space makes most tasks insoluble.
– Inferring the appropriate state-space allows humans and animals to learn very quickly.
– If similar states are used for inference, humans and animals can quickly generalize reward experiences.
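When the transition model T is known, the recursive form of the value function can be solved directly by value iteration. The sketch below applies it to the poster's 15x15 grid example under assumptions that are ours, not the poster's: deterministic moves in four compass directions, walls that leave the agent in place, a reward of -1 per step (so maximizing reward minimizes path length), no discounting, and the goal at (9,5) as an absorbing state. The names `step`, `value_iteration`, and `MOVES` are hypothetical.

```python
import numpy as np

SIZE = 15                                   # 15x15 grid, as in the poster's example
GOAL = (9, 5)                               # goal cell, 1-indexed (x, y)
MOVES = [(0, 1), (0, -1), (1, 0), (-1, 0)]  # hypothetical action set: 4 compass moves


def step(state, move):
    """Assumed deterministic transition model T: bumping a wall keeps the agent in place."""
    x = min(max(state[0] + move[0], 1), SIZE)
    y = min(max(state[1] + move[1], 1), SIZE)
    return (x, y)


def value_iteration(gamma=1.0, tol=1e-9):
    """Solve V(s) = max_a [R(s,a) + gamma * V(T(s,a))] with an assumed R = -1 per step."""
    V = np.zeros((SIZE + 1, SIZE + 1))      # row/column 0 unused so cells stay 1-indexed
    while True:
        delta = 0.0
        for x in range(1, SIZE + 1):
            for y in range(1, SIZE + 1):
                if (x, y) == GOAL:
                    continue                # absorbing goal keeps V = 0
                best = max(-1.0 + gamma * V[step((x, y), m)] for m in MOVES)
                delta = max(delta, abs(best - V[x, y]))
                V[x, y] = best              # in-place (Gauss-Seidel) update
        if delta < tol:
            return V


V = value_iteration()
print("V(1,15) =", V[1, 15])
```

Under these assumptions V(s) is minus the number of steps to the goal, so V(1,15) should equal -18, matching the 18-step minimum quoted for the start point (1,15).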
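The Q-learning steps listed on the poster (initialize a 225x4 Q matrix, select an action, take it, receive reward and update, repeat until termination) can be sketched for the same 15x15 grid. This is a minimal illustration, not the authors' implementation: the reward of -1 per step, ε-greedy action selection (one of the "various methods"), α = 0.5, γ = 1, ε = 0.1, and the move set are all assumptions, and `train`, `q_update`, and `greedy_path` are hypothetical names.

```python
import numpy as np

SIZE = 15                                   # 15x15 grid -> 225 states
GOAL = (9, 5)                               # goal cell (x, y), 1-indexed
MOVES = [(0, 1), (0, -1), (1, 0), (-1, 0)]  # assumed action set: 4 compass moves


def to_index(cell):
    """Map a 1-indexed (x, y) cell to a row of the 225x4 Q matrix."""
    return (cell[0] - 1) * SIZE + (cell[1] - 1)


def move(cell, a):
    """Assumed deterministic transitions; bumping a wall leaves the agent in place."""
    dx, dy = MOVES[a]
    return (min(max(cell[0] + dx, 1), SIZE), min(max(cell[1] + dy, 1), SIZE))


def q_update(Q, s, a, r, s_next, terminal, alpha, gamma):
    """Step 4: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    target = r if terminal else r + gamma * Q[s_next].max()
    Q[s, a] += alpha * (target - Q[s, a])


def train(episodes=2500, alpha=0.5, gamma=1.0, epsilon=0.1, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((SIZE * SIZE, len(MOVES)))  # Step 1: 225x4 Q matrix
    steps_per_trial = []
    for _ in range(episodes):
        # Random start cell, as in the poster's example
        cell = (int(rng.integers(1, SIZE + 1)), int(rng.integers(1, SIZE + 1)))
        steps = 0
        while cell != GOAL:
            s = to_index(cell)
            # Step 2: epsilon-greedy action selection (an assumed choice)
            if rng.random() < epsilon:
                a = int(rng.integers(len(MOVES)))
            else:
                a = int(Q[s].argmax())
            nxt = move(cell, a)                                  # Step 3: take the action
            q_update(Q, s, a, -1.0, to_index(nxt), nxt == GOAL,  # Step 4: reward + update
                     alpha, gamma)
            cell, steps = nxt, steps + 1
        steps_per_trial.append(steps)
    return Q, steps_per_trial


def greedy_path(Q, start, max_steps=500):
    """Follow argmax_a Q(s, a) from start; stop at the goal or after max_steps moves."""
    path = [start]
    while path[-1] != GOAL and len(path) <= max_steps:
        path.append(move(path[-1], int(Q[to_index(path[-1])].argmax())))
    return path


Q, steps_per_trial = train()
path = greedy_path(Q, (1, 15))
print("greedy path length from (1,15):", len(path) - 1)
```

`steps_per_trial` plays the role of the poster's steps-per-trial curve; the exact numbers will differ from the reported 415 and 32 steps because the parameters here are assumed.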
Making sense of data
Dimensionality reduction
– We used the radial arm maze task to produce massive data sets and applied a variety of non-linear dimensionality reduction methods to explore how state information might be embedded within the task.
Roweis et al. (2000) Science

Data reduction for memories
Modeled data from the radial arm maze task
– We find specific clustering for the training paradigm used by Zhou and Crystal (2009) for circadian rhythm parameters.
– This suggests that rats flexibly use data reduction to construct policies and solve the task.

Conclusions
Project:
– We're closing in on a general reinforcement learning method that integrates data reduction techniques with standard RL methods.
– We still haven't built a full algorithm.
– The approach provides a satisfactory treatment of episodic memory and makes a clear, computationally specific set of predictions that can be compared with human and animal behavior.
Acknowledgements:
– Paul Schrater (collaborator)
– A. David Redish and Matt van der Meer
Questions?

Making sense of data
Eigen-analysis
– The principal axes of a given data set can be obtained by identifying the eigenvectors and associated eigenvalues of a transformation A.
– If A is the covariance matrix of the data, its eigenvectors are the principal components.

Episodic memory
Data reduction, construction and cognition
– Learning can occur most quickly by reducing the data to a few very simple dimensions.
– Certain tasks require more complex state-spaces and will necessitate the use of more eigenvectors.
– Adding eigenvectors (e.g. the 2nd and 3rd principal components) allows construction of a richer state-space.
– The construction process reflects the primary aspects of experience but is not verbatim recall of exact experience.

Episodic memory in animals
A radial arm maze task
– Chocolate during second helpings is available in the same arm only during the mornings.
– Rats show optimal behavior, searching for chocolate only when it's available, if a particular training paradigm is used. The animals do not display optimal behavior with other training paradigms.
Zhou and Crystal (2009) PNAS

Model-free RL and the brain
Schultz, Dayan and Montague (1997) Science
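The model-free update described on the poster can be written, assuming a discount γ and a learning rate α, as V(s_t) ← V(s_t) + α[r_t + γV(s_{t+1}) − V(s_t)]; the bracketed term is the temporal-difference (reward prediction) error that Schultz, Dayan and Montague (1997) compared with dopamine neuron responses. The minimal sketch below runs this rule on a hypothetical five-state chain from a cue to a reward. The chain, α = 0.2, γ = 1, and the assumption that the cue itself arrives unpredictably (pre-cue value 0) are illustrative choices, and `td0_chain` is a hypothetical name.

```python
import numpy as np


def td0_chain(n_states=5, episodes=200, alpha=0.2, gamma=1.0):
    """TD(0) on a hypothetical cue -> ... -> reward chain.

    States 0..n_states-1 follow the cue; a reward of 1 arrives on the last
    transition, after which the episode ends (terminal value fixed at 0).
    Returns the learned values and the TD errors of the first and last episodes.
    """
    V = np.zeros(n_states + 1)                   # V[n_states] is the terminal state
    history = []
    for _ in range(episodes):
        deltas = []
        for t in range(n_states):
            r = 1.0 if t == n_states - 1 else 0.0
            delta = r + gamma * V[t + 1] - V[t]  # TD (reward prediction) error
            V[t] += alpha * delta                # V(s_t) <- V(s_t) + alpha * delta
            deltas.append(delta)
        history.append(deltas)
    return V[:n_states], history[0], history[-1]


values, first, last = td0_chain()
# Assuming the cue arrives unpredictably (pre-cue value 0) and gamma = 1,
# the TD error at cue onset is 1.0 * V(s_0) - 0.
cue_error = 1.0 * values[0]
print("TD errors, first trial:", np.round(first, 3))
print("TD errors, last trial: ", np.round(last, 3))
print("TD error at cue onset after learning:", round(float(cue_error), 3))
```

On the first trial the only nonzero error occurs at the unpredicted reward; after learning, the error at reward time vanishes and a positive error appears at cue onset instead, which is the qualitative pattern the model-free account attributes to dopamine.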
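The eigen-analysis panel (principal axes from the eigenvectors of a transformation A, principal components when A is the covariance) and the idea that adding eigenvectors constructs a richer state-space can be illustrated with a minimal NumPy sketch. The data are synthetic (three latent dimensions embedded in ten observed ones, plus noise), an assumption for illustration only and not the radial arm maze data; `principal_components` and `reconstruct` are hypothetical names. Reconstruction from the first k components is an approximation of the data, not a verbatim copy, until all components are used.

```python
import numpy as np


def principal_components(X):
    """Eigen-decompose the covariance of X (rows = observations).

    Returns eigenvalues in descending order and the matching eigenvectors
    as columns, i.e. the principal axes of the data.
    """
    Xc = X - X.mean(axis=0)
    C = np.cov(Xc, rowvar=False)        # covariance matrix plays the role of A
    evals, evecs = np.linalg.eigh(C)    # eigh: C is symmetric
    order = np.argsort(evals)[::-1]     # largest variance first
    return evals[order], evecs[:, order]


def reconstruct(X, evecs, k):
    """Project onto the first k principal components and map back."""
    mean = X.mean(axis=0)
    W = evecs[:, :k]
    return (X - mean) @ W @ W.T + mean


rng = np.random.default_rng(0)
# Synthetic data: 3 latent dimensions embedded in 10 observed ones, plus noise.
latent = rng.normal(size=(500, 3)) * np.array([5.0, 2.0, 1.0])
X = latent @ rng.normal(size=(3, 10)) + 0.1 * rng.normal(size=(500, 10))

evals, evecs = principal_components(X)
errors = [np.mean((X - reconstruct(X, evecs, k)) ** 2) for k in range(1, 11)]
for k, err in enumerate(errors, start=1):
    print(f"{k} components: mean squared reconstruction error {err:.4f}")
```

The error falls as components are added and is essentially zero once all ten are used; with this synthetic data, the first three components already capture almost all of the variance.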